I’ve met a lot of amazing, uplifting people over the years. My PhD advisor who helped get me through graduate school. My father who was always there for me as a kid — and still is now. And my girlfriend who has always been positive, helpful, and supportive (even when I probably didn’t deserve it).

I’ve also met some demoralizing, discouraging ones. Family members who have gone out of their way to deter me from being an entrepreneur and working for myself. Colleagues who either disliked me or my work and chose to express their disdain in a public fashion. And then there are those who have said some pretty disheartening things over email, Twitter, and other internet outlets.

We’re all familiar with these types of people. Yet regardless of their demeanor (whether positive or negative), we’re all built from the same genetic material of four nucleobases: cytosine, guanine, adenine, and thymine.

These base pairs are combined in such a way that our bodies all have the same basic structure regardless of gender, race, or ethnicity. At the most structural level we all have a head, two arms, a torso, and two legs.

We can use computer vision to exploit this semi-rigid structure and extract features to quantify the human body. These features can be passed on to machine learning models that, once trained, can detect and track humans in images and video streams. This is especially useful for the task of pedestrian detection, which is the topic we’ll be talking about in today’s blog post.

Read on to find out how you can use OpenCV and Python to perform pedestrian detection.

Pedestrian Detection OpenCV

Did you know that OpenCV has built-in methods to perform pedestrian detection?

OpenCV ships with a pre-trained HOG + Linear SVM model that can be used to perform pedestrian detection in both images and video streams. If you’re not familiar with the Histogram of Oriented Gradients and Linear SVM method, I suggest you read this blog post where I discuss the 6-step framework.

If you’re already familiar with the process (or if you just want to see some code on how pedestrian detection with OpenCV is done), just open up a new file, name it detect.py, and we’ll get coding:

```python
# import the necessary packages
from __future__ import print_function
from imutils.object_detection import non_max_suppression
from imutils import paths
import numpy as np
import argparse
import imutils
import cv2

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--images", required=True, help="path to images directory")
args = vars(ap.parse_args())

# initialize the HOG descriptor/person detector
hog = cv2.HOGDescriptor()
hog.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
```

Lines 2-8 start by importing our necessary packages. We’ll import print_function to ensure our code is compatible with both Python 2.7 and Python 3 (this code will also work for OpenCV 2.4.X and OpenCV 3). From there, we’ll import the non_max_suppression function from my imutils package.

If you do not have imutils installed, let pip install it for you:

$ pip install imutils

If you do have imutils installed, you’ll need to upgrade to v0.3.1 or later, which includes the implementation of the non_max_suppression function, along with a few other minor updates:

$ pip install --upgrade imutils

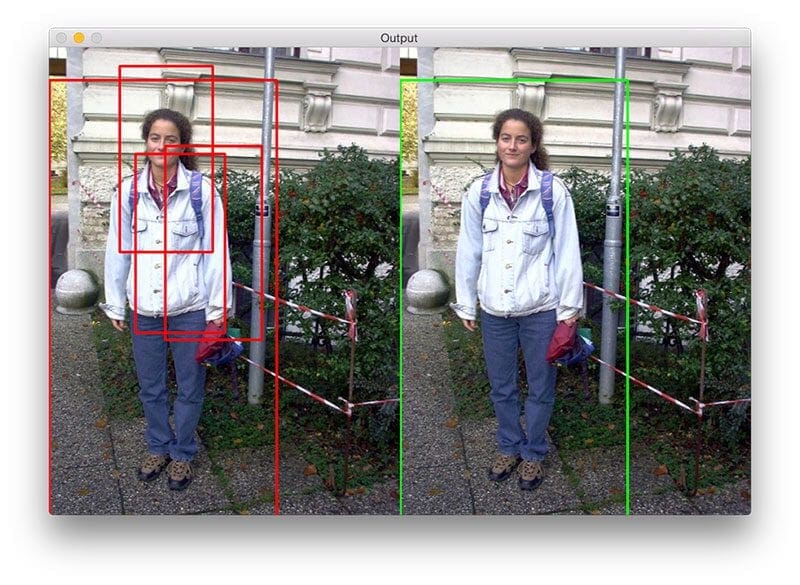

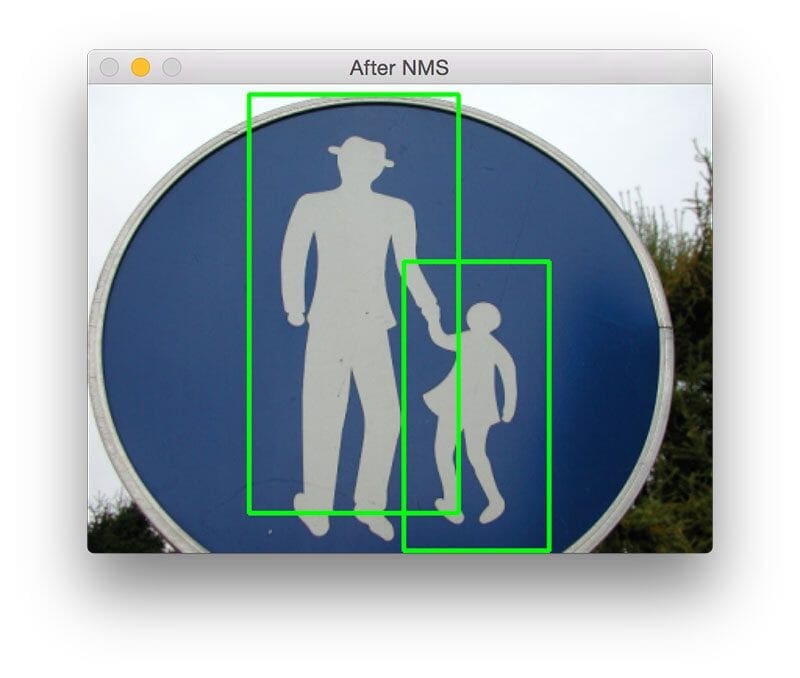

I’ve talked about non-maxima suppression twice on the PyImageSearch blog: once in this introductory post, and again in this post on implementing a faster NMS algorithm. In either case, the gist of the non-maxima suppression algorithm is to take multiple, overlapping bounding boxes and reduce them to only a single bounding box.

This helps reduce the number of false positives reported by the final object detector.
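To make the idea concrete, here is a minimal pure-Python sketch of greedy non-maxima suppression. It is not the imutils implementation (which is vectorized with NumPy); the function name simple_nms and the sample boxes are made up for illustration, and boxes are assumed to be (x1, y1, x2, y2) corner coordinates.

```python
def simple_nms(boxes, overlap_thresh=0.65):
    """Greedy NMS sketch over (x1, y1, x2, y2) boxes (illustrative only)."""
    # visit boxes from the largest bottom-right y-coordinate down
    remaining = sorted(boxes, key=lambda b: b[3])
    picked = []

    while remaining:
        # keep the box with the largest y2, then test the rest against it
        current = remaining.pop()
        picked.append(current)
        survivors = []

        for box in remaining:
            # width and height of the intersection rectangle (0 if disjoint)
            inter_w = max(0, min(current[2], box[2]) - max(current[0], box[0]) + 1)
            inter_h = max(0, min(current[3], box[3]) - max(current[1], box[1]) + 1)
            box_area = (box[2] - box[0] + 1) * (box[3] - box[1] + 1)

            # drop the box if too much of it is covered by the kept box
            if float(inter_w * inter_h) / box_area <= overlap_thresh:
                survivors.append(box)

        remaining = survivors

    return picked

# two near-duplicate detections of one person, plus a separate person
boxes = [(10, 10, 110, 210), (12, 14, 112, 214), (300, 50, 380, 210)]
kept = simple_nms(boxes)
print("{} boxes in, {} boxes out".format(len(boxes), len(kept)))
```

The two near-duplicate boxes collapse into one, while the box that does not overlap anything is left alone.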

Lines 11-13 handle parsing our command line arguments. We only need a single switch here, --images, which is the path to the directory that contains the list of images we are going to perform pedestrian detection on.
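If you want to see what the parsed arguments look like without touching the command line, one option is to hand parse_args an explicit argument list. This is just a sketch for experimenting; the directory name images is a placeholder.

```python
import argparse

ap = argparse.ArgumentParser()
ap.add_argument("-i", "--images", required=True,
    help="path to images directory")

# passing a list simulates running: python detect.py --images images
args = vars(ap.parse_args(["--images", "images"]))
print(args["images"])
```

Because of the vars call, args is a plain dictionary keyed by the long switch name.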

Finally, Lines 16 and 17 initialize our pedestrian detector. First, we make a call to hog = cv2.HOGDescriptor() which initializes the Histogram of Oriented Gradients descriptor. Then, we call the setSVMDetector method to set the Support Vector Machine to be the pre-trained pedestrian detector, loaded via the cv2.HOGDescriptor_getDefaultPeopleDetector() function.

At this point our OpenCV pedestrian detector is fully loaded; we just need to apply it to some images:

```python
# loop over the image paths
for imagePath in paths.list_images(args["images"]):
    # load the image and resize it to (1) reduce detection time
    # and (2) improve detection accuracy
    image = cv2.imread(imagePath)
    image = imutils.resize(image, width=min(400, image.shape[1]))
    orig = image.copy()

    # detect people in the image
    (rects, weights) = hog.detectMultiScale(image, winStride=(4, 4),
        padding=(8, 8), scale=1.05)

    # draw the original bounding boxes
    for (x, y, w, h) in rects:
        cv2.rectangle(orig, (x, y), (x + w, y + h), (0, 0, 255), 2)

    # apply non-maxima suppression to the bounding boxes using a
    # fairly large overlap threshold to try to maintain overlapping
    # boxes that are still people
    rects = np.array([[x, y, x + w, y + h] for (x, y, w, h) in rects])
    pick = non_max_suppression(rects, probs=None, overlapThresh=0.65)

    # draw the final bounding boxes
    for (xA, yA, xB, yB) in pick:
        cv2.rectangle(image, (xA, yA), (xB, yB), (0, 255, 0), 2)

    # show some information on the number of bounding boxes
    filename = imagePath[imagePath.rfind("/") + 1:]
    print("[INFO] {}: {} original boxes, {} after suppression".format(
        filename, len(rects), len(pick)))

    # show the output images
    cv2.imshow("Before NMS", orig)
    cv2.imshow("After NMS", image)
    cv2.waitKey(0)
```

On Line 20 we start looping over the images in our --images directory. The examples in this blog post (and the additional images included in the source code download of this article) are samples from the popular INRIA Person Dataset (specifically, from the GRAZ-01 subset).

From there, Lines 23-25 handle loading our image off disk and resizing it to have a maximum width of 400 pixels. The reason we attempt to reduce our image dimensions is two-fold:

- Reducing image size ensures that fewer sliding windows in the image pyramid need to be evaluated (i.e., have HOG features extracted from and then passed on to the Linear SVM), thus reducing detection time (and increasing overall detection throughput).

- Resizing our image also improves the overall accuracy of our pedestrian detection (i.e., fewer false positives).
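As a rough, back-of-the-envelope illustration of the first point, the sketch below counts how many 64 x 128 windows fit in a single pyramid layer at a (4, 4) stride. It ignores padding and every pyramid layer other than the first, and the 1280 x 960 and 400 x 300 image sizes are made-up examples rather than sizes from the dataset.

```python
def count_windows(img_w, img_h, win_w=64, win_h=128, stride=4):
    """Count sliding-window positions in one layer (no padding)."""
    # the window does not fit at all
    if img_w < win_w or img_h < win_h:
        return 0

    # number of positions along each axis
    across = (img_w - win_w) // stride + 1
    down = (img_h - win_h) // stride + 1
    return across * down

full = count_windows(1280, 960)   # hypothetical full-size image
small = count_windows(400, 300)   # same image resized to width 400
print("full-size: {} windows, resized: {} windows".format(full, small))
```

Even in this simplified model, the resized image needs well over an order of magnitude fewer window evaluations in its first layer.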

Actually detecting pedestrians in images is handled by Lines 28 and 29 by making a call to the detectMultiScale method of the hog descriptor. The detectMultiScale method constructs an image pyramid with scale=1.05 and a sliding window step size of (4, 4) pixels in the x and y directions, respectively.

The size of the sliding window is fixed at 64 x 128 pixels, as suggested by the seminal Dalal and Triggs paper, Histograms of Oriented Gradients for Human Detection. The detectMultiScale function returns a 2-tuple of rects, or the bounding box (x, y)-coordinates of each person in the image, and weights, the confidence value returned by the SVM for each detection.
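For a sense of how much data each 64 x 128 window produces, the length of its HOG feature vector can be worked out by hand. The sketch below assumes the usual Dalal and Triggs layout (8 x 8 pixel cells, 16 x 16 pixel blocks, an 8 pixel block stride, and 9 orientation bins). To my knowledge these match the defaults of cv2.HOGDescriptor(), but treat them as assumptions if your descriptor is configured differently.

```python
def hog_vector_length(win=(64, 128), block=(16, 16), block_stride=(8, 8),
        cell=(8, 8), nbins=9):
    """Length of the HOG feature vector for a single detection window."""
    # number of overlapping block positions along each axis
    blocks_x = (win[0] - block[0]) // block_stride[0] + 1
    blocks_y = (win[1] - block[1]) // block_stride[1] + 1

    # each block holds a grid of cells, each cell one histogram
    cells_per_block = (block[0] // cell[0]) * (block[1] // cell[1])
    return blocks_x * blocks_y * cells_per_block * nbins

length = hog_vector_length()
print("HOG feature vector length: {}".format(length))
```

Under these assumptions there are 7 x 15 block positions, each contributing 4 cells of 9 bins, which is the feature vector the Linear SVM scores for every window.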

A larger scale size will evaluate fewer layers in the image pyramid, which can make the algorithm faster to run. However, having too large a scale (i.e., fewer layers in the image pyramid) can lead to pedestrians not being detected. Similarly, having too small a scale size dramatically increases the number of image pyramid layers that need to be evaluated. Not only can this be computationally wasteful, it can also dramatically increase the number of false positives detected by the pedestrian detector. That said, the scale is one of the most important parameters to tune when performing pedestrian detection. I’ll perform a more thorough review of each of the parameters to detectMultiScale in a future blog post.
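To get a feel for how the scale affects the amount of work, the sketch below counts pyramid layers by repeatedly shrinking the image by the scale factor until the 64 x 128 window no longer fits. This is a simplified model, not OpenCV's exact internal logic, and the 400 x 300 image size is an assumed example.

```python
def count_pyramid_layers(img_w, img_h, scale, win_w=64, win_h=128):
    """Count layers until the detection window no longer fits."""
    layers = 0
    w, h = float(img_w), float(img_h)

    # each layer shrinks both dimensions by the scale factor
    while w >= win_w and h >= win_h:
        layers += 1
        w /= scale
        h /= scale

    return layers

layer_counts = {}
for scale in (1.01, 1.05, 1.5):
    layer_counts[scale] = count_pyramid_layers(400, 300, scale)
    print("scale={}: {} layers".format(scale, layer_counts[scale]))
```

The layer count grows quickly as the scale approaches 1.0, which is where both the extra computation and the extra opportunities for false positives come from.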

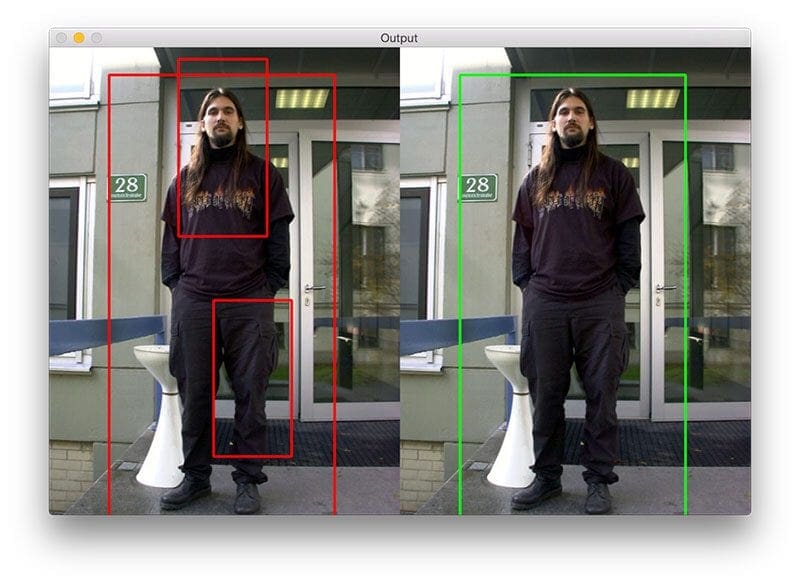

Lines 32 and 33 take our initial bounding boxes and draw them on our image.

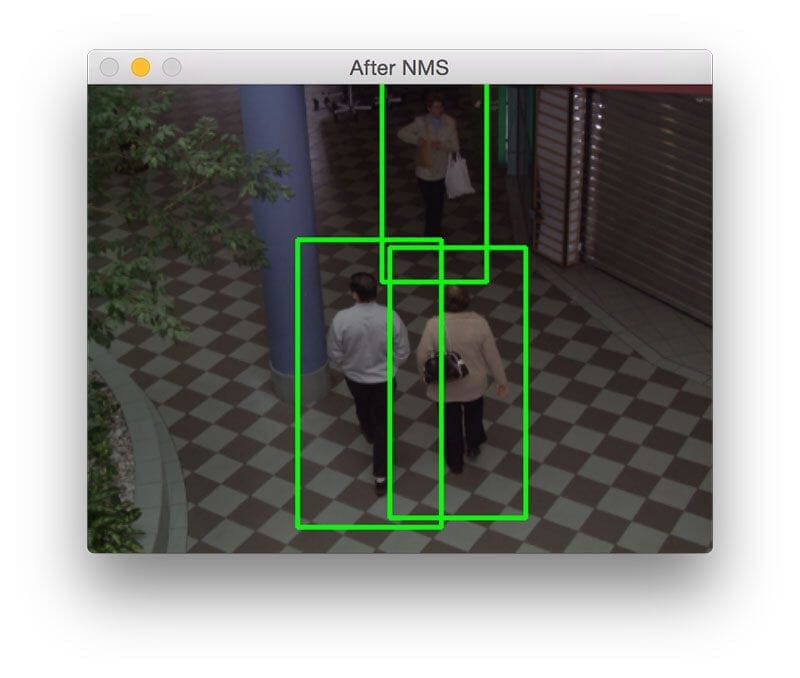

However, for some images you’ll notice that there are multiple, overlapping bounding boxes detected for each person (as demonstrated by Figure 1 above).

In this case, we have two options. We can detect if one bounding box is fully contained within another (as one of the OpenCV examples implements). Or we can apply non-maxima suppression and suppress bounding boxes that overlap with a significant threshold — and that’s exactly what Lines 38 and 39 do.
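The first option can be sketched in a few lines. The helpers below, is_inside and drop_contained, are hypothetical names of my own rather than OpenCV functions; they test whether one (x, y, w, h) box lies entirely within another and discard any box that does. The sample rectangles are made up.

```python
def is_inside(inner, outer):
    """Return True if the (x, y, w, h) box `inner` lies within `outer`."""
    ix, iy, iw, ih = inner
    ox, oy, ow, oh = outer
    return (ix >= ox and iy >= oy and
        ix + iw <= ox + ow and iy + ih <= oy + oh)

def drop_contained(rects):
    """Keep only boxes that are not fully contained in another box."""
    kept = []
    for i, r in enumerate(rects):
        contained = False
        for j, q in enumerate(rects):
            if i == j or not is_inside(r, q):
                continue
            # exact duplicates contain each other, so keep only the first
            if tuple(r) != tuple(q) or j < i:
                contained = True
                break
        if not contained:
            kept.append(r)
    return kept

rects = [(10, 10, 100, 200), (30, 40, 40, 80), (150, 20, 60, 120)]
filtered = drop_contained(rects)
print("{} boxes in, {} boxes out".format(len(rects), len(filtered)))
```

Note that this only handles full containment; two boxes that overlap heavily without one enclosing the other both survive, which is the case non-maxima suppression is better suited to.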

Note: If you’re interested in learning more about the HOG framework and non-maxima suppression, I would start by reading this introductory post on the 6-step framework. From there, check out this post on simple non-maxima suppression followed by an updated post that implements the optimized Malisiewicz method.

After applying non-maxima suppression, we draw the finalized bounding boxes on Lines 42 and 43, display some basic information about the image and number of bounding boxes on Lines 46-48, and finally display our output images to our screen on Lines 51-53.
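One portability note on the filename extraction: slicing on "/" assumes POSIX-style paths. A possible alternative, which is not part of the original script, is os.path.basename from the standard library. The path below is a made-up example.

```python
import os

image_path = "images/person_010.bmp"  # hypothetical path

# approach used in the script: slice after the last forward slash
by_slicing = image_path[image_path.rfind("/") + 1:]

# standard-library alternative that follows the platform's path rules
by_basename = os.path.basename(image_path)

print(by_slicing, by_basename)
```

Both give the same result for this path; the difference only shows up with paths that use a different separator.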

Results of pedestrian detection in images

To see our pedestrian detection script in action, just issue the following command:

$ python detect.py --images images

Below I have provided a sample of results from the detection script:

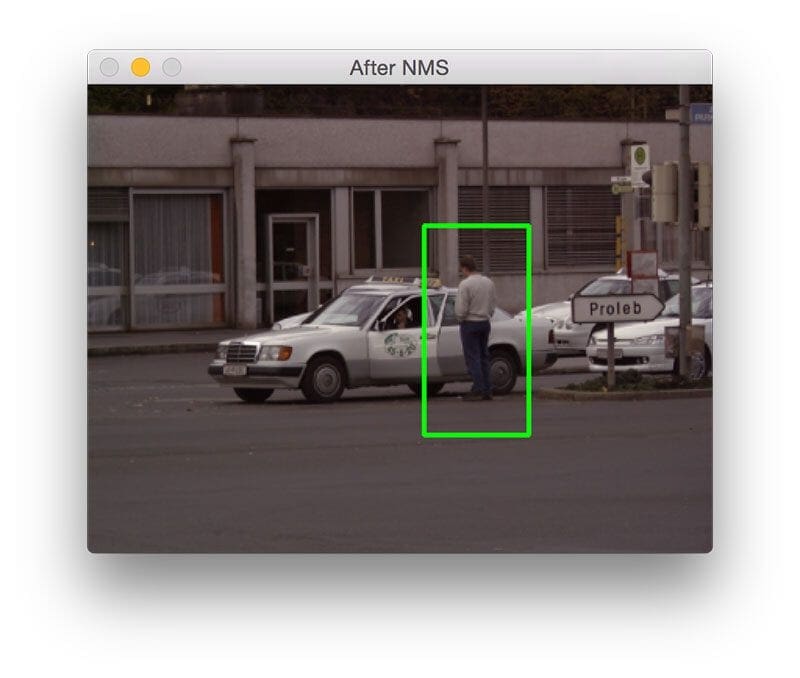

Here we have detected a single person standing next to a police car.

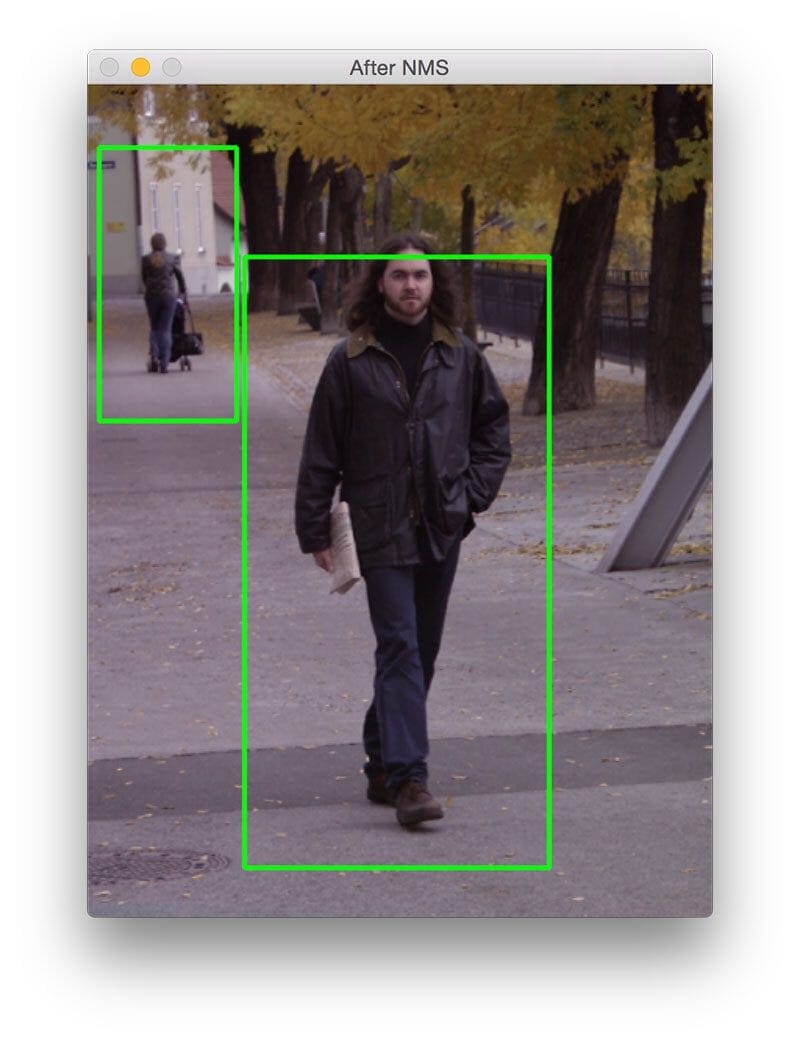

In the above example we can see a man detected in the foreground of the image, while a woman pushing a baby stroller is detected in the background.

The above image serves as an example of why applying non-maxima suppression is important. The detectMultiScale function falsely detected two bounding boxes (along with the correct bounding box), both overlapping the true person in the image. By applying non-maxima suppression we were able to suppress the extraneous bounding boxes, leaving us with the true detection.

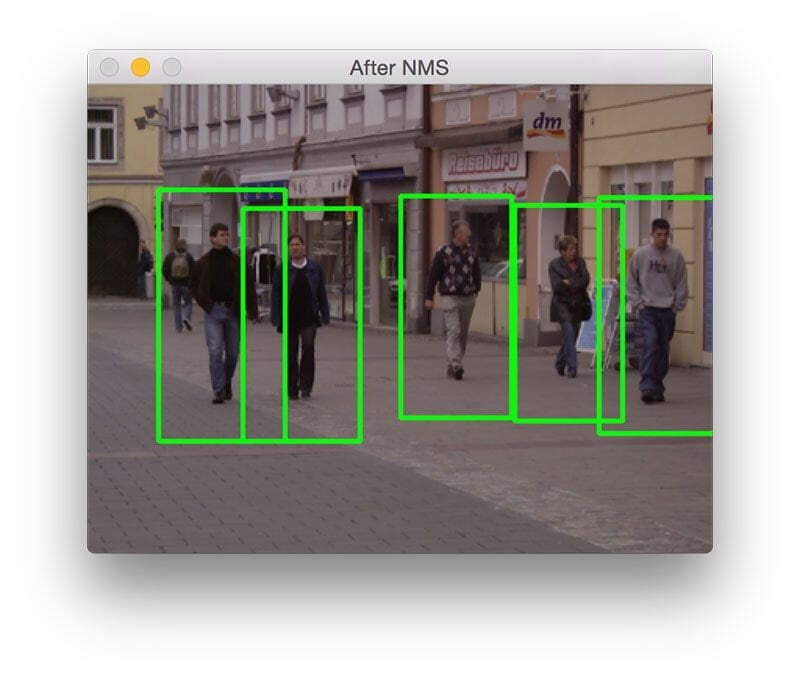

Again, we see that multiple false bounding boxes are detected, but by applying NMS we can remove them, leaving us with the true detection in the image.

Here we are detecting pedestrians in a shopping mall. Notice two people are walking away from the camera while another is walking towards the camera. In either case, our HOG method is able to detect the people. The larger overlapThresh in the non_max_suppression function ensures that the bounding boxes are not suppressed, even though they do partially overlap.
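To see why the threshold matters here, consider two made-up boxes for people walking side by side. The sketch computes the fraction of the first box that is covered by the intersection, which is the kind of ratio an NMS routine compares against overlapThresh. The exact formula inside imutils may differ in detail, so treat this as an approximation.

```python
def overlap_ratio(box, other):
    """Fraction of `box` covered by its intersection with `other`.

    Both boxes are (x1, y1, x2, y2) corner coordinates.
    """
    inter_w = max(0, min(box[2], other[2]) - max(box[0], other[0]))
    inter_h = max(0, min(box[3], other[3]) - max(box[1], other[1]))
    box_area = (box[2] - box[0]) * (box[3] - box[1])
    return float(inter_w * inter_h) / box_area

# hypothetical detections of two different, partially overlapping people
person_a = (100, 50, 180, 250)
person_b = (150, 55, 230, 255)
ratio = overlap_ratio(person_a, person_b)

for thresh in (0.3, 0.65):
    verdict = "suppressed" if ratio > thresh else "kept"
    print("overlapThresh={}: ratio={:.3f} -> {}".format(thresh, ratio, verdict))
```

With a threshold of 0.65 both people keep their boxes, while a stricter threshold such as 0.3 would wrongly merge them into one detection.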

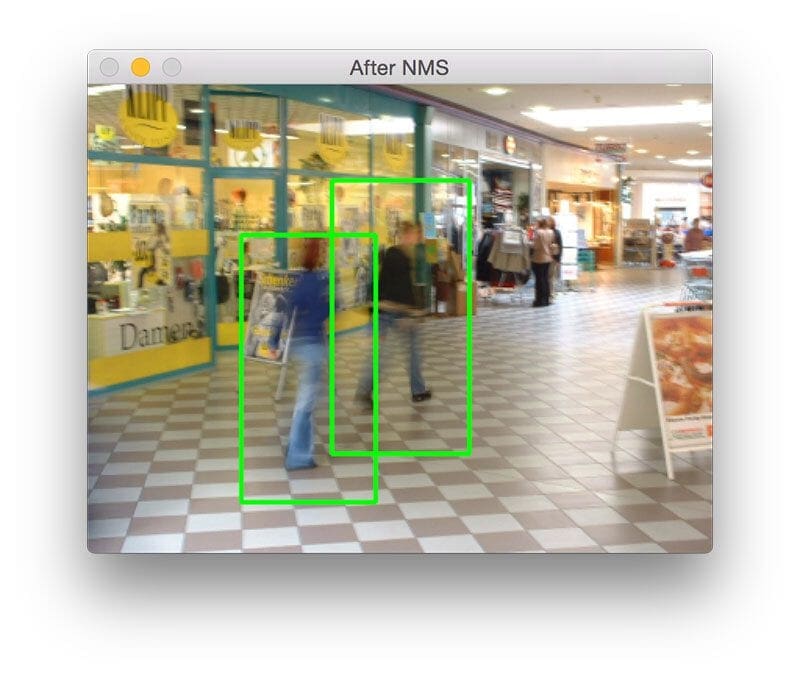

I was particularly surprised by the results of the above image. Normally the HOG descriptor does not perform well in the presence of motion blur, yet we are still able to detect the pedestrians in this image.

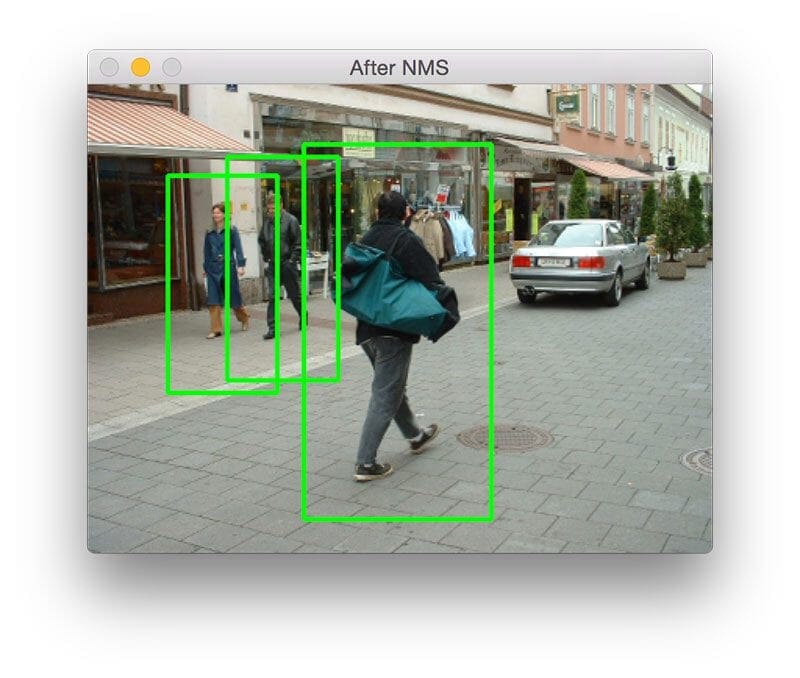

This is another example of multiple, overlapping bounding boxes, but due to the larger overlapThresh they are not suppressed, leaving us with the correct person detections.

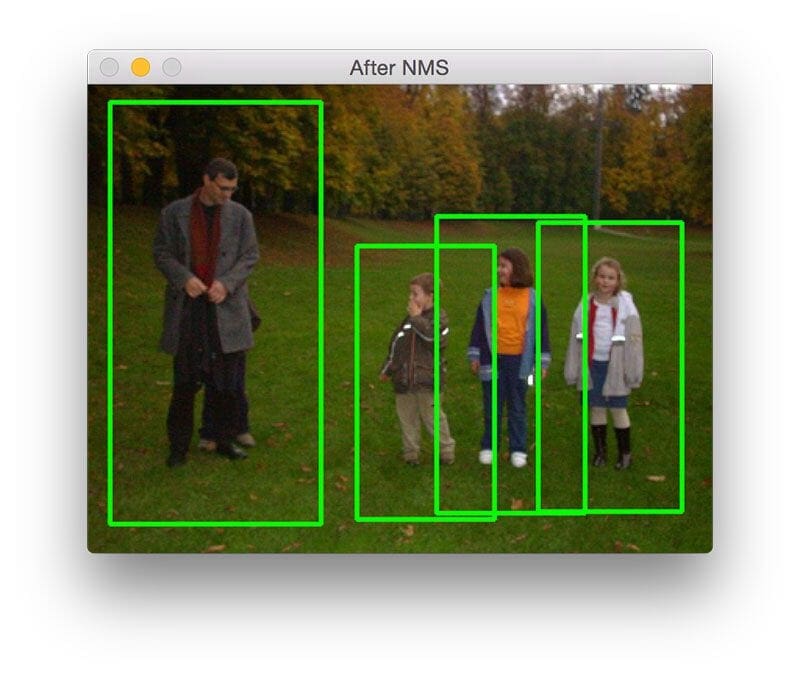

The above image shows the versatility of our HOG + SVM pedestrian detector. We are able to detect not only the adult male, but also the three small children. (Note that the detector is not able to find the other child hiding behind his [presumed to be] father.)

I include this image last simply because I find it amusing. We are clearly viewing a road sign, likely used to indicate a pedestrian crossing. However, our HOG + SVM detector marks the two people in this image as positive classifications!


Summary

In this blog post we learned how to perform pedestrian detection using the OpenCV library and the Python programming language.

The OpenCV library actually ships with a pre-trained HOG + Linear SVM detector based on the Dalal and Triggs method to automatically detect pedestrians in images.

While the HOG method tends to be more accurate than its Haar counterpart, it still requires that the parameters to detectMultiScale be set properly. In future blog posts, I’ll review each of the parameters to detectMultiScale, detail how to tune each of them, and describe the trade-offs between accuracy and performance.

Anyway, I hope you enjoyed this article! I’m planning on doing more object detection tutorials in the future, so if you want to be notified when these posts go live, please consider subscribing to the newsletter.

I also cover object detection using the HOG + Linear SVM method in detail inside the PyImageSearch Gurus course, so be sure to take a look!
