
Introduction to OpenCV AI Kit (OAK)

We are super excited to start a new series on the Luxonis OpenCV AI Kit (OAK), one-of-a-kind embedded vision hardware. OAK is a one-stop solution for deploying computer vision and deep learning applications because it has multiple cameras and a neural network inference accelerator baked right into a coin-size device. It is powered by the Intel Movidius Myriad X Visual Processing Unit (VPU) for neural network inference.

In today’s tutorial, we will introduce you to the OAK hardware family and discuss its capabilities and the numerous applications that can run on OAK. We will also discuss how OAK, with stereo depth, can simultaneously run advanced neural networks and provide depth information, which makes it one-of-a-kind embedded hardware on the market. In short, we would like to give you a holistic view of the OAK device and showcase its true potential.

This lesson is the 1st in a 4-part series on OAK 101:

- Introduction to OpenCV AI Kit (OAK) (today’s tutorial)

- OAK-D: Understanding and Running Neural Network Inference with DepthAI API

- Training a Custom Image Classification Network for OAK-D

- Deploying a Custom Image Classifier on an OAK-D

To learn what OAK-D has to offer in computer vision and spatial AI, and why it is one of the best embedded vision platforms on the market for hobbyists and enterprises, just keep reading.

Introduction to OpenCV AI Kit (OAK)

Introduction

To celebrate the 20th anniversary of the OpenCV library, Luxonis partnered with the official OpenCV.org organization to create the OpenCV AI Kit, which consists of an MIT-licensed open-source software API and a Myriad X-based embedded board/camera.

In July 2020, Luxonis and the OpenCV organization launched a Kickstarter campaign to fund the creation of these fantastic embedded AI boards. The campaign was a mega success; it raised roughly $1.3M in funding from 6500+ Kickstarter backers, potentially making it one of the most successful embedded boards in crowdfunding history!

When the product was first made public via Kickstarter, OAK consisted of the OAK API software suite, supporting both Python and OpenCV, which allowed users to program the device, and two different types of hardware:

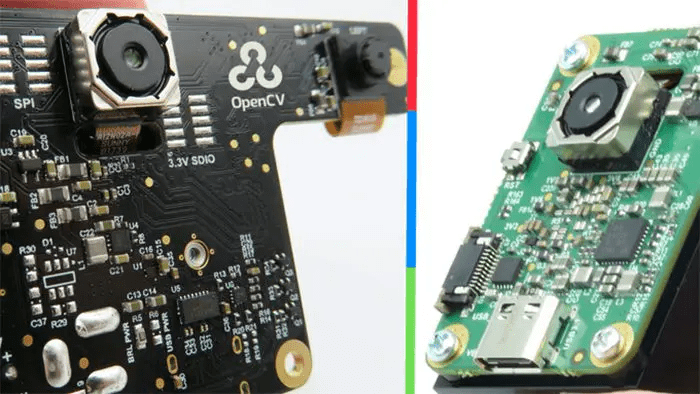

- OAK-1: The standard OpenCV AI board (shown in Figure 1 (left)) that can perform neural network inference, object detection, object tracking, feature detection, and basic image processing operations.

- OAK-D: Everything in OAK-1, plus a stereo depth camera pair for 3D object localization and object tracking in 3D space. OAK-D (shown in Figure 1 (right)) was the flagship product, able to simultaneously run advanced neural networks and provide object depth information.

OAK-D provides spatial AI by leveraging two grayscale (mono) cameras in addition to the 4K/30FPS 12MP camera shared by both OAK-1 and OAK-D. OAK-1 and OAK-D are super easy to use, even more so with the DepthAI API. Trust us: whether you are a researcher, hobbyist, or professional, you won’t be disappointed, as OAK allows anyone to leverage its power to the fullest.

A year after launching OAK-1 and OAK-D, in September 2021, Luxonis and OpenCV launched a second Kickstarter campaign, this time for an OAK variant, the OAK-D Lite (shown in Figure 2). As the name suggests, it is lighter and has a smaller form factor but offers the same Spatial AI functionality as OAK-D. In addition, while OAK-1 and OAK-D were priced at $99 and $149, respectively, OAK-D Lite was priced even lower than OAK-1, at just $89.

To reduce cost and shrink the form factor, the OAK-D Lite uses lower-resolution mono cameras and drops the IMU (Inertial Measurement Unit), which is costly and rarely used. Thanks to these changes, OAK-D Lite requires less power: a single USB-C connection delivering 900 mA at 5 V is enough to power it.

The list of OAK variants is not limited to OAK-1, OAK-D, and OAK-D Lite. Luxonis offers many more variants, targeting almost every market segment, from developers and researchers to enterprises that need production-grade deployment.

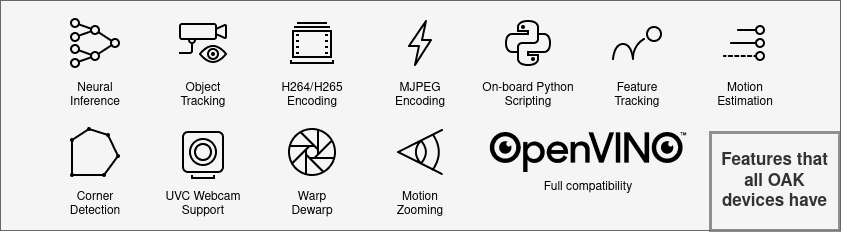

All OAK modules share many standard features, including Neural Network Inference, Computer Vision Methods (Object Tracking, Feature Tracking, Crop, Warp/Dewarp), Onboard Python Scripting, and OpenVINO compatibility for deploying deep learning models (as shown in Figure 3).

OAK Hardware

So far, we have discussed OAK-1 and OAK-D from the OAK hardware family, as well as one OAK variant (i.e., OAK-D Lite). In this section, we will dive deeper into what else Luxonis has to offer beyond OAK-1, OAK-D, and OAK-D Lite. Luxonis groups all its hardware offerings into three categories: family, variant, and connectivity, as shown in Figure 4.

As shown in Figure 4, the OAK family of modules offers three types of hardware; all have the same onboard Myriad X VPU for neural network inference and offer the set of standard features shown in Figure 3.

If you want to learn more about Intel’s Myriad X VPU, check out this product document.

OAK-1

OAK-1 comes packaged with a 12 MP RGB camera and is available with either USB or Power-over-Ethernet (PoE) connectivity. The Myriad X VPU chip allows it to perform 4 trillion operations per second onboard, keeping the host (computer) free. Since both the 12 MP camera and the neural inference chip are onboard, they communicate very quickly over an onboard 2.1 Gbps MIPI interface. As a result, overall inference is more than six times faster than in the standard setup (a Neural Compute Stick 2 connected to a computer over USB), where the host has to handle the video stream. Figure 5 shows an NCS2 connected to a laptop over USB.

Following are the numbers achieved when an object detection network (MobileNet-SSD) is run on OAK-1 versus an NCS2, with a Raspberry Pi as the host:

- OAK-1 + Raspberry Pi: 50+FPS, 0% RPi CPU Utilization

- NCS2 + Raspberry Pi: 8FPS, 225% RPi CPU Utilization
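As a quick sanity check on the "more than six times" claim, the two reported frame rates can be compared directly. This is plain arithmetic on the numbers above, treating 50 FPS as a lower bound since the OAK-1 figure is reported as 50+ FPS.

```python
# Reported MobileNet-SSD throughput with a Raspberry Pi host (from the list above).
oak1_fps = 50.0  # OAK-1 + Raspberry Pi, reported as 50+ FPS, so a lower bound
ncs2_fps = 8.0   # NCS2 + Raspberry Pi

# Ratio of frame rates: how many times faster OAK-1 processes frames.
speedup = oak1_fps / ncs2_fps
print(f"OAK-1 is at least {speedup:.2f}x faster")

# Time between consecutive results implied by each frame rate, in milliseconds.
oak1_ms = 1000.0 / oak1_fps
ncs2_ms = 1000.0 / ncs2_fps
print(f"{oak1_ms:.0f} ms vs {ncs2_ms:.0f} ms between frames")
```

The ratio comes out at 6.25, consistent with the "more than six times" figure quoted for OAK-1.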

OAK-D

Like OAK-1, the OAK-D module comes with a 12 MP RGB color camera and supports USB and PoE connectivity. Along with the RGB camera, it has a pair of grayscale stereo cameras for depth perception. Together, the three onboard cameras provide stereo and RGB vision, and their streams are piped directly into the OAK System on Module (SoM) for depth perception and AI inference.

One interesting fact that caught our attention is that the distance between the left and right grayscale stereo cameras is 7.5 cm, similar to the distance between the centers of the pupils of a person's two eyes. This baseline works well for most applications. The minimum perceivable distance is 20 cm, and the maximum perceivable distance is 35 meters.
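These range limits follow from the standard stereo relation Z = f·B/d, where f is the focal length in pixels, B is the baseline, and d is the disparity in pixels. The sketch below is illustrative only: it assumes 1280-pixel-wide mono frames, treats the 89.5° figure quoted in the next section as the horizontal FOV, and assumes a 95-pixel maximum disparity search range. None of these are confirmed OAK-D settings.

```python
import math


def focal_length_px(image_width_px: int, hfov_deg: float) -> float:
    """Pinhole focal length in pixels from the image width and horizontal FOV."""
    return (image_width_px / 2) / math.tan(math.radians(hfov_deg) / 2)


def depth_from_disparity(disparity_px: float, baseline_cm: float, focal_px: float) -> float:
    """Stereo depth Z = f * B / d, returned in the same unit as the baseline."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive (zero disparity means infinite depth)")
    return focal_px * baseline_cm / disparity_px


# Assumed values for illustration, not official OAK-D specifications.
f_px = focal_length_px(image_width_px=1280, hfov_deg=89.5)
baseline_cm = 7.5
max_disparity_px = 95

# The largest disparity the matcher can search sets the closest measurable depth.
min_depth_cm = depth_from_disparity(max_disparity_px, baseline_cm, f_px)
print(f"f ~ {f_px:.0f} px, closest measurable depth ~ {min_depth_cm:.0f} cm")
```

Because depth is inversely proportional to disparity, halving the image width halves f in pixels and therefore halves this minimum depth, which is one reason the closest measurable distance depends on the stereo resolution in use.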

Limitation

The stereo cameras in OAK-D have a baseline of 7.5 cm, denoted as BL in Figure 6. The grayscale stereo camera pair has an 89.5° Field of View (FOV), and because the two cameras are 7.5 cm apart, there is a blind-spot zone that only one of the two cameras can see.

Depth is computed from disparity (depth is inversely proportional to disparity), which requires a point to appear in both images; this is what creates the blind spots. For example, areas on the left side of the left mono camera and on the right side of the right mono camera are seen by only one of the two cameras, so depth cannot be calculated there. In Figure 6, these areas are marked with B.

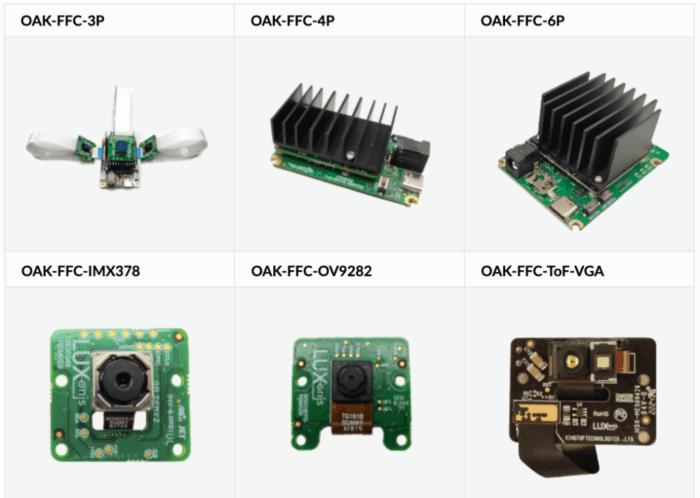
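To make the blind spot concrete, here is a small 2D geometry sketch under simplifying assumptions: two ideal pinhole cameras with parallel optical axes, and the 89.5° figure treated as the horizontal FOV of each camera. It shows that, once the two views start to overlap, the strip seen by only one camera on each side is exactly one baseline wide at every depth.

```python
import math


def stereo_coverage(z_cm: float, baseline_cm: float, hfov_deg: float):
    """Return (left_only, overlap, right_only) x-intervals visible at depth z.

    The left camera sits at x = 0 and the right camera at x = baseline, both
    facing +z with the same horizontal FOV. Intervals are (x_min, x_max) in cm;
    overlap is None when the two views do not intersect yet.
    """
    t = math.tan(math.radians(hfov_deg) / 2)
    left = (-z_cm * t, z_cm * t)
    right = (baseline_cm - z_cm * t, baseline_cm + z_cm * t)
    lo, hi = max(left[0], right[0]), min(left[1], right[1])
    if lo >= hi:
        # No common region: everything visible is seen by a single camera.
        return left, None, right
    # Left-only strip, region seen by both cameras, right-only strip.
    return (left[0], lo), (lo, hi), (hi, right[1])


baseline_cm, hfov_deg = 7.5, 89.5  # values quoted in the text
t = math.tan(math.radians(hfov_deg) / 2)
print(f"views start overlapping at z ~ {baseline_cm / (2 * t):.1f} cm")
for z in (50.0, 200.0):
    left_only, both, right_only = stereo_coverage(z, baseline_cm, hfov_deg)
    width = left_only[1] - left_only[0]
    print(f"z={z:.0f} cm: single-camera strip on each side is {width:.1f} cm wide")
```

In a real device, lens distortion, image rectification, and the disparity search range shift these boundaries, so the numbers are only a geometric intuition for the B regions in Figure 6.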

OAK-FFC

The OAK-FFC modular lineup, shown in Figure 7, is for developers and practitioners who need flexibility in their hardware setup and whose application the standard OAK-D does not fit well. These modules are aimed at applications that require custom mounting, a custom baseline (the distance between the left and right cameras), or a custom camera orientation. The modularity allows you to place the stereo cameras at various baselines, unlike OAK-D, whose baseline is fixed at 7.5 cm.
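Choosing a custom baseline is a trade-off. Under the standard stereo relation Z = f·B/d, a wider baseline lowers depth error at long range but pushes the minimum measurable depth farther out. The sketch uses the common first-order error approximation ΔZ ≈ Z²·Δd/(f·B) with a 1-pixel disparity error, a hypothetical 800-pixel focal length, and an assumed 95-pixel maximum disparity; it is for intuition only, not an OAK-FFC specification.

```python
def depth_error_cm(z_cm, baseline_cm, focal_px, disparity_err_px=1.0):
    """First-order depth uncertainty: dZ ~ Z^2 * dd / (f * B)."""
    return z_cm ** 2 * disparity_err_px / (focal_px * baseline_cm)


def min_depth_cm(baseline_cm, focal_px, max_disparity_px=95):
    """Closest measurable depth: Z_min = f * B / d_max."""
    return focal_px * baseline_cm / max_disparity_px


focal_px = 800.0  # hypothetical focal length in pixels, for illustration only
for baseline in (7.5, 15.0):
    print(f"B={baseline:4.1f} cm  "
          f"min depth={min_depth_cm(baseline, focal_px):6.1f} cm  "
          f"error at 5 m={depth_error_cm(500.0, baseline, focal_px):5.1f} cm")
```

Doubling the baseline halves the depth error at a given distance but also doubles the closest distance at which depth can be measured.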

As the saying goes, “Nothing worth having comes easy.” This flexibility comes with a challenge: you need to figure out how and where to mount the cameras, and once they are mounted, you need to perform stereo calibration. All of this groundwork is already done for you in OAK-D, so keep in mind that the OAK-FFC modules aren’t “plug and play” like OAK-1, OAK-D, OAK-D Lite, etc. In short, you need to be a pro at working with these modular devices, or have some experience setting up custom hardware, to leverage the power of OAK-FFC.

OAK USB Hardware Offerings

The first OAK modules brought to market through a Kickstarter campaign used USB connectivity, and they remain the most popular modules. OAK-1, OAK-D, and OAK-D Lite have been solving real-world problems for over two years. A USB connection is great for development – it’s easy to use and allows up to 10 Gbps of throughput.
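To see how link throughput relates to streaming frames back to a host, here is back-of-the-envelope arithmetic for uncompressed video. The resolutions, frame rate, and pixel formats are illustrative assumptions (a 1080p color stream in a 12-bit-per-pixel YUV420 format plus two 8-bit 1280x800 mono streams), and real links deliver less than their nominal rate because of protocol overhead.

```python
def stream_mbps(width, height, bits_per_pixel, fps):
    """Uncompressed video bandwidth in megabits per second."""
    return width * height * bits_per_pixel * fps / 1e6


# Assumed streams: YUV420 color averages 12 bits per pixel; mono is 8 bits per pixel.
rgb_1080p = stream_mbps(1920, 1080, 12, 30)
mono_800p = stream_mbps(1280, 800, 8, 30)
total = rgb_1080p + 2 * mono_800p  # one color stream plus a stereo pair

usb_gbps = 10  # nominal USB throughput quoted in the text
print(f"1080p color: {rgb_1080p:.0f} Mbps, stereo pair: {2 * mono_800p:.0f} Mbps")
print(f"total: {total / 1000:.2f} Gbps of a nominal {usb_gbps} Gbps link")
```

Raw streams add up quickly, which is one reason running inference on the device and sending back only results (or encoded video) keeps the host link lightly loaded.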

The more recent OAK variants, like the OAK-D Pro, have active stereo and night vision capabilities, making them even more promising edge devices. It is often difficult to find a camera that supports night vision, let alone one that runs neural network inference in that scenario. So what could be better than having all these capabilities baked into one device that perceives low-light environments and then performs neural network inference on those frames (as shown in Figure 9)?

OAK PoE Hardware Offerings

OAK PoE modules are very similar to the USB modules, but they use PoE connectivity instead of USB. PoE cables have a much longer range than USB. Another critical difference is that they feature onboard flash, so you can run pipelines in standalone mode. This means you do not need to connect your OAK to a host: the OAK PoE can run inference hostless, on the edge.

The PoE devices have IP67-rated enclosures, which means they are dust-tight and protected against temporary immersion in water.

OAK Developer Kit

The OAK developer kit is our favorite of all the variants Luxonis offers. In simple terms, the developer kit is like combining a Raspberry Pi (or another single-board computer, such as Nvidia’s Jetson Nano) with an OAK-D in one compact package. The onboard computer acts as the host for the OAK-D and makes the device fully standalone.

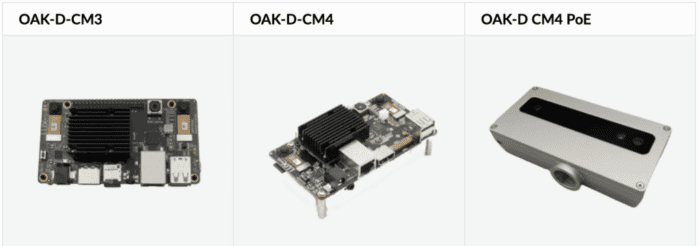

Figure 11 shows three variants: the OAK-D-CM3, OAK-D-CM4, and OAK-D CM4 PoE. The latest Compute Module 4 variant integrates the Raspberry Pi Compute Module 4 (Wireless, 4 GB RAM) and all of its interfaces (USB, Ethernet, 3.5 mm Audio, etc.). Raspberry Pi OS comes flashed onto the 32 GB eMMC storage, and the DepthAI Python interface is provided to connect to, configure, build pipelines for, and communicate with the OAK device.

OAK Modules Comparison

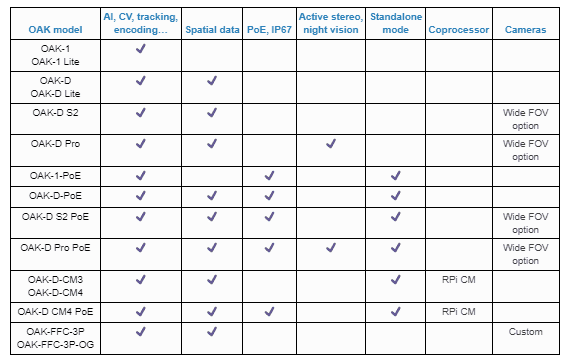

Table 1 shows all the OAK variants and the features and functionalities each offers. As the table shows, all OAK models support AI and CV functionalities, since every module comes with at least one camera and a Myriad X chip. In addition, all devices except the OAK-1 variants support spatial data (i.e., depth perception).

All OAK variants with PoE connectivity also have an IP67 rating, as the table shows. In addition, the OAK-D Pro USB and PoE support active stereo and night vision, taking computer vision a notch higher.

Recall that the OAK PoE variants have onboard flash, so they can run without a host. Similarly, the variants with Raspberry Pi Compute Modules already include the host. Hence, both the PoE and CM variants support standalone mode. Additionally, the CM modules have a coprocessor (i.e., the Raspberry Pi CM 4).

Applications on OAK

In this section, we will discuss a few of the applications that can run on OAK. Many run out of the box on OAK-1, while some require OAK-D.

Image Classifier On-Device

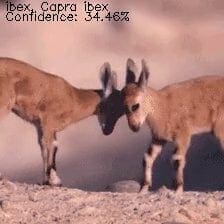

Image classification is like the “hello world” of deep learning for computer vision. As on most edge devices, you can efficiently run image classification models on the OAK device. Figure 12 shows an example of running an EfficientNet-B0 variant, which classifies the animal as an Ibex (Mountain Goat) with a probability of 34.46%. This EfficientNet classifier is trained on the 1000-class ImageNet dataset and can be loaded on the fly with the DepthAI API.
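On the host, turning a classifier's output vector into a label and probability usually comes down to a softmax (if the network emits raw scores) followed by picking the highest-probability classes. The sketch below uses a made-up 4-class output rather than real EfficientNet scores; whether a given OAK model already applies softmax on device depends on the model, so treat that step as an assumption.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of raw class scores."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def top_k(probs, labels, k=3):
    """Return the k (label, probability) pairs with the highest probability."""
    ranked = sorted(zip(labels, probs), key=lambda pair: pair[1], reverse=True)
    return ranked[:k]


# Hypothetical raw scores for a toy 4-class model (not real EfficientNet output).
labels = ["ibex", "bighorn", "ram", "gazelle"]
logits = [2.0, 1.5, 0.5, -1.0]
probs = softmax(logits)
for label, p in top_k(probs, labels, k=2):
    print(f"{label}: {p:.2%}")
```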

Face Detection

Figure 13 shows a face detection application running on an OAK device, based on the libfacedetection implementation. It detects faces in images along with five facial landmarks: the two eyes, the nose tip, and the two mouth corners. The detector achieves 40 FPS on the OAK device.

Face Mask Detection

Figure 14 shows a face mask/no-mask detector that uses the MobileNet SSD v2 object detection model to detect whether people are wearing a mask. As the animation in Figure 14 shows, the model does quite well. This was, of course, run on the OAK-D. You can read more about this here.

People Counting

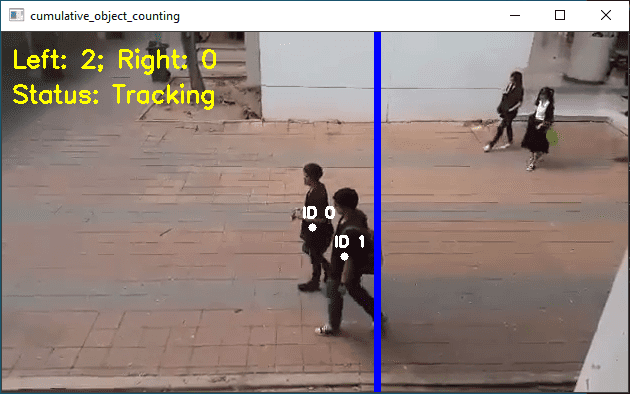

Object counting is a two-step process: detect the objects, then count the unique occurrences with the help of an object tracker. Figure 15 shows a demonstration of counting people walking left and right. It is inspired by the PyImageSearch blog post OpenCV People Counter. People counting is useful when you need to count the number of people entering and exiting a mall or a department store. Refer to the GitHub repository to learn more about object tracking on OAK.
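The counting step can be sketched in a few lines once a tracker has assigned IDs. Assuming each tracked person is reduced to a history of centroid x positions (the tracker itself is not shown), a person is counted when their centroid first crosses a virtual vertical line. The data layout and line position are hypothetical choices for illustration.

```python
def count_crossings(tracks, line_x):
    """Count tracks whose centroid crosses a vertical line at x = line_x.

    tracks maps a track ID to the list of centroid x positions observed over
    time. Each track is counted at most once, by the direction of its first
    crossing.
    """
    counts = {"left": 0, "right": 0}
    for xs in tracks.values():
        for prev, curr in zip(xs, xs[1:]):
            if prev < line_x <= curr:
                counts["right"] += 1
                break
            if prev >= line_x > curr:
                counts["left"] += 1
                break
    return counts


tracks = {
    1: [100, 180, 260, 340],  # walks right and crosses x = 240
    2: [400, 300, 200],       # walks left and crosses x = 240
    3: [50, 60, 70],          # never reaches the line
}
print(count_crossings(tracks, line_x=240))  # {'left': 1, 'right': 1}
```

Counting each ID only once avoids double counting people who linger near the line, at the cost of ignoring anyone who crosses back.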

Age and Gender Recognition

Figure 16 shows a great example of running two-stage inference with the DepthAI library on OAK-D. It first detects the faces in a given frame, crops them, and then sends those faces to an age-gender classification model. The face detection and age-gender models are available in OpenVINO’s GitHub repository, inside Intel’s directory. You can also refer to the official OpenVINO docs for pre-trained models.
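The control flow of a two-stage pipeline can be illustrated independently of the hardware: detect, crop each detection, and feed each crop to a second model. On OAK this runs on device through DepthAI pipeline nodes; the host-side Python below only shows the data flow, with hypothetical stand-in functions in place of the real face detection and age-gender networks.

```python
from typing import Callable, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max) in pixels
Image = List[List[int]]          # grayscale image as rows of pixel values


def crop(image: Image, box: Box) -> Image:
    """Cut the box region out of the image."""
    x0, y0, x1, y1 = box
    return [row[x0:x1] for row in image[y0:y1]]


def two_stage(image: Image,
              detect: Callable[[Image], List[Box]],
              classify: Callable[[Image], str]) -> List[Tuple[Box, str]]:
    """Stage 1 finds regions of interest; stage 2 classifies each cropped region."""
    return [(box, classify(crop(image, box))) for box in detect(image)]


# Hypothetical stand-in models, for illustration only.
def fake_detect(image: Image) -> List[Box]:
    return [(0, 0, 2, 2), (2, 1, 4, 3)]


def fake_classify(face: Image) -> str:
    mean = sum(map(sum, face)) / (len(face) * len(face[0]))
    return "bright" if mean > 100 else "dark"


img = [[200, 200, 10, 10],
       [200, 200, 10, 10],
       [0, 0, 10, 10]]
print(two_stage(img, fake_detect, fake_classify))
```

The gaze estimation example below follows the same idea with more stages, some of which run in parallel.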

Gaze Estimation

Gaze estimation is a more complex but interesting example. It predicts where a person is looking, given the person’s entire face. As shown in Figure 17, the two gaze vectors move with the person’s eye movements.

This example shows that OAK can run more complex applications involving not one but 4-5 different neural network models. Whereas the previous example was a two-stage inference pipeline, gaze estimation is a three-stage inference pipeline (3-stage, 2-parallel) with DepthAI. You can learn more about it in the Luxonis repository.

Automated Face-Blurring

A somewhat simpler example runs a pre-trained face detection neural network on the OAK device to extract the face regions of interest from a given image. The face regions are then blurred on the host using standard OpenCV methods. The end-to-end application shown in Figure 18 runs in real time.
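The host-side step can be sketched without OpenCV. DepthAI detection results typically carry bounding boxes in normalized [0, 1] coordinates, so they are first scaled to pixels and clamped to the frame. The sketch then anonymizes the region by pixelation (block averaging), a swapped-in technique standing in for the OpenCV blur used in the example, on a tiny list-of-lists grayscale image.

```python
def to_pixels(bbox_norm, width, height):
    """Convert a normalized (xmin, ymin, xmax, ymax) box to clamped integer pixels."""
    def clamp(value, upper):
        return max(0, min(int(value), upper))

    xmin, ymin, xmax, ymax = bbox_norm
    return (clamp(xmin * width, width), clamp(ymin * height, height),
            clamp(xmax * width, width), clamp(ymax * height, height))


def pixelate_roi(image, box, block=2):
    """Replace each block x block tile inside box with its mean value, in place."""
    x0, y0, x1, y1 = box
    for by in range(y0, y1, block):
        for bx in range(x0, x1, block):
            ys = range(by, min(by + block, y1))
            xs = range(bx, min(bx + block, x1))
            tile = [image[y][x] for y in ys for x in xs]
            mean = sum(tile) // len(tile)
            for y in ys:
                for x in xs:
                    image[y][x] = mean
    return image


# Toy 4x4 grayscale frame and one hypothetical face detection.
frame = [[0, 4, 9, 9],
         [8, 4, 9, 9],
         [9, 9, 9, 9],
         [9, 9, 9, 9]]
face_box = to_pixels((0.0, 0.0, 0.5, 0.5), width=4, height=4)
pixelate_roi(frame, face_box)
print(face_box, frame[0], frame[1])
```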

Human Skeletal Pose Estimation

Figure 19 shows an example of a human pose estimation network using the Gen2 Pipeline Builder on an OAK device. This is a multi-person 2D pose estimation network (based on the OpenPose approach). The network detects a human pose for every person in an image: a body skeleton consisting of keypoints and the connections between them. For example, the pose may contain 18 keypoints, such as ears, eyes, nose, neck, shoulders, elbows, etc. You can load the human pose estimation model off the shelf with the blobconverter module, which converts OpenVINO models to the Myriad X blob format.
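Once the network has produced keypoints for a person, drawing the skeleton is a matter of connecting known keypoint pairs. This sketch assumes the 18-keypoint COCO ordering commonly used by OpenPose-style models (check the ordering of the specific model you deploy) and assumes the harder multi-person grouping step has already assigned keypoints to each person.

```python
# Keypoint order assumed to follow the 18-point COCO layout used by OpenPose.
KEYPOINTS = ["nose", "neck", "r_shoulder", "r_elbow", "r_wrist",
             "l_shoulder", "l_elbow", "l_wrist", "r_hip", "r_knee",
             "r_ankle", "l_hip", "l_knee", "l_ankle", "r_eye",
             "l_eye", "r_ear", "l_ear"]

# Limb connections as pairs of keypoint names (a common subset for drawing).
LIMBS = [("neck", "r_shoulder"), ("r_shoulder", "r_elbow"), ("r_elbow", "r_wrist"),
         ("neck", "l_shoulder"), ("l_shoulder", "l_elbow"), ("l_elbow", "l_wrist"),
         ("neck", "r_hip"), ("r_hip", "r_knee"), ("r_knee", "r_ankle"),
         ("neck", "l_hip"), ("l_hip", "l_knee"), ("l_knee", "l_ankle"),
         ("neck", "nose"), ("nose", "r_eye"), ("r_eye", "r_ear"),
         ("nose", "l_eye"), ("l_eye", "l_ear")]


def skeleton_segments(points):
    """Return line segments for limbs whose two endpoints were both detected.

    points maps keypoint index -> (x, y); undetected keypoints are absent.
    """
    index = {name: i for i, name in enumerate(KEYPOINTS)}
    segments = []
    for a, b in LIMBS:
        ia, ib = index[a], index[b]
        if ia in points and ib in points:
            segments.append((points[ia], points[ib]))
    return segments


# Hypothetical detections: nose, neck, right shoulder, right elbow.
person = {0: (50, 20), 1: (50, 40), 2: (35, 40), 3: (30, 60)}
print(skeleton_segments(person))
```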

3D Object Detection

Object detection with depth requires an OAK-D (it will not work on OAK-1 devices), and so do the two examples that follow.

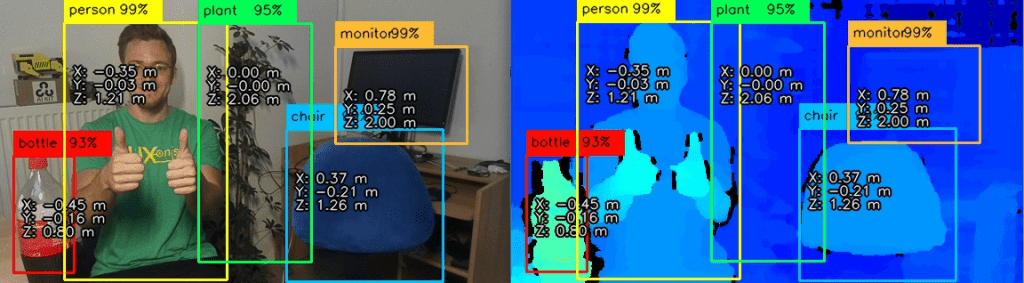

In simple terms, 3D object detection is about finding an object’s location/coordinates in physical space instead of pixel space (2D). Hence, it is useful when measuring or interacting with the physical world in real time. Figure 20 shows an example of neural inference fused with stereo depth, where DepthAI extends 2D object detectors like SSD and YOLO with spatial information to give them 3D context.

In Figure 20, a MobileNet detects objects and fuses the detections with a depth map to provide the spatial coordinates (XYZ) of the objects it sees: person, monitor, potted plant, bottle, and chair.
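The fusion step can be sketched as: take a detection's bounding box, summarize the depth values inside it, and back-project the box center through a pinhole camera model. This is an illustration under assumptions (known focal length and principal point in pixels, a depth map aligned to the detection frame, zero meaning "no depth"), using the median as one robust summary; DepthAI's own spatial calculation may differ in detail.

```python
import statistics


def roi_depth_mm(depth, box):
    """Median of valid (non-zero) depth values inside box = (x0, y0, x1, y1)."""
    x0, y0, x1, y1 = box
    values = [depth[y][x] for y in range(y0, y1) for x in range(x0, x1)
              if depth[y][x] > 0]
    return statistics.median(values) if values else None


def spatial_xyz(box, depth, focal_px, cx, cy):
    """Back-project the box center to camera coordinates (X, Y, Z) in mm.

    Uses X = (u - cx) * Z / f and Y = (v - cy) * Z / f; Y grows downward,
    following the image row direction.
    """
    z = roi_depth_mm(depth, box)
    if z is None:
        return None
    u = (box[0] + box[2]) / 2
    v = (box[1] + box[3]) / 2
    return ((u - cx) * z / focal_px, (v - cy) * z / focal_px, z)


# Toy 4x4 depth map in millimeters (0 = invalid) and a hypothetical detection box.
depth = [[0, 0, 1000, 1000],
         [0, 0, 0, 1200],
         [0, 0, 0, 0],
         [0, 0, 0, 0]]
print(spatial_xyz((2, 0, 4, 2), depth, focal_px=500.0, cx=2.0, cy=2.0))
```

The same back-projection applied to individual keypoints instead of box centers is the idea behind lifting 2D landmarks into 3D.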

3D Landmark Localization

The 3D hand landmark detector is very similar to the previous example. With a regular camera, this network returns the 2D (XY) coordinates of all 21 hand landmarks (the wrist plus the joints and tips of the fingers). Then, using the same concept of neural inference fused with stereo depth, these 21 hand landmarks are converted to 3D points in physical space, as shown in Figure 21. The application achieves roughly 20 FPS on the OAK-D.

Semantic Segmentation with Depth

Like image classification, object detection, and keypoint detection, you can also run semantic segmentation on OAK-D. Figure 22 demonstrates an example that runs DeepLabv3+ at 27 FPS with the DepthAI API. It further crops the depth image based on the semantic segmentation model output. The first window is the semantic segmentation output, the second is the depth map cropped based on the segmentation output, and the third is the full depth map.
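Cropping the depth map with the segmentation output amounts to a per-pixel mask. A minimal sketch, assuming the class map and depth map are the same size and aligned, and that 0 marks invalid depth; the label ID used for "person" follows the PASCAL VOC convention and is an assumption about the model.

```python
def depth_for_class(depth, labels, class_id):
    """Keep depth only where the segmentation label equals class_id (else 0)."""
    return [[d if lab == class_id else 0 for d, lab in zip(drow, lrow)]
            for drow, lrow in zip(depth, labels)]


def class_depth_stats(depth, labels, class_id):
    """Return (min, max) of valid depth for a class, or None if it is absent."""
    vals = [d for drow, lrow in zip(depth, labels)
            for d, lab in zip(drow, lrow) if lab == class_id and d > 0]
    return (min(vals), max(vals)) if vals else None


PERSON = 15  # "person" in the PASCAL VOC label set (assumed for this model)
depth = [[800, 820, 3000],
         [810, 0, 3100]]
labels = [[PERSON, PERSON, 0],
          [PERSON, PERSON, 0]]
print(depth_for_class(depth, labels, PERSON))    # [[800, 820, 0], [810, 0, 0]]
print(class_depth_stats(depth, labels, PERSON))  # (800, 820)
```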


Summary

In this tutorial, we introduced you to the OAK hardware family and discussed its capabilities and numerous applications that can run on the OAK device.

Specifically, we discussed in detail Luxonis’s two flagship products, OAK-1 and OAK-D, and how they gained market traction through a Kickstarter campaign. We also discussed a limitation of OAK-D: its stereo blind spots.

We then delved into the Luxonis hardware offerings, covering OAK-FFC, the OAK USB offerings, OAK PoE hardware, the developer kit, and a comparison of the OAK modules.

Finally, we discussed various computer vision applications that can be run on the OAK hardware.

Citation Information

Sharma, A. “Introduction to OpenCV AI Kit (OAK),” PyImageSearch, P. Chugh, A. R. Gosthipaty, S. Huot, K. Kidriavsteva, R. Raha, and A. Thanki, eds., 2022, https://pyimg.co/dus4w

@incollection{Sharma_2022_OAK1,
  author = {Aditya Sharma},
  title = {Introduction to {OpenCV AI} Kit {(OAK)}},
  booktitle = {PyImageSearch},
  editor = {Puneet Chugh and Aritra Roy Gosthipaty and Susan Huot and Kseniia Kidriavsteva and Ritwik Raha and Abhishek Thanki},
  year = {2022},
  note = {https://pyimg.co/dus4w},
}
