Table of Contents

- Thermal Vision: Fever Detector with Python and OpenCV (starter project)

- Simple Face Detection in Thermal Images

- Configuring Your Development Environment

- Having Problems Configuring Your Development Environment?

- Project Structure

- Face Detection

- Fever Detection

- Real-Time Fever Detection on your Raspberry Pi in 3 Steps

- Summary

Thermal Vision: Fever Detector with Python and OpenCV (starter project)

In this lesson, you will apply the knowledge previously learned in the last two classes to a starter project, including:

- Simple face detection in thermal images

- Fever detection (approximate solution)

- Real-time fever detection on your Raspberry Pi in 3 steps

This tutorial is the 3rd in a 4-part series on Infrared Vision Basics:

- Introduction to Infrared Vision: Near vs. Mid-Far Infrared Images

- Thermal Vision: Measuring your First Temperature from an Image with Python and OpenCV

- Thermal Vision: Fever Detector with Python and OpenCV (starter project) (today’s tutorial)

- Thermal Vision: Night Object Detection with PyTorch and YOLOv5 (real project)

By the end of this lesson, you’ll be able to detect human faces and estimate their temperatures in real time from a thermal image, a video, or a camera stream.

We hope this tutorial inspires you to apply temperature measurement to images in your projects.

Disclaimer: This tutorial attempts to estimate human body temperature using thermal images. It doesn’t mean that COVID-19 or other diseases could be detected with the proposed methods. If you want to go deeper into this topic, we encourage you to follow the U.S. Food and Drug Administration (FDA) guidance, which indicates the required standards.

Simple Face Detection in Thermal Images

Introduction

As you have probably noticed, due to the COVID pandemic, demand for thermal infrared cameras and thermal infrared thermometers for use in mass access control has experienced an enormous increase.

We can find this kind of device, for example, in airports or other mass events, as Figure 1 shows.

As we’ve already learned, thermal cameras allow us to contribute to this real-life issue by applying our knowledge as Computer Vision beginners/practitioners/researchers/experts.

Therefore, let’s start with facial detection in our thermal images!

Computer Vision offers us many ways to detect a face in a standard color visible-light/RGB image: Haar Cascades, Histogram of Oriented Gradients (HOG) + Linear Support Vector Machines (SVM), Deep Learning methods, etc.

There are tons of online tutorials, but here you can find the 4 most popular face detection methods explained in a straightforward manner.

But what happens with a thermal image?

We are going to try to answer this question quickly.

Let’s simplify the issue by classifying the methods above into 2 groups:

- Traditional Machine Learning for face detection: Haar Cascades and Histogram of Oriented Gradients (HOG) + Linear Support Vector Machines (SVM).

- Deep Learning (Convolutional Neural Networks) methods for face detection: Max-Margin Object Detector (MMOD) and Single Shot Detector (SSD).

At first glance, we could consider using any of them.

Nevertheless, looking at Face detection tips, suggestions, and best practices, we should verify if the libraries used, OpenCV and Dlib, implement algorithms trained over thermal images.

Alternatively, we could train our own face detector model, which is clearly out of the scope of our Infrared Vision Basics course.

In addition, we should consider that as a starter project of this tutorial, we want to integrate our solution into a Raspberry Pi. Therefore, we need a fast and small model.

So, for these reasons and to simplify this tutorial, we will use the Haar Cascade method (Figure 2).

Thermal Face Detection with Haar Cascades

Paul Viola and Michael Jones proposed this well-known object detector in Rapid Object Detection using a Boosted Cascade of Simple Features in 2001.

Yes, 2001, “a few” years ago.

And yes! We have tested it over thermal images, and it works without any training on our part!

Configuring Your Development Environment

To follow this guide, you need to have the OpenCV library installed on your system.

Luckily, OpenCV is pip-installable:

$ pip install opencv-contrib-python

If you need help configuring your development environment for OpenCV, we highly recommend that you read our pip install OpenCV guide — it will have you up and running in a matter of minutes.

Having Problems Configuring Your Development Environment?

All that said, are you:

- Short on time?

- Learning on your employer’s administratively locked system?

- Wanting to skip the hassle of fighting with the command line, package managers, and virtual environments?

- Ready to run the code right now on your Windows, macOS, or Linux system?

Then join PyImageSearch University today!

Gain access to Jupyter Notebooks for this tutorial and other PyImageSearch guides that are pre-configured to run on Google Colab’s ecosystem right in your web browser! No installation required.

And best of all, these Jupyter Notebooks will run on Windows, macOS, and Linux!

Project Structure

Start by accessing this tutorial’s “Downloads” section to retrieve the source code and example images.

Let’s start coding.

First, take a look at our project structure:

$ tree --dirsfirst
.
├── fever_detector_image.py
├── fever_detector_video.py
├── fever_detector_camera.py
├── faces_gray16_image.tiff
├── haarcascade_frontalface_alt2.xml
└── gray16_sequence
    ├── gray16_frame_000.tiff
    ├── gray16_frame_001.tiff
    ├── gray16_frame_002.tiff
    ├── ...
    └── gray16_frame_069.tiff

1 directory, 76 files

We’ll apply the Haar Cascade algorithm to a face in a thermal image, then in a thermal video sequence, and finally, using a USB Video Class (UVC) thermal camera. These parts are implemented, respectively, in:

- fever_detector_image.py: Applies the Haar Cascade face detection algorithm to an input image (faces_gray16_image.tiff).

- fever_detector_video.py: Applies the Haar Cascade face detection algorithm to input video frames (gray16_sequence folder).

- fever_detector_camera.py: Applies the Haar Cascade face detection algorithm to the video input stream of a UVC thermal camera.

We are going to follow this structure during the entire class.

The faces_gray16_image.tiff file is our raw gray16 thermal image, shown in Figure 3, left, and captured with the RGMVision ThermalCAM 1 thermal camera.

The gray16_sequence folder contains a sample video sequence.

The haarcascade_frontalface_alt2.xml file is our pre-trained face detector, provided by the developers and maintainers of the OpenCV library (GitHub).

Face Detection

Face Detection in a Thermal Image

Open your fever_detector_image.py file and import the NumPy and OpenCV libraries:

# import the necessary packages
import cv2
import numpy as np

You should have installed the NumPy and OpenCV libraries.

First, we open the thermal gray16 image, faces_gray16_image.tiff:

# open the gray16 image

gray16_image = cv2.imread("faces_gray16_image.tiff", cv2.IMREAD_ANYDEPTH)

The cv2.IMREAD_ANYDEPTH flag allows us to open the gray16 image in 16-bit format.

# convert the gray16 image into a gray8
gray8_image = np.zeros((120,160), dtype=np.uint8)
gray8_image = cv2.normalize(gray16_image, gray8_image, 0, 255, cv2.NORM_MINMAX)
gray8_image = np.uint8(gray8_image)

On Lines 9-11, respectively, we create an empty 160x120 image, normalize the gray16 image from 0-65,535 (16 bits) to 0-255 (8 bits), and ensure that the final image is an 8-bit image.

We use the 160x120 resolution because the faces_gray16_image.tiff gray16 image has this size.
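To make the normalization step more concrete, here is a minimal NumPy-only sketch of a min-max rescale from 16 bits to 8 bits. It assumes that min-max normalization maps the smallest pixel value to 0 and the largest to 255 linearly. The function name `minmax_to_gray8` and the synthetic frame are hypothetical and exist only for illustration, and the rounding may differ slightly from what `cv2.normalize` produces.

```python
import numpy as np


def minmax_to_gray8(gray16):
    """Linearly rescale a 16-bit image to the 0-255 range (illustrative helper)."""
    lo = int(gray16.min())
    hi = int(gray16.max())
    if hi == lo:
        # a flat image has no contrast to stretch, so return all zeros
        return np.zeros(gray16.shape, dtype=np.uint8)
    scaled = (gray16.astype(np.float64) - lo) * 255.0 / (hi - lo)
    return np.round(scaled).astype(np.uint8)


# synthetic 120x160 gray16 frame (made-up values, not real camera data)
rng = np.random.default_rng(0)
gray16_demo = rng.integers(29000, 31500, size=(120, 160), dtype=np.uint16)
gray8_demo = minmax_to_gray8(gray16_demo)
print(gray8_demo.dtype, gray8_demo.min(), gray8_demo.max())
```

Note that the rescale is relative to each frame: the same temperature can land on a different gray8 level in another frame, which is why the gray8 image is only used for display and detection, not for measuring temperature.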

Then we color the gray8 image using our favorite OpenCV colormap* to get the different thermal color palettes (Figure 3, middle):

# color the gray8 image using OpenCV colormaps
gray8_image = cv2.applyColorMap(gray8_image, cv2.COLORMAP_INFERNO)

(*) Please, visit ColorMaps in OpenCV to ensure that your selected colormap is available on your OpenCV version. In this case, we are using OpenCV 4.5.4.

Finally, we implement our Haar Cascade algorithm.

# load the haar cascade face detector

haar_cascade_face = cv2.CascadeClassifier('haarcascade_frontalface_alt2.xml')

On Line 17, the XML haarcascade_frontalface_alt2.xml file with our pre-trained face detector, provided by the developers and maintainers of the OpenCV library (GitHub), is loaded.

# detect faces in the input image using the haar cascade face detector
faces = haar_cascade_face.detectMultiScale(gray8_image, scaleFactor=1.1, minNeighbors=5, minSize=(10, 10),
    flags=cv2.CASCADE_SCALE_IMAGE)

On Line 20, the Haar Cascade detector is applied.

# loop over the bounding boxes
for (x, y, w, h) in faces:
    # draw the rectangles
    cv2.rectangle(gray8_image, (x, y), (x + w, y + h), (255, 255, 255), 1)

On Lines 23-25, we loop over our detected faces and draw the corresponding rectangle.

# show result

cv2.imshow("gray8-face-detected", gray8_image)

cv2.waitKey(0)

Finally, we show the results (Lines 28 and 29).

That’s right! We are detecting faces on thermal infrared images (Figure 3, right).

Figure 3. Left: faces_gray16_image.tiff. Middle: Thermal gray8 image after converting the gray16 faces_gray16_image.tiff and coloring it with the Inferno OpenCV colormap. Right: Haar Cascade face detector applied on the thermal gray8 image.

Face Detection in a Thermal Video Sequence

Assuming we don’t have a thermal camera on hand and want to detect faces on thermal video streams, we follow the same previous steps.

To simplify, we will use the video sequence stored in our gray16_sequence folder. This folder contains TIFF frames instead of a gray16 video file because standard compressed video files tend to lose information. The gray16 frames preserve the temperature value of each pixel, which we then use to estimate face temperatures.

After importing the necessary libraries, we use the fever_detector_video.py file to follow the same processes that we saw in the previous section, looping over each frame:

# import the necessary packages
import numpy as np
import cv2
import os
import argparse

In this case, we also import the argparse and the os libraries.

# construct the argument parse and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-v", "--video", required=True, help="path of the video sequence")

args = vars(ap.parse_args())

If you are familiar with the PyImageSearch tutorials, you already know the argparse Python library. We use it to give additional information (e.g., command line arguments) to a program at runtime. In this case, we’ll use it to specify our thermal video path (Lines 8-10).

# load the haar cascade face detector

haar_cascade_face = cv2.CascadeClassifier("haarcascade_frontalface_alt2.xml")

# create thermal video fps variable (8 fps in this case)

fps = 8

Let’s load the pre-trained Haar Cascade face detector and define the sequence Frames Per Second value (Lines 13-16):

# loop over the thermal video frames to detect faces
for image in sorted(os.listdir(args["video"])):
    # filter .tiff files (gray16 images)
    if image.endswith(".tiff"):
        # define the gray16 frame path
        file_path = os.path.join(args["video"], image)
        # open the gray16 frame
        gray16_frame = cv2.imread(file_path, cv2.IMREAD_ANYDEPTH)
        # convert the gray16 image into a gray8
        gray8_frame = np.zeros((120, 160), dtype=np.uint8)
        gray8_frame = cv2.normalize(gray16_frame, gray8_frame, 0, 255, cv2.NORM_MINMAX)
        gray8_frame = np.uint8(gray8_frame)
        # color the gray8 image using OpenCV colormaps
        gray8_frame = cv2.applyColorMap(gray8_frame, cv2.COLORMAP_INFERNO)
        # detect faces in the input image using the haar cascade face detector
        faces = haar_cascade_face.detectMultiScale(gray8_frame, scaleFactor=1.1, minNeighbors=5, minSize=(10, 10),
            flags=cv2.CASCADE_SCALE_IMAGE)
        # loop over the bounding boxes
        for (x, y, w, h) in faces:
            # draw the rectangles
            cv2.rectangle(gray8_frame, (x, y), (x + w, y + h), (255, 255, 255), 1)
        # show results
        cv2.imshow("gray8-face-detected", gray8_frame)
        # wait 125 ms: RGMVision ThermalCAM1 frames per second = 8
        cv2.waitKey(int((1 / fps) * 1000))

Lines 19-50 include the loop process that detects faces for each frame.

Figure 4 shows face detection in the video scene.
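The frame loop relies on two small ideas: reading the TIFF frames in sorted order and waiting 1/fps between frames. The sketch below isolates both using only the standard library. The helper names `list_tiff_frames` and `frame_delay_ms` are hypothetical and are not part of the tutorial scripts.

```python
import os


def list_tiff_frames(folder):
    """Return the sorted paths of the .tiff files found in a folder."""
    return [
        os.path.join(folder, name)
        for name in sorted(os.listdir(folder))
        if name.endswith(".tiff")
    ]


def frame_delay_ms(fps):
    """Delay between frames in milliseconds, as passed to cv2.waitKey."""
    return int((1 / fps) * 1000)
```

Sorting by file name gives the correct playback order here because the frame numbers are zero-padded (gray16_frame_000.tiff, gray16_frame_001.tiff, and so on). With unpadded numbers, a plain lexicographic sort would place frame 10 before frame 2.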

Fever Detection

Fever Detection in a Thermal Image

Now that we can detect faces in our thermal image, we need to measure the temperature of each pixel to decide whether a person’s temperature is normal.

So, we divide this process into 2 steps:

- Measure the temperature value of our Region of Interest (ROI), that is, a specific area of the detected faces.

- Set a threshold for our ROI temperature value to establish when we detect a fevered person (or a person with a temperature higher than usual).

Let’s continue coding! First, we open our fever_detector_image.py file.

# loop over the bounding boxes to measure their temperature
for (x, y, w, h) in faces:
    # draw the rectangles
    cv2.rectangle(gray8_image, (x, y), (x + w, y + h), (255, 255, 255), 1)
    # define the roi with a circle at the haar cascade origin coordinate
    # haar cascade center for the circle
    haar_cascade_circle_origin = x + w // 2, y + h // 2
    # circle radius
    radius = w // 4
    # get the 8 most significant bits of the gray16 image
    # (we follow this process because we can't extract a circle
    # roi in a gray16 image directly)
    gray16_high_byte = (np.right_shift(gray16_image, 8)).astype('uint8')
    # get the 8 less significant bits of the gray16 image
    # (we follow this process because we can't extract a circle
    # roi in a gray16 image directly)
    gray16_low_byte = (np.left_shift(gray16_image, 8) / 256).astype('uint16')
    # apply the mask to our 8 most significant bits
    mask = np.zeros_like(gray16_high_byte)
    cv2.circle(mask, haar_cascade_circle_origin, radius, (255, 255, 255), -1)
    gray16_high_byte = np.bitwise_and(gray16_high_byte, mask)
    # apply the mask to our 8 less significant bits
    mask = np.zeros_like(gray16_low_byte)
    cv2.circle(mask, haar_cascade_circle_origin, radius, (255, 255, 255), -1)
    gray16_low_byte = np.bitwise_and(gray16_low_byte, mask)
    # create/recompose our gray16 roi
    gray16_roi = np.array(gray16_high_byte, dtype=np.uint16)
    gray16_roi = gray16_roi * 256
    gray16_roi = gray16_roi | gray16_low_byte
    # estimate the face temperature by obtaining the higher value
    higher_temperature = np.amax(gray16_roi)
    # calculate the temperature
    higher_temperature = (higher_temperature / 100) - 273.15
    # higher_temperature = (higher_temperature / 100) * 9 / 5 - 459.67
    # write temperature value in gray8
    if higher_temperature < fever_temperature_threshold:
        # white text: normal temperature
        cv2.putText(gray8_image, "{0:.1f} Celsius".format(higher_temperature), (x - 10, y - 10), cv2.FONT_HERSHEY_PLAIN,
            1, (255, 255, 255), 1)
    else:
        # - red text + red circle: fever temperature
        cv2.putText(gray8_image, "{0:.1f} Celsius".format(higher_temperature), (x - 10, y - 10), cv2.FONT_HERSHEY_PLAIN,
            1, (0, 0, 255), 2)
        cv2.circle(gray8_image, haar_cascade_circle_origin, radius, (0, 0, 255), 2)

Again, we loop over the detected faces on Lines 36-90. This time we’ll define our ROI.

The current ISO standards establish that the inner eye canthus is the best place to determine the body temperature using a thermal face image.

For this reason and to simplify the process, we will define an ROI with a circle shape around the center point of our Haar Cascade face detection.

# draw the rectangles
cv2.rectangle(gray8_image, (x, y), (x + w, y + h), (255, 255, 255), 1)

For this purpose, first, we draw a Haar Cascade rectangle again for each detected face (Line 39).

# define the roi with a circle at the haar cascade origin coordinate
# haar cascade center for the circle
haar_cascade_circle_origin = x + w // 2, y + h // 2
# circle radius
radius = w // 4

Then, we define our ROI circle by establishing the center of the face, that is, the center of the circle (Line 44) and the circle’s radius (Line 46).
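As a quick worked example of this geometry, the sketch below computes the circle center and radius for one bounding box. The helper name `circle_roi_from_box` and the sample box values are hypothetical. The arithmetic is the same integer division used in the script.

```python
def circle_roi_from_box(x, y, w, h):
    """Return ((cx, cy), radius) for a face bounding box (illustrative helper)."""
    center = (x + w // 2, y + h // 2)
    radius = w // 4
    return center, radius


# a made-up 20x24 detection whose top-left corner is at (40, 30)
center, radius = circle_roi_from_box(40, 30, 20, 24)
print(center, radius)
```

Because the radius is a quarter of the box width, the circle covers the central half of the face width, which keeps most of the background and hair out of the measurement.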

As OpenCV doesn’t allow us to draw a circle in a 16-bit image and we need the gray16 information to measure the temperature, we will use the following trick.

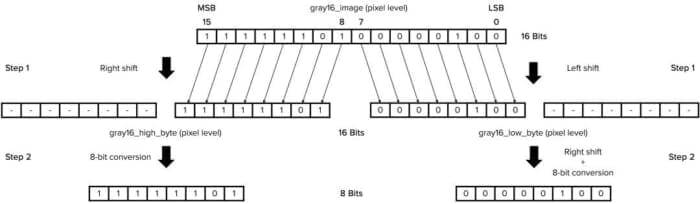

First, we split each pixel value of our 16-bit thermal image into 2 groups of 8 bits (2 bytes), as Figure 5 shows.

# get the 8 most significant bits of the gray16 image

# (we follow this process because we can't extract a circle

# roi in a gray16 image directly)

gray16_high_byte = (np.right_shift(gray16_image, 8)).astype('uint8')

We right shift the 16 bits on Line 51, discarding the 8 Least Significant Bits (LSBs), and remove the 8 new leading zeros by converting the image into a gray8.

# get the 8 less significant bits of the gray16 image

# (we follow this process because we can't extract a circle

# roi in a gray16 image directly)

gray16_low_byte = (np.left_shift(gray16_image, 8) / 256).astype('uint16')

We left shift the 16 bits on Line 56, which discards the 8 Most Significant Bits (MSBs), and then divide the values by 256 to move the remaining 8 bits back into the low byte.

# apply the mask to our 8 most significant bits
mask = np.zeros_like(gray16_high_byte)
cv2.circle(mask, haar_cascade_circle_origin, radius, (255, 255, 255), -1)
gray16_high_byte = np.bitwise_and(gray16_high_byte, mask)
# apply the mask to our 8 less significant bits
mask = np.zeros_like(gray16_low_byte)
cv2.circle(mask, haar_cascade_circle_origin, radius, (255, 255, 255), -1)
gray16_low_byte = np.bitwise_and(gray16_low_byte, mask)

Then, we separately apply the mask and the bitwise operation to our 2 split bytes to isolate our ROI that contains the temperature information (Lines 59-66).

If you are not familiar with these operations, we encourage you to follow the OpenCV Bitwise AND, OR, XOR, and NOT.

# create/recompose our gray16 roi
gray16_roi = np.array(gray16_high_byte, dtype=np.uint16)
gray16_roi = gray16_roi * 256
gray16_roi = gray16_roi | gray16_low_byte

On Lines 69-71, we recompose the image obtaining our 16-bit circle ROI with the temperature value of the inner eye canthus area.
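The split, mask, and recompose sequence can be checked on a tiny synthetic array. The following is a sketch under stated assumptions, not the tutorial's code: it extracts the low byte with `& 0xFF` instead of the left shift and division, and it builds the circle with a NumPy boolean mask instead of `cv2.circle`, so that it runs without OpenCV. All pixel values are made up.

```python
import numpy as np

# synthetic 16-bit frame (hypothetical values, not real camera data)
gray16 = np.array([[30000, 30500, 31015],
                   [29800, 31000, 30250],
                   [30100, 29950, 30700]], dtype=np.uint16)

# split every pixel into its two bytes
high_byte = (gray16 >> 8).astype(np.uint8)
low_byte = (gray16 & 0xFF).astype(np.uint8)

# boolean circle mask built with NumPy (swapped in for cv2.circle)
center_x, center_y, radius = 1, 1, 1
rows, cols = np.ogrid[:gray16.shape[0], :gray16.shape[1]]
inside = (cols - center_x) ** 2 + (rows - center_y) ** 2 <= radius ** 2

# keep only the bytes that fall inside the circle
high_byte = np.where(inside, high_byte, 0).astype(np.uint8)
low_byte = np.where(inside, low_byte, 0).astype(np.uint8)

# recompose the 16-bit ROI: high byte in the upper 8 bits, low byte in the lower 8
gray16_roi = (high_byte.astype(np.uint16) << 8) | low_byte.astype(np.uint16)
print(gray16_roi.max())
```

Inside the circle, the recomposed values match the original gray16 pixels exactly, and everything outside is zero. The hottest pixel of the full frame sits in a corner outside the circle, so it does not affect the ROI maximum.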

# estimate the face temperature by obtaining the higher value
higher_temperature = np.amax(gray16_roi)
# calculate the temperature
higher_temperature = (higher_temperature / 100) - 273.15
# higher_temperature = (higher_temperature / 100) * 9 / 5 - 459.67

Finally, to easily determine if the detected face has a temperature above the normal range, i.e., more than 99-100.5 °F (around 37-38 °C), we calculate the maximum temperature value of our circle ROI on Lines 74-78.

# fever temperature threshold in Celsius or Fahrenheit
fever_temperature_threshold = 37.0
# fever_temperature_threshold = 99.0

Then, we show a fever red alert text (Line 88) if this value is higher (Line 81) than our threshold (fever_temperature_threshold, Lines 32 and 33). Again we should remember the above-mentioned Disclaimer.
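The conversion and threshold logic can be tried in isolation. The sketch below assumes, as the formulas in the script imply, that a raw gray16 value is a temperature in hundredths of a kelvin. The function names are hypothetical. The one thing to watch is that the threshold must be expressed in the same unit as the converted temperature.

```python
def raw_to_celsius(raw_value):
    """Convert a raw gray16 value (assumed hundredths of a kelvin) to Celsius."""
    return (raw_value / 100) - 273.15


def raw_to_fahrenheit(raw_value):
    """Convert a raw gray16 value (assumed hundredths of a kelvin) to Fahrenheit."""
    return (raw_value / 100) * 9 / 5 - 459.67


def is_fever(temperature, threshold):
    """True when the temperature is not below the threshold (same unit for both)."""
    return temperature >= threshold


raw = 31015  # made-up raw reading
print(round(raw_to_celsius(raw), 1), round(raw_to_fahrenheit(raw), 1))
print(is_fever(raw_to_celsius(raw), 37.5))
```

Pairing `raw_to_celsius` with a 37.0 threshold or `raw_to_fahrenheit` with a 99.0 threshold keeps the comparison meaningful. Mixing a Celsius reading with a Fahrenheit threshold would never raise an alert.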

# write temperature value in gray8
if higher_temperature < fever_temperature_threshold:
    # white text: normal temperature
    cv2.putText(gray8_image, "{0:.1f} Celsius".format(higher_temperature), (x - 10, y - 10), cv2.FONT_HERSHEY_PLAIN,
        1, (255, 255, 255), 1)
else:
    # - red text + red circle: fever temperature
    cv2.putText(gray8_image, "{0:.1f} Celsius".format(higher_temperature), (x - 10, y - 10), cv2.FONT_HERSHEY_PLAIN,
        1, (0, 0, 255), 2)
    cv2.circle(gray8_image, haar_cascade_circle_origin, radius, (0, 0, 255), 2)

# show result

cv2.imshow("gray8-face-detected", gray8_image)

cv2.waitKey(0)

And that’s it: we have our fever detector using Computer Vision!

Figure 6 shows the face temperature value in the thermal gray8 image.

Fever Detection in a Thermal Video Sequence

Again, as in the previous tutorial section, we follow the same steps as in a “static” image. We loop over each frame of our video sequence, measuring and showing the maximum temperature value of the ROI of each detected face.

Figure 7 shows the results.

Real-Time Fever Detection on your Raspberry Pi in 3 Steps

In this last section of the class, we will apply everything we have learned so far in this course.

We will implement our easy fever detector process using OpenCV and Python on our Raspberry Pi and using one of the best price-performance thermal cameras, the RGMVision ThermalCAM 1.

Whether you have this camera or any other UVC thermal camera, you’ll have fun implementing a real-time fever detector. If you don’t have a thermal camera on hand, you can also run everything shown so far in this class on your Raspberry Pi.

You’ll implement this project successfully just by following these 3 CPP steps:

- Code

- Plug

- Play

Let’s code!

Open fever_detector_camera.py and import the NumPy and OpenCV libraries:

# import the necessary packages
import cv2
import numpy as np

Of course, your Raspberry Pi should have both libraries installed. If not, please look at Install OpenCV 4 on Raspberry Pi 4 and Raspbian Buster.

Set up the thermal camera index and resolution, in our case, 160x120:

# set up the thermal camera index (thermal_camera = cv2.VideoCapture(0, cv2.CAP_DSHOW) on Windows OS)
thermal_camera = cv2.VideoCapture(0)
# set up the thermal camera resolution
thermal_camera.set(cv2.CAP_PROP_FRAME_WIDTH, 160)
thermal_camera.set(cv2.CAP_PROP_FRAME_HEIGHT, 120)

On Line 6, you should select your camera ID. If you are using Windows OS, be sure to specify your backend video library, for example, Direct Show (DSHOW): thermal_camera = cv2.VideoCapture(0, cv2.CAP_DSHOW).

For more information, please visit Video I/O with OpenCV Overview.

Set up the thermal camera to work as a gray16 source and to receive the data in raw format:

# set up the thermal camera to get the gray16 stream and raw data

thermal_camera.set(cv2.CAP_PROP_FOURCC, cv2.VideoWriter.fourcc('Y','1','6',' '))

thermal_camera.set(cv2.CAP_PROP_CONVERT_RGB, 0)

Line 14 prevents the RGB conversion.
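For readers curious about what a FOURCC code is, the sketch below packs four characters into a single 32-bit integer, with the first character in the lowest byte. This is the standard FOURCC packing convention. The `fourcc` helper is a hypothetical stand-in written for illustration, and in the script OpenCV does this packing for us.

```python
def fourcc(c1, c2, c3, c4):
    """Pack four characters into a 32-bit FOURCC integer, first character lowest."""
    return (
        (ord(c1) & 255)
        | ((ord(c2) & 255) << 8)
        | ((ord(c3) & 255) << 16)
        | ((ord(c4) & 255) << 24)
    )


# the 16-bit grayscale format requested from the thermal camera
y16 = fourcc("Y", "1", "6", " ")
print(hex(y16))
```

The trailing space is part of the code: a FOURCC always has exactly four characters, so the three-character name Y16 is padded with a space.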

# load the haar cascade face detector

haar_cascade_face = cv2.CascadeClassifier("haarcascade_frontalface_alt2.xml")

# fever temperature threshold in Celsius or Fahrenheit

fever_temperature_threshold = 37.0

#fever_temperature_threshold = 99.0

Let’s load the pre-trained Haar Cascade face detector and define our fever threshold (fever_temperature_threshold, Lines 20 and 21).

# loop over the thermal camera frames
while True:
    # grab the frame from the thermal camera stream
    (grabbed, gray16_frame) = thermal_camera.read()
    # stop if no frame could be read from the camera
    if not grabbed:
        break
    # convert the gray16 image into a gray8
    gray8_frame = np.zeros((120, 160), dtype=np.uint8)
    gray8_frame = cv2.normalize(gray16_frame, gray8_frame, 0, 255, cv2.NORM_MINMAX)
    gray8_frame = np.uint8(gray8_frame)
    # color the gray8 image using OpenCV colormaps
    gray8_frame = cv2.applyColorMap(gray8_frame, cv2.COLORMAP_INFERNO)
    # detect faces in the input frame using the haar cascade face detector
    faces = haar_cascade_face.detectMultiScale(gray8_frame, scaleFactor=1.1, minNeighbors=5, minSize=(10, 10),
        flags=cv2.CASCADE_SCALE_IMAGE)
    # loop over the bounding boxes to measure their temperature
    for (x, y, w, h) in faces:
        # draw the rectangles
        cv2.rectangle(gray8_frame, (x, y), (x + w, y + h), (255, 255, 255), 1)
        # define the roi with a circle at the haar cascade origin coordinate
        # haar cascade center for the circle
        haar_cascade_circle_origin = x + w // 2, y + h // 2
        # circle radius
        radius = w // 4
        # get the 8 most significant bits of the gray16 image
        # (we follow this process because we can't extract a circle
        # roi in a gray16 image directly)
        gray16_high_byte = (np.right_shift(gray16_frame, 8)).astype('uint8')
        # get the 8 less significant bits of the gray16 image
        # (we follow this process because we can't extract a circle
        # roi in a gray16 image directly)
        gray16_low_byte = (np.left_shift(gray16_frame, 8) / 256).astype('uint16')
        # apply the mask to our 8 most significant bits
        mask = np.zeros_like(gray16_high_byte)
        cv2.circle(mask, haar_cascade_circle_origin, radius, (255, 255, 255), -1)
        gray16_high_byte = np.bitwise_and(gray16_high_byte, mask)
        # apply the mask to our 8 less significant bits
        mask = np.zeros_like(gray16_low_byte)
        cv2.circle(mask, haar_cascade_circle_origin, radius, (255, 255, 255), -1)
        gray16_low_byte = np.bitwise_and(gray16_low_byte, mask)
        # create/recompose our gray16 roi
        gray16_roi = np.array(gray16_high_byte, dtype=np.uint16)
        gray16_roi = gray16_roi * 256
        gray16_roi = gray16_roi | gray16_low_byte
        # estimate the face temperature by obtaining the higher value
        higher_temperature = np.amax(gray16_roi)
        # calculate the temperature
        higher_temperature = (higher_temperature / 100) - 273.15
        # higher_temperature = (higher_temperature / 100) * 9 / 5 - 459.67
        # write temperature value in gray8
        if higher_temperature < fever_temperature_threshold:
            # white text: normal temperature
            cv2.putText(gray8_frame, "{0:.1f} Celsius".format(higher_temperature), (x, y - 10), cv2.FONT_HERSHEY_PLAIN,
                1, (255, 255, 255), 1)
        else:
            # - red text + red circle: fever temperature
            cv2.putText(gray8_frame, "{0:.1f} Celsius".format(higher_temperature), (x, y - 10), cv2.FONT_HERSHEY_PLAIN,
                1, (0, 0, 255), 2)
            cv2.circle(gray8_frame, haar_cascade_circle_origin, radius, (0, 0, 255), 2)
    # show the temperature results
    cv2.imshow("final", gray8_frame)
    cv2.waitKey(1)

# do a bit of cleanup
thermal_camera.release()
cv2.destroyAllWindows()

Loop over the thermal camera frames by detecting the faces using the Haar Cascade algorithm, isolating the circle ROI, and thresholding the maximum temperature value as in the previous sections (Lines 24-100).

Let’s plug your thermal camera into 1 of your 4 Raspberry Pi USB sockets (Figure 8)!

And finally, let’s play/run the code via command or using your favorite Python IDE (Figure 9)!

And that’s all! Enjoy your project!

Summary

In this tutorial, we learned how to implement an easy fever detector as a thermal starter project.

First, we discovered how to detect faces in a thermal image, video, or camera stream using the Haar Cascade Machine Learning algorithm.

Then, we isolated a Region of Interest (ROI) in the detected faces using a circle shape mask without losing the gray16 temperature information.

After that, we thresholded the maximum temperature value of the defined ROI to determine if the detected face belongs to a person who has a temperature above the normal range.

Finally, we implemented a real-time fever detector in 3 steps, using a UVC thermal camera and your Raspberry Pi.

In the following tutorial, we will dive into Deep Learning applied to Thermal Vision, and you will learn to detect different objects even in complete darkness at night.

See you there!

Citation Information

Garcia-Martin, R. “Thermal Vision: Fever Detector with Python and OpenCV (starter project),” PyImageSearch, P. Chugh, A. R. Gosthipaty, S. Huot, K. Kidriavsteva, and R. Raha, eds., 2022, https://pyimg.co/6nxs0

@incollection{Garcia-Martin_2022_Fever,

author = {Raul Garcia-Martin},

title = {Thermal Vision: Fever Detector with {P}ython and {OpenCV} (starter project)},

booktitle = {PyImageSearch},

editor = {Puneet Chugh and Aritra Roy Gosthipaty and Susan Huot and Kseniia Kidriavsteva and Ritwik Raha},

year = {2022},

note = {https://pyimg.co/6nxs0},

}
