Now that we’ve trained our model and serialized it, we need to load it from disk. As a practical application of model serialization, I’ll be demonstrating how to classify individual images from the Animals dataset and then display the classified images to our screen.

To learn how to load a trained Keras/TensorFlow model from disk, just keep reading.

Configuring your development environment

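To follow this guide, you need to have the OpenCV library installed on your system. The script below also imports TensorFlow (for Keras) and imutils, so make sure those are installed as well.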

Luckily, OpenCV is pip-installable:

```shell
$ pip install opencv-contrib-python
```

If you need help configuring your development environment for OpenCV, I highly recommend that you read my pip install OpenCV guide — it will have you up and running in a matter of minutes.


Load a trained Keras/TensorFlow model from disk

Open a new file, name it shallownet_load.py, and we’ll get our hands dirty:

```python
# import the necessary packages
from pyimagesearch.preprocessing import ImageToArrayPreprocessor
from pyimagesearch.preprocessing import SimplePreprocessor
from pyimagesearch.datasets import SimpleDatasetLoader
from tensorflow.keras.models import load_model
from imutils import paths
import numpy as np
import argparse
import cv2
```

We start by importing our required Python packages. The three pyimagesearch imports bring in the classes used to construct our standard pipeline: resizing an image to a fixed size (SimplePreprocessor), converting it to a Keras-compatible array (ImageToArrayPreprocessor), and then using these preprocessors to load an entire image dataset into memory (SimpleDatasetLoader).
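The idea behind this pipeline is that each preprocessor does one job and the loader applies them in order. As a rough illustration, here is a NumPy-only sketch of that pattern. ResizeNearest, EnsureChannelsLast, and load_images are hypothetical names written for this example, not the pyimagesearch classes, whose internals (for example, how they resize) may differ; to stay self-contained, load_images also takes in-memory arrays rather than file paths.

```python
import numpy as np


class ResizeNearest:
    """Hypothetical stand-in for a fixed-size resizing preprocessor."""

    def __init__(self, width, height):
        self.width = width
        self.height = height

    def preprocess(self, image):
        # pick the nearest source row/column for every target row/column
        rows = np.arange(self.height) * image.shape[0] // self.height
        cols = np.arange(self.width) * image.shape[1] // self.width
        return image[rows][:, cols]


class EnsureChannelsLast:
    """Hypothetical stand-in: guarantee a (height, width, channels) array."""

    def preprocess(self, image):
        # grayscale images get an explicit single-channel axis
        if image.ndim == 2:
            image = image[:, :, np.newaxis]
        return image


def load_images(images, preprocessors):
    # apply every preprocessor, in order, to every image, then stack them
    processed = []
    for image in images:
        for p in preprocessors:
            image = p.preprocess(image)
        processed.append(image)
    return np.array(processed)


# three random "images" of different sizes, all mapped to 32x32x3
rng = np.random.default_rng(0)
raw = [rng.integers(0, 256, size=(h, w, 3), dtype=np.uint8)
       for (h, w) in [(120, 80), (64, 64), (300, 200)]]
batch = load_images(raw, [ResizeNearest(32, 32), EnsureChannelsLast()])
print(batch.shape)  # (3, 32, 32, 3)
```

Keeping each step in its own object means the same list of preprocessors can be reused unchanged at training and inference time.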

The function that actually loads our trained model from disk is load_model, imported from tensorflow.keras.models. It accepts the path to our trained network (an HDF5 file), reads the architecture, weights, and optimizer state stored in that file, and returns a model we can use to (1) continue training or (2) classify new images.

We also import our OpenCV bindings (cv2) so we can draw the classification label on our images and display them to our screen.

Next, let’s parse our command line arguments:

```python
# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-d", "--dataset", required=True,
    help="path to input dataset")
ap.add_argument("-m", "--model", required=True,
    help="path to pre-trained model")
args = vars(ap.parse_args())

# initialize the class labels
classLabels = ["cat", "dog", "panda"]
```

Just like in shallownet_save.py, we’ll need two command line arguments:

- --dataset: The path to the directory that contains the images we wish to classify (in this case, the Animals dataset).

- --model: The path to the trained network serialized on disk.

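We then initialize a list of class labels for the Animals dataset. Since the network predicts an integer index for each image, this list must be in the same order as the labels the network was trained with.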
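Because both flags are required, a quick way to see how the parser behaves without touching the command line is to pass an explicit argument list to parse_args. The paths below are simply the ones used in the example command later in this tutorial; vars() turns the resulting Namespace into the dictionary the script indexes with args["dataset"] and args["model"].

```python
import argparse

# same two required arguments as shallownet_load.py
ap = argparse.ArgumentParser()
ap.add_argument("-d", "--dataset", required=True,
    help="path to input dataset")
ap.add_argument("-m", "--model", required=True,
    help="path to pre-trained model")

# parse an explicit list instead of sys.argv (handy for quick checks);
# the short "-m" flag fills the same "model" key as "--model"
args = vars(ap.parse_args(["--dataset", "../datasets/animals",
    "-m", "shallownet_weights.hdf5"]))
print(args)
# {'dataset': '../datasets/animals', 'model': 'shallownet_weights.hdf5'}
```

Omitting either flag makes argparse print a usage message and exit, which is why the script never has to check for missing paths itself.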

Our next code block handles randomly sampling ten image paths from the Animals dataset for classification:

```python
# grab the list of images in the dataset then randomly sample
# indexes into the image paths list
print("[INFO] sampling images...")
imagePaths = np.array(list(paths.list_images(args["dataset"])))
idxs = np.random.randint(0, len(imagePaths), size=(10,))
imagePaths = imagePaths[idxs]
```
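One detail worth knowing: np.random.randint draws each index independently (sampling with replacement), so the same image can occasionally appear twice among the ten. If you would rather see ten distinct images, np.random.choice with replace=False is one alternative. The sketch below uses a hypothetical list of file names in place of the real paths.list_images output.

```python
import numpy as np

# hypothetical stand-in for the paths returned by paths.list_images
imagePaths = np.array(["animals/img_{:04d}.jpg".format(i) for i in range(3000)])

# np.random.randint samples WITH replacement, so an index may repeat
idxsWithRepl = np.random.randint(0, len(imagePaths), size=(10,))

# np.random.choice with replace=False samples WITHOUT replacement
idxs = np.random.choice(len(imagePaths), size=10, replace=False)
sample = imagePaths[idxs]
print(len(sample), len(set(sample)))  # 10 10
```

Note that replace=False raises a ValueError if the dataset contains fewer images than the requested sample size.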

Each of these ten images will need to be preprocessed, so let’s initialize our preprocessors and load the ten images from disk:

```python
# initialize the image preprocessors
sp = SimplePreprocessor(32, 32)
iap = ImageToArrayPreprocessor()

# load the dataset from disk then scale the raw pixel intensities
# to the range [0, 1]
sdl = SimpleDatasetLoader(preprocessors=[sp, iap])
(data, labels) = sdl.load(imagePaths)
data = data.astype("float") / 255.0
```

Notice how we preprocess our images in exactly the same manner as we did during training. Skipping this step can lead to incorrect classifications, since the network will be presented with patterns it was never trained to recognize. Always take special care to ensure your testing images are preprocessed in the same way as your training images.
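One simple way to enforce this is to route both training and inference through a single function, so the scaling cannot drift between the two scripts. The sketch below is hypothetical (prepare_batch is not part of the pyimagesearch package) and covers only the [0, 1] scaling step; it also shows how far outside the training range the inputs land if the division by 255 is forgotten at test time.

```python
import numpy as np


def prepare_batch(images):
    """Hypothetical shared helper: uint8 images -> float array in [0, 1]."""
    data = np.asarray(images)
    return data.astype("float") / 255.0


rng = np.random.default_rng(0)
# stand-in for ten 32x32 RGB images already resized by the preprocessors
raw = rng.integers(0, 256, size=(10, 32, 32, 3), dtype=np.uint8)

trainData = prepare_batch(raw)   # what the network saw during training
testData = prepare_batch(raw)    # same function, same scaling at test time
unscaled = raw.astype("float")   # forgetting "/ 255.0" at test time

print(trainData.max() <= 1.0, unscaled.max() <= 1.0)  # True False
```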

Next, let’s load our saved network from disk:

```python
# load the pre-trained network
print("[INFO] loading pre-trained network...")
model = load_model(args["model"])
```

Loading our serialized network is as simple as calling load_model and supplying the path to the model's HDF5 file on disk.
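Before handing a path to load_model, it can help to fail early with a clear message if the file is missing or is not HDF5 at all. The sketch below is a hypothetical helper (looks_like_hdf5 is not part of Keras or this tutorial's code): it checks that the file exists and begins with the 8-byte HDF5 format signature. It assumes the file has no HDF5 user block (which would move the signature to a later offset), and it cannot tell whether the file actually contains a Keras model.

```python
import os
import tempfile

# the 8-byte signature defined by the HDF5 file format
HDF5_SIGNATURE = b"\x89HDF\r\n\x1a\n"


def looks_like_hdf5(path):
    """Hypothetical pre-flight check to run before load_model(path)."""
    if not os.path.isfile(path):
        return False
    with open(path, "rb") as f:
        # assumes no user block, so the signature sits at offset 0
        return f.read(len(HDF5_SIGNATURE)) == HDF5_SIGNATURE


# demo with throwaway files instead of a real serialized model
with tempfile.TemporaryDirectory() as tmp:
    fakeModel = os.path.join(tmp, "shallownet_weights.hdf5")
    notModel = os.path.join(tmp, "notes.txt")
    with open(fakeModel, "wb") as f:
        f.write(HDF5_SIGNATURE + b"\x00" * 8)
    with open(notModel, "w") as f:
        f.write("not a model")
    results = (looks_like_hdf5(fakeModel), looks_like_hdf5(notModel),
               looks_like_hdf5(os.path.join(tmp, "missing.hdf5")))

print(results)  # (True, False, False)
```

In shallownet_load.py, such a check would sit just before the load_model call, for example raising SystemExit with a readable message when looks_like_hdf5(args["model"]) returns False.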

Once the model is loaded, we can make predictions on our ten images:

```python
# make predictions on the images
print("[INFO] predicting...")
preds = model.predict(data, batch_size=32).argmax(axis=1)
```

Keep in mind that the .predict method of model returns a NumPy array with one row per image in data and one column per class label, where each entry is the predicted probability for that class. Taking the argmax along axis=1 finds, for each image, the index of the class label with the largest probability.
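To see what argmax(axis=1) does, here is a small NumPy example with a made-up probability matrix standing in for the model.predict output on three images (the numbers are invented, not ShallowNet results). Each row sums to 1, and argmax along axis=1 returns the column index of the largest value in each row, which then indexes into classLabels.

```python
import numpy as np

classLabels = ["cat", "dog", "panda"]

# hypothetical predict() output: one row per image, one column per class
probs = np.array([
    [0.10, 0.75, 0.15],   # image 0: "dog" has the highest probability
    [0.60, 0.30, 0.10],   # image 1: "cat" has the highest probability
    [0.05, 0.15, 0.80],   # image 2: "panda" has the highest probability
])

preds = probs.argmax(axis=1)
print(preds)                            # [1 0 2]
print([classLabels[p] for p in preds])  # ['dog', 'cat', 'panda']
```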

Now that we have our predictions, let’s visualize the results:

```python
# loop over the sample images
for (i, imagePath) in enumerate(imagePaths):
    # load the example image, draw the prediction, and display it
    # to our screen
    image = cv2.imread(imagePath)
    cv2.putText(image, "Label: {}".format(classLabels[preds[i]]),
        (10, 30), cv2.FONT_HERSHEY_SIMPLEX, 0.7, (0, 255, 0), 2)
    cv2.imshow("Image", image)
    cv2.waitKey(0)
```

We start by looping over our ten randomly sampled image paths. For each image, we load it from disk with cv2.imread and draw the class label prediction on the image itself with cv2.putText. The output image is then displayed to our screen with cv2.imshow, and cv2.waitKey(0) pauses until a key is pressed before moving on to the next image.

To give shallownet_load.py a try, execute the following command:

```shell
$ python shallownet_load.py --dataset ../datasets/animals \
    --model shallownet_weights.hdf5
[INFO] sampling images...
[INFO] loading pre-trained network...
[INFO] predicting...
```

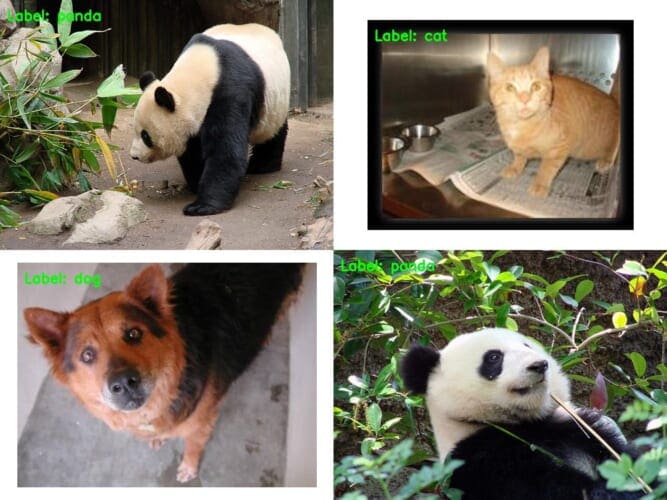

Based on the output, you can see that our images have been sampled, the pre-trained ShallowNet weights have been loaded from disk, and ShallowNet has made predictions on our images. Figure 2 shows a sample of these predictions drawn on the images themselves.

Keep in mind that ShallowNet is obtaining ≈70% classification accuracy on the Animals dataset, meaning that nearly one in every three example images will be classified incorrectly. Furthermore, based on the classification_report from an earlier tutorial, we know that the network still struggles to consistently discriminate between dogs and cats. As we continue our journey applying deep learning to computer vision classification tasks, we’ll look at methods to help us boost our classification accuracy.
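The "one in three" figure follows directly from the accuracy: at 70% accuracy the error rate is 1 - 0.70 = 0.30, or roughly 3 mistakes per 10 images. Since sdl.load also returns labels for the sampled images, you could score the ten predictions yourself. The sketch below does this with NumPy on hypothetical arrays (both the ground truth and the predictions are invented for illustration, and labels is assumed to hold class-name strings), and builds a small confusion matrix, which is where a cat/dog mix-up would show up.

```python
import numpy as np

classLabels = ["cat", "dog", "panda"]

# hypothetical ground-truth names (assumed to be class-name strings)
labels = np.array(["cat", "dog", "panda", "dog", "cat",
                   "panda", "dog", "cat", "panda", "dog"])
# hypothetical predicted indexes, as produced by argmax(axis=1)
preds = np.array([0, 0, 2, 1, 1, 2, 1, 0, 2, 0])

# map the string labels to indexes so they can be compared to preds
truth = np.array([classLabels.index(name) for name in labels])
accuracy = (preds == truth).mean()
print("accuracy: {:.0%}".format(accuracy))  # accuracy: 70%

# rows = true class, columns = predicted class
confusion = np.zeros((len(classLabels), len(classLabels)), dtype=int)
for (t, p) in zip(truth, preds):
    confusion[t, p] += 1
print(confusion)
```

In this toy example every off-diagonal count sits in the cat and dog rows and columns, which is the kind of pattern a classification_report would summarize per class.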


Summary

In this tutorial, we learned how to:

- Train a network.

- Serialize the network weights and optimizer state to disk.

- Load the trained network and classify images.

In a separate tutorial, we’ll discover how we can save our model’s weights to disk after every epoch, allowing us to “checkpoint” our network and choose the best performing one. Saving model weights during the actual training process also enables us to restart training from a specific point if our network starts exhibiting signs of overfitting.


Comment section

Hey, Adrian Rosebrock here, author and creator of PyImageSearch. While I love hearing from readers, a couple years ago I made the tough decision to no longer offer 1:1 help over blog post comments.

At the time I was receiving 200+ emails per day and another 100+ blog post comments. I simply did not have the time to moderate and respond to them all, and the sheer volume of requests was taking a toll on me.

Instead, my goal is to do the most good for the computer vision, deep learning, and OpenCV community at large by focusing my time on authoring high-quality blog posts, tutorials, and books/courses.

If you need help learning computer vision and deep learning, I suggest you refer to my full catalog of books and courses — they have helped tens of thousands of developers, students, and researchers just like yourself learn Computer Vision, Deep Learning, and OpenCV.
