In this tutorial, you will learn how to use the GridSearchCV class to do grid search hyperparameter tuning using the scikit-learn machine learning library. We’ll apply the grid search to a computer vision project.

This blog post is part two in our four-part series on hyperparameter tuning:

- Introduction to hyperparameter tuning with scikit-learn and Python (last week’s tutorial)

- Grid search hyperparameter tuning with scikit-learn’s GridSearchCV class (today’s post)

- Hyperparameter tuning for Deep Learning with scikit-learn, Keras, and TensorFlow (next week’s post)

- Easy Hyperparameter Tuning with Keras Tuner and TensorFlow (tutorial two weeks from now)

Last week we learned how to tune hyperparameters to a Support Vector Machine (SVM) trained to predict the age of a marine snail. This was a good introduction to the concept of hyperparameter tuning, but it didn’t demonstrate how to apply hyperparameter tuning to a computer vision project.

Today, we’ll build a computer vision system to automatically recognize the texture of an object in an image. We will use hyperparameter tuning to find the optimal set of hyperparameters that yields the highest accuracy.

You can use the code included with this post as a starting point when you need to tune hyperparameters in your own projects.

To learn how to grid search hyperparameters with GridSearchCV and scikit-learn, just keep reading.

Grid search hyperparameter tuning with scikit-learn’s GridSearchCV

In the first part of this tutorial, we’ll discuss:

- What a grid search is

- How a grid search can be applied to hyperparameter tuning

- How the scikit-learn machine learning library implements grid search through the GridSearchCV class

From there, we’ll configure our development environment and review our project directory structure.

I’ll then show you how to use computer vision, machine learning, and grid search hyperparameter tuning to tune the parameters to a texture recognition pipeline, resulting in a system with near 100% texture recognition accuracy.

By the end of this guide, you’ll have a strong understanding of how to apply a grid search to the hyperparameters of a computer vision project.

What is a hyperparameter grid search?

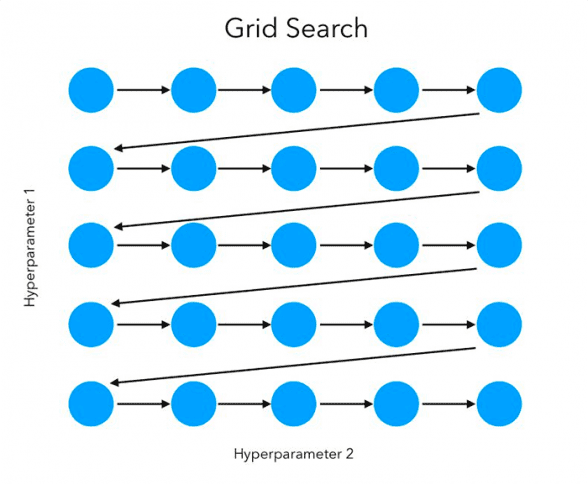

A grid search allows us to exhaustively test all possible hyperparameter configurations that we are interested in tuning.

Later in this tutorial, we’ll tune the hyperparameters of a Support Vector Machine (SVM) to obtain high accuracy. The hyperparameters to an SVM include:

- Kernel choice: linear, polynomial, radial basis function

- Strictness (C): Typical values are in the range of 0.0001 to 1000

- Kernel-specific parameters: degree (for polynomial) and gamma (RBF)

For example, consider the following list of possible hyperparameters:

    parameters = [
        {"kernel": ["linear"],
         "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]},
        {"kernel": ["poly"],
         "degree": [2, 3, 4],
         "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]},
        {"kernel": ["rbf"],
         "gamma": ["auto", "scale"],
         "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]}
    ]

A grid search will exhaustively test all possible combinations of these hyperparameters, training an SVM for each set. The grid search will then report the best hyperparameters (i.e., the ones that maximized accuracy).
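To make "all possible combinations" concrete, here is a minimal sketch that expands the search space above using only the Python standard library. The `expand_grid` helper is a hypothetical name used for illustration; it is not part of scikit-learn and only approximates how a list of parameter dictionaries gets enumerated.

```python
from itertools import product

# same search space as the `parameters` list above
parameters = [
    {"kernel": ["linear"],
     "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]},
    {"kernel": ["poly"],
     "degree": [2, 3, 4],
     "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]},
    {"kernel": ["rbf"],
     "gamma": ["auto", "scale"],
     "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]},
]


def expand_grid(param_grids):
    """Yield every hyperparameter combination as a dict (hypothetical helper)."""
    for grid in param_grids:
        names = list(grid)
        # Cartesian product of the value lists within a single dictionary
        for values in product(*(grid[name] for name in names)):
            yield dict(zip(names, values))


candidates = list(expand_grid(parameters))
print(len(candidates))  # 7 linear + 21 poly + 14 rbf = 42
```

Each of the 42 candidates is trained once per cross-validation fold, so with 5-fold cross-validation (the default in recent scikit-learn versions) the search fits 210 models in total.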

Configuring your development environment

To follow this guide, you need to have the following libraries installed on your machine:

- OpenCV

- scikit-learn

- scikit-image

- imutils

Luckily, all of these packages are pip-installable:

    $ pip install opencv-contrib-python
    $ pip install scikit-learn
    $ pip install scikit-image
    $ pip install imutils

If you need help configuring your development environment for OpenCV, I highly recommend that you read my pip install OpenCV guide — it will have you up and running in a matter of minutes.
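If you want a quick sanity check after installing, the sketch below reports whether each package can be found in the current environment without actually importing it. The `check_modules` helper is a hypothetical name; the module names listed are the import names used by this tutorial's scripts (`cv2` for OpenCV, `sklearn` for scikit-learn, `skimage` for scikit-image).

```python
from importlib.util import find_spec

# import names (not pip package names) used by this tutorial's scripts
REQUIRED_MODULES = ["cv2", "sklearn", "skimage", "imutils"]


def check_modules(names):
    """Map each module name to True if it can be located, else False."""
    return {name: find_spec(name) is not None for name in names}


status = check_modules(REQUIRED_MODULES)
for (name, found) in status.items():
    print("{:<8} {}".format(name, "OK" if found else "MISSING"))
```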

Our example texture dataset

We’ll create a computer vision and machine learning model capable of automatically recognizing the texture of an object in an image.

There are three textures we’ll train our model to recognize:

- Brick

- Marble

- Sand

Each class has 30 images, for a total of 90 images in the dataset.

Our goal now is to:

- Quantify the texture of each image in the dataset

- Define the set of hyperparameters we’re going to search over

- Use a grid search to tune the hyperparameters and find the values that maximize our texture recognition accuracy

Note: This dataset was created by following my tutorial on creating an image dataset with Google Images. I’ve provided the example texture dataset inside the “Downloads” associated with this tutorial. That way, you don’t have to recreate the dataset yourself.

Project structure

Before we can implement a grid search for hyperparameter tuning, let’s take a second to review our project directory structure.

Start with the “Downloads” section of this tutorial to access the source code and example texture dataset.

From there, unzip the archive, and you should find the following project directory:

    $ tree . --dirsfirst --filelimit 10
    .
    ├── pyimagesearch
    │   ├── __init__.py
    │   └── localbinarypatterns.py
    ├── texture_dataset
    │   ├── brick [30 entries exceeds filelimit, not opening dir]
    │   ├── marble [30 entries exceeds filelimit, not opening dir]
    │   └── sand [30 entries exceeds filelimit, not opening dir]
    └── train_model.py

    5 directories, 3 files

The texture_dataset directory contains the dataset on which we’ll train our model. It has three subdirectories, brick, marble, and sand, each with 30 images.

We’ll use Local Binary Patterns (LBPs) to quantify the contents of each image in the texture dataset. The LBP image descriptor is implemented inside the localbinarypatterns.py file inside the pyimagesearch module.

The train_model.py script is responsible for:

- Loading all images in texture_dataset from disk

- Quantifying each of the images using LBPs

- Performing a grid search on the hyperparameter space to determine the values that optimize accuracy

Let’s get started implementing our Python scripts.

Our Local Binary Pattern (LBP) descriptor

The Local Binary Patterns implementation we’ll follow today comes from my previous tutorial. While I’ve included the full code here as a matter of completeness, I will defer a detailed review of the implementation to my previous blog post.

With that said, open the localbinarypatterns.py file in the pyimagesearch module of your project directory structure, and we can get started:

    # import the necessary packages
    from skimage import feature
    import numpy as np

    class LocalBinaryPatterns:
        def __init__(self, numPoints, radius):
            # store the number of points and radius
            self.numPoints = numPoints
            self.radius = radius

Lines 2 and 3 import our required Python packages. The feature submodule of scikit-image contains the local_binary_pattern function — this method computes the LBPs from an input image.

Next, we define our describe function:

        def describe(self, image, eps=1e-7):
            # compute the Local Binary Pattern representation
            # of the image, and then use the LBP representation
            # to build the histogram of patterns
            lbp = feature.local_binary_pattern(image, self.numPoints,
                self.radius, method="uniform")
            (hist, _) = np.histogram(lbp.ravel(),
                bins=np.arange(0, self.numPoints + 3),
                range=(0, self.numPoints + 2))

            # normalize the histogram
            hist = hist.astype("float")
            hist /= (hist.sum() + eps)

            # return the histogram of Local Binary Patterns
            return hist

This method accepts an input image (i.e., the image we want to compute LBPs for) along with a small epsilon value. As we’ll see, the eps value prevents division by zero errors when normalizing the resulting LBP histogram to the range [0, 1].

From there, Lines 15 and 16 compute the uniform LBPs from the input image. Given the LBPs, we then use NumPy to construct a histogram of each LBP type (Lines 17-19).

The resulting histogram is then scaled to the range [0, 1] (Lines 22 and 23).
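The histogram step can be tried in isolation. The sketch below is not the tutorial's class; it is a standalone function (the name `lbp_histogram` and the tiny `codes` array are made up for illustration) that assumes uniform LBP codes for `num_points` neighbors are integers in the range [0, num_points + 1], which is my understanding of scikit-image's "uniform" method.

```python
import numpy as np


def lbp_histogram(codes, num_points, eps=1e-7):
    """Build a normalized histogram from an array of uniform LBP codes."""
    # assumed code range is [0, num_points + 1], so we need
    # num_points + 2 bins (num_points + 3 bin edges)
    (hist, _) = np.histogram(codes.ravel(),
        bins=np.arange(0, num_points + 3))
    hist = hist.astype("float")
    # eps keeps the denominator non-zero even if every bin is empty
    hist /= (hist.sum() + eps)
    return hist


# hypothetical 2x3 map of LBP codes for num_points=8 (values in [0, 9])
codes = np.array([[0, 1, 1], [9, 9, 9]])
hist = lbp_histogram(codes, num_points=8)
print(hist.shape)  # (10,)

# an empty input yields an all-zero histogram instead of NaN values
empty = lbp_histogram(np.array([]), num_points=8)
print(empty.sum())  # 0.0
```

With the descriptor settings used later in this tutorial (24 points), the same logic would produce a 26-dimensional feature vector per image.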

For a more detailed review of our LBP implementation, be sure to refer to my tutorial, Local Binary Patterns with Python & OpenCV.

Implementing our grid search for hyperparameter tuning using GridSearchCV

With our LBP image descriptor implemented, we can create our grid search hyperparameter tuning script.

Open the train_model.py file in your project directory, and we’ll get started:

    # import the necessary packages
    from pyimagesearch.localbinarypatterns import LocalBinaryPatterns
    from sklearn.model_selection import GridSearchCV
    from sklearn.metrics import classification_report
    from sklearn.svm import SVC
    from sklearn.model_selection import train_test_split
    from imutils import paths
    import argparse
    import time
    import cv2
    import os

Lines 2-11 import our required Python packages. Our notable imports include:

- LocalBinaryPatterns: Responsible for computing LBPs for each input image, thereby quantifying the texture

- GridSearchCV: scikit-learn’s implementation of a grid search for hyperparameter tuning

- SVC: Our Support Vector Machine (SVM) used for classification (SVC)

- paths: Grabs the paths of all images in our input dataset directory

- time: Used to time how long the grid search takes

Next, we have our command line arguments:

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-d", "--dataset", required=True,
        help="path to input dataset")
    args = vars(ap.parse_args())

We only have a single command line argument here, --dataset, which will point to our texture_dataset residing on disk.
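If you are new to argparse, the short sketch below shows what the resulting `args` dictionary looks like. It passes the argument list explicitly rather than reading it from the command line, purely so the snippet can be run on its own.

```python
import argparse

# same parser definition as in train_model.py
ap = argparse.ArgumentParser()
ap.add_argument("-d", "--dataset", required=True,
    help="path to input dataset")

# pass the arguments explicitly instead of reading sys.argv
args = vars(ap.parse_args(["--dataset", "texture_dataset"]))
print(args["dataset"])  # texture_dataset
```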

Let’s grab our image paths and initialize our LBP descriptor:

    # grab the image paths in the input dataset directory
    imagePaths = list(paths.list_images(args["dataset"]))

    # initialize the local binary patterns descriptor along with
    # the data and label lists
    print("[INFO] extracting features...")
    desc = LocalBinaryPatterns(24, 8)
    data = []
    labels = []

Line 20 grabs the paths to all input images in our --dataset directory.

We then initialize our LocalBinaryPatterns descriptor, along with two lists:

- data: Stores the LBPs extracted from each image

- labels: Contains the class label of the particular image

Let’s populate both data and labels now:

    # loop over the dataset of images
    for imagePath in imagePaths:
        # load the image, convert it to grayscale, and quantify it
        # using LBPs
        image = cv2.imread(imagePath)
        gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
        hist = desc.describe(gray)

        # extract the label from the image path, then update the
        # label and data lists
        labels.append(imagePath.split(os.path.sep)[-2])
        data.append(hist)

    # partition the data into training and testing splits using 75% of
    # the data for training and the remaining 25% for testing
    print("[INFO] constructing training/testing split...")
    (trainX, testX, trainY, testY) = train_test_split(data, labels,
        random_state=22, test_size=0.25)

On Line 30, we loop over our input images.

For each image we:

- Load it from disk (Line 33)

- Convert it to grayscale (Line 34)

- Compute LBPs for the image (Line 35)

We then update our labels list with the class label of the particular image along with our data list with the computed LBP histogram.

Note: Confused on how we determined the class label from the image path? Recall that inside the texture_dataset directory, there are three subdirectories, one for each of the three texture classes: brick, marble, and sand. Since the class label of a given image is contained within the file path, all we need to do is extract the subdirectory name, which is exactly what Line 39 does.
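The label extraction described in the note can be checked with a single path. The file name below is hypothetical; only the directory layout (dataset directory, class subdirectory, image file) matters.

```python
import os

# hypothetical image path following the dataset's directory layout
imagePath = os.path.join("texture_dataset", "brick", "example_001.png")

# the second-to-last path component is the class subdirectory
label = imagePath.split(os.path.sep)[-2]
print(label)  # brick
```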

Before we can run GridSearchCV we first need to define the hyperparameters to search over:

    # construct the set of hyperparameters to tune
    parameters = [
        {"kernel": ["linear"],
         "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]},
        {"kernel": ["poly"],
         "degree": [2, 3, 4],
         "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]},
        {"kernel": ["rbf"],
         "gamma": ["auto", "scale"],
         "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000]}
    ]

Line 49 defines a parameters list that the grid search will run over. As you can see, we’re testing three different types of SVM kernels: linear, polynomial, and radial basis function (RBF).

Each kernel has its own set of associated hyperparameters to search over as well.

SVMs tend to be quite sensitive to hyperparameter choices; that is especially true for the non-linear kernels. If we want high texture classification accuracy, we need to get these hyperparameter choices correct.

The values listed above are the ones you’ll typically want to tune for an SVM and given kernel.

Let’s now run GridSearchCV over the hyperparameter space:

    # tune the hyperparameters via a cross-validated GridSearchCV
    print("[INFO] tuning hyperparameters via gridsearchcv")
    grid = GridSearchCV(estimator=SVC(), param_grid=parameters, n_jobs=-1)
    start = time.time()
    grid.fit(trainX, trainY)
    end = time.time()

    # show GridSearchCV information
    print("[INFO] GridSearchCV took {:.2f} seconds".format(
        end - start))
    print("[INFO] GridSearchCV best score: {:.2f}%".format(
        grid.best_score_ * 100))
    print("[INFO] GridSearchCV best parameters: {}".format(
        grid.best_params_))

Line 65 initializes our GridSearchCV, which accepts three parameters:

- estimator: The model we are tuning (in this case, a Support Vector Machine classifier).

- param_grid: The hyperparameter space we wish to search (i.e., our parameters list).

- n_jobs: The number of parallel jobs to run. A value of -1 implies that all processors/cores of your machine will be used, thereby speeding up the grid search.

Line 67 starts the grid search of the hyperparameter space. We wrap the .fit call with calls to time.time() to measure how long the hyperparameter search takes.

Once GridSearchCV completes, we display the following on our terminal:

- How long GridSearchCV took

- The best accuracy we obtained during the grid search

- The hyperparameters associated with our highest accuracy model
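To see these attributes without the texture dataset, here is a minimal sketch on synthetic data. The `make_blobs` data and the reduced grid are stand-ins chosen so the snippet runs in a moment; they are assumptions for illustration and not part of this tutorial's code. Note that `best_score_` is the mean cross-validated accuracy of the best candidate on the training data, so it will generally differ from the accuracy measured on a held-out test set.

```python
from sklearn.datasets import make_blobs
from sklearn.model_selection import GridSearchCV
from sklearn.svm import SVC

# synthetic stand-in for the LBP features: 90 samples, 3 classes
(X, y) = make_blobs(n_samples=90, centers=3, n_features=5,
    random_state=22)

# deliberately small grid so the example runs quickly
parameters = [
    {"kernel": ["linear"], "C": [0.1, 1, 10]},
    {"kernel": ["rbf"], "gamma": ["auto", "scale"], "C": [0.1, 1, 10]},
]

# n_jobs=1 keeps the sketch single-process
grid = GridSearchCV(estimator=SVC(), param_grid=parameters, n_jobs=1)
grid.fit(X, y)

# one entry per candidate: 3 linear + 6 rbf = 9
print(len(grid.cv_results_["params"]))
# mean cross-validated accuracy of the best candidate (a value in [0, 1])
print(grid.best_score_)
print(grid.best_params_)
```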

From there, we do a full evaluation of the best model:

# grab the best model and evaluate it

print("[INFO] evaluating...")

model = grid.best_estimator_

predictions = model.predict(testX)

print(classification_report(testY, predictions))

Line 80 grabs the best_estimator_ from the grid search. This is the SVM with the highest accuracy.

Note: After a hyperparameter search is complete, scikit-learn populates the best_estimator_ attribute of the grid with our highest accuracy model (this happens with the default refit=True setting).

Line 81 uses the best model found to make predictions on our testing data. We then display a full classification report on Line 82.

GridSearchCV for computer vision project results

We are now ready to apply a grid search to tune the hyperparameters to our texture recognition system.

Be sure to access the “Downloads” section of this tutorial to retrieve the source code and example texture dataset.

From there, you can execute the train_model.py script:

    $ time python train_model.py --dataset texture_dataset
    [INFO] extracting features...
    [INFO] constructing training/testing split...
    [INFO] tuning hyperparameters via gridsearchcv
    [INFO] GridSearchCV took 1.17 seconds
    [INFO] GridSearchCV best score: 86.81%
    [INFO] GridSearchCV best parameters: {'C': 1000, 'degree': 3,
        'kernel': 'poly'}
    [INFO] evaluating...
                  precision    recall  f1-score   support

           brick       1.00      1.00      1.00        10
          marble       1.00      1.00      1.00         5
            sand       1.00      1.00      1.00         8

        accuracy                           1.00        23
       macro avg       1.00      1.00      1.00        23
    weighted avg       1.00      1.00      1.00        23

    real    1m39.581s
    user    1m45.836s
    sys     0m2.896s

As you can see, we’ve obtained 100% accuracy on our testing set, meaning that our SVM was capable of recognizing the texture inside every one of our images.

Furthermore, running the tuning script took only 1m39s.

A grid search worked well here, but as I mentioned in last week’s tutorial, a random search tends to work just as well and requires less time to run. The more hyperparameters in your search space, the longer GridSearchCV will take to run.

To illustrate this point, next week, I’ll show you how to use a random search to tune the hyperparameters in a deep learning model.
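In the meantime, the cost difference can be sketched with the standard library alone. The search space, seed, and budget below are hypothetical values picked for illustration, and this is not how scikit-learn implements random search; it only shows that a grid search must visit every combination while a random search stops at a fixed budget.

```python
import random
from itertools import product

# hypothetical search space with three hyperparameters
space = {
    "C": [0.0001, 0.001, 0.1, 1, 10, 100, 1000],
    "degree": [2, 3, 4],
    "gamma": ["auto", "scale"],
}

# a grid search visits every combination: 7 * 3 * 2 = 42
names = list(space)
grid = [dict(zip(names, values))
    for values in product(*(space[name] for name in names))]
print(len(grid))  # 42

# a random search tries only a fixed budget of combinations
rng = random.Random(22)
budget = 10
sampled = rng.sample(grid, budget)
print(len(sampled))  # 10
```

Adding one more hyperparameter with five values would multiply the grid to 210 combinations, while the random search budget stays at whatever you choose.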

Summary

In this tutorial, you learned how to use a grid search for hyperparameter tuning with scikit-learn’s GridSearchCV class.

Our goal was to train a computer vision model that can automatically recognize the texture of an object in an image (brick, marble, or sand).

The training pipeline itself included:

- Looping over all images in our dataset

- Quantifying the texture of each image using the Local Binary Patterns descriptor (a popular image descriptor often used for quantifying texture)

- Using a grid search to explore hyperparameters to our Support Vector Machine

After tuning our SVM hyperparameters, we obtained 100% classification accuracy on our texture recognition dataset.
