In a previous tutorial, we learned how to automatically OCR and scan receipts by:

- Detecting the receipt in the input image

- Applying a perspective transform to obtain a top-down view of the receipt

- Utilizing Tesseract to OCR the text on the receipt

- Using regular expressions to extract the price data

To learn how to OCR a business card using Python, just keep reading.

OCR’ing Business Cards

In this tutorial, we will use a very similar workflow, but this time apply it to business card OCR. More specifically, we’ll learn how to extract the name, title, phone number, and email address from a business card.

You can then extend this implementation to your own projects.

Learning Objectives

In this tutorial, you will:

- Learn how to detect business cards in images

- Apply OCR to a business card image

- Utilize regular expressions to extract:
  - Name
  - Job title
  - Phone number
  - Email address

Business Card OCR

In the first part of this tutorial, we will review our project directory structure. We’ll then implement a simple yet effective Python script to allow us to OCR a business card.

We’ll wrap up this tutorial with a discussion of our results, along with the next steps.

Configuring your development environment

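To follow this guide, you need to have the OpenCV library installed on your system. The script also imports imutils and pytesseract, and pytesseract in turn requires the Tesseract OCR engine itself to be installed.

Luckily, OpenCV is pip-installable (as are imutils and pytesseract):

```shell
$ pip install opencv-contrib-python
$ pip install imutils pytesseract
```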

If you need help configuring your development environment for OpenCV, I highly recommend that you read my pip install OpenCV guide — it will have you up and running in a matter of minutes.


Project Structure

We first need to review our project directory structure.

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

From there, take a look at the directory structure:

```
|-- larry_page.png
|-- ocr_business_card.py
|-- tony_stark.png
```

We only have a single Python script to review, ocr_business_card.py. This script will load example business card images (i.e., larry_page.png and tony_stark.png), OCR them, and then output the name, job title, phone number, and email address from the business card.

Best of all, we’ll be able to accomplish our goal in under 120 lines of code (including comments)!

Implementing Business Card OCR

We are now ready to implement our business card OCR script! First, open the ocr_business_card.py file in our project directory structure and insert the following code:

```python
# import the necessary packages
from imutils.perspective import four_point_transform
import pytesseract
import argparse
import imutils
import cv2
import re
```

Our imports here are similar to the ones in a previous tutorial on OCR’ing receipts.

We need our four_point_transform function to obtain a top-down, bird’s-eye view of the business card. Obtaining this view typically yields higher OCR accuracy.

The pytesseract package is used to interface with the Tesseract OCR engine. We then have Python’s regular expression library, re, which will allow us to parse the names, job titles, email addresses, and phone numbers from business cards.

With the imports taken care of, we can move on to command line arguments:

```python
# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--image", required=True,
    help="path to input image")
ap.add_argument("-d", "--debug", type=int, default=-1,
    help="whether or not we are visualizing each step of the pipeline")
ap.add_argument("-c", "--min-conf", type=int, default=0,
    help="minimum confidence value to filter weak text detection")
args = vars(ap.parse_args())
```

Our first command line argument, --image, is the path to our input image on disk. We assume that this image contains a business card with sufficient contrast between the foreground and background, so that we can successfully apply edge detection and contour processing to extract the business card.

We then have two optional command line arguments, --debug and --min-conf. The --debug command line argument indicates whether we are debugging our image processing pipeline and should show more of the processed images on our screen (useful when you can’t determine why a business card was or was not detected).

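We then have --min-conf, the minimum confidence (on a scale of 0-100) required for a text detection to be kept. Increasing --min-conf prunes out weak text detections. Note, however, that the script as listed parses --min-conf but never uses it: pytesseract.image_to_string returns plain text without per-word confidences.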
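As a quick illustration of how these flags end up in the args dictionary, the sketch below rebuilds the same parser and feeds it a hypothetical argument list instead of the real command line. One detail worth noticing: argparse converts the dash in --min-conf to an underscore, so the value lives under the key min_conf.

```python
import argparse

# rebuild the same parser as the script
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--image", required=True,
    help="path to input image")
ap.add_argument("-d", "--debug", type=int, default=-1,
    help="whether or not we are visualizing each step of the pipeline")
ap.add_argument("-c", "--min-conf", type=int, default=0,
    help="minimum confidence value to filter weak text detection")

# parse a hypothetical argument list instead of the real sys.argv;
# --min-conf is omitted, so it falls back to its default of 0
args = vars(ap.parse_args(["--image", "tony_stark.png", "--debug", "1"]))

print(args["image"])     # tony_stark.png
print(args["debug"])     # 1 (converted to int by type=int)
print(args["min_conf"])  # 0 (note the underscore in the key)
```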

Let’s now load our input image from disk:

```python
# load the input image from disk, resize it, and compute the ratio
# of the *old* width to the *new* width
orig = cv2.imread(args["image"])
image = orig.copy()
image = imutils.resize(image, width=600)
ratio = orig.shape[1] / float(image.shape[1])
```

Here, we load our input --image from disk and then clone it. We keep the original so that we can extract the high-resolution version of the business card after contour processing.

We then resize our image to have a width of 600px and compute the ratio of the old (original) width to the new width. We need this ratio later to map the business card outline found on the resized image back onto the original high-resolution image, so that we can obtain a top-down view of it.
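The ratio arithmetic is easy to check by hand. The minimal sketch below assumes a hypothetical 2400px-wide photo that was resized to 600px wide; compute_ratio and scale_points are illustrative helpers, not functions from the script. Because imutils.resize preserves the aspect ratio (up to rounding), the same factor can be applied to both x and y coordinates.

```python
def compute_ratio(orig_width, resized_width):
    # ratio of the *old* (original) width to the *new* (resized) width,
    # mirroring ratio = orig.shape[1] / float(image.shape[1])
    return orig_width / float(resized_width)


def scale_points(points, ratio):
    # map (x, y) points found on the resized image back onto the original
    return [(x * ratio, y * ratio) for (x, y) in points]


# hypothetical: a 2400px-wide photo resized to 600px wide
ratio = compute_ratio(2400, 600)
corners = [(50, 40), (550, 40), (550, 320), (50, 320)]
scaled = scale_points(corners, ratio)

print(ratio)   # 4.0
print(scaled)  # [(200.0, 160.0), (2200.0, 160.0), (2200.0, 1280.0), (200.0, 1280.0)]
```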

We continue our image processing pipeline below.

```python
# convert the image to grayscale, blur it, and apply edge detection
# to reveal the outline of the business card
gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)
blurred = cv2.GaussianBlur(gray, (5, 5), 0)
edged = cv2.Canny(blurred, 30, 150)

# detect contours in the edge map, sort them by size (in descending
# order), and grab the largest contours
cnts = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL,
    cv2.CHAIN_APPROX_SIMPLE)
cnts = imutils.grab_contours(cnts)
cnts = sorted(cnts, key=cv2.contourArea, reverse=True)[:5]

# initialize a contour that corresponds to the business card outline
cardCnt = None
```

First, we convert our resized image to grayscale, blur it, and then apply edge detection, the result of which can be seen in Figure 2.

Note that the outline/border of the business card is visible on the edge map. However, if there are any gaps in the edge map, the business card will not be detectable via our contour processing technique, so you may need to tweak the parameters to the Canny edge detector or capture your image in an environment with better lighting conditions.
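If hand-tuning the Canny thresholds becomes tedious, one common heuristic (the idea behind imutils' auto_canny) derives both thresholds from the median pixel intensity. The sketch below is a pure-Python illustration under that assumption; auto_canny_thresholds is a hypothetical helper name, and sigma=0.33 is just a typical starting value, not something this tutorial's script uses.

```python
import statistics


def auto_canny_thresholds(pixels, sigma=0.33):
    # hypothetical helper: place the lower and upper Canny thresholds
    # a fraction sigma below and above the median intensity,
    # clamped to the valid 8-bit range [0, 255]
    v = statistics.median(pixels)
    lower = int(max(0, (1.0 - sigma) * v))
    upper = int(min(255, (1.0 + sigma) * v))
    return lower, upper


# hypothetical grayscale intensities from a blurred image
pixels = [100, 120, 140, 160, 180]
print(auto_canny_thresholds(pixels))  # (93, 186)
```

In practice you would compute the median with NumPy (for example, np.median(blurred)) and pass the two values to cv2.Canny in place of the fixed 30 and 150.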

From there, we detect contours and sort them in descending order (largest to smallest) based on their area. We assume that the business card will be one of the largest detected contours, which is why we keep only the five largest.

We also initialize cardCnt (Line 40), which is the contour that corresponds to the business card.
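To make the sorting step concrete, here is a pure-Python stand-in for sorted(cnts, key=cv2.contourArea, reverse=True)[:5]. It uses the shoelace formula for polygon area; OpenCV documents cv2.contourArea as using Green's formula, which gives the same result for a simple polygon, but treat this as an illustrative sketch with made-up contours rather than a drop-in replacement.

```python
def polygon_area(points):
    # shoelace formula: area of a simple polygon from its (x, y) vertices
    n = len(points)
    twice_area = 0.0
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2.0


def largest_contours(contours, k=5):
    # sort by area (largest first) and keep the top k, like [:5] in the script
    return sorted(contours, key=polygon_area, reverse=True)[:k]


# hypothetical contours
speck = [(0, 0), (10, 0), (10, 10), (0, 10)]     # area 100
card = [(0, 0), (300, 0), (300, 180), (0, 180)]  # area 54000
logo = [(0, 0), (40, 0), (0, 40)]                # area 800

top2 = largest_contours([speck, card, logo], k=2)
print([polygon_area(c) for c in top2])  # [54000.0, 800.0]
```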

Let’s now loop over the largest contours:

```python
# loop over the contours
for c in cnts:
    # approximate the contour
    peri = cv2.arcLength(c, True)
    approx = cv2.approxPolyDP(c, 0.02 * peri, True)

    # if this is the first contour we've encountered that has four
    # vertices, then we can assume we've found the business card
    if len(approx) == 4:
        cardCnt = approx
        break

# if the business card contour is empty then our script could not
# find the outline of the card, so raise an error
if cardCnt is None:
    raise Exception(("Could not find business card outline. "
        "Try debugging your edge detection and contour steps."))
```

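Lines 45 and 46 perform contour approximation: cv2.approxPolyDP simplifies the contour into a polygon with fewer vertices, allowing a maximum deviation of 2% of the contour’s perimeter.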

If our approximated contour has four vertices, then we can assume that we found the business card. If that happens, we store it in cardCnt and break from the loop.

If we reach the end of the for loop and still haven’t found a valid cardCnt, we raise an exception and exit the script. Remember, we cannot process the business card if one cannot be found in the image!
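cv2.approxPolyDP is based on the Douglas-Peucker (Ramer-Douglas-Peucker) algorithm. The sketch below is a simplified pure-Python version for intuition only: it closes the contour by repeating the first point, simplifies it as an open polyline, and uses an epsilon of 2% of the perimeter as the script does. This closing trick assumes the first point sits near a corner, so its output can differ from OpenCV's on real contours; all helper names and the contour coordinates are hypothetical.

```python
import math


def perimeter(points):
    # closed-contour perimeter, analogous to cv2.arcLength(c, True)
    n = len(points)
    return sum(math.dist(points[i], points[(i + 1) % n]) for i in range(n))


def dist_to_line(p, a, b):
    # perpendicular distance from p to the line through a and b
    # (falls back to the point distance when a == b)
    (px, py), (ax, ay), (bx, by) = p, a, b
    dx, dy = bx - ax, by - ay
    if dx == 0 and dy == 0:
        return math.hypot(px - ax, py - ay)
    return abs(dy * (px - ax) - dx * (py - ay)) / math.hypot(dx, dy)


def rdp(points, epsilon):
    # Ramer-Douglas-Peucker on an open polyline
    if len(points) < 3:
        return list(points)
    a, b = points[0], points[-1]
    # find the interior point farthest from the chord a-b
    idx, dmax = 0, 0.0
    for i in range(1, len(points) - 1):
        d = dist_to_line(points[i], a, b)
        if d > dmax:
            idx, dmax = i, d
    # everything is close enough: keep only the endpoints
    if dmax <= epsilon:
        return [a, b]
    # otherwise split at the farthest point and recurse on both halves
    left = rdp(points[:idx + 1], epsilon)
    right = rdp(points[idx:], epsilon)
    return left[:-1] + right


def approx_closed(points, frac=0.02):
    # epsilon = 2% of the perimeter; close the loop, simplify it,
    # then drop the duplicated starting point
    epsilon = frac * perimeter(points)
    simplified = rdp(list(points) + [points[0]], epsilon)
    return simplified[:-1]


# hypothetical noisy contour of a 200x120 card, starting at a corner
contour = [(0, 0), (100, 1), (200, 0), (201, 60),
           (200, 120), (100, 121), (0, 120), (1, 60)]
approx = approx_closed(contour)
print(approx)       # [(0, 0), (200, 0), (200, 120), (0, 120)]
print(len(approx))  # 4, so this would be accepted as the card
```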

Our next code block handles showing some debugging images as well as obtaining our top-down view of the business card:

```python
# check to see if we should draw the contour of the business card
# on the image and then display it to our screen
if args["debug"] > 0:
    output = image.copy()
    cv2.drawContours(output, [cardCnt], -1, (0, 255, 0), 2)
    cv2.imshow("Business Card Outline", output)
    cv2.waitKey(0)

# apply a four-point perspective transform to the *original* image to
# obtain a top-down bird's-eye view of the business card
card = four_point_transform(orig, cardCnt.reshape(4, 2) * ratio)

# show transformed image
cv2.imshow("Business Card Transform", card)
cv2.waitKey(0)
```

Lines 62-66 make a check to see if we are in --debug mode, and if so, we draw the contour of the business card on the output image.

We then apply a four-point perspective transform to the original, high-resolution image, thus obtaining the top-down, bird’s-eye view of the business card (Line 70).

We multiply the cardCnt by our computed ratio here since cardCnt was computed for the reduced image dimensions. Multiplying by ratio scales the cardCnt back into the dimensions of the orig image.

We then display the transformed image to our screen (Lines 73 and 74).
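Under the hood, a four-point transform has to decide which corner is which and how large the output image should be before it can warp anything. The sketch below illustrates one common approach: ordering corners by the sum and difference of their coordinates, and sizing the output by the longer of each pair of opposite edges. imutils' actual implementation may differ in detail, and the final warp (typically cv2.getPerspectiveTransform followed by cv2.warpPerspective) is omitted here. The helper names and corner coordinates are hypothetical.

```python
import math


def order_points(pts):
    # top-left has the smallest x + y and bottom-right the largest;
    # top-right has the smallest y - x and bottom-left the largest
    by_sum = sorted(pts, key=lambda p: p[0] + p[1])
    by_diff = sorted(pts, key=lambda p: p[1] - p[0])
    return by_sum[0], by_diff[0], by_sum[-1], by_diff[-1]  # tl, tr, br, bl


def output_size(tl, tr, br, bl):
    # use the longer of each pair of opposite edges so no detail is squashed
    width = max(int(math.dist(br, bl)), int(math.dist(tr, tl)))
    height = max(int(math.dist(tr, br)), int(math.dist(tl, bl)))
    return width, height


def destination_corners(width, height):
    # where tl, tr, br, bl should land in the top-down output image
    return [(0, 0), (width - 1, 0), (width - 1, height - 1), (0, height - 1)]


# hypothetical corners of a tilted card, in arbitrary order
pts = [(220, 40), (20, 60), (240, 180), (10, 200)]
tl, tr, br, bl = order_points(pts)
w, h = output_size(tl, tr, br, bl)
print((tl, tr, br, bl))           # ((20, 60), (220, 40), (240, 180), (10, 200))
print(w, h)                       # 230 141
print(destination_corners(w, h))  # [(0, 0), (229, 0), (229, 140), (0, 140)]
```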

With our top-down view of the business card obtained, we can move on to OCR’ing it:

```python
# convert the business card from BGR to RGB channel ordering and then
# OCR it
rgb = cv2.cvtColor(card, cv2.COLOR_BGR2RGB)
text = pytesseract.image_to_string(rgb)

# use regular expressions to parse out phone numbers and email
# addresses from the business card
phoneNums = re.findall(r'[\+\(]?[1-9][0-9 .\-\(\)]{8,}[0-9]', text)
emails = re.findall(r"[a-z0-9\.\-+_]+@[a-z0-9\.\-+_]+\.[a-z]+", text)

# attempt to use regular expressions to parse out names/titles (not
# necessarily reliable)
nameExp = r"^[\w'\-,.][^0-9_!¡?÷?¿/\\+=@#$%ˆ&*(){}|~<>;:[\]]{2,}"
names = re.findall(nameExp, text)
```

Lines 78 and 79 convert the business card from BGR to RGB channel ordering and OCR it with Tesseract, storing the result in text.
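If you want per-word confidences (for example, to actually honor a threshold like --min-conf), pytesseract also provides image_to_data, which with output_type=pytesseract.Output.DICT returns a dictionary of parallel lists that includes "text" and "conf" keys. The sketch below filters such a dictionary; to keep it runnable without Tesseract installed, it uses a small hand-made dictionary with hypothetical values, and filter_words is an illustrative helper rather than part of the script.

```python
def filter_words(data, min_conf=0):
    # `data` is assumed to have the shape returned by
    # pytesseract.image_to_data(..., output_type=pytesseract.Output.DICT):
    # parallel lists under (at least) the "text" and "conf" keys
    words = []
    for text, conf in zip(data["text"], data["conf"]):
        # depending on the pytesseract version, conf may be an int, a float,
        # or a string; non-word rows typically have empty text and conf -1
        if text.strip() and float(conf) > min_conf:
            words.append(text.strip())
    return words


# hand-made stand-in for Tesseract output (hypothetical values)
data = {
    "text": ["", "Tony", "Stark", "562-555-0100", "~"],
    "conf": [-1, 96, 95, 91, 12],
}
print(filter_words(data))               # ['Tony', 'Stark', '562-555-0100', '~']
print(filter_words(data, min_conf=50))  # ['Tony', 'Stark', '562-555-0100']
```

In the script, you could call data = pytesseract.image_to_data(rgb, output_type=pytesseract.Output.DICT) and join the surviving words back into a string before running the regular expressions, keeping in mind that joining with spaces discards Tesseract's original line breaks.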

But the question remains: how are we going to extract the information from the business card itself? The answer is to utilize regular expressions.

Lines 83 and 84 utilize regular expressions to extract phone numbers and email addresses (Walia, 2020) from the text, while Lines 88 and 89 do the same for names and job titles (Regular expression for first and last name, 2020).

A review of regular expressions is outside the scope of this tutorial, but the gist is that they can be used to match particular patterns in text.

For example, a phone number consists of a specific pattern of digits and sometimes includes dashes and parentheses. Email addresses also follow a pattern: a text string, followed by an “@” symbol, and then the domain name.
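To see the two patterns in action, the snippet below runs the script's phone number and email regular expressions on a made-up string written in the style of the Larry Page card's OCR output.

```python
import re

# the script's phone number and email patterns
PHONE_RE = r'[\+\(]?[1-9][0-9 .\-\(\)]{8,}[0-9]'
EMAIL_RE = r"[a-z0-9\.\-+_]+@[a-z0-9\.\-+_]+\.[a-z]+"

# made-up OCR output in the style of the Larry Page card
text = ("Larry Page\nCEO\nGoogle\n"
        "650 330-0100\n650 618-1499\nlarry@google.com\n")

phones = re.findall(PHONE_RE, text)
emails = re.findall(EMAIL_RE, text)
print(phones)  # ['650 330-0100', '650 618-1499']
print(emails)  # ['larry@google.com']
```

Because the phone character class allows spaces but not newlines, each match stops at the end of its line.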

Any time you can reliably guarantee a pattern of text, regular expressions can work quite well. That said, they aren’t perfect either, so you may want to look into more advanced natural language processing (NLP) algorithms if you find your business card OCR accuracy is suffering significantly.
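Two quirks of these particular patterns are worth knowing about, shown below on a hypothetical OCR string (the email address is invented). First, because nameExp starts with ^ and is used without re.MULTILINE, it can only match at the very start of the text; and since its negated character class does not exclude newlines, a single match runs across lines until the first digit, which is how the name, title, and company can all come back in one match. Second, the email pattern only lists lowercase letters, so a capitalized address is missed unless you pass re.IGNORECASE.

```python
import re

# the script's name and email patterns
NAME_RE = r"^[\w'\-,.][^0-9_!¡?÷?¿/\\+=@#$%ˆ&*(){}|~<>;:[\]]{2,}"
EMAIL_RE = r"[a-z0-9\.\-+_]+@[a-z0-9\.\-+_]+\.[a-z]+"

# hypothetical OCR output (the email address is invented)
text = ("Tony Stark\nChief Executive Officer\nStark Industries\n"
        "562-555-0100\nTony.Stark@Example.com\n")

# ^ anchors at the start of the string only (no re.MULTILINE), and the
# negated class does not exclude "\n", so one match runs to the first digit
names = re.findall(NAME_RE, text)
print(names)  # ['Tony Stark\nChief Executive Officer\nStark Industries\n']

# the email pattern only lists lowercase letters...
print(re.findall(EMAIL_RE, text))  # []
# ...so a capitalized address is found only with re.IGNORECASE
print(re.findall(EMAIL_RE, text, flags=re.IGNORECASE))  # ['Tony.Stark@Example.com']
```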

The final step here is to display our output to the terminal:

```python
# show the phone numbers header
print("PHONE NUMBERS")
print("=============")

# loop over the detected phone numbers and print them to our terminal
for num in phoneNums:
    print(num.strip())

# show the email addresses header
print("\n")
print("EMAILS")
print("======")

# loop over the detected email addresses and print them to our
# terminal
for email in emails:
    print(email.strip())

# show the name/job title header
print("\n")
print("NAME/JOB TITLE")
print("==============")

# loop over the detected name/job titles and print them to our
# terminal
for name in names:
    print(name.strip())
```

This final code block loops over the extracted phone numbers (Lines 96 and 97), email addresses (Lines 106 and 107), and names/job titles (Lines 116 and 117), displaying each to our terminal.

Of course, you could take this extracted information and write it to disk, save it to a database, etc. However, for the sake of simplicity (and because we don’t know the specifications of your business card OCR project), we’ll leave it as an exercise for you to save the data as you see fit.
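As one possible starting point for that exercise, the sketch below writes the extracted fields to a JSON file. The save_card helper, the field names, and the output path are all hypothetical; because a single name/title match can span several lines, it splits each match into one entry per non-empty line.

```python
import json
import os
import tempfile


def save_card(path, phone_nums, emails, names):
    # hypothetical schema for one business card's extracted fields
    record = {
        "phone_numbers": [p.strip() for p in phone_nums],
        "emails": [e.strip() for e in emails],
        # a single name/title match can span several lines, so store one
        # entry per non-empty line
        "names_titles": [line.strip() for n in names
                         for line in n.splitlines() if line.strip()],
    }
    with open(path, "w", encoding="utf-8") as f:
        json.dump(record, f, indent=2)
    return record


# values in the style of the Larry Page results later in this tutorial
out_path = os.path.join(tempfile.gettempdir(), "larry_page_card.json")
save_card(out_path, ["650 330-0100", "650 618-1499"],
          ["larry@google.com"], ["Larry Page\nCEO\nGoogle\n"])

with open(out_path, encoding="utf-8") as f:
    loaded = json.load(f)
print(loaded["names_titles"])  # ['Larry Page', 'CEO', 'Google']
```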

Business Card OCR Results

We are now ready to apply OCR to business cards. Open a terminal and execute the following command:

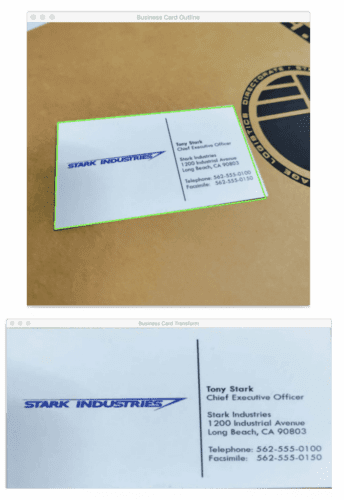

```shell
$ python ocr_business_card.py --image tony_stark.png --debug 1
PHONE NUMBERS
=============
562-555-0100
562-555-0150


EMAILS
======


NAME/JOB TITLE
==============
Tony Stark
Chief Executive Officer
Stark Industries
```

Figure 3 (top) shows the results of our business card localization. Notice how we have correctly detected the business card in the input image.

From there, Figure 3 (bottom) displays the results of applying a perspective transform of the business card, thus resulting in the top-down, bird’s-eye view of the image.

Once we have the top-down view of the image (typically required to obtain higher OCR accuracy), we can apply Tesseract to OCR it, the results of which can be seen in our terminal output above.

Note that our script has successfully extracted both phone numbers on Tony Stark’s business card.

No email addresses are reported as there is no email address on the business card.

We then have the name and job title displayed as well. Interestingly, we can OCR all the text successfully even though the name text is more distorted than the phone number text. Our perspective transform dealt with all the text effectively, even though the amount of distortion changes the farther the text is from the camera. That’s the point of the perspective transform and why it’s important to the accuracy of our OCR.

Let’s try another example image, this one of an old business card belonging to Larry Page (co-founder of Google):

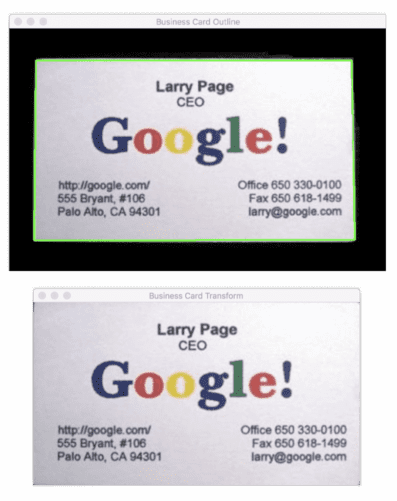

```shell
$ python ocr_business_card.py --image larry_page.png --debug 1
PHONE NUMBERS
=============
650 330-0100
650 618-1499


EMAILS
======
larry@google.com


NAME/JOB TITLE
==============
Larry Page
CEO
Google
```

Figure 4 (top) displays the output of localizing Page’s business card. The bottom then shows the top-down transform of the image.

This top-down transform is passed through Tesseract OCR, yielding the OCR’d text as output. We take this OCR’d text, apply our regular expressions, and thus obtain the results above.

Examining the results, you can see that we have successfully extracted Larry Page’s two phone numbers, email address, and name/job title from the business card.


Summary

In this tutorial, you learned how to build a basic business card OCR system. Essentially, this system was an extension of our receipt scanner but with different regular expressions and text localization strategies.

If you ever need to build a business card OCR system, I recommend that you use this tutorial as a starting point, but keep in mind that you may want to utilize more advanced text post-processing techniques, such as true natural language processing (NLP) algorithms, rather than regular expressions.

Regular expressions can work very well for email addresses and phone numbers, but they may fail to obtain high accuracy for names and job titles. If and when that time comes, you should consider leveraging NLP as much as possible to improve your results.

Citation Information

Rosebrock, A. “OCR’ing Business Cards,” PyImageSearch, 2021, https://pyimagesearch.com/2021/11/03/ocring-business-cards/

```bibtex
@article{Rosebrock_2021_OCR_BCards,
  author = {Adrian Rosebrock},
  title = {{OCR}’ing Business Cards},
  journal = {PyImageSearch},
  year = {2021},
  note = {https://pyimagesearch.com/2021/11/03/ocring-business-cards/},
}
```
