Table of Contents

- Text Detection and OCR with Amazon Rekognition API

- Configuring Your Development Environment

- Amazon Rekognition API for OCR

- Obtaining Your AWS Rekognition Keys

- Installing Amazon’s Python Package

- Having Problems Configuring Your Development Environment?

- Project Structure

- Creating Our Configuration File

- Implementing the Amazon Rekognition OCR Script

- Amazon Rekognition OCR Results

- Summary

Text Detection and OCR with Amazon Rekognition API

In this tutorial, you will:

- Learn about the Amazon Rekognition API

- Discover how the Amazon Rekognition API can be used for OCR

- Obtain your Amazon Web Services (AWS) Rekognition Keys

- Install Amazon’s

boto3package to interface with the OCR API - Implement a Python script that interfaces with Amazon Rekognition API to OCR an image

This lesson is part 1 in a 3-part series on Text Detection and OCR:

- Text Detection and OCR with Amazon Rekognition API (today’s tutorial)

- Text Detection and OCR with Microsoft Cognitive Services

- Text Detection and OCR with Google Cloud Vision API

To learn about text detection and OCR, just keep reading.

Text Detection and OCR with Amazon Rekognition API

So far, we’ve primarily focused on using the Tesseract OCR engine. However, other optical character recognition (OCR) engines are available, some of which are far more accurate than Tesseract and capable of accurately OCR’ing text, even in complex, unconstrained conditions.

Typically, these OCR engines live in the cloud. Many are proprietary-based. To keep these models and associated datasets proprietary, the companies do not distribute the models themselves and instead put them behind a REST API.

While these models do tend to be more accurate than Tesseract, there are some downsides, including:

- An internet connection is required to OCR images — that’s less of an issue for most laptops/desktops, but if you’re working on the edge, an internet connection may not be possible

- Additionally, if you are working with edge devices, then you may not want to spend the power draw on a network connection

- There will be latency introduced by the network connection

- OCR results will take longer because the image needs to be packaged into an API request and uploaded to the OCR API. The API will need to chew on the image and OCR it, and then finally return the results to the client

- Due to the latency and amount of time it will take to OCR each image, it’s doubtful that these OCR APIs will be able to run in real-time

- They cost money (but typically offer a free trial or are free up to a number of monthly API requests)

Looking at the previous list, you may wonder why on earth would we cover these APIs at all — what is the benefit?

As you’ll see, the primary benefit here is accuracy. So first, consider the amount of data that Google and Microsoft have from running their respective search engines. Then consider the amount of data Amazon generates daily from simply printing shipping labels.

These companies have an incredible amount of image data — and when they train their novel, state-of-the-art OCR models on their data, the result is an incredibly robust and accurate OCR model.

In this tutorial, you’ll learn how to use the Amazon Rekognition API to OCR images. In upcoming tutorials, we will cover Microsoft Azure Cognitive Services and Google Cloud Vision API.

Configuring Your Development Environment

To follow this guide, you need to have the OpenCV library installed on your system.

Luckily, OpenCV is pip-installable:

$ pip install boto3

If you need help configuring your development environment for OpenCV, we highly recommend that you read our pip install OpenCV guide — it will have you up and running in a matter of minutes.

Amazon Rekognition API for OCR

The first part of this tutorial will focus on obtaining your AWS Rekognition Keys. These keys will include a public access key and a secret key, similar to SSH, SFTP, etc.

We’ll then show you how to install boto3, the Amazon Web Services (AWS) software development kit (SDK) for Python. Finally, we’ll use the boto3 package to interface with Amazon Rekognition OCR API.

Next, we’ll implement our Python configuration file (which will store our access key, secret key, and AWS region) and then create our driver script used to:

- Load an input image from disk

- Package it into an API request

- Send the API request to AWS Rekognition for OCR

- Retrieve the results from the API call

- Display our OCR results

We’ll wrap up this tutorial with a discussion of our results.

Obtaining Your AWS Rekognition Keys

You will need additional information from our companion site for instructions on obtaining your AWS Rekognition keys. You can find the instructions here.

Installing Amazon’s Python Package

To interface with the Amazon Rekognition API, we need to use the boto3 package: the AWS SDK. Luckily, boto3 is incredibly simple to install, requiring only a single pip-install command:

$ pip install boto3

If you are using a Python virtual environment or an Anaconda environment, be sure to use the appropriate command to access your Python environment before running the above command (otherwise, boto3 will be installed in the system install of Python).

Having Problems Configuring Your Development Environment?

All that said, are you:

- Short on time?

- Learning on your employer’s administratively locked system?

- Wanting to skip the hassle of fighting with the command line, package managers, and virtual environments?

- Ready to run the code right now on your Windows, macOS, or Linux system?

Then join PyImageSearch University today!

Gain access to Jupyter Notebooks for this tutorial and other PyImageSearch guides that are pre-configured to run on Google Colab’s ecosystem right in your web browser! No installation required.

And best of all, these Jupyter Notebooks will run on Windows, macOS, and Linux!

Project Structure

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

Before we can perform text detection and OCR with Amazon Rekognition API, we first need to review our project directory structure.

|-- config | |-- __init__.py | |-- aws_config.py |-- images | |-- aircraft.png | |-- challenging.png | |-- park.png | |-- street_signs.png |-- amazon_ocr.py

The aws_config.py file contains our AWS access key, secret key, and region. You learned how to obtain these values in the Obtaining Your AWS Rekognition Keys section.

Our amazon_ocr.py script will take this aws_config, connect to the Amazon Rekognition API, and then perform text detection and OCR to each image in our images directory.

Creating Our Configuration File

To connect to the Amazon Rekognition API, we first need to supply our access key, secret key, and region. If you haven’t yet obtained these keys, go to the Obtaining Your AWS Rekognition Keys section and be sure to follow the steps and note the values.

Afterward, you can come back here, open aws_config.py, and update the code:

# define our AWS Access Key, Secret Key, and Region ACCESS_KEY = "YOUR_ACCESS_KEY" SECRET_KEY = "YOUR_SECRET_KEY" REGION = "YOUR_AWS_REGION"

Sharing my API keys would be a security breach, so I’ve left placeholder values here. Be sure to update them with your API keys; otherwise, you will be unable to connect to the Amazon Rekognition API.

Implementing the Amazon Rekognition OCR Script

With our aws_config implemented, let’s move on to the amazon_ocr.py script, which is responsible for:

- Connecting to the Amazon Rekognition API

- Loading an input image from disk

- Packaging the image in an API request

- Sending the package to Amazon Rekognition API for OCR

- Obtaining the OCR results from Amazon Rekognition API

- Displaying our output text detection and OCR results

Let’s get started with our implementation:

# import the necessary packages from config import aws_config as config import argparse import boto3 import cv2

Lines 2-5 import our required Python packages. Notably, we need our aws_config, along with boto3, which is Amazon’s Python package, to interface with their API.

Let’s now define draw_ocr_results, a simple Python utility used to draw the output OCR results from Amazon Rekognition API:

def draw_ocr_results(image, text, poly, color=(0, 255, 0)): # unpack the bounding box, taking care to scale the coordinates # relative to the input image size (h, w) = image.shape[:2] tlX = int(poly[0]["X"] * w) tlY = int(poly[0]["Y"] * h) trX = int(poly[1]["X"] * w) trY = int(poly[1]["Y"] * h) brX = int(poly[2]["X"] * w) brY = int(poly[2]["Y"] * h) blX = int(poly[3]["X"] * w) blY = int(poly[3]["Y"] * h)

The draw_ocr_results function accepts four parameters:

image: The input image that we’re drawing the OCR’d text ontext: The OCR’d text itselfpoly: The polygon object/coordinates of the text bounding box returned by Amazon Rekognition APIcolor: The color of the bounding box

Line 10 grabs the width and height of the image we’re drawing on. Lines 11-18 then grab the bounding box coordinates of the text ROI, taking care to scale the coordinates by the width and height.

Why do we perform this scaling process?

Well, as we’ll find out later in this tutorial, Amazon Rekognition API returns bounding boxes in the range [0, 1]. Multiplying the bounding boxes by the original image width and height brings the bounding boxes back to the original image scale.

From there, we can now annotate the image:

# build a list of points and use it to construct each vertex

# of the bounding box

pts = ((tlX, tlY), (trX, trY), (brX, brY), (blX, blY))

topLeft = pts[0]

topRight = pts[1]

bottomRight = pts[2]

bottomLeft = pts[3]

# draw the bounding box of the detected text

cv2.line(image, topLeft, topRight, color, 2)

cv2.line(image, topRight, bottomRight, color, 2)

cv2.line(image, bottomRight, bottomLeft, color, 2)

cv2.line(image, bottomLeft, topLeft, color, 2)

# draw the text itself

cv2.putText(image, text, (topLeft[0], topLeft[1] - 10),

cv2.FONT_HERSHEY_SIMPLEX, 0.8, color, 2)

# return the output image

return image

Lines 22-26 build a list of points corresponding to each vertex of the bounding box. Given the vertices, Lines 29-32 draw the bounding box of the rotated text. Lines 35 and 36 draw the OCR’d text itself.

We then return the annotated output image to the calling function.

With our helper utility defined, let’s move on to command line arguments:

# construct the argument parser and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", required=True,

help="path to input image that we'll submit to AWS Rekognition")

ap.add_argument("-t", "--type", type=str, default="line",

choices=["line", "word"],

help="output text type (either 'line' or 'word')")

args = vars(ap.parse_args())

The --image command line argument corresponds to the path to the input image we want to submit to the Amazon Rekognition OCR API.

The --type argument can be either line or word, indicating whether or not we want the Amazon Rekognition API to return OCR’d results grouped into lines or as individual words.

Next, let’s connect to Amazon Web Services:

# connect to AWS so we can use the Amazon Rekognition OCR API

client = boto3.client(

"rekognition",

aws_access_key_id=config.ACCESS_KEY,

aws_secret_access_key=config.SECRET_KEY,

region_name=config.REGION)

# load the input image as a raw binary file and make a request to

# the Amazon Rekognition OCR API

print("[INFO] making request to AWS Rekognition API...")

image = open(args["image"], "rb").read()

response = client.detect_text(Image={"Bytes": image})

# grab the text detection results from the API and load the input

# image again, this time in OpenCV format

detections = response["TextDetections"]

image = cv2.imread(args["image"])

# make a copy of the input image for final output

final = image.copy()

Lines 51-55 connect to AWS. Here we supply our access key, secret key, and region.

Once connected, we load our input image from disk as a binary object (Line 60) and then submit it to AWS by calling the detect_text function and supplying our image.

Calling detect_text results in a response from the Amazon Rekognition API. We then grab the TextDetections results (Line 65).

Line 66 loads the input --image from disk in OpenCV format, while Line 69 clones the image to draw on it.

We can now loop over the text detection bounding boxes from the Amazon Rekognition API:

# loop over the text detection bounding boxes

for detection in detections:

# extract the OCR'd text, text type, and bounding box coordinates

text = detection["DetectedText"]

textType = detection["Type"]

poly = detection["Geometry"]["Polygon"]

# only draw show the output of the OCR process if we are looking

# at the correct text type

if args["type"] == textType.lower():

# draw the output OCR line-by-line

output = image.copy()

output = draw_ocr_results(output, text, poly)

final = draw_ocr_results(final, text, poly)

# show the output OCR'd line

print(text)

cv2.imshow("Output", output)

cv2.waitKey(0)

# show the final output image

cv2.imshow("Final Output", final)

cv2.waitKey(0)

Line 72 loops over all detections returned by Amazon’s OCR API. We then extract the OCR’d text, textType (either “word” or “line”), along with the bounding box coordinates of the OCR’d text (Lines 74 and 75).

Line 80 makes a check to verify whether we are looking at either word or line OCR’d text. If the current textType matches our --type command line argument, we call our draw_ocr_results function on both the output image and our final cloned image (Lines 82-84).

Lines 87-89 display the current OCR’d line or word on our terminal and screen. That way, we can easily visualize each line or word without the output image becoming too cluttered.

Finally, Lines 92 and 93 show the result of drawing all text on our screen at once (for visualization purposes).

Amazon Rekognition OCR Results

Congrats on implementing a Python script to interface with Amazon Rekognition’s OCR API!

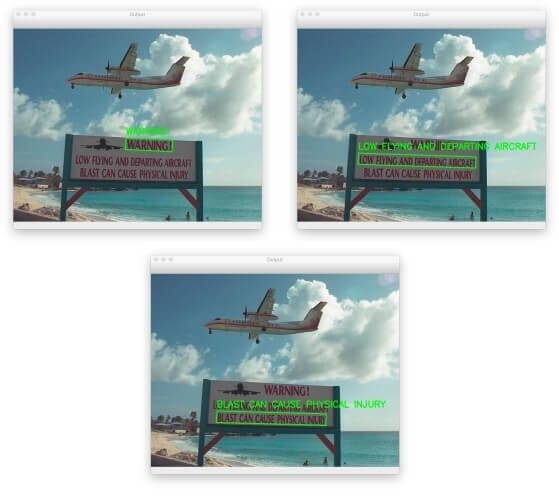

Let’s see our results in action, first by OCR’ing the entire image, line-by-line:

$ python amazon_ocr.py --image images/aircraft.png [INFO] making request to AWS Rekognition API... WARNING! LOW FLYING AND DEPARTING AIRCRAFT BLAST CAN CAUSE PHYSICAL INJURY

Figure 2 shows that we have OCR’d our input aircraft.png image line-by-line successfully, thereby demonstrating that the Amazon Rekognition API was able to:

- Locate each block of text in the input image

- OCR each text ROI

- Group the blocks of text into lines

But what if we wanted to obtain our OCR results at the word level instead of the line level?

That’s as simple as supplying the --type command line argument:

$ python amazon_ocr.py --image images/aircraft.png --type word [INFO] making request to AWS Rekognition API... WARNING! LOW FLYING AND DEPARTING AIRCRAFT BLAST CAN CAUSE PHYSICAL INJURY

As our output and Figure 3 show, we’re now OCR’ing text at the word level.

I’m a big fan of Amazon Rekognition’s OCR API. While AWS, EC2, etc., do have a bit of a learning curve, the benefit is that once you know it, you then understand Amazon’s entire web services ecosystem, which makes it far easier to start integrating different services.

I also appreciate that it’s super easy to get started with Amazon Rekognition API. Other services (e.g., Google Cloud Vision API) make it a bit harder to get that “first easy win.” If this is your first foray into using cloud service APIs, definitely consider using Amazon Rekognition API first before moving on to Microsoft Cognitive Services or the Google Cloud Vision API.

What's next? We recommend PyImageSearch University.

86+ total classes • 115+ hours hours of on-demand code walkthrough videos • Last updated: July 2025

★★★★★ 4.84 (128 Ratings) • 16,000+ Students Enrolled

I strongly believe that if you had the right teacher you could master computer vision and deep learning.

Do you think learning computer vision and deep learning has to be time-consuming, overwhelming, and complicated? Or has to involve complex mathematics and equations? Or requires a degree in computer science?

That’s not the case.

All you need to master computer vision and deep learning is for someone to explain things to you in simple, intuitive terms. And that’s exactly what I do. My mission is to change education and how complex Artificial Intelligence topics are taught.

If you're serious about learning computer vision, your next stop should be PyImageSearch University, the most comprehensive computer vision, deep learning, and OpenCV course online today. Here you’ll learn how to successfully and confidently apply computer vision to your work, research, and projects. Join me in computer vision mastery.

Inside PyImageSearch University you'll find:

- ✓ 86+ courses on essential computer vision, deep learning, and OpenCV topics

- ✓ 86 Certificates of Completion

- ✓ 115+ hours hours of on-demand video

- ✓ Brand new courses released regularly, ensuring you can keep up with state-of-the-art techniques

- ✓ Pre-configured Jupyter Notebooks in Google Colab

- ✓ Run all code examples in your web browser — works on Windows, macOS, and Linux (no dev environment configuration required!)

- ✓ Access to centralized code repos for all 540+ tutorials on PyImageSearch

- ✓ Easy one-click downloads for code, datasets, pre-trained models, etc.

- ✓ Access on mobile, laptop, desktop, etc.

Summary

In this tutorial, you learned how to create your Amazon Rekognition keys, install boto3, a Python package used to interface with AWS, and implement a Python script to make calls to the Amazon Rekognition API.

The Python script was simple, requiring less than 100 lines to implement (including comments).

Not only were our Amazon Rekognition OCR API results correct, but we could also parse the results at both the line and word level, giving us finer granularity than what the EAST text detection model and Tesseract OCR engine give us (at least without fine-tuning several options).

In the next lesson, we’ll look at the Microsoft Cognitive Services API for OCR.

Citation Information

Rosebrock, A. “Text Detection and OCR with Amazon Rekognition API,” PyImageSearch, D. Chakraborty, P. Chugh, A. R. Gosthipaty, S. Huot, K. Kidriavsteva, R. Raha, and A. Thanki, eds., 2022, https://pyimg.co/po6tf

@incollection{Rosebrock_2022_OCR_Amazon_Rekognition_API,

author = {Adrian Rosebrock},

title = {Text Detection and OCR with Amazon Rekognition API},

booktitle = {PyImageSearch},

editor = {Devjyoti Chakraborty and Puneet Chugh and Aritra Roy Gosthipaty and Susan Huot and Kseniia Kidriavsteva and Ritwik Raha and Abhishek Thanki},

year = {2022},

note = {https://pyimg.co/po6tf},

}

Unleash the potential of computer vision with Roboflow - Free!

- Step into the realm of the future by signing up or logging into your Roboflow account. Unlock a wealth of innovative dataset libraries and revolutionize your computer vision operations.

- Jumpstart your journey by choosing from our broad array of datasets, or benefit from PyimageSearch’s comprehensive library, crafted to cater to a wide range of requirements.

- Transfer your data to Roboflow in any of the 40+ compatible formats. Leverage cutting-edge model architectures for training, and deploy seamlessly across diverse platforms, including API, NVIDIA, browser, iOS, and beyond. Integrate our platform effortlessly with your applications or your favorite third-party tools.

- Equip yourself with the ability to train a potent computer vision model in a mere afternoon. With a few images, you can import data from any source via API, annotate images using our superior cloud-hosted tool, kickstart model training with a single click, and deploy the model via a hosted API endpoint. Tailor your process by opting for a code-centric approach, leveraging our intuitive, cloud-based UI, or combining both to fit your unique needs.

- Embark on your journey today with absolutely no credit card required. Step into the future with Roboflow.

To download the source code to this post (and be notified when future tutorials are published here on PyImageSearch), simply enter your email address in the form below!

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!

Comment section

Hey, Adrian Rosebrock here, author and creator of PyImageSearch. While I love hearing from readers, a couple years ago I made the tough decision to no longer offer 1:1 help over blog post comments.

At the time I was receiving 200+ emails per day and another 100+ blog post comments. I simply did not have the time to moderate and respond to them all, and the sheer volume of requests was taking a toll on me.

Instead, my goal is to do the most good for the computer vision, deep learning, and OpenCV community at large by focusing my time on authoring high-quality blog posts, tutorials, and books/courses.

If you need help learning computer vision and deep learning, I suggest you refer to my full catalog of books and courses — they have helped tens of thousands of developers, students, and researchers just like yourself learn Computer Vision, Deep Learning, and OpenCV.

Click here to browse my full catalog.