Last updated on July 8, 2021.

In this tutorial you will learn how to build a “people counter” with OpenCV and Python. Using OpenCV, we’ll count the number of people who are heading “in” or “out” of a department store in real-time.

Building a person counter with OpenCV has been one of the most-requested topics here on PyImageSearch, and I’ve been meaning to do a blog post on people counting for a year now. I’m incredibly thrilled to be publishing it and sharing it with you today.

Enjoy the tutorial and let me know what you think in the comments section at the bottom of the post!

A dataset of videos with people moving around is crucial for a people counter. It allows the model to learn to detect and track people across frames, thereby counting the number of people accurately.

To get started building a people counter with OpenCV, just keep reading!

- Update July 2021: Added section on how to improve the efficiency, speed, and FPS throughput rate of the people counter by using multi-object tracking spread across multiple processes/cores.

OpenCV People Counter with Python

In the first part of today’s blog post, we’ll be discussing the required Python packages you’ll need to build our people counter.

From there I’ll provide a brief discussion on the difference between object detection and object tracking, along with how we can leverage both to create a more accurate people counter.

Afterwards, we’ll review the directory structure for the project and then implement the entire person counting project.

Finally, we’ll examine the results of applying people counting with OpenCV to actual videos.

Required Python libraries for people counting

In order to build our people counting application, we’ll need a number of different Python libraries, including NumPy, OpenCV, dlib, and imutils.

Additionally, you’ll also want to access the “Downloads” section of this blog post to retrieve my source code which includes:

- My special pyimagesearch module which we’ll implement and use later in this post

- The Python driver script used to start the people counter

- All example videos used here in the post

I’m going to assume you already have NumPy, OpenCV, and dlib installed on your system.

If you don’t have OpenCV installed, you’ll want to head to my OpenCV install page and follow the relevant tutorial for your particular operating system.

If you need to install dlib, you can use this guide.

Finally, you can install/upgrade your imutils via the following command:

$ pip install --upgrade imutils

Understanding object detection vs. object tracking

There is a fundamental difference between object detection and object tracking that you must understand before we proceed with the rest of this tutorial.

When we apply object detection we are determining where in an image/frame an object is. An object detector is also typically more computationally expensive, and therefore slower, than an object tracking algorithm. Examples of object detection algorithms include Haar cascades, HOG + Linear SVM, and deep learning-based object detectors such as Faster R-CNNs, YOLO, and Single Shot Detectors (SSDs).

An object tracker, on the other hand, will accept the input (x, y)-coordinates of where an object is in an image and will:

- Assign a unique ID to that particular object

- Track the object as it moves around a video stream, predicting the new object location in the next frame based on various attributes of the frame (gradient, optical flow, etc.)

Examples of object tracking algorithms include MedianFlow, MOSSE, GOTURN, kernelized correlation filters, and discriminative correlation filters, to name a few.

If you’re interested in learning more about the object tracking algorithms built into OpenCV, be sure to refer to this blog post.

Combining both object detection and object tracking

Highly accurate object trackers will combine the concept of object detection and object tracking into a single algorithm, typically divided into two phases:

- Phase 1 — Detecting: During the detection phase we are running our computationally more expensive object detector to (1) detect if new objects have entered our view, and (2) see if we can find objects that were “lost” during the tracking phase. For each detected object we create or update an object tracker with the new bounding box coordinates. Since our object detector is more computationally expensive we only run this phase once every N frames.

- Phase 2 — Tracking: When we are not in the “detecting” phase we are in the “tracking” phase. For each of our detected objects, we create an object tracker to track the object as it moves around the frame. Our object tracker should be faster and more efficient than the object detector. We’ll continue tracking until we’ve reached the N-th frame and then re-run our object detector. The entire process then repeats.

The benefit of this hybrid approach is that we can apply highly accurate object detection methods without as much of the computational burden. We will be implementing such a tracking system to build our people counter.
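The alternation between the two phases comes down to a modulo check on the frame index. Here is a minimal sketch of that idea, assuming a hypothetical helper named `phase_for_frame` (it is not part of the downloadable code) and a small N so the pattern is easy to see:

```python
def phase_for_frame(frame_index, skip_frames=30):
    """Return the phase a frame falls into (hypothetical helper)."""
    # every N-th frame (0, N, 2N, ...) runs the expensive detector;
    # all frames in between rely on the cheaper object trackers
    return "detect" if frame_index % skip_frames == 0 else "track"


# with N=5 and twelve frames, only frames 0, 5, and 10 run detection
phases = [phase_for_frame(i, skip_frames=5) for i in range(12)]
detect_frames = [i for (i, p) in enumerate(phases) if p == "detect"]
```

A larger N means fewer detector runs and a higher frame rate, at the cost of reacting more slowly to people who enter the view between detections.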

Project structure

Let’s review the project structure for today’s blog post. Once you’ve grabbed the code from the “Downloads” section, you can inspect the directory structure with the tree command:

$ tree --dirsfirst
.
├── pyimagesearch
│   ├── __init__.py
│   ├── centroidtracker.py
│   └── trackableobject.py
├── mobilenet_ssd
│   ├── MobileNetSSD_deploy.caffemodel
│   └── MobileNetSSD_deploy.prototxt
├── videos
│   ├── example_01.mp4
│   └── example_02.mp4
├── output
│   ├── output_01.avi
│   └── output_02.avi
└── people_counter.py

4 directories, 10 files

Zeroing in on the two most important directories, we have:

- pyimagesearch/: This module contains the centroid tracking algorithm. The algorithm is covered in the “Combining object tracking algorithms” section, but the code is not. For a review of the centroid tracking code (centroidtracker.py) you should refer to the first post in the series.

- mobilenet_ssd/: Contains the Caffe deep learning model files. We’ll be using a MobileNet Single Shot Detector (SSD) which is covered at the top of this blog post in the section, “Single Shot Detectors for object detection”.

The heart of today’s project is contained within the people_counter.py script — that’s where we’ll spend most of our time. We’ll also review the trackableobject.py script today.

Combining object tracking algorithms

To implement our people counter we’ll be using both OpenCV and dlib. We’ll use OpenCV for standard computer vision/image processing functions, along with the deep learning object detector for people counting.

We’ll then use dlib for its implementation of correlation filters. We could use OpenCV here as well; however, the dlib object tracking implementation was a bit easier to work with for this project.

I’ll be including a deep dive into dlib’s object tracking algorithm in next week’s post.

Along with dlib’s object tracking implementation, we’ll also be using my implementation of centroid tracking from a few weeks ago. Reviewing the entire centroid tracking algorithm is outside the scope of this blog post, but I’ve included a brief overview below.

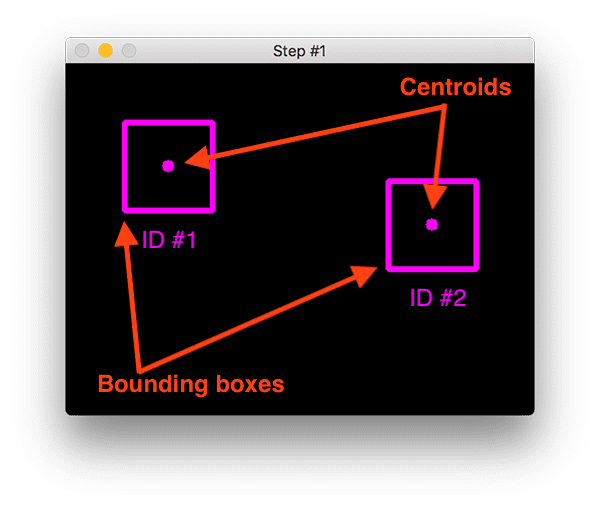

At Step #1 we accept a set of bounding boxes and compute their corresponding centroids (i.e., the center of the bounding boxes):

The bounding boxes themselves can be provided by either:

- An object detector (such as HOG + Linear SVM, Faster R-CNN, SSDs, etc.)

- Or an object tracker (such as correlation filters)

In the above image you can see that we have two objects to track in this initial iteration of the algorithm.
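Step #1 is simple enough to sketch in a few lines. The helper name `compute_centroid` and the two example boxes below are made up for illustration; the boxes use the same (startX, startY, endX, endY) ordering as the rest of this post:

```python
def compute_centroid(box):
    """Return the integer center (cX, cY) of a bounding box."""
    (startX, startY, endX, endY) = box
    cX = int((startX + endX) / 2.0)
    cY = int((startY + endY) / 2.0)
    return (cX, cY)


# two hypothetical bounding boxes, one per object in the frame
boxes = [(10, 20, 50, 80), (100, 40, 160, 120)]
centroids = [compute_centroid(b) for b in boxes]
```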

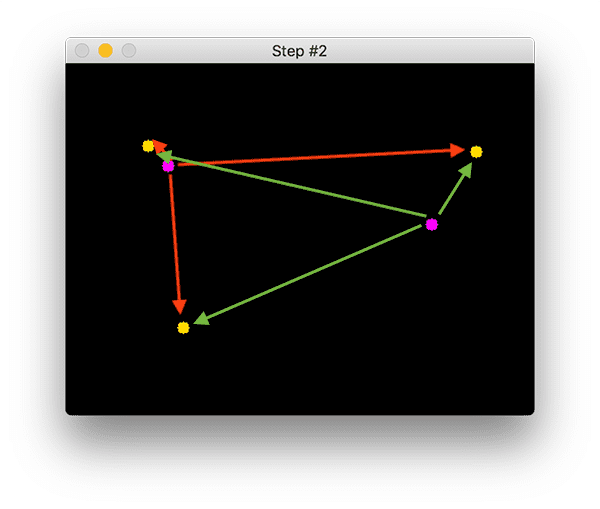

During Step #2 we compute the Euclidean distance between any new centroids (yellow) and existing centroids (purple):

The centroid tracking algorithm makes the assumption that pairs of centroids with minimum Euclidean distance between them must be the same object ID.

In the example image above we have two existing centroids (purple) and three new centroids (yellow), implying that a new object has been detected (since there is one more new centroid vs. old centroid).

The arrows then represent computing the Euclidean distances between all purple centroids and all yellow centroids.
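To make the distance computation concrete, here is a small sketch that builds the full table of distances between two existing centroids and three new ones. The coordinates are invented for the example, and plain Python lists are used instead of whatever the actual tracker implementation relies on:

```python
import math

# hypothetical centroids: two already tracked, three newly computed
existing = [(30, 50), (130, 80)]
new = [(33, 54), (128, 85), (20, 200)]

# D[r][c] holds the Euclidean distance between existing centroid r
# and new centroid c, so D has one row per existing object
D = [[math.hypot(e[0] - n[0], e[1] - n[1]) for n in new]
     for e in existing]

# for each existing centroid, find the index of its closest new centroid
closest = [row.index(min(row)) for row in D]
```

Each row of `D` corresponds to one set of arrows in the figure: every existing centroid is compared against every new centroid.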

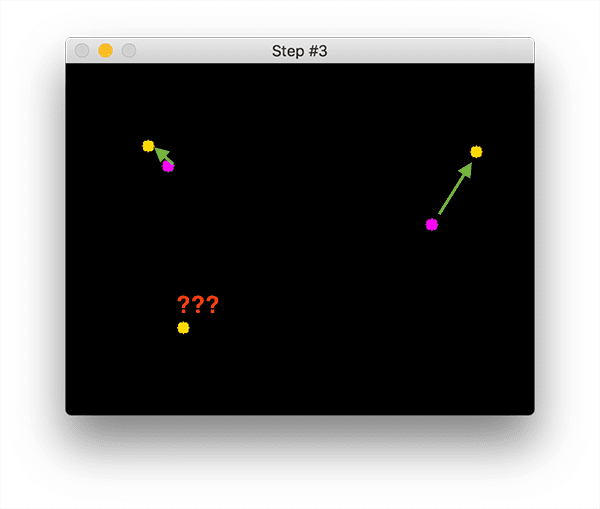

Once we have the Euclidean distances we attempt to associate object IDs in Step #3:

In Figure 3 you can see that our centroid tracker has chosen to associate centroids that minimize their respective Euclidean distances.

But what about the point in the bottom-left?

It didn’t get associated with anything — what do we do?

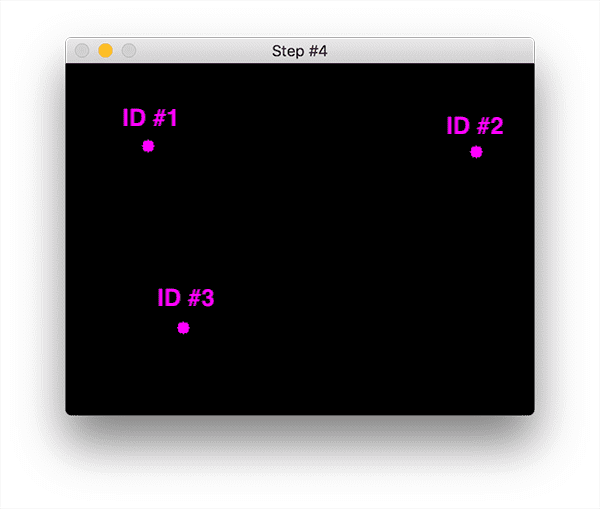

To answer that question we need to perform Step #4, registering new objects:

Registering simply means that we are adding the new object to our list of tracked objects by:

- Assigning it a new object ID

- Storing the centroid of the bounding box coordinates for the new object
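Steps #2 through #4 can be tied together in one short function. The sketch below is a simplified greedy matcher written for this explanation, not the code from centroidtracker.py: the function name `associate`, its arguments, and the example coordinates are all assumptions.

```python
import math


def associate(objects, new_centroids, next_id):
    """Match new centroids to tracked objects and register leftovers.

    objects maps objectID -> centroid; returns (updated dict, next_id).
    """
    # build every (distance, objectID, new index) pair, closest first
    pairs = sorted(
        (math.hypot(objects[oid][0] - c[0], objects[oid][1] - c[1]), oid, j)
        for oid in objects
        for (j, c) in enumerate(new_centroids))

    updated = dict(objects)
    used_ids, used_cols = set(), set()

    # greedily accept the closest pairs, using each object and each
    # new centroid at most once
    for (_, oid, j) in pairs:
        if oid in used_ids or j in used_cols:
            continue
        updated[oid] = new_centroids[j]
        used_ids.add(oid)
        used_cols.add(j)

    # any new centroid left unmatched is registered under a fresh ID
    for (j, c) in enumerate(new_centroids):
        if j not in used_cols:
            updated[next_id] = c
            next_id += 1

    return (updated, next_id)


tracked = {0: (30, 50), 1: (130, 80)}
new = [(33, 54), (128, 85), (20, 200)]
(tracked, next_id) = associate(tracked, new, next_id=2)
```

In this example objects 0 and 1 keep their IDs and move to their nearest new centroid, while the third point (the one in the bottom-left of the figure) is registered as object 2.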

In the event that an object has been lost or has left the field of view, we can simply deregister the object (Step #5).

Exactly how you handle when an object is “lost” or is “no longer visible” really depends on your exact application, but for our people counter, we will deregister people IDs when they cannot be matched to any existing person objects for 40 consecutive frames.
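One way to implement this rule is to keep a per-object counter of consecutive missed frames. The sketch below is an illustration only: `mark_frame` and `MAX_DISAPPEARED` are hypothetical names, and the choice to deregister once the counter exceeds the limit (rather than when it equals it) is an assumption, so the exact boundary frame may differ from the real tracker.

```python
MAX_DISAPPEARED = 40  # assumed limit on consecutive missed frames


def mark_frame(disappeared, object_id, matched):
    """Update the miss counter; return True if the ID should be dropped."""
    if matched:
        # the object was seen again, so its counter starts over
        disappeared[object_id] = 0
        return False

    disappeared[object_id] = disappeared.get(object_id, 0) + 1
    return disappeared[object_id] > MAX_DISAPPEARED


# simulate object 7 going unmatched for 41 frames in a row
disappeared = {}
results = [mark_frame(disappeared, 7, matched=False) for _ in range(41)]

# a single successful match resets the counter
was_dropped = mark_frame(disappeared, 7, matched=True)
```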

Again, this is only a brief overview of the centroid tracking algorithm.

Note: For a more detailed review, including an explanation of the source code used to implement centroid tracking, be sure to refer to this post.

Creating a “trackable object”

In order to track and count an object in a video stream, we need an easy way to store information regarding the object itself, including:

- Its object ID

- Its previous centroids (so we can easily compute the direction the object is moving)

- Whether or not the object has already been counted

To accomplish all of these goals we can define an instance of TrackableObject — open up the trackableobject.py file and insert the following code:

class TrackableObject:
    def __init__(self, objectID, centroid):
        # store the object ID, then initialize a list of centroids
        # using the current centroid
        self.objectID = objectID
        self.centroids = [centroid]

        # initialize a boolean used to indicate if the object has
        # already been counted or not
        self.counted = False

The TrackableObject constructor accepts an objectID + centroid and stores them. The centroids variable is a list because it will contain an object’s centroid location history.

The constructor also initializes counted as False, indicating that the object has not been counted yet.

Implementing our people counter with OpenCV + Python

With all of our supporting Python helper tools and classes in place, we are now ready to build our OpenCV people counter.

Open up your people_counter.py file and insert the following code:

# import the necessary packages
from pyimagesearch.centroidtracker import CentroidTracker
from pyimagesearch.trackableobject import TrackableObject
from imutils.video import VideoStream
from imutils.video import FPS
import numpy as np
import argparse
import imutils
import time
import dlib
import cv2

We begin by importing our necessary packages:

- From the pyimagesearch module, we import our custom CentroidTracker and TrackableObject classes.

- The VideoStream and FPS modules from imutils.video will help us to work with a webcam and to calculate the estimated Frames Per Second (FPS) throughput rate.

- We need imutils for its OpenCV convenience functions.

- The dlib library will be used for its correlation tracker implementation.

- OpenCV will be used for deep neural network inference, opening video files, writing video files, and displaying output frames to our screen.

Now that all of the tools are at our fingertips, let’s parse command line arguments:

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--prototxt", required=True,
    help="path to Caffe 'deploy' prototxt file")
ap.add_argument("-m", "--model", required=True,
    help="path to Caffe pre-trained model")
ap.add_argument("-i", "--input", type=str,
    help="path to optional input video file")
ap.add_argument("-o", "--output", type=str,
    help="path to optional output video file")
ap.add_argument("-c", "--confidence", type=float, default=0.4,
    help="minimum probability to filter weak detections")
ap.add_argument("-s", "--skip-frames", type=int, default=30,
    help="# of skip frames between detections")
args = vars(ap.parse_args())

We have six command line arguments which allow us to pass information to our people counter script from the terminal at runtime:

- --prototxt: Path to the Caffe “deploy” prototxt file.

- --model: The path to the Caffe pre-trained CNN model.

- --input: Optional input video file path. If no path is specified, your webcam will be utilized.

- --output: Optional output video path. If no path is specified, a video will not be recorded.

- --confidence: With a default value of 0.4, this is the minimum probability threshold which helps to filter out weak detections.

- --skip-frames: The number of frames to skip before running our DNN detector again on the tracked object. Remember, object detection is computationally expensive, but it does help our tracker to reassess objects in the frame. By default we skip 30 frames between detecting objects with the OpenCV DNN module and our CNN single shot detector model.
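One detail that is easy to miss: argparse converts the hyphen in --skip-frames to an underscore, which is why the script later reads args["skip_frames"]. The trimmed-down sketch below reproduces only three of the six arguments and passes an explicit list to parse_args (instead of reading the terminal) so the result is easy to inspect:

```python
import argparse

# a reduced version of the parser with only the optional arguments
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--input", type=str,
    help="path to optional input video file")
ap.add_argument("-c", "--confidence", type=float, default=0.4,
    help="minimum probability to filter weak detections")
ap.add_argument("-s", "--skip-frames", type=int, default=30,
    help="# of skip frames between detections")

# simulate running the script with `--skip-frames 15` and nothing else
args = vars(ap.parse_args(["--skip-frames", "15"]))
```

Arguments that were not supplied and have no default, such as --input here, come back as None, which is what the webcam fallback relies on.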

Now that our script can dynamically handle command line arguments at runtime, let’s prepare our SSD:

# initialize the list of class labels MobileNet SSD was trained to
# detect
CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",
    "bottle", "bus", "car", "cat", "chair", "cow", "diningtable",
    "dog", "horse", "motorbike", "person", "pottedplant", "sheep",
    "sofa", "train", "tvmonitor"]

# load our serialized model from disk
print("[INFO] loading model...")
net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

First, we’ll initialize CLASSES, the list of classes that our SSD supports. This list should not be changed if you’re using the model provided in the “Downloads”. We’re only interested in the “person” class, but you could count other moving objects as well (however, if your “pottedplant”, “sofa”, or “tvmonitor” grows legs and starts moving, you should probably run out of your house screaming rather than worrying about counting them!).

On Line 38 we load our pre-trained MobileNet SSD used to detect objects (but again, we’re just interested in detecting and tracking people, not any other class). To learn more about MobileNet and SSDs, please refer to my previous blog post.

From there we can initialize our video stream:

# if a video path was not supplied, grab a reference to the webcam
if not args.get("input", False):
    print("[INFO] starting video stream...")
    vs = VideoStream(src=0).start()
    time.sleep(2.0)

# otherwise, grab a reference to the video file
else:
    print("[INFO] opening video file...")
    vs = cv2.VideoCapture(args["input"])

First we handle the case where we’re using a webcam video stream (Lines 41-44). Otherwise, we’ll be capturing frames from a video file (Lines 47-49).

We still have a handful of initializations to perform before we begin looping over frames:

# initialize the video writer (we'll instantiate later if need be)
writer = None

# initialize the frame dimensions (we'll set them as soon as we read
# the first frame from the video)
W = None
H = None

# instantiate our centroid tracker, then initialize a list to store
# each of our dlib correlation trackers, followed by a dictionary to
# map each unique object ID to a TrackableObject
ct = CentroidTracker(maxDisappeared=40, maxDistance=50)
trackers = []
trackableObjects = {}

# initialize the total number of frames processed thus far, along
# with the total number of objects that have moved either up or down
totalFrames = 0
totalDown = 0
totalUp = 0

# start the frames per second throughput estimator
fps = FPS().start()

The remaining initializations include:

- writer: Our video writer. We’ll instantiate this object later if we are writing to video.

- W and H: Our frame dimensions. We’ll need to plug these into cv2.VideoWriter.

- ct: Our CentroidTracker. For details on the implementation of CentroidTracker, be sure to refer to my blog post from a few weeks ago.

- trackers: A list to store the dlib correlation trackers. To learn about dlib correlation tracking stay tuned for next week’s post.

- trackableObjects: A dictionary which maps an objectID to a TrackableObject.

- totalFrames: The total number of frames processed.

- totalDown and totalUp: The total number of objects/people that have moved either down or up. These variables measure the actual “people counting” results of the script.

- fps: Our frames per second estimator for benchmarking.

Note: If you get lost in the while loop below, you should refer back to this bulleted listing of important variables.

Now that all of our initializations are taken care of, let’s loop over incoming frames:

# loop over frames from the video stream
while True:
    # grab the next frame and handle if we are reading from either
    # VideoCapture or VideoStream
    frame = vs.read()
    frame = frame[1] if args.get("input", False) else frame

    # if we are viewing a video and we did not grab a frame then we
    # have reached the end of the video
    if args["input"] is not None and frame is None:
        break

    # resize the frame to have a maximum width of 500 pixels (the
    # less data we have, the faster we can process it), then convert
    # the frame from BGR to RGB for dlib
    frame = imutils.resize(frame, width=500)
    rgb = cv2.cvtColor(frame, cv2.COLOR_BGR2RGB)

    # if the frame dimensions are empty, set them
    if W is None or H is None:
        (H, W) = frame.shape[:2]

    # if we are supposed to be writing a video to disk, initialize
    # the writer
    if args["output"] is not None and writer is None:
        fourcc = cv2.VideoWriter_fourcc(*"MJPG")
        writer = cv2.VideoWriter(args["output"], fourcc, 30,
            (W, H), True)

We begin looping on Line 76. At the top of the loop we grab the next frame (Lines 79 and 80). In the event that we’ve reached the end of the video, we’ll break out of the loop (Lines 84 and 85).
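The `frame[1]` indexing at the top of the loop exists because the two frame sources return different things: `cv2.VideoCapture.read()` returns a `(grabbed, frame)` tuple, while the imutils `VideoStream.read()` returns just the frame. The sketch below mimics both behaviors with stand-in classes (`FakeCapture`, `FakeStream`, and `next_frame` are made-up names, and strings stand in for image arrays) so the logic can be followed without a camera or video file:

```python
class FakeCapture:
    """Stand-in for cv2.VideoCapture: read() returns (grabbed, frame)."""

    def __init__(self, frames):
        self.frames = list(frames)

    def read(self):
        if self.frames:
            return (True, self.frames.pop(0))
        # out of frames: signal the end of the video
        return (False, None)


class FakeStream:
    """Stand-in for imutils' VideoStream: read() returns the frame only."""

    def __init__(self, frame):
        self.frame = frame

    def read(self):
        return self.frame


def next_frame(vs, from_file):
    # same unpacking rule as the main loop: index into the tuple only
    # when reading from a video file
    frame = vs.read()
    return frame[1] if from_file else frame


capture = FakeCapture(["frame-0"])
first = next_frame(capture, from_file=True)
second = next_frame(capture, from_file=True)
live = next_frame(FakeStream("live-frame"), from_file=False)
```

Once the file is exhausted the unpacked frame is None, which is exactly the condition the loop checks before breaking.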

Preprocessing the frame takes place on Lines 90 and 91. This includes resizing and swapping color channels as dlib requires an rgb image.

We grab the dimensions of the frame for the video writer (Lines 94 and 95).

From there we’ll instantiate the video writer if an output path was provided via command line argument (Lines 99-102). To learn more about writing video to disk, be sure to refer to this post.

Now let’s detect people using the SSD:

    # initialize the current status along with our list of bounding
    # box rectangles returned by either (1) our object detector or
    # (2) the correlation trackers
    status = "Waiting"
    rects = []

    # check to see if we should run a more computationally expensive
    # object detection method to aid our tracker
    if totalFrames % args["skip_frames"] == 0:
        # set the status and initialize our new set of object trackers
        status = "Detecting"
        trackers = []

        # convert the frame to a blob and pass the blob through the
        # network and obtain the detections
        blob = cv2.dnn.blobFromImage(frame, 0.007843, (W, H), 127.5)
        net.setInput(blob)
        detections = net.forward()

We initialize a status as “Waiting” on Line 107. Possible status states include:

- Waiting: In this state, we’re waiting on people to be detected and tracked.

- Detecting: We’re actively in the process of detecting people using the MobileNet SSD.

- Tracking: People are being tracked in the frame and we’re counting the totalUp and totalDown.

Our rects list will be populated either via detection or tracking. We go ahead and initialize rects on Line 108.

It’s important to understand that deep learning object detectors are very computationally expensive, especially if you are running them on your CPU.

To avoid running our object detector on every frame, and to speed up our tracking pipeline, we’ll skip N frames between detections (set by the command line argument --skip-frames, where 30 is the default). Only on every N-th frame will we exercise our SSD for object detection. Otherwise, we’ll simply be tracking moving objects in-between.

Using the modulo operator on Line 112 we ensure that we’ll only execute the code in the if-statement every N frames.

Assuming we’ve landed on a multiple of skip_frames, we’ll update the status to “Detecting” (Line 114).

Then we initialize our new list of trackers (Line 115).

Next, we’ll perform inference via object detection. We begin by creating a blob from the image, followed by passing the blob through the net to obtain detections (Lines 119-121).
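The two magic numbers passed to `cv2.dnn.blobFromImage` are related: 127.5 is the mean subtracted from each pixel and 0.007843 is roughly 1 / 127.5, the scale factor applied afterwards. Together they map pixel values from [0, 255] to approximately [-1, 1]. The arithmetic can be checked by hand in plain Python (the three sample pixel values are chosen for illustration):

```python
SCALE = 0.007843  # scale factor, approximately 1 / 127.5
MEAN = 127.5      # mean value subtracted from every pixel

# darkest, middle, and brightest possible 8-bit pixel values
pixels = [0.0, 127.5, 255.0]

# same order of operations as the blob: subtract the mean, then scale
normalized = [(p - MEAN) * SCALE for p in pixels]
```

The darkest pixel lands very close to -1, the midpoint at exactly 0, and the brightest very close to +1.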

Now we’ll loop over each of the detections in hopes of finding objects belonging to the “person” class:

        # loop over the detections
        for i in np.arange(0, detections.shape[2]):
            # extract the confidence (i.e., probability) associated
            # with the prediction
            confidence = detections[0, 0, i, 2]

            # filter out weak detections by requiring a minimum
            # confidence
            if confidence > args["confidence"]:
                # extract the index of the class label from the
                # detections list
                idx = int(detections[0, 0, i, 1])

                # if the class label is not a person, ignore it
                if CLASSES[idx] != "person":
                    continue

Looping over detections on Line 124, we proceed to grab the confidence (Line 127) and filter out weak results + those that don’t belong to the “person” class (Lines 131-138).
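To see what this filtering does, it helps to run it on a tiny hand-built array with the same layout the indexing above implies: shape (1, 1, N, 7), where column 1 is the class index, column 2 is the confidence, and columns 3 through 6 are the box coordinates. The three rows below are invented values, and the constant names are mine; 15 is the position of "person" in the CLASSES list.

```python
import numpy as np

PERSON_IDX = 15       # index of "person" in the CLASSES list
MIN_CONFIDENCE = 0.4  # same as the --confidence default

# made-up detections; each row is
# [image_id, class_id, confidence, startX, startY, endX, endY]
detections = np.array([[[
    [0, 15, 0.91, 0.10, 0.20, 0.30, 0.80],  # confident person
    [0, 15, 0.25, 0.50, 0.10, 0.60, 0.70],  # weak person
    [0, 7, 0.88, 0.40, 0.40, 0.90, 0.90],   # confident car
]]])

kept = []
for i in np.arange(0, detections.shape[2]):
    confidence = detections[0, 0, i, 2]
    idx = int(detections[0, 0, i, 1])

    # keep only detections that are both confident and a person
    if confidence > MIN_CONFIDENCE and idx == PERSON_IDX:
        kept.append(int(i))
```

Only the first row survives: the second is a person but too weak, and the third is confident but belongs to the "car" class.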

Now we can compute a bounding box for each person and begin correlation tracking:

                # compute the (x, y)-coordinates of the bounding box
                # for the object
                box = detections[0, 0, i, 3:7] * np.array([W, H, W, H])
                (startX, startY, endX, endY) = box.astype("int")

                # construct a dlib rectangle object from the bounding
                # box coordinates and then start the dlib correlation
                # tracker
                tracker = dlib.correlation_tracker()
                rect = dlib.rectangle(startX, startY, endX, endY)
                tracker.start_track(rgb, rect)

                # add the tracker to our list of trackers so we can
                # utilize it during skip frames
                trackers.append(tracker)

Computing our bounding box takes place on Lines 142 and 143.
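The multiplication by `[W, H, W, H]` works because the network reports box coordinates as fractions of the frame size. Here is the same computation on one invented box, assuming a 500 x 280 frame; the final conversion to plain Python integers is my own cautious addition rather than something the original script does:

```python
import numpy as np

(W, H) = (500, 280)  # assumed frame dimensions after resizing

# hypothetical box in relative coordinates: (startX, startY, endX, endY)
relative_box = np.array([0.125, 0.25, 0.5, 0.75])

# scale x-values by the width and y-values by the height
box = relative_box * np.array([W, H, W, H])

# truncate to whole pixels and convert to built-in ints
(startX, startY, endX, endY) = [int(v) for v in box.astype("int")]
```

Note that `astype("int")` truncates rather than rounds, so 62.5 becomes 62.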

Then we instantiate our dlib correlation tracker on Line 148, followed by passing in the object’s bounding box coordinates to dlib.rectangle, storing the result as rect (Line 149).

Subsequently, we start tracking on Line 150 and append the tracker to the trackers list on Line 154.

That’s a wrap for all operations we do every N skip-frames!

Let’s take care of the typical operations where tracking is taking place in the else block:

    # otherwise, we should utilize our object *trackers* rather than
    # object *detectors* to obtain a higher frame processing throughput
    else:
        # loop over the trackers
        for tracker in trackers:
            # set the status of our system to be 'tracking' rather
            # than 'waiting' or 'detecting'
            status = "Tracking"

            # update the tracker and grab the updated position
            tracker.update(rgb)
            pos = tracker.get_position()

            # unpack the position object
            startX = int(pos.left())
            startY = int(pos.top())
            endX = int(pos.right())
            endY = int(pos.bottom())

            # add the bounding box coordinates to the rectangles list
            rects.append((startX, startY, endX, endY))

Most of the time, we aren’t landing on a skip-frame multiple. During this time, we’ll utilize our trackers to track our object rather than applying detection.

We begin looping over the available trackers on Line 160.

We proceed to update the status to “Tracking” (Line 163) and grab the object position (Lines 166 and 167).

From there we extract the position coordinates (Lines 170-173) followed by populating the information in our rects list.

Now let’s draw a horizontal visualization line (that people must cross in order to be counted) and use the centroid tracker to update our object centroids:

    # draw a horizontal line in the center of the frame -- once an
    # object crosses this line we will determine whether they were
    # moving 'up' or 'down'
    cv2.line(frame, (0, H // 2), (W, H // 2), (0, 255, 255), 2)

    # use the centroid tracker to associate the (1) old object
    # centroids with (2) the newly computed object centroids
    objects = ct.update(rects)

On Line 181 we draw the horizontal line which we’ll be using to visualize people “crossing”. Once people cross this line we’ll increment our respective counters.

Then on Line 185, we utilize our CentroidTracker instantiation to accept the list of rects, regardless of whether they were generated via object detection or object tracking. Our centroid tracker will associate object IDs with object locations.

In this next block, we’ll review the logic which counts if a person has moved up or down through the frame:

    # loop over the tracked objects
    for (objectID, centroid) in objects.items():
        # check to see if a trackable object exists for the current
        # object ID
        to = trackableObjects.get(objectID, None)

        # if there is no existing trackable object, create one
        if to is None:
            to = TrackableObject(objectID, centroid)

        # otherwise, there is a trackable object so we can utilize it
        # to determine direction
        else:
            # the difference between the y-coordinate of the *current*
            # centroid and the mean of *previous* centroids will tell
            # us in which direction the object is moving (negative for
            # 'up' and positive for 'down')
            y = [c[1] for c in to.centroids]
            direction = centroid[1] - np.mean(y)
            to.centroids.append(centroid)

            # check to see if the object has been counted or not
            if not to.counted:
                # if the direction is negative (indicating the object
                # is moving up) AND the centroid is above the center
                # line, count the object
                if direction < 0 and centroid[1] < H // 2:
                    totalUp += 1
                    to.counted = True

                # if the direction is positive (indicating the object
                # is moving down) AND the centroid is below the
                # center line, count the object
                elif direction > 0 and centroid[1] > H // 2:
                    totalDown += 1
                    to.counted = True

        # store the trackable object in our dictionary
        trackableObjects[objectID] = to

We begin by looping over the object IDs and their updated centroids returned by the centroid tracker (Line 188).

On Line 191 we attempt to fetch a TrackableObject for the current objectID.

If the TrackableObject doesn’t exist for the objectID, we create one (Lines 194 and 195).

Otherwise, there is already an existing TrackableObject, so we need to figure out if the object (person) is moving up or down.

To do so, we grab the y-coordinate value for all previous centroid locations for the given object (Line 204). Then we compute the direction by taking the difference between the current centroid location and the mean of all previous centroid locations (Line 205).

The reason we take the mean is to ensure our direction tracking is more stable. If we stored just the previous centroid location for the person we leave ourselves open to the possibility of false direction counting. Keep in mind that object detection and object tracking algorithms are not “magic” — sometimes they will predict bounding boxes that may be slightly off what you may expect; therefore, by taking the mean, we can make our people counter more accurate.
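A quick worked example shows why the mean helps. Suppose a person is steadily walking down the frame, but the latest bounding box jitters upward by a couple of pixels. The y-values below are invented for illustration:

```python
# hypothetical centroid y-history of a person walking *down* the frame
previous_y = [100, 104, 108, 112]

# the newest centroid jitters 2 pixels back up
current_y = 110

# comparing against only the last centroid suggests "up" (negative)
direction_last = current_y - previous_y[-1]

# comparing against the mean of the history still says "down" (positive)
direction_mean = current_y - sum(previous_y) / len(previous_y)
```

Here the single-frame comparison gives -2 (a false “up”), while the mean-based comparison gives +4 (correctly “down”), so one noisy box does not flip the direction.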

If the TrackableObject has not been counted (Line 209), we need to determine if it’s ready to be counted yet (Lines 213-222), by:

- Checking if the direction is negative (indicating the object is moving Up) AND the centroid is Above the centerline. In this case we increment totalUp.

- Or checking if the direction is positive (indicating the object is moving Down) AND the centroid is Below the centerline. If this is true, we increment totalDown.

Finally, we store the TrackableObject in our trackableObjects dictionary (Line 225) so we can grab and update it when the next frame is captured.
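The counting rule itself can be isolated into a small pure function, which makes it easy to check the four possible combinations of direction and position. The function name `classify_crossing` and the 280-pixel frame height are assumptions made for this sketch:

```python
def classify_crossing(direction, centroid_y, frame_height):
    """Return "up", "down", or None according to the counting rule."""
    center = frame_height // 2

    # moving up (negative direction) and already above the center line
    if direction < 0 and centroid_y < center:
        return "up"

    # moving down (positive direction) and already below the center line
    if direction > 0 and centroid_y > center:
        return "down"

    # heading toward the line but not yet past it: do not count
    return None


H = 280  # assumed frame height, so the center line sits at y=140
outcomes = [
    classify_crossing(-5, 120, H),  # above the line, moving up
    classify_crossing(5, 160, H),   # below the line, moving down
    classify_crossing(-5, 160, H),  # below the line, moving up
    classify_crossing(5, 120, H),   # above the line, moving down
]
```

Remember that image y-coordinates grow downward, so “above the line” means a smaller y-value than the center.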

We’re on the home-stretch!

The next three code blocks handle:

- Display (drawing and writing text to the frame)

- Writing frames to a video file on disk (if the --output command line argument is present)

- Capturing keypresses

- Cleanup

First we’ll draw some information on the frame for visualization:

        # draw both the ID of the object and the centroid of the
        # object on the output frame
        text = "ID {}".format(objectID)
        cv2.putText(frame, text, (centroid[0] - 10, centroid[1] - 10),
            cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 255, 0), 2)
        cv2.circle(frame, (centroid[0], centroid[1]), 4, (0, 255, 0), -1)

    # construct a tuple of information we will be displaying on the
    # frame
    info = [
        ("Up", totalUp),
        ("Down", totalDown),
        ("Status", status),
    ]

    # loop over the info tuples and draw them on our frame
    for (i, (k, v)) in enumerate(info):
        text = "{}: {}".format(k, v)
        cv2.putText(frame, text, (10, H - ((i * 20) + 20)),
            cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)

Here we overlay the following data on the frame:

- objectID: Each object’s numerical identifier.

- centroid: The center of the object will be represented by a “dot” which is created by filling in a circle.

- info: Includes totalUp, totalDown, and status.

For a review of drawing operations, be sure to refer to this blog post.

Then we’ll write the frame to a video file (if necessary) and handle keypresses:

    # check to see if we should write the frame to disk
    if writer is not None:
        writer.write(frame)

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the `q` key was pressed, break from the loop
    if key == ord("q"):
        break

    # increment the total number of frames processed thus far and
    # then update the FPS counter
    totalFrames += 1
    fps.update()

In this block we:

- Write the frame, if necessary, to the output video file (Lines 249 and 250)

- Display the frame and handle keypresses (Lines 253-258). If “q” is pressed, we break out of the frame processing loop.

- Update our fps counter (Line 263)

We didn’t make too much of a mess, but now it’s time to clean up:

# stop the timer and display FPS information
fps.stop()
print("[INFO] elapsed time: {:.2f}".format(fps.elapsed()))
print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# check to see if we need to release the video writer pointer
if writer is not None:
    writer.release()

# if we are not using a video file, stop the camera video stream
if not args.get("input", False):
    vs.stop()

# otherwise, release the video file pointer
else:
    vs.release()

# close any open windows
cv2.destroyAllWindows()

To finish out the script, we display the FPS info to the terminal, release all pointers, and close any open windows.

Just 283 lines of code later, we are now done.

People counting results

To see our OpenCV people counter in action, make sure you use the “Downloads” section of this blog post to download the source code and example videos.

From there, open up a terminal and execute the following command:

$ python people_counter.py --prototxt mobilenet_ssd/MobileNetSSD_deploy.prototxt \
    --model mobilenet_ssd/MobileNetSSD_deploy.caffemodel \
    --input videos/example_01.mp4 --output output/output_01.avi
[INFO] loading model...
[INFO] opening video file...
[INFO] elapsed time: 37.27
[INFO] approx. FPS: 34.42

Here you can see that our person counter is counting the number of people who:

- Are entering the department store (down)

- And the number of people who are leaving (up)

At the end of the first video you’ll see there have been 7 people who entered and 3 people who have left.

Furthermore, examining the terminal output you’ll see that our person counter is capable of running in real-time, obtaining 34 FPS throughout. This is despite the fact that we are using a deep learning object detector for more accurate person detections.

Our 34 FPS throughput rate is made possible through our two-phase process of:

- Detecting people once every 30 frames

- And then applying a faster, more efficient object tracking algorithm in all frames in between.
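The two-phase schedule above can be sketched in a few lines. This is only an illustration of the scheduling, assuming a `skip_frames` value of 30; the function name `run_pipeline` is hypothetical, and the detector and tracker calls are left as comments.

```python
def run_pipeline(num_frames, skip_frames=30):
    """Return the phase chosen for each frame index (scheduling only)."""
    phases = []
    for total_frames in range(num_frames):
        if total_frames % skip_frames == 0:
            # expensive phase: run the object detector and (re)create trackers
            phases.append("detect")
        else:
            # cheap phase: only update the existing object trackers
            phases.append("track")
    return phases


phases = run_pipeline(num_frames=90, skip_frames=30)
detections = phases.count("detect")
print("detector ran on {} of {} frames".format(detections, len(phases)))
```

With these values the detector runs on frames 0, 30, and 60 only, so the costly deep learning forward pass is paid on a small fraction of the frames.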

Another example of people counting with OpenCV can be seen below:

$ python people_counter.py --prototxt mobilenet_ssd/MobileNetSSD_deploy.prototxt \
    --model mobilenet_ssd/MobileNetSSD_deploy.caffemodel \
    --input videos/example_02.mp4 --output output/output_02.avi
[INFO] loading model...
[INFO] opening video file...
[INFO] elapsed time: 36.88
[INFO] approx. FPS: 34.79

Here is a full video of the demo:

This time there have been 2 people who have entered the department store and 14 people who have left.

You can see how useful this system would be to a store owner interested in foot traffic analytics.

The same type of system for counting foot traffic with OpenCV can be used to count automobile traffic with OpenCV and I hope to cover that topic in a future blog post.

Additionally, a big thank you to David McDuffee for recording the example videos used here today! David works here with me at PyImageSearch and if you’ve ever emailed PyImageSearch before, you have very likely interacted with him. Thank you for making this post possible, David! Also a thank you to BenSound for providing the music for the video demos included in this post.

Improving our people counter application

In order to build our OpenCV people counter we utilized dlib’s correlation tracker. This method is easy to use and requires very little code.

However, our implementation is a bit inefficient — in order to track multiple objects we need to create multiple instances of the correlation tracker object. And then when we need to compute the location of the object in subsequent frames, we need to loop over all N object trackers and grab the updated position.

All of this computation would take place in the main execution thread of our script which thereby slows down our FPS rate.

An easy way to improve performance would therefore be to use multi-object tracking with dlib. That tutorial covers how to use multiprocessing and queues such that our FPS rate improves by 45%!

Note: OpenCV also implements multi-object tracking, but not with multiple processes (at least at the time of this writing). OpenCV’s multi-object method is certainly far easier to use, but without the multiprocessing capability, it doesn’t help much in this instance.
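As a rough sketch of the multiprocessing idea, assuming one worker process per tracked object with an input queue for frames and an output queue for updated boxes: `DummyTracker`, `tracker_worker`, and `start_tracker_process` are hypothetical names, and the dummy tracker simply shifts its box so the example runs without dlib. It is not the code from the linked tutorial.

```python
import multiprocessing
import queue


class DummyTracker:
    """Placeholder for a real tracker (e.g. a correlation tracker)."""

    def __init__(self, box, step=5):
        self.box = box
        self.step = step

    def update(self, frame):
        # pretend the object moved `step` pixels down; a real tracker
        # would search `frame` for the object instead
        x1, y1, x2, y2 = self.box
        self.box = (x1, y1 + self.step, x2, y2 + self.step)
        return self.box


def tracker_worker(box, label, input_queue, output_queue):
    """Own one tracker and update it for every frame until None arrives."""
    tracker = DummyTracker(box)
    while True:
        frame = input_queue.get()
        if frame is None:  # sentinel value: shut the worker down
            break
        output_queue.put((label, tracker.update(frame)))


def start_tracker_process(box, label):
    """Spawn one worker process for one object and return its queues."""
    input_queue = multiprocessing.Queue()
    output_queue = multiprocessing.Queue()
    process = multiprocessing.Process(
        target=tracker_worker,
        args=(box, label, input_queue, output_queue),
        daemon=True,
    )
    process.start()
    return process, input_queue, output_queue


# The worker logic can be exercised in-process with plain queues.
in_q, out_q = queue.Queue(), queue.Queue()
for item in ("frame0", "frame1", None):
    in_q.put(item)
tracker_worker((0, 0, 10, 10), "person", in_q, out_q)
results = [out_q.get(), out_q.get()]
print(results)
```

In a real script, `start_tracker_process` would be called once per detected person (under an `if __name__ == "__main__":` guard), and the main loop would put each new frame on every input queue and then read one result from every output queue.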

Finally, for even higher tracking accuracy (but at the expense of speed without a fast GPU), you can look into deep learning-based object trackers, such as Deep SORT, introduced by Wojke et al. in their paper, Simple Online and Realtime Tracking with a Deep Association Metric.

This method is very popular for deep learning-based object tracking and has been implemented in multiple Python libraries. I would suggest starting with this implementation.

Summary

In today’s blog post we learned how to build a people counter using OpenCV and Python.

Our implementation:

- Runs in real-time on a standard CPU

- Utilizes deep learning object detectors for improved person detection accuracy

- Leverages two separate object tracking algorithms, centroid tracking and correlation filters, for improved tracking accuracy

- Applies both a “detection” and “tracking” phase, making it capable of (1) detecting new people and (2) picking up people that may have been “lost” during the tracking phase

I hope you enjoyed today’s post on people counting with OpenCV!

To download the code to this blog post (and apply people counting to your own projects), just enter your email address in the form below!

Hi Adrian! The tutorial is really great and very helpful to me. However, I was wondering whether this kind of people counting can be implemented on a Raspberry Pi 3?

If you want to use just the Raspberry Pi you need to use a more efficient object detection routine. Possible methods may include:

1. Background subtraction, such as the method used in this post.

2. Haar cascades (which are less accurate, but faster than DL-based object detectors)

3. Leveraging something like the Movidius NCS to help you reach a faster FPS throughput

Additionally, for your object tracking you may want to look into using MOSSE (covered in this post) which is faster than correlation filters. Another option could be to explore using Kalman filters.

I hope that helps!

Thank you so much! Another question: is it possible to combine this people counting algorithm with the method you posted before, Raspberry Pi: Deep learning object detection with OpenCV?

Yes, you can, but keep in mind that the FPS throughput rate is going to be very, very low since you’re trying to apply deep learning object detection on the Pi.

Adrian, to get better performance with the Raspberry Pi 3, do you need to use all of these methods? Or just a few? For example, can you combine background subtraction with a Haar cascade?

Thank you very much!

You can join background subtraction in with a Haar cascade and then only apply the Haar cascade to the ROI regions. But realistically Haar cascades are pretty fast anyway so that may be overkill.

Thank you so much for your work and for sharing it. It’s great.

Could you give a bit more detail on what we are supposed to do to use the software on a Raspberry Pi? I’m not very used to it, so I don’t understand everything you wrote.

I’ll likely have to write another blog post on Raspberry Pi people counting — it’s too much to detail in a blog post comment.

Seems logical…

Could you give me the URL of a trusted blog that you use, where I would be able to find more information?

I’ve tried the software “Footfall” but it doesn’t work.

And many blogs are just outdated concerning this subject.

Thank you for all 🙂

I don’t know of one, which is why I would have to do one here on PyImageSearch 😉

Looking forward to the Raspberry Pi people counting!

Hi, firstly, thank you for your blog, it’s so awesome! I’m wondering when that Raspberry Pi counter will be posted? Also, can it be adapted to count vehicles? Thank you!

Yes, you can do the same for vehicles, just swap out the “person” class for any other objects you want to detect and track. I’m honestly not sure when the Pi people counter will be posted. I have a number of other blog posts and projects I’m working on though. I hope it will be soon but I cannot guarantee it.

yes please!

Hey Adrian!

So did you write anything related to Pi and Counting algorithms?

I will be covering it in my upcoming Computer Vision and Raspberry Pi book! Make sure you’re on the PyImageSearch email list to be notified when the book goes live in a couple of months.

Hi Adrian. I have a question about Kalman filters. I want to implement a people counter on a Raspberry Pi 3B. I use background subtraction for detection and findContours to enclose each person’s position in a rectangle, and for tracking I need to implement MOSSE or a Kalman filter. Here is my question: how can I track a person with those algorithms? Each of them needs to receive the position of the object, but I’m detecting multiple objects, so it will be an issue to send the correct coordinates for each object that I need to track.

Can this code deal with live streaming?

Yes, absolutely. Just use my VideoStream class.

Hey Adrian, awesome post. Thank you for sharing and detailing the steps. Is there a raspberry Pi post in the near future? Would love to see your approach. Thanks again, gonna check out your other stuff.

I’ll actually be covering it in my upcoming Computer Vision + Raspberry Pi book 🙂 Stay tuned, I’ll be announcing it soon.

I can hardly wait for the book. Is there a model that will reliably detect people walking in profile (passing by a camera pointed at the sidewalk)? I haven’t found a Haar cascade that does this well. The Caffe model you have does it well, but as you mention it won’t run well on a Pi.

Is there a Haar cascade that will detect profiles, or low-cost hardware that will run the Caffe model?

Again – looking forward to the book!

I’ll actually be showing you how to use deep learning-based object detectors on the Pi! They will be fast enough to run in real-time and be more accurate than Haar detectors.

Great! Awesome job as always. I was trying to improve my tracking part. This is a good reference point for my application.

Thank you Adrian!

Hi Adrian,

This is by far my Favorite blog post from you.

I was wondering if you could also do a blog/tutorial on people counting in an image and show the gender of the people. That would make up for a really interesting blog and tutorial.

I really liked your blog lesson. Thanks so much. I’m going to convert the Caffe model for the Movidius NCS and take it to my friend’s store. He is going to count people and recognize age, gender, and maybe emotion. I really like your blog and plan to buy your book. Thanks for the motivation and good practice.

Thank you for the kind words, I’m happy you liked the post. I wish the best of luck to you and your friend implementing your own person counter for their store!

Sir ,

Great Work. Thanks for Sharing.

Regards,

Anirban

Thanks Anirban!

Hi Adrian, is there any specific reason to use the dlib correlation tracker instead of OpenCV’s 8 built-in trackers? Will any of those trackers be more precise than the dlib tracker?

To quote the blog post:

“We’ll then use dlib for its implementation of correlation filters. We could use OpenCV here as well; however, the dlib object tracking implementation was a bit easier to work with for this project.”

OpenCV’s CSRT tracker may be more accurate but it will be slower. Similarly, OpenCV’s MOSSE tracker will be faster but potentially less accurate.

Loved your post, and hats off to you for the level of explanation, Sir! I was wondering, what if we have to implement it on multiple cameras, or we have a separate door and camera for the entrance and the exit? I would like to have your thoughts on these too. Thanks in advance.

This tutorial assumes you’re using a single camera. If you’re using multiple cameras it becomes more challenging. If the viewpoint changes then your object tracker won’t be able to associate the objects. Instead, you might want to look into face recognition or even gait recognition, enabling you to associate a person with a unique ID based on more than just appearance alone.

Yes, the viewpoints do change, as the cameras will be placed in different locations. We would like to tag each person with a face ID and recognize them across all the cameras using face recognition. Thank you once again.

Yeah, if the viewpoints are changing you’ll certainly want to explore face recognition and gait recognition instead.

@Adrian Thanks for the post. I would love to see any blog on gait recognition. I am doing some research on this recently. I have tried a gait recognition paper which uses CASIA-B dataset. But getting a silhouette seems to be a difficult task. I am a little bit off the topic but if you read this one, would love to know your views.

Thanks for the suggestion. I will consider covering it but I cannot guarantee if/when that may be.

I liked the article very much. In new shopping centers, cameras could be placed at every entrance and the information collected on a computer, to confirm that everyone has left and no one is hiding inside.

Thanks for sharing this tutorial – last week I was trying to do something similar – do you think you can make a comment/answer on http://answers.opencv.org/question/197182/tracking-multiobjects-recognized-by-haar/ ?!

You can try to “place” a blank region on already detected car. Since the tracking method gives you location of the object in every frame, you could just move the blank region accordingly. Then you can use it to prevent Haar cascade from finding a car there. If you’re worried about overlapping cars, I suggest you adjust the size of blank region.

Does this algorithm work well for Raspberry Pi based projects? If not, please suggest an effective algorithm for detecting human presence, sir. I have tried the cascade method, but the results were not satisfactory.

Thank you sir, I am awaiting your reply.

Make sure you’re reading the comments. I’ve already answered your question in my reply to Jay — it’s actually the very first comment on this post.

Thanks. Good job. How can I improve the code to detect people very close?

Hey Ando — can you clarify what you mean by “very close”? Do you have any examples?

Thank you very much for these tutorials. I am new to this and I seem to be having issues getting webcam video from imutils.video. Can you provide a short test script to open the video stream from the pi camera using imutils?

Just set:

vs = VideoStream(usePiCamera=True).start()

how to link your videostream class to here? and how to run ?

is the videostream class created in the same file at there or another python file

You first need to install the “imutils” library:

$ pip install imutils

From there you can import it into your Python scripts via:

from imutils.video import VideoStream

Thanks for the wonderful explanation. It was always a pleasure to read your post. I ran your people-counting tracker but getting some random objectID while detection. For me on 2nd example videos there was 20 people going Up and 2 people coming Down. What do you recommend to remove these ambiguities ?

Hey Rohit — that is indeed strange behavior. What version of OpenCV and dlib are you using?

Running the Downloaded scripts with the default parameter values using the same input videos, I was UNABLE to match the sample output videos. I ran into the same issue as Rohit.

I played around with the confidence values and still could NOT match the results. The code is missing some detections and what looks like overstating (false positive detections?) others? Any ideas???

Nvidia TX1

OpenCV 3.3

Python 3.5 (virtual environment)

dlib==19.15.0

numpy==1.14.3

imutils==0.5.0

The videos can be viewed on my google drive:

Video 1: (My Result = Up 3, Down 8) [Actual (ground truth) Up 3 Down 7]

https://drive.google.com/open?id=1rWp-bD7WFyL39sjqiWzLEiVnwu8mF9dM

Video 2: (My Result = Up 20, Down 2) [Actual (ground truth) Up 14 Down 3]

https://drive.google.com/open?id=1T3Vaslk2UawYYD1RVF5frzHtlT30XO2X

Upgrade from OpenCV 3.3 to OpenCV 3.4 or better and it will work for you 🙂 (which I also believe you found it from other comments but I wanted to make sure)

Comment Updated (4/19): I encountered the same issue using OpenCV 3.3, but after I upgraded to OpenCV 3.4.1, my results now match the video on this blog post. I recommend upgrading to OpenCV 3.4 for anyone encountering similar detection/tracking behavior…

Rohit – I encountered the same issue using OpenCV 3.3, but after I upgraded to OpenCV 3.4.1, my results now match the video on this blog post. I recommend upgrading to OpenCV 3.4…

Hi Adrian,

Thanks for the great post!!!!. I have few questions..

1. Will this people counter work in crowded places like airports or railway stations? Will it give an accurate count?

2. Can we use it for mass (crowd) counting? Does it consider pets and babies?

1. Provided you can detect the full body and there isn’t too much occlusion, yes, the method will work. If you cannot detect the full body you might want to switch to face detection instead.

2. See my note above. You should also read the code to see how we filter out non-people detection 🙂

I have come across some app developers using what looks to be custom trained head detection models. Sometimes, the back of the head can be seen, other times the frontal view can be seen. I think the “head count” approach makes sense since that is how humans think when taking class attendance for example. Is head counting a better method for people counting??? Is this even possible and will the method be accurate for the back of heads???

Examples: (VION VISION)

https://www.youtube.com/watch?v=8XQyw9c23dc

https://www.youtube.com/watch?v=DcusyBUV4do

I’m reluctant to say which is “better” as that’s entirely dependent on your application and what exactly you’re trying to do. You could argue that in dense areas a “head detector” would be better than a “body detector” since the full body might not be visible. But on the other hand, having a full body detected can reduce false positives as well. Again, it’s dependent on your application.

Dear Dr Adrian,

I need a clarification please on object detection. How does the object detector distinguish between human and non-human objects.

Thank you,

Anthony of exciting Sydney

The object detector has been pre-trained to recognize a variety of classes. Line 137 also filters out non-person detections.

Thanks for your sharing.

Hi Adrian,

For the detection part, I wanted to try another network, so I went for ssd_mobilenet_v2_coco_2018_03_29, the TensorFlow version, available here: https://github.com/opencv/opencv_extra/tree/master/testdata/dnn

The problem is that I had too many detection boxes, so I used an NMS function to help me sort things out, but even after that I had too many results, even with the confidence at 0.3 and the NMS threshold at 0.2. See an example here: https://www.noelshack.com/2018-33-2-1534241531-00059.png (network detection boxes are in red, NMS output boxes are in green)

Do you know why I got so many results? Is it because I used a TensorFlow model instead of Caffe? Or is it because the network was trained with other parameters? Did something change in SSD MobileNet v2 compared to chuanqi305’s SSD MobileNet?

David

Hey David — I haven’t tested the TensorFlow model you are referring to so I’m honestly not sure why it would be throwing so many false positives like that. Try to increase your minimum confidence threshold to see if that helps resolve the issue.

Hi Adrian,

You write “we utilize our CentroidTracker instantiation to accept the list of rects , regardless of whether they were generated via object detection or object tracking” however as far as I can see, in the Object Detection phase, you don’t actually seem to populate the rects[] variable? I’ve downloaded the source as well, couldn’t find it there either.

Am I missing something?

Very valuable post throughout, looks a lot like what I am trying to achieve for my cat tracker (which you may recall from earlier correspondence).

Hey Roald — we don’t actually have to populate the list during the object detection phase. We simply create the tracker and then allow the tracker to update “rects” during the tracking phase. Perhaps that point was not clear.

I used OpenCV 3.4 for this example. As for using the Raspberry Pi, make sure you read my reply to Jay.

Great article. I have a doubt, though. It could potentially be a noob question, so please bear with me.

Say I use this in my shop for tracking foot count. All the new objects are stored in a dictionary, right? If I leave the code running perpetually, won’t it cause errors with the memory?

If you left it running perpetually, yes, the dictionary could inflate. It’s up to you to add any “business logic” code to update the dictionary. Some people may want to store that information in a proper database as well; it’s not up to me to make those decisions for people. This code is a starting point for tracking foot count.
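One possible piece of such “business logic” is to prune entries for people who are no longer being tracked. The sketch below assumes the dictionary is keyed by object ID and that the set of currently tracked IDs is available each frame; `prune_trackable_objects`, `trackable_objects`, and `active_ids` are hypothetical names used for illustration.

```python
def prune_trackable_objects(trackable_objects, active_ids):
    """Drop entries whose object ID is no longer being tracked."""
    # collect the stale keys first so the dict is not mutated while iterating
    stale = [oid for oid in trackable_objects if oid not in active_ids]
    for oid in stale:
        del trackable_objects[oid]
    return len(stale)


trackable_objects = {0: "person A", 1: "person B", 2: "person C"}
active_ids = {1, 2}  # IDs the tracker still reports for this frame
removed = prune_trackable_objects(trackable_objects, active_ids)
print("removed {} stale entries, {} remain".format(removed, len(trackable_objects)))
```

Running this once per detection cycle keeps the dictionary bounded by the number of people currently in view, while the running totals can be kept (or written to a database) separately.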

Great blog!!! It’s amazing how you simplify difficult concepts.

I am working on ways to identify every individual going through the entrance from images captured in real time with a camera (we have their passport-size photos plus other labels, e.g., personal identification number, department, etc.). Kindly advise on how to include these multi-class labels in addition to the ID notation you used in the example.

Will you be covering the storage of the counted individuals to the database for later retrieval?

You mean something like face recognition? I discuss how you can perform this in an unsupervised manner inside this post on face clustering.

For those who had the following error when running the script:

Traceback (most recent call last):
  File "people_counter.py", line 160, in <module>
    rect = dlib.rectangle(startX, startY, endX, endY)
Boost.Python.ArgumentError: Python argument types in
    rectangle.__init__(rectangle, numpy.int32, numpy.int32, numpy.int32, numpy.int32)
did not match C++ signature:
    __init__(struct _object * __ptr64, long left, long top, long right, long bottom)
    __init__(struct _object * __ptr64)

please update line 160 of people_counter.py to

rect = dlib.rectangle(int(startX), int(startY), int(endX), int(endY))

Thanks for sharing, Juan! Could you let us know which version of dlib you were using as well just so we have it documented for other readers who may run into the problem?

i have the same problem with him and my version of dlib is 19.6.0

my dlib version is 19.8.1

i have the same problem with it and i have tried 19.18.0 and 19.6.0, both of them doesn’t work.

Thanks

thanks 🙂

Hi, I ran into the same problem. Did you solve it?

As Juan said, you change Line 160 to:

rect = dlib.rectangle(int(startX), int(startY), int(endX), int(endY))

Thanks a lot! you saved me 🙂

Hi,

Your wonderful work is priceless text book. Unfortunately, my understanding is still not enough to understand the whole code. I tried to execute python files, but have an error.

Can I know how to solve it. Thank you so much

python people_counter.py --prototxt mobilenet_ssd/MobileNetSSD_deploy.prototxt \
usage: people_counter.py [-h] -p PROTOTXT -m MODEL [-i INPUT] [-o OUTPUT]
                         [-c CONFIDENCE] [-s SKIP_FRAMES]
people_counter.py: error: argument -m/--model is required

Your error can be solved by properly providing the command line arguments to the script. If you’re new to command line arguments, that’s fine, but you should read up on them first.

Remove the ‘\’ between the arguments, remove the line breaks, and enter the three lines as a single one-line command.
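For readers hitting this error, here is a minimal sketch of an argument parser that produces the usage message shown above. The flag names are taken from that usage line; the help strings and the default values for `--confidence` and `--skip-frames` are assumptions for illustration and may differ from the downloaded script.

```python
import argparse


def build_parser():
    parser = argparse.ArgumentParser(prog="people_counter.py")
    # required: argparse exits with an error if either one is missing
    parser.add_argument("-p", "--prototxt", required=True,
                        help="path to the Caffe deploy prototxt file")
    parser.add_argument("-m", "--model", required=True,
                        help="path to the pre-trained Caffe model")
    # optional: omitted values are stored as None
    parser.add_argument("-i", "--input", help="path to an input video file")
    parser.add_argument("-o", "--output", help="path to an output video file")
    # the defaults below are assumed values, not confirmed by the post
    parser.add_argument("-c", "--confidence", type=float, default=0.4,
                        help="minimum probability to keep a detection")
    parser.add_argument("-s", "--skip-frames", type=int, default=30,
                        help="number of frames skipped between detections")
    return parser


# passing a list simulates typing the arguments on one command line
args = vars(build_parser().parse_args([
    "--prototxt", "mobilenet_ssd/MobileNetSSD_deploy.prototxt",
    "--model", "mobilenet_ssd/MobileNetSSD_deploy.caffemodel",
    "--input", "videos/example_01.mp4",
]))
print(args["model"], args["skip_frames"])
```

If the shell sees only the first line of a multi-line command (for example because the trailing `\` was pasted incorrectly), `--model` never reaches the parser, which is exactly the "argument -m/--model is required" error.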

Hi Adrian,

thanks for sharing this great article! It really helps me a lot to understand object tracking.

The CentroidTracker uses two parameters: MaxDisappeared and MaxDistance.

I understand the reason for MaxDistance, but I cannot find the implementation in the source code.

I am running this algorithm on vehicle detection in traffic and the same ID is sometimes jumping between different objects.

How can I implement MaxDistance to avoid that?

Thanks in advance! I really appreciate your work!!

Hey Jan — have you used the “Downloads” section of the blog post to download the source code? If so, take a look at the centroidtracker.py implementation. You will find both variables being used inside the file.

Kindly help me too. Have you resolved the error?

Hi Adrian,

do you think it’s worth training a deep learning object detector with only the classes I’m interested in (about 15), instead of filtering classes on a pre-trained model, to run it on devices with limited resources (BeagleBoard-X15 or similar SBC)?

Thanks

If you train on just the classes you are interested in you may be able to achieve higher accuracy, but keep in mind it’s not going to necessarily improve your inference time that much.

Hi Adrian,

Does this implement the multi-processing you were talking about the week before in https://pyimagesearch.com/2018/08/06/tracking-multiple-objects-with-opencv/ ?

It doesn’t use OpenCV’s implementation of multi-object tracking, but it uses my implementation of how to turn dlib’s object trackers into multi-object trackers.

thank you very much dear adrian for best blog post

This is really nice thank you….

I have developed a people counter using the dlib tracker and an SSD detector. You skip 30 frames between detections to save memory usage, but in my case the detector and the tracker run on every frame. When there is no detection (when the detector loses the object), I try to initialize the tracker with its previous bounding box (only for two frames). The problem is that when there is no object in the video (the object was not lost by the detector but has left the frame), the tracker’s bounding box gets stuck on the screen, and it causes a problem when another object comes into view. Is there any way to delete the tracker when I need to?

I would suggest applying another layer of tracking, this time via centroid tracking like I do in this guide. If the maximum distance between the old object and new object is greater than N pixels, delete the tracker.

Hi Adrian

Again, a great tutorial. Can’t praise it enough. I’ve got my current job because of PyImageSearch and that’s what this site means to me.

I was going through the code, and trying to understand –

–If you are running the object detector every 30 frames, how are you ensuring that an *already detected* person with an associated objectID, does not get re-detected in the next iteration of the object detector after the 30 frame wait-time? For example, if we have a person walking really slowly, or if two people are having a conversation within the bounds of our input frame, how are they not getting re-detected?–

Thanks and Regards,

Aditya

They actually are getting re-detected but our centroid tracker is able to determine if (1) they are the same object or (2) two brand new objects.
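To illustrate how re-detections can be mapped back onto existing IDs, here is a simplified sketch of centroid association with a maximum distance threshold. It uses a greedy nearest-pair match and is not the code in centroidtracker.py; the function name `associate` and the `max_distance` value of 50 pixels are assumptions for illustration.

```python
import math


def associate(existing, detections, max_distance=50):
    """Greedily match detections to existing IDs by centroid distance.

    existing:   dict mapping object ID -> (x, y) centroid
    detections: list of (x, y) centroids from the latest detection pass
    Returns (matches, new): matches maps object ID -> detection index,
    and new lists the detection indexes that need a fresh ID.
    """
    # every (distance, ID, detection index) pair, closest first
    pairs = sorted(
        (math.hypot(c[0] - d[0], c[1] - d[1]), oid, j)
        for oid, c in existing.items()
        for j, d in enumerate(detections)
    )
    matches, used = {}, set()
    for distance, oid, j in pairs:
        if distance > max_distance:
            break  # all remaining pairs are even farther apart
        if oid in matches or j in used:
            continue  # this ID or this detection is already paired
        matches[oid] = j
        used.add(j)
    new = [j for j in range(len(detections)) if j not in used]
    return matches, new


existing = {0: (100, 100), 1: (300, 120)}
detections = [(305, 130), (102, 108), (500, 400)]
matches, new = associate(existing, detections)
print(matches, new)
```

Here the two nearby detections keep IDs 0 and 1, while the far-away detection is treated as a brand new person. Lowering `max_distance` makes ID switches between different objects less likely, at the cost of losing fast-moving objects.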

Thank you Adrian for another translation of the language of the gods. The combination of graph theory, mathematics, conversion to code and implementation is like ancient Greek and you are the demigod who takes the time to explain it to us mere mortals. Most importantly, you take a stepwise approach. When ‘Rosebrock Media Group’ has more employees, someone in it can even spend more time showing how alternative code snippets behave. In terms of performance, I am just starting to figure out if a CUDA implementation would be of benefit. Of course, there is no ‘Adrian for CUDA coding’. Getting this to run smoothly on a small box would be another interesting project but requires broad knowledge of all the hardware options available – a Xilinx FPGA? an Edison board? a mini-ITX PC? a hacked cell phone? (there’s an idea – it’s a camera, a quad core CPU and a GPU in a tidy package but obviously would need a mounting solution and a power source too). Of course to run on an iPhone I have to jailbreak the phone and translate the code to Swift. But then perhaps it would be better to go to Android as the hardware selection is broader and the OS is ‘open’. Do you frequent any specific message boards where someone might pick up this project and get it to work on a cell phone? There are a lot of performance optimizations that could make it work.

Thank you for the kind words, Stefan! Your comment really made my day 🙂 To answer your question: yes, running the object detector on the GPU would dramatically improve performance. While my deep learning books cover that, the OpenCV bindings themselves do not (yet). I’m also admittedly not much of an embedded device user (outside of the Raspberry Pi) so I wouldn’t be able to comment on the other hardware. Thanks again!

Hi Adrian, just spotted this…

For information I have successfully implemented this post on a Jetson TX2, replacing the SSD with one that is optimised for TensorRT. I would refer your reader to the blog of JK Jung for guidance.

Performance wise, I am finding that all 6 cores are maxed out at 100% and the GPUs at around 50% depending on the balance of SSD/trackers used. The trackers in particular are very CPU intensive and as you say, the pipeline slows a great deal with multiple objects.

As always, thanks for your huge contribution to the community and congratulations on just getting married!

Cheers, Mike

Awesome, thanks so much for sharing, Mike!

I found the cause of my issue!! It was because I changed skip_frames to 15.

So how do I set an appropriate number of frames to skip? Skipping too many frames will lead to missed objects, while a smaller skip_frames value will lead to inappropriate assignment of object IDs…

As you noticed, it’s a balance. You need to balance (1) skipping frames to help the pipeline to run faster while (2) ensuring objects are not lost or trackings missed. You’ll need to experiment to find the right value for skip frames.

Hi Adrian,

I’ve recently found your blog and I really like the way you explain things.

I’m building a people counter on a Raspberry Pi, using background subtraction and centroid tracking.

The problem I’m facing is that sometimes object IDs switch, as you said in the “simple object tracking with OpenCV” post. Is there something I can do to minimize these errors?

If you have any recommendations feel free to share.

Thanks in advance.

Ps: I’d be really interested if you did a post about people counter in raspberry pi like you mentioned in the first comment

Hey Jaime — there isn’t a ton you can do about that besides reduce the maximum distance threshold.

Thanks Adrian for such a nice tutorial. You have released it on perfect timing, I am working on similar kind of project for tracking the number of people in and out from bus. Some how I am not getting proper result. But this tutorial is very good start and helped me to understand the logic.

Thanks again. Keep rocking!!!

Best of luck with the project, Nilesh!

Hi Adrian,

Approximately how many meters above the floor is the camera mounted?

Thank you very much!

To be totally honest I’m not sure how many meters above the ground the camera was. I don’t recall.

Thank you Adrian for inspiring me and introducing me to the world of computer vision.

I started with your 1st edition and followed quite a few of your blog projects, with great success.

I was excited to read this blog, as people counting is something I have wanted to pursue.

However,………………..there’s a problem.

When I execute the script, I get:

[INFO] loading model…

[INFO] opening video file…

the sample video does open up, plays for about 1 second (The lady doesn’t reach the line), and then, boom…my computer crashes! and Python quits!

I have tried increasing --skip-frames, but it still crashes. I even tried Python 3 (thinking my version 2.7 was old), but no joy!

Is it time to say goodbye to my 11-year-old MacBook Pro, or could this be something else?

“It’s important to understand that deep learning object detectors are very computationally expensive, especially if you are running them on your CPU.”

Out of interest, is there a ballpark guide to minimum machine specs when delving into the world of OpenCV?

Best Regards,

update:

Reading your /install-dlib-easy-complete-guide/

I noticed you say to install XCode.

I had removed XCode for my homebrew installation as instructed, as it was an old version.

When I installed dlib, I simply did pip install dlib.

Could this be related?

Cheers

Hey Nik, it sounds like you’re using a very old system, and if you’ve installed/uninstalled Xcode before then that could very well be an issue. I would advise you to try a newer system if at all possible. Otherwise, it could be any number of problems, and it’s far too challenging to diagnose at this point.

Hello Dr. Adrian, thank you for your great work. I am a beginner in this field and your webpage is really helping me through. I have a question: I tried to run this code and got the error “people_counter.py: error: the following arguments are required: -p/--prototxt, -m/--model”, and I really don’t know what to do. I would be grateful for your help.

Thanks in advance.

If you’re new to the world of Python, that’s okay, but make sure you read up on command line arguments first. From there you’ll be able to execute the script.
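To make that error less mysterious, here is a small sketch of how a parser with those flags could be declared. The flag names come from the usage message quoted in these comments; the help strings, the default values (0.4 and 30), and the example file paths are assumptions for illustration, not a copy of people_counter.py.

```python
import argparse


def build_parser():
    """Build a parser with the flags shown in the script's usage message."""
    ap = argparse.ArgumentParser(prog="people_counter.py")
    # required=True is what triggers "the following arguments are required".
    ap.add_argument("-p", "--prototxt", required=True,
                    help="path to the Caffe 'deploy' prototxt file")
    ap.add_argument("-m", "--model", required=True,
                    help="path to the pre-trained Caffe model")
    ap.add_argument("-i", "--input", type=str,
                    help="optional path to an input video file")
    ap.add_argument("-o", "--output", type=str,
                    help="optional path to an output video file")
    # Defaults below are assumed values, chosen only for this sketch.
    ap.add_argument("-c", "--confidence", type=float, default=0.4,
                    help="minimum probability to keep a detection")
    ap.add_argument("-s", "--skip-frames", type=int, default=30,
                    help="number of frames to skip between detections")
    return ap


# Equivalent to running, from a terminal:
#   python people_counter.py --prototxt <path to .prototxt> --model <path to model>
# The paths here are placeholders.
args = vars(build_parser().parse_args(
    ["--prototxt", "example.prototxt", "--model", "example.caffemodel"]
))
print(args["prototxt"], args["model"], args["skip_frames"])
```

The point is that the script is meant to be launched from a terminal with both required flags supplied. Running it with no arguments (for example by pressing "Run" in an IDE) produces exactly the error above.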

Hi Adrian !!

This is the answer you gave to my question, thank you for that:

August 24, 2018 at 8:56 am

As you noticed, it’s a balance. You need to balance (1) skipping frames to help the pipeline to run faster while (2) ensuring objects are not lost or tracking missed. You’ll need to experiment to find the right value for skip frames.

But that balancing is only possible for a video, because I have it in hand.

What do you suggest for a live camera, where I do not know when an object will appear and so cannot choose a skip-frames value in advance?

Hi Adrian !!,

How can we evaluate the counting accuracy of this counter? My mentor asked me for the counting accuracy. Do we need to find some benchmark videos, or are there libraries for accuracy evaluation?

Another great post! Thanks so much for your contributions to the community.

One question, I have tried the code provided on a few test videos and it seems like detected people can be counted as moving up or down without having actually crossed the yellow reference line. In the text you mention the fact that people are only counted once they have crossed the line. Is this a behaviour you have seen as well? Is there an approach you would recommend to place a more strict condition that only counts people who have actually crossed from one side of the line to the other? Thanks

Hey Andy — that’s actually a feature, not a bug. For example, say you are tracking someone moving from the bottom to the top of a frame. But, they are not actually detected until they have crossed the actual line. In that instance we still want to track them so we check if they are above the line, and if so, increment the respective counter. If that’s not the behavior you expect/want you will have to update the code.
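If you do want the stricter behavior Andy describes, one possible approach is to compare consecutive centroid positions and count only when a step actually moves from one side of the line to the other. The sketch below is an assumption about how that could be written, not code from the tutorial; `crossed_line` and `count_crossings` are hypothetical names. Remember that image y-coordinates grow downward, so "up" means y is decreasing.

```python
def crossed_line(prev_y, curr_y, line_y):
    """Return "up", "down", or None for one step of a centroid.

    A crossing is reported only when the previous and current positions
    lie on opposite sides of the horizontal line at line_y.
    """
    if prev_y >= line_y and curr_y < line_y:
        return "up"      # was on/below the line, is now above it
    if prev_y <= line_y and curr_y > line_y:
        return "down"    # was on/above the line, is now below it
    return None


def count_crossings(ys, line_y):
    """Count up/down crossings along one object's sequence of y values."""
    up = down = 0
    for prev_y, curr_y in zip(ys, ys[1:]):
        step = crossed_line(prev_y, curr_y, line_y)
        if step == "up":
            up += 1
        elif step == "down":
            down += 1
    return up, down


# Starts below the line (y=300) and walks up past it (line at y=250).
walked_through = count_crossings([300, 260, 240, 200], line_y=250)
# First detected when already above the line: never counted.
detected_late = count_crossings([200, 180, 150], line_y=250)
```

The trade-off is the one described in the reply above: a person who is first detected after they have already passed the line is never counted. Note also that this sketch counts every crossing, so someone who wanders back and forth is counted each time unless you add a per-object "already counted" flag.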

Hello Adrian,

Thank you for the great post.

I modified the code for horizontal camera as below:

https://youtu.be/BNzTePvbsWE

I noticed the following problems:

1. No response to fast-moving objects

2. Irrelevant centroid noise

3. Repeated counting of the same person

I am trying to solve these problems by introducing face recognition and pose estimation.

Do you have any suggestions or comments on this?

Thanks

Frank

Face recognition would go a long way toward solving this problem, but the issue you may have is being unable to identify faces from side/profile views. Pose estimation and gait recognition are actually more accurate than face recognition, so they would be worth looking into.

Hi,

Did you solve your problem?

Thanks.

Bruno Bonela.

Hi Adrian. First, I want to say thank you for taking the time to explain every detail of your code. Your blog is incredible (the best of the best!).

I have a question about the CentroidTracker: it creates an object ID when a new person appears in the video but never seems to destroy that ID. Could that cause memory trouble down the road if I want to run it on a Raspberry Pi 3? I followed your people counter code with just a few modifications to run it on the Pi.

My best regards

Hey Andres — the CentroidTracker actually does destroy the object ID once it has disappeared from a sufficient number of frames.
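As a rough illustration of that bookkeeping, here is a stripped-down sketch. It is not the tutorial's CentroidTracker class: the class name `DisappearanceBook`, its method names, and the small `max_disappeared` value used in the demo are assumptions. It only shows the idea that an ID's "missing" counter grows on every frame without a match, and the ID is deleted once the counter exceeds a limit, so memory does not grow without bound.

```python
class DisappearanceBook:
    """Tracks how many consecutive frames each object ID has been missing."""

    def __init__(self, max_disappeared=50):
        self.next_id = 0
        self.objects = {}        # object ID -> last known centroid
        self.disappeared = {}    # object ID -> consecutive missed frames
        self.max_disappeared = max_disappeared

    def register(self, centroid):
        """Start tracking a new object and return its ID."""
        oid = self.next_id
        self.objects[oid] = centroid
        self.disappeared[oid] = 0
        self.next_id += 1
        return oid

    def mark_seen(self, oid, centroid):
        """The object was matched this frame: update it and reset its counter."""
        self.objects[oid] = centroid
        self.disappeared[oid] = 0

    def mark_missing(self, oid):
        """The object was not matched this frame; drop it if gone too long."""
        self.disappeared[oid] += 1
        if self.disappeared[oid] > self.max_disappeared:
            del self.objects[oid]
            del self.disappeared[oid]


book = DisappearanceBook(max_disappeared=2)
person = book.register((120, 80))
book.mark_missing(person)   # 1 missed frame, still tracked
book.mark_missing(person)   # 2 missed frames, still tracked
still_tracked = person in book.objects
book.mark_missing(person)   # 3 > 2, so the ID is removed
removed = person not in book.objects
```

In this sketch IDs are never reused, so the next person registered after the removal gets ID 1 rather than 0.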

Thank you very much, Adrian. Another question: I have a problem with the centroid tracker update. When a person leaves the frame and another person comes in almost instantly, the algorithm thinks it is the same person, doesn’t count them, and assigns the old centroid to the person who just came in. I changed maxDisappeared without success, so I went through the code again to find the line where you use the minimum Euclidean distance to assign the new position to the old centroid, but I couldn’t understand the method you used. Can you give me some advice on solving this problem?

It doesn’t happen every time, but I would like to raise the success rate.

My best regards

That is an edge case you will need to decide how to handle. If you reduce the “maxDisappeared” value too much you could easily register false-positives. Keep in mind that footfall applications are meant to be approximations; they are never 100% accurate, even with a human doing the counting. If it doesn’t happen very often then I wouldn’t worry about it. You will never get 100% accurate footfall counts.

I handled it by modifying the CentroidTracker: I added a condition that skips the match (continue) when the distance from the old centroid to the new one is more than 200 along the y-axis. Thanks for the answer.

Somehow I can’t run the code.

I always get the error message:

Can’t open “mobilenet_ssd/MobilenetSSD_deploy.prototxt” in function ‘ReadProtoFromTextFile’

Seems like the program is unable to read the prototxt…

Do you have an idea on how to fix it?

Yes, that does seem to be the problem. Make sure you’ve used the “Downloads” section of the blog post to download the source code + models. From there double-check your paths to the input .prototxt file.

Hi Adrian,

Thank you for a great tutorial. Could you let me know how I can count people moving from right to left or from left to right? I am able to draw the trigger lines but unable to count the objects.

Regards

Harsha J

You’ll need to modify Lines 213 and 220 (the “if” statements) to perform the check based on the width, not the height. You’ll also want to update Line 204 to keep track of the x-coordinates rather than the y-coordinates.
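To give a feel for the change described above, here is a hedged sketch of a left/right check. It does not reproduce the referenced lines of the script. It assumes the check compares the current centroid against the mean of its previous positions and against a vertical line at the middle of the frame; `horizontal_direction` and its parameters are hypothetical names.

```python
from statistics import mean


def horizontal_direction(previous_xs, curr_x, frame_width):
    """Return "left", "right", or None for one tracked object.

    previous_xs: x-coordinates of the object's earlier centroids
    curr_x:      x-coordinate of the current centroid
    frame_width: width of the frame; the counting line is assumed to be
                 a vertical line at frame_width // 2
    """
    line_x = frame_width // 2
    # Negative means the object has been moving left, positive means right.
    direction = curr_x - mean(previous_xs)

    if direction < 0 and curr_x < line_x:
        return "left"
    if direction > 0 and curr_x > line_x:
        return "right"
    return None


moving_left = horizontal_direction([400, 380], 300, frame_width=640)
moving_right = horizontal_direction([100, 150], 400, frame_width=640)
not_there_yet = horizontal_direction([400, 380], 350, frame_width=640)
```

The only real difference from a vertical (up/down) counter is that x-coordinates and the frame width replace y-coordinates and the frame height.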

Hi Adrian,

I am a beginner in this field and your webpage is really helping me through.

Could you please give me the code?

I have been confused about how to change it for a while.

Thanks in advance

NT

I am happy to hear you are finding the PyImageSearch blog helpful! However, I do not provide custom modifications to my code. I provide over 300 free tutorials here on PyImageSearch and I do my best to guide you, again, for free, but if you need custom modification you will need to do that yourself.

Hi Adrian,

I’m wondering what the tracker does when an object doesn’t move (i.e. the object stands in the same position for a few frames). I’m not sure if OpenCV’s trackers are able to handle this situation.

Thanks in advance.

It will keep tracking the object. If the object is lost the object detector will pick it back up.

Hello Adrian, first I want to say thank you for this amazing project; it helped me understand quite a few things about computer vision. I have a question you might be able to help with: I want to use this project to monitor two doors in my store, and I was wondering what changes I would have to make to use two cameras simultaneously.

P.S. I have been working on simple OpenCV programs, since I’m quite the noob, and I tried to use cap0 = cv2.VideoCapture(0) and cap1 = cv2.VideoCapture(1). However, it opens only one camera feed even though the camera indexes are correct!

Thanks again for this project and for taking the time to read my comment.

Follow this guide and you’ll be able to efficiently access both your webcams 🙂
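The linked guide is not reproduced in this thread, so the following is only a generic sketch of reading from several capture objects in the same loop, not a summary of that guide. `read_all` and `FakeCapture` are hypothetical helpers; the stand-in class exists so the example runs without cameras attached. With real hardware you would pass `cv2.VideoCapture` objects instead, as shown in the comments.

```python
def read_all(captures):
    """Read one frame from every capture; None marks a failed read."""
    frames = []
    for cap in captures:
        ok, frame = cap.read()   # same (ok, frame) pair cv2.VideoCapture returns
        frames.append(frame if ok else None)
    return frames


class FakeCapture:
    """Stand-in for cv2.VideoCapture so the sketch runs without cameras."""

    def __init__(self, frames):
        self._frames = list(frames)

    def read(self):
        if self._frames:
            return True, self._frames.pop(0)
        return False, None


# With real webcams (indexes 0 and 1 are assumptions about your machine):
#   import cv2
#   caps = [cv2.VideoCapture(0), cv2.VideoCapture(1)]
#   if not all(c.isOpened() for c in caps):
#       raise RuntimeError("at least one camera failed to open")
#   frame0, frame1 = read_all(caps)
#   for c in caps:
#       c.release()
caps = [FakeCapture(["a0", "a1"]), FakeCapture(["b0"])]
first = read_all(caps)
second = read_all(caps)
```

Checking `isOpened()` on each capture right after creating it is a quick way to find out whether the second camera actually opened, which is worth ruling out before changing anything else.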

Hi @Adrian. How can we improve object detection accuracy, given that your method depends entirely on how good the detection is? Is there another model you would recommend for detection?

That’s a complex question. Exactly how you improve object detection accuracy varies on your dataset, your number of images, and the intended use of the model. I would suggest you read this tutorial on the fundamentals of object detection and then read Deep Learning for Computer Vision with Python to help you get up to speed.

One of the purposes of object tracking is to track people when object detection fails, right? But if I understand correctly, the accuracy of your tracking algorithm depends entirely on whether we detect the object in subsequent frames. What if my object gets detected only once? How should I track it then, and what modification would be required in your solution?

No, the objects do not need to be detected in subsequent frames — I only apply the object detector every N frames, the object tracker tracks the object in between detections. You only need to detect the object once to perform the tracking.
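The alternation described above can be sketched as a loop skeleton. This is an outline under stated assumptions, not the script itself: `run_pipeline` and the three callables passed into it (`detect`, `start_trackers`, `update_trackers`) are hypothetical placeholders for the real detector and tracker code, and the stubs in the demo do no actual vision work.

```python
def run_pipeline(frames, detect, start_trackers, update_trackers, skip_frames=30):
    """Run the detector every skip_frames frames and trackers in between.

    detect(frame)                    -> list of bounding boxes
    start_trackers(frame, boxes)     -> tracker state seeded from those boxes
    update_trackers(trackers, frame) -> list of boxes predicted by the trackers
    """
    trackers = []
    results = []
    for index, frame in enumerate(frames):
        if index % skip_frames == 0:
            # Expensive step: run the detector and re-seed the trackers.
            boxes = detect(frame)
            trackers = start_trackers(frame, boxes)
            status = "Detecting"
        else:
            # Cheap step: let the existing trackers follow their objects.
            boxes = update_trackers(trackers, frame)
            status = "Tracking"
        results.append((status, boxes))
    return results


# Stub callables so the skeleton can run without a model or a video.
def fake_detect(frame):
    return [(0, 0, 10, 10)]


def fake_start(frame, boxes):
    return list(boxes)


def fake_update(trackers, frame):
    return list(trackers)


results = run_pipeline(range(7), fake_detect, fake_start, fake_update, skip_frames=3)
statuses = [status for status, _ in results]
```

With seven frames and `skip_frames=3`, the detector runs on frames 0, 3, and 6, and the trackers carry the boxes through the frames in between.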

Hey Adrian, I just downloaded the source code from “people counter” with OpenCV and Python. Using OpenCV, we’ll count the number of people who are heading “in” or “out” of a department store in real-time:

But getting the following error…

usage: people_counter.py [-h] -p PROTOTXT -m MODEL [-i INPUT] [-o OUTPUT]

[-c CONFIDENCE] [-s SKIP_FRAMES]

people_counter.py: error: the following arguments are required: -p/--prototxt, -m/--model

If you’re new to command line arguments, that’s fine, but make sure you read this tutorial before continuing. It will help you resolve the error.

Hello Adrian, I’ve been following your blog for a couple of months now and indeed there is no other blog which serves with this much of content and practices. Thanks a lot man.

Currently, I’m working on a project with the same application, counting people. I’m using a Raspberry Pi and a Pi camera. Due to some constraints I’ve settled on an overhead view for the camera. I’m using computationally less expensive methods: a Haar cascade detector (custom trained to detect heads from an overhead view). The detector is doing a good job. I have also integrated the tracking and counting methods which you have provided. At first I encountered low FPS, so I ventured around a bit and came up with the “imutils” library to speed up my FPS. Now I have achieved a pretty decent FPS throughput. I have also tested the code with a video feed, and it all works well.

BUT.

When I use my live feed from the Pi camera, there is a bit of lag in detection and in the whole system. Is there a way to get this working in real time?

Or is this just the computational limit of a Raspberry Pi?

Thanks in advance Adrian!

Curious and eagerly waiting for your reply!

Hi Bharath — thank you for the kind words, I appreciate it 🙂 And congratulations on getting your Pi People Counter this far along, wonderful job! I’d be curious to know where you found a dataset of overhead views of people? I’d like to play around with such a dataset if you don’t mind sharing.

As far as the lag goes, could you clarify a bit more? Where exactly is this “lag”? If you can be a bit more specific I can try to help, but my guess is that it’s a limitation of the Pi itself.

Thanks for the reply Adrian!

The dataset was hand-labeled at my university. Let me know if you need it!

Hey, and by “lag” I mean…

With a pre-captured video feed, the Pi was able to achieve about ~160 fps (15s video).

With the live feed from the Pi camera, it was able to achieve about ~50 fps while there was no detection, and once there is a detection the rate drops to around 20 fps. (All of this was possible only after adopting the “imutils” library.)

When tested without the “imutils” library, the rate was around 2 fps to 6 fps.

So the key inference I would draw is that the system performs with pretty good accuracy when the subject (head) travels at a slower speed (slower than a normal human walking pace).

BUT,