In this tutorial, you will learn about the PyTorch deep learning library, including:

- What PyTorch is

- How to install PyTorch on your machine

- Important PyTorch features, including tensors and autograd

- How PyTorch supports GPUs

- Why PyTorch is so popular among researchers

- Whether or not PyTorch is better than Keras/TensorFlow

- Whether you should be using PyTorch or Keras/TensorFlow in your projects

Additionally, this tutorial is part one in our five-part series on PyTorch fundamentals:

- What is PyTorch? (today’s tutorial)

- Intro to PyTorch: Training your first neural network using PyTorch (next week’s tutorial)

- PyTorch: Training your first Convolutional Neural Network

- PyTorch image classification with pre-trained networks

- PyTorch object detection with pre-trained networks

By the end of this tutorial, you’ll have a good introduction to the PyTorch library and be able to discuss the pros and cons of the library with other deep learning practitioners.

To learn about the PyTorch deep learning library, just keep reading.

What is PyTorch?

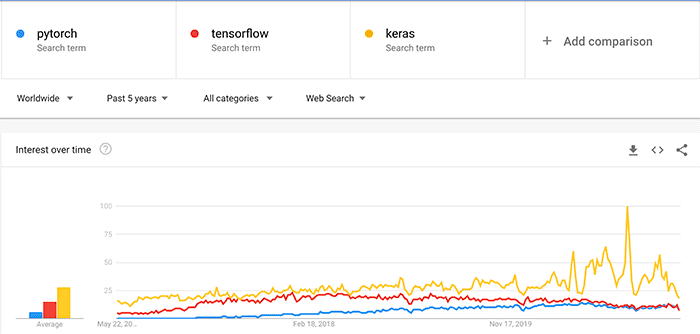

PyTorch is an open source machine learning library that specializes in tensor computations, automatic differentiation, and GPU acceleration. For those reasons, PyTorch is one of the most popular deep learning libraries, competing with both Keras and TensorFlow for the prize of “most used” deep learning package.

PyTorch tends to be especially popular among the research community due to its Pythonic nature and ease of extensibility (e.g., implementing custom layer types and network architectures).

In this tutorial, we’ll discuss the basics of the PyTorch deep learning library. Starting next week, you’ll gain hands-on experience using PyTorch to train neural networks, perform image classification, and apply object detection to both images and real-time video.

Let’s get started learning about PyTorch!

PyTorch, deep learning, and neural networks

PyTorch is based on Torch, a scientific computing framework for Lua. Prior to both PyTorch and Keras/TensorFlow, deep learning packages such as Caffe and Torch tended to be the most popular.

However, as deep learning started to revolutionize nearly all areas of computer science, developers and researchers wanted an efficient, easy-to-use library to construct, train, and evaluate neural networks in the Python programming language.

Python and R are the two most popular programming languages for data science and machine learning, so it’s only natural that researchers wanted deep learning algorithms inside their Python ecosystems.

François Chollet, a Google AI researcher, developed and released Keras in March 2015. Keras is an open source library that provides a Python API for training neural networks, and it quickly gained popularity thanks to its easy-to-use API, which was modeled largely on scikit-learn, the de facto standard machine learning library for Python.

Soon after, Google released its first version of TensorFlow in November 2015. TensorFlow not only became the default backend/engine for the Keras library, but also implemented a number of lower-level features that advanced deep learning practitioners and researchers needed to create state-of-the-art networks and perform novel research.

However, there was a problem: the TensorFlow v1.x API wasn’t very Pythonic, nor was it intuitive and easy to use. To solve that problem, PyTorch, sponsored by Facebook and endorsed by Yann LeCun (one of the grandfathers of the modern neural network resurgence and an AI researcher at Facebook), was released in September 2016.

PyTorch solved many of the problems researchers were having with Keras and TensorFlow. While Keras is incredibly easy to use, by its very nature and design Keras does not expose some of the low-level functions and customization that researchers needed.

On the other hand, TensorFlow certainly gave access to these types of functions, but they weren’t Pythonic, and it was often hard to comb through the TensorFlow documentation to find exactly which function was needed. In short, Keras didn’t offer the low-level API that researchers needed, and TensorFlow’s API wasn’t all that friendly.

PyTorch solved those problems by creating an API that was both Pythonic and easy to customize, allowing new layer types, optimizers, and novel architectures to be implemented. Research groups slowly started embracing PyTorch, switching over from TensorFlow. In essence, that is why you see so many researchers using PyTorch in their labs today.

That said, since the release of PyTorch 1.x and TensorFlow 2.x, the APIs of the two libraries have essentially converged (pun intended). Both PyTorch and TensorFlow now implement much the same functionality and provide APIs and function calls to accomplish the same tasks.

This statement is even backed by Eli Stevens, Luca Antiga, and Thomas Viehmann, who quite literally wrote the book on PyTorch:

Interestingly, with the advent of TorchScript and eager mode, both PyTorch and TensorFlow have seen their feature sets start to converge with the other’s, though the presentation of these features and the overall experience is still quite different between the two.

— Deep Learning with PyTorch (Chapter 1, Section 1.3.1, Page 9)

My point here is to not get too caught up in the debate over whether PyTorch or Keras/TensorFlow is “better” — both libraries implement very similar features, just using different function calls and different training paradigms.

Staying out of the (sometimes hostile) debate over which library is better is especially important if you are a beginner to deep learning. As I discuss later in this tutorial, you are far better off just picking one library and learning it. The fundamentals of deep learning are the same, regardless of whether you use PyTorch or Keras/TensorFlow.

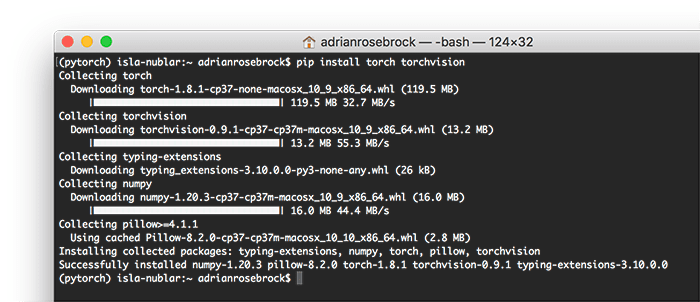

How do I install PyTorch?

The PyTorch library can be installed using pip, Python’s package manager:

$ pip install torch torchvision

From there, you should fire up a Python shell and verify that you can import both torch and torchvision:

$ python
>>> import torch
>>> import torchvision
>>> torch.__version__
'1.8.1'
>>>

Congrats, you now have PyTorch installed on your machine!

Note: Need help installing PyTorch? To start, be sure to consult the official PyTorch documentation. Otherwise, you may be interested in my pre-configured Jupyter Notebooks inside PyImageSearch University which come with PyTorch pre-installed.

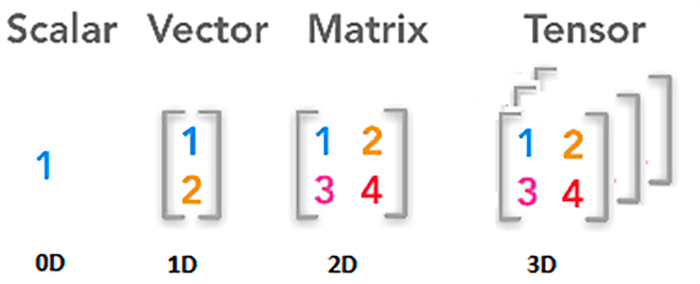

PyTorch and Tensors

PyTorch represents data as multi-dimensional, NumPy-like arrays called tensors. Tensors store inputs to your neural network, hidden layer representations, and the outputs.

Here is an example of initializing an array with NumPy:

>>> import numpy as np
>>> np.array([[0.0, 1.3], [2.8, 3.3], [4.1, 5.2], [6.9, 7.0]])
array([[0. , 1.3],
       [2.8, 3.3],
       [4.1, 5.2],
       [6.9, 7. ]])

We can initialize the same array using PyTorch like so:

>>> import torch
>>> torch.tensor([[0.0, 1.3], [2.8, 3.3], [4.1, 5.2], [6.9, 7.0]])
tensor([[0.0000, 1.3000],
        [2.8000, 3.3000],
        [4.1000, 5.2000],
        [6.9000, 7.0000]])
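Because tensors are NumPy-like, PyTorch can also move data to and from NumPy directly. The short sketch below reuses the array from above and shows that `torch.from_numpy` shares memory with the source array, while `torch.tensor` makes an independent copy. The variable names are purely illustrative.

```python
import numpy as np
import torch

# The same 4x2 array used in the examples above
data = [[0.0, 1.3], [2.8, 3.3], [4.1, 5.2], [6.9, 7.0]]
arr = np.array(data)

# torch.from_numpy shares memory with the NumPy array (no copy is made)
shared = torch.from_numpy(arr)
# torch.tensor always copies the data it is given
copied = torch.tensor(arr)

arr[0, 0] = 100.0               # modify the NumPy array in place
print(shared[0, 0].item())      # 100.0, the change is visible through the shared tensor
print(copied[0, 0].item())      # 0.0, the copy is unaffected

# Tensors expose NumPy-like attributes such as shape and dtype
print(shared.shape)             # torch.Size([4, 2])
print(shared.dtype)             # torch.float64, inherited from NumPy's default float
print(torch.tensor(data).dtype) # torch.float32, PyTorch's default for Python floats

# .numpy() converts a (CPU) tensor back into a NumPy array
back = copied.numpy()
print(type(back))               # <class 'numpy.ndarray'>
```

The shared-memory behavior is convenient for avoiding copies, but it also means that mutating one side silently changes the other.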

This doesn’t seem like a big deal, but under the hood, PyTorch can dynamically build a computational graph from the operations performed on these tensors and then apply automatic differentiation to that graph.
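Here is a minimal sketch of that idea, using a toy function rather than a real network: marking a tensor with `requires_grad=True` tells PyTorch to record every operation applied to it, and calling `backward()` on the result walks that recorded graph to compute gradients. The specific values are illustrative assumptions.

```python
import torch

# A leaf tensor whose operations autograd should track
x = torch.tensor([1.0, 2.0, 3.0], requires_grad=True)

# Each operation records a node in the graph as it runs (define-by-run)
y = x ** 2          # elementwise square
z = y.sum()         # reduce to a scalar so backward() needs no arguments

print(z.grad_fn)    # the node that produced z, e.g. <SumBackward0 object at ...>

# Walk the recorded graph backward and compute dz/dx
z.backward()
print(x.grad)       # tensor([2., 4., 6.]), since d/dx of sum(x^2) is 2x

# Because the graph is rebuilt on every run, ordinary Python control flow works
w = torch.tensor(2.0, requires_grad=True)
out = w * 3 if w.item() > 0 else w * -1
out.backward()
print(w.grad)       # tensor(3.), the gradient of the branch that actually ran
```

The last few lines show why the graph is called dynamic: only the branch that executed becomes part of the graph.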

PyTorch’s Autograd feature

Speaking of automatic differentiation, PyTorch makes it super easy to train neural networks using torch.autograd.

Under the hood, PyTorch is able to:

- Assemble a graph of a neural network

- Perform a forward pass (i.e., make predictions)

- Compute the loss/error

- Traverse the network backwards (i.e., backpropagation) and adjust the parameters of the network such that it (ideally) makes more accurate predictions based on the computed loss/output

The fourth step (backpropagation) is typically the most tedious and time-consuming to implement by hand. Luckily for us, PyTorch’s autograd computes the required gradients automatically.

Note: Keras users typically just call model.fit to train a network while TensorFlow users utilize the GradientTape class. PyTorch requires us to implement our training loop by hand, so the fact that torch.autograd works for us under the hood is a huge help. Be thankful to the PyTorch developers for implementing automatic differentiation so you didn’t have to.
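To make the four steps above concrete, here is a minimal training-loop sketch. The data (a noisy line, y = 3x + 1), the single `nn.Linear` layer, the learning rate, and the epoch count are all illustrative assumptions; the point is that we write the loop ourselves while `loss.backward()` handles backpropagation.

```python
import torch
from torch import nn

torch.manual_seed(0)

# Hypothetical synthetic data: y = 3x + 1 plus a little noise
X = torch.rand(100, 1)
y = 3 * X + 1 + 0.05 * torch.randn(100, 1)

model = nn.Linear(1, 1)                     # step 1: a (tiny) network/graph
loss_fn = nn.MSELoss()
optimizer = torch.optim.SGD(model.parameters(), lr=0.1)

losses = []
for epoch in range(500):
    preds = model(X)                # step 2: forward pass (make predictions)
    loss = loss_fn(preds, y)        # step 3: compute the loss/error
    optimizer.zero_grad()           # clear gradients left over from the last step
    loss.backward()                 # step 4a: autograd backpropagates the loss
    optimizer.step()                # step 4b: adjust the parameters using the gradients
    losses.append(loss.item())

print(f"first loss: {losses[0]:.4f}, last loss: {losses[-1]:.4f}")
# The learned weight and bias should end up close to 3 and 1
print(model.weight.item(), model.bias.item())
```

Note the order inside the loop: stale gradients are cleared, fresh ones are computed by autograd, and only then does the optimizer apply them.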

PyTorch and GPU support

The PyTorch library primarily supports NVIDIA CUDA-based GPUs. GPU acceleration allows you to train neural networks in a fraction of the time.
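A common pattern, sketched below under the assumption that the machine may or may not have a CUDA-capable NVIDIA GPU, is to choose a device at runtime and move both the model and its inputs onto it. The tiny `nn.Linear` model and the random batch are placeholders.

```python
import torch
from torch import nn

# Prefer a CUDA GPU when one is visible, otherwise fall back to the CPU
device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
print(f"using device: {device}")

# The model's parameters and its inputs must live on the same device
model = nn.Linear(4, 2).to(device)
batch = torch.randn(8, 4).to(device)

outputs = model(batch)      # runs on the GPU if one was found
print(outputs.shape)        # torch.Size([8, 2])
print(outputs.device)       # cuda:0 on a GPU machine, cpu otherwise

# Detach from the graph and move back to the CPU before converting to NumPy
result = outputs.detach().cpu().numpy()
```

Because the device is chosen at runtime, the same script runs unchanged on a laptop without a GPU and on a CUDA-equipped workstation.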

Furthermore, PyTorch supports distributed training that can allow you to train your models even faster.

Why is PyTorch popular among researchers?

PyTorch gained a foothold in the research community between 2016 (when PyTorch was released) and 2019 (prior to TensorFlow 2.x being officially released).

The reasons PyTorch was able to obtain this foothold are many, but the predominant ones are:

- Keras, while incredibly easy to use, didn’t provide access to low-level functions that researchers needed to perform novel deep learning research

- In the same vein, Keras made it hard for researchers to implement their own custom optimizers, layer types, and model architectures

- TensorFlow 1.x did provide this low-level access and custom implementation; however, the API was hard to use and not very Pythonic

- PyTorch, and specifically its autograd support, helped resolve many of the issues with TensorFlow 1.x, making it easier for researchers to implement their own custom methods

- Furthermore, PyTorch gives deep learning practitioners complete control over the training loop

There is, of course, a trade-off between the two approaches. Keras makes it trivial to train a neural network using a single call to model.fit, similar to how we train a standard machine learning model inside scikit-learn.

The downside is that researchers could not (easily) modify this model.fit call, so they had to use TensorFlow’s lower-level functions. But these methods didn’t make it easy for them to implement their training routines.

PyTorch solved that problem, which is good in the sense that we have complete control, but bad because we can easily shoot ourselves in the foot with PyTorch (every PyTorch user has forgotten to zero their gradients before).
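The zeroing-gradients pitfall is easy to reproduce. PyTorch accumulates (sums) gradients into `.grad` on every `backward()` call, so forgetting to clear them silently mixes in gradients from previous steps. The toy scalar loss below is an illustrative assumption, not a real model.

```python
import torch

w = torch.tensor(1.0, requires_grad=True)
seen = []

# Call backward() twice WITHOUT clearing .grad: the gradients are summed
for step in range(2):
    loss = (w * 5) ** 2 / 2     # d(loss)/dw = 25 * w, which is 25 at w = 1
    loss.backward()
    seen.append(w.grad.item())
print(seen)                     # [25.0, 50.0], so the second value is wrong

# Clear the stored gradient before the next backward pass. In PyTorch 2.x,
# optimizer.zero_grad() does this for every parameter by default; older
# releases fill .grad with zeros instead.
w.grad = None
loss = (w * 5) ** 2 / 2
loss.backward()
print(w.grad.item())            # 25.0, the correct gradient again
```

Accumulation is deliberate (it lets you sum gradients over several mini-batches), which is exactly why PyTorch leaves clearing them up to you.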

All that said, much of the debate over whether PyTorch or TensorFlow is “better” for research is starting to settle down. The PyTorch 1.x and TensorFlow 2.x APIs implement very similar features; they just go about it in different ways, sort of like learning one programming language versus another. Each programming language has its benefits, but both implement the same types of statements and controls (e.g., “if” statements and “for” loops).

Is PyTorch better than TensorFlow and Keras?

This is the wrong question to ask, especially if you are a novice in deep learning. Neither is better than the other. Keras and TensorFlow have specific uses, just as PyTorch does.

For example, you wouldn’t make a blanket statement saying that Java is unequivocally better than Python. When working with machine learning and data science, there is a strong argument for Python over Java. But if you intend to develop enterprise applications running on multiple architectures with high reliability, then Java is likely a better choice.

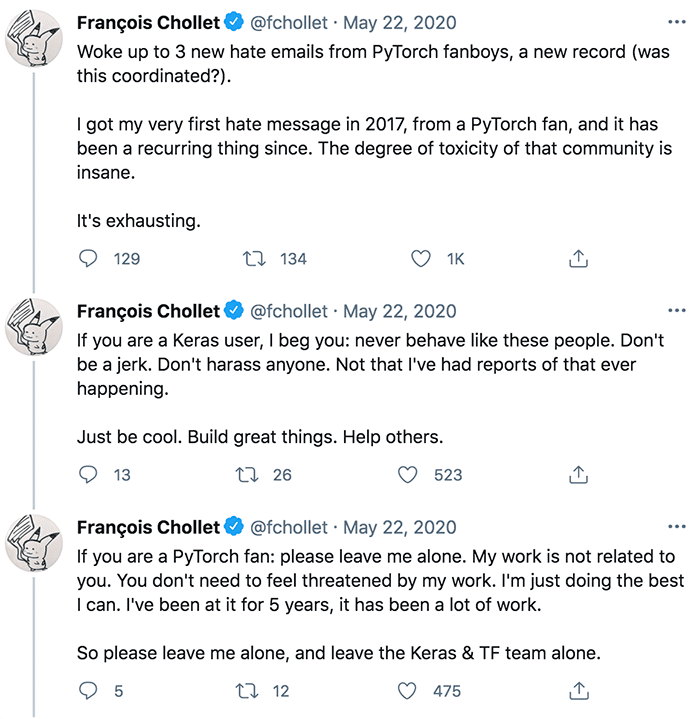

Unfortunately, we humans tend to get “entrenched” in our thinking once we become loyal to a particular camp or group. The entrenchment surrounding PyTorch versus Keras/TensorFlow can sometimes get ugly, once prompting François Chollet, the creator of Keras, to ask PyTorch users to stop sending him hate mail.

The hate mail isn’t limited to François, either. I’ve used Keras and TensorFlow in a good amount of my deep learning tutorials here on PyImageSearch, and I’m saddened to report that I’ve received hate mail criticizing me for using Keras/TensorFlow, calling me stupid/dumb, telling me to shut down PyImageSearch, and claiming that I’m not a “real” deep learning practitioner (whatever that means).

I’m sure other educators have experienced similar acts, regardless of whether they wrote tutorials using Keras/TensorFlow or PyTorch. Both sides get ugly; it’s not limited to PyTorch users.

My point here is that you shouldn’t become so entrenched that you attack others based on what deep learning library they use. Seriously, there are more important issues in the world that deserve your attention — and you really don’t need to use the reply button on your email client or social media platform to instigate and catalyze more hate into our already fragile world.

Secondly, if you are new to deep learning, it truly doesn’t matter which library you start with. The APIs of both PyTorch 1.x and TensorFlow 2.x have converged — both implement similar functionality, just done in different ways.

What you learn in one library will transfer to the other, just like learning a new programming language. The first language you learn is often the hardest since you are not only learning the syntax of the language, but also the control structures and program design.

Your second programming language is often an order of magnitude easier to learn since by that point you already understand the basics of control and program design.

The same is true for deep learning libraries. Just pick one and learn it. If you have trouble picking, flip a coin. It genuinely doesn’t matter; your experience will transfer regardless.

Should I use PyTorch instead of TensorFlow/Keras?

As I’ve mentioned multiple times in this post, choosing between Keras/TensorFlow and PyTorch doesn’t involve making blanket statements such as:

- “If you are doing research, you should absolutely use PyTorch.”

- “If you’re a beginner, you should use Keras.”

- “If you’re developing an industry application, use TensorFlow and Keras.”

The feature sets of PyTorch and Keras/TensorFlow have largely converged: both contain essentially the same set of features, just accomplished in different ways.

If you are brand new to deep learning, just pick one and learn it. Personally, I do think Keras is the most suitable for teaching budding deep learning practitioners. I also think that Keras is the best choice to rapidly prototype and deploy deep learning models.

That said, PyTorch does make it easier for more advanced practitioners to implement custom training loops, layer types, and architectures. This argument is somewhat diminished now that the TensorFlow 2.x API is out, but I believe it’s still worth mentioning.

Most importantly, whatever deep learning library you use or choose to learn, don’t become a fanatic, don’t troll message boards, and in general, don’t cause problems. There’s enough hate in this world already — as a scientific community we should be above the hate mail and hair pulling.


Summary

In this tutorial, you learned about the PyTorch deep learning library, including:

- What PyTorch is

- How to install PyTorch on your machine

- PyTorch GPU support

- Why PyTorch is popular in the research community

- Whether to use PyTorch or Keras/TensorFlow in your projects

Next week, you’ll gain some hands-on experience with PyTorch by implementing and training your first neural network.
