In this tutorial, you will learn how to perform transfer learning with Keras, Deep Learning, and Python on your own custom datasets.

Imagine this:

You’ve just been hired by Yelp to work in their computer vision department.

Yelp has just launched a new feature on its website that allows reviewers to take photos of their food/dishes and then associate them with particular items on a restaurant’s menu.

It’s a neat feature…

…but they are getting a lot of unwanted image spam.

Certain nefarious users aren’t taking photos of their dishes…instead, they are taking photos of… (well, you can probably guess).

Your task?

Figure out how to create an automated computer vision application that can distinguish between “food” and “not food”, thereby allowing Yelp to continue with their new feature launch and provide value to their users.

So, how are you going to build such an application?

The answer lies in transfer learning via deep learning.

Today marks the start of a brand new set of tutorials on transfer learning using Keras. Transfer learning is the process of:

- Taking a network pre-trained on a dataset

- And utilizing it to recognize image/object categories it was not trained on

Essentially, we can utilize the robust, discriminative filters learned by state-of-the-art networks on challenging datasets (such as ImageNet or COCO), and then apply these networks to recognize objects the model was never trained on.

In general, there are two types of transfer learning in the context of deep learning:

- Transfer learning via feature extraction

- Transfer learning via fine-tuning

When performing feature extraction, we treat the pre-trained network as an arbitrary feature extractor, allowing the input image to propagate forward, stopping at a pre-specified layer, and taking the outputs of that layer as our features.

Fine-tuning, on the other hand, requires that we update the model architecture itself by removing the previous fully-connected layer heads, providing new, freshly initialized ones, and then training the new FC layers to predict our input classes.

We’ll be covering both techniques in this series here on the PyImageSearch blog, but today we are going to focus on feature extraction.

To learn how to perform transfer learning via feature extraction with Keras, just keep reading!

Transfer learning with Keras and Deep Learning

2020-05-13 Update: This blog post is now TensorFlow 2+ compatible!

Note: Many of the transfer learning concepts I’ll be covering in this series of tutorials also appear in my book, Deep Learning for Computer Vision with Python. Inside the book, I go into much more detail (and include more of my tips, suggestions, and best practices). If you would like more detail on transfer learning after going through this guide, definitely take a look at my book.

In the first part of this tutorial, we will review two methods of transfer learning: feature extraction and fine-tuning.

I’ll then provide a detailed discussion of how to perform transfer learning via feature extraction (the primary focus of this tutorial).

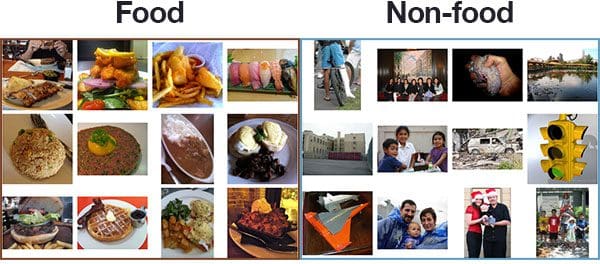

From there, we’ll review the Food-5K dataset, a dataset containing 5,000 images falling into two classes: “food” and “not-food”.

We’ll utilize transfer learning via feature extraction to recognize both of these classes in this tutorial.

Once we have a good handle on the dataset, we’ll start coding.

We’ll have a number of Python files to review, each accomplishing a specific step, including:

- Creating a configuration file.

- Building our dataset (i.e., putting the images in the proper directory structure).

- Extracting features from our input images using Keras and pre-trained CNNs.

- Training a Logistic Regression model on top of the extracted features.

Parts of the code we’ll be reviewing here today will also be utilized in the rest of the transfer learning series, so if you intend to follow along with the tutorials, take the time now to ensure you understand the code.

Two types of transfer learning: feature extraction and fine-tuning

Note: The following section has been adapted from my book, Deep Learning for Computer Vision with Python. For the full set of chapters on transfer learning, please refer to the text.

Consider a traditional machine learning scenario where we are given two classification challenges.

In the first challenge, our goal is to train a Convolutional Neural Network to recognize dogs vs. cats in an image.

Then, in the second project, we are tasked with recognizing three separate species of bears: grizzly bears, polar bears, and giant pandas.

Using standard practices in machine learning/deep learning, we could treat these challenges as two separate problems:

- First, we would gather a sufficiently large labeled dataset of dogs and cats, followed by training a model on the dataset

- We would then repeat the process a second time, only this time, gathering images of our bear species, and then training a model on top of the labeled dataset.

Transfer learning proposes a different paradigm — what if we could utilize an existing pre-trained classifier as a starting point for a new classification, object detection, or instance segmentation task?

Using transfer learning in the context of the proposed challenges above, we would:

- First train a Convolutional Neural Network to recognize dogs versus cats

- Then, take the same CNN trained on the dog and cat data and use it to distinguish between the bear classes, even though no bear data was mixed with the dog and cat data during the initial training

Does this sound too good to be true?

It’s actually not.

Deep neural networks trained on large-scale datasets such as ImageNet and COCO have proven to be excellent at the task of transfer learning.

These networks learn a set of rich, discriminative features capable of recognizing 100s to 1,000s of object classes — it only makes sense that these filters can be reused for tasks other than what the CNN was originally trained on.

In general, there are two types of transfer learning when applied to deep learning for computer vision:

- Treating networks as arbitrary feature extractors.

- Removing the fully-connected layers of an existing network, placing a new set of FC layers on top of the CNN, and then fine-tuning these weights (and optionally previous layers) to recognize the new object classes.

In this blog post, we’ll focus primarily on the first method of transfer learning, treating networks as feature extractors.

We’ll discuss fine-tuning networks later in this series on transfer learning with deep learning.

Transfer learning via feature extraction

Note: The following section has been adapted from my book, Deep Learning for Computer Vision with Python. For the full set of chapters on feature extraction, please refer to the text.

Typically, you’ll treat a Convolutional Neural Network as an end-to-end image classifier:

- We input an image to the network.

- The image forward propagates through the network.

- We obtain our final classification probabilities at the end of the network.

However, there is no “rule” that says we must allow the image to forward propagate through the entire network.

Instead, we can:

- Stop propagation at an arbitrary, but pre-specified layer (such as an activation or pooling layer).

- Extract the values from the specified layer.

- Treat the values as a feature vector.
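To make the idea concrete, here is a minimal sketch using a toy NumPy "network" rather than a real CNN. The layer names (`act1`, `pool1`, `pool2`) and the `extract_features` helper are hypothetical; the point is only that we can run the forward pass up to a chosen layer, stop there, and flatten whatever that layer produced into a feature vector.

```python
import numpy as np

# Toy stand-ins for CNN layers (hypothetical, NOT VGG16's real layers)
def relu(x):
    return np.maximum(x, 0.0)

def max_pool_2x2(x):
    # x has shape (H, W, C) with even H and W; take the max of each 2x2 window
    h, w, c = x.shape
    return x.reshape(h // 2, 2, w // 2, 2, c).max(axis=(1, 3))

# An ordered list of (layer_name, function) pairs acts as our "network"
LAYERS = [
    ("act1", relu),
    ("pool1", max_pool_2x2),
    ("pool2", max_pool_2x2),
    ("flatten", lambda x: x.reshape(-1)),
]

def extract_features(image, layers, stop_at):
    """Forward-propagate `image`, stop at layer `stop_at`, and return
    that layer's output flattened into a 1-D feature vector."""
    x = image
    for name, fn in layers:
        x = fn(x)
        if name == stop_at:
            return x.reshape(-1)
    raise ValueError(f"layer {stop_at!r} not found")

image = np.random.default_rng(0).normal(size=(8, 8, 3))
features = extract_features(image, LAYERS, stop_at="pool2")
print(features.shape)  # (12,) because pool2 outputs a 2 x 2 x 3 volume
```

With a real Keras model, the analogous step is usually either building a new `Model` whose outputs are an intermediate layer's output or, as we do later in this post, loading the network with `include_top=False`.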

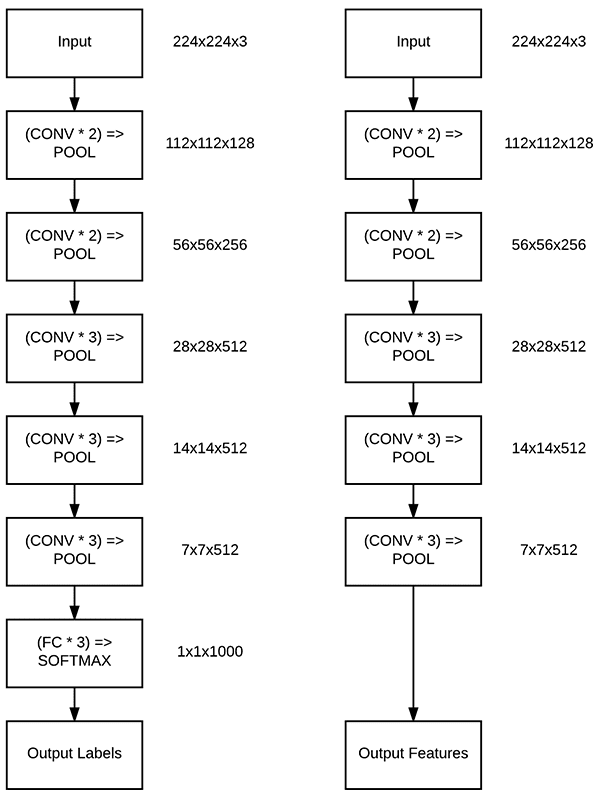

For example, let’s consider the VGG16 network by Simonyan and Zisserman in Figure 2 (left) at the top of this section.

Along with the layers in the network, I have also included the input and output volume shapes for each layer.

When treating networks as a feature extractor, we essentially “chop off” the network at our pre-specified layer (typically prior to the fully-connected layers, but it really depends on your particular dataset).

If we were to stop propagation before the fully-connected layers in VGG16, the last layer in the network would become the max-pooling layer (Figure 2, right), which has an output shape of 7 x 7 x 512. Flattening this volume into a feature vector, we obtain a list of 7 x 7 x 512 = 25,088 values; this list of numbers serves as the feature vector used to quantify the input image.

We can then repeat the process for our entire dataset of images.

Given a total of N images, our dataset would now be represented as a list of N vectors, each of which is 25,088-dim.
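The arithmetic above is easy to check in NumPy. The sketch below assumes we already have the max-pooling outputs for a small batch of N images (random numbers stand in for real VGG16 activations) and flattens each 7 x 7 x 512 volume into a single 25,088-dim row, giving an N x 25,088 feature matrix.

```python
import numpy as np

# Stand-in for VGG16's final max-pooling output for N images: (N, 7, 7, 512).
# Random values replace real activations; only the shapes matter here.
N = 4
pooled = np.random.default_rng(0).random((N, 7, 7, 512), dtype=np.float32)

# Flatten each image's 7 x 7 x 512 volume into one 25,088-dim row
features = pooled.reshape((pooled.shape[0], -1))
print(features.shape)  # (4, 25088)

# Row i is exactly image i's volume, flattened in row-major (C) order
assert np.array_equal(features[2], pooled[2].ravel())
```

Using `-1` for the second dimension lets NumPy infer 25,088, which avoids hard-coding the layer's output size.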

Once we have our feature vectors, we can train off-the-shelf machine learning models such as Linear SVM, Logistic Regression, Decision Trees, or Random Forests on top of these features to obtain a classifier that can recognize new classes of images.

That said, the two most common machine learning models you’ll see for transfer learning via feature extraction are:

- Logistic Regression

- Linear SVM

Why those two models?

First, keep in mind our feature extractor is a CNN.

CNNs are non-linear models capable of learning non-linear features, and we are assuming that the features learned by the CNN are already robust and discriminative.

The second, and arguably more important, reason is that our feature vectors tend to be very large and high-dimensional.

We, therefore, need a fast model that can be trained on top of the features — linear models tend to be very fast to train.

For example, our dataset of 5,000 images, each represented by a feature vector of 25,088-dim, can be trained in a few seconds using a Logistic Regression model.
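To show why a linear model on top of fixed features is cheap, here is a minimal sketch of binary Logistic Regression trained with plain full-batch gradient descent in NumPy. It is an illustration only: scikit-learn's `LogisticRegression` (which we use later) relies on the lbfgs solver and applies L2 regularization by default, and the synthetic Gaussian "features" below are an assumption standing in for real CNN outputs.

```python
import numpy as np

def train_logistic_regression(X, y, lr=0.1, epochs=500):
    """Fit binary logistic regression with full-batch gradient descent.
    X: (N, D) feature matrix; y: (N,) array of 0/1 labels."""
    n, d = X.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        p = 1.0 / (1.0 + np.exp(-(X @ w + b)))  # predicted P(y = 1)
        grad = p - y                             # d(log-loss) / d(logit)
        w -= lr * (X.T @ grad) / n               # average gradient for w
        b -= lr * grad.mean()                    # average gradient for b
    return w, b

def predict(X, w, b):
    # A positive logit means P(y = 1) > 0.5
    return (X @ w + b > 0).astype(int)

# Synthetic stand-in for CNN features: two well-separated Gaussian blobs
rng = np.random.default_rng(42)
X0 = rng.normal(loc=-1.0, size=(200, 64))
X1 = rng.normal(loc=+1.0, size=(200, 64))
X = np.vstack([X0, X1])
y = np.concatenate([np.zeros(200), np.ones(200)])

w, b = train_logistic_regression(X, y)
accuracy = (predict(X, w, b) == y).mean()
print(f"training accuracy: {accuracy:.3f}")
```

Each gradient step costs only a couple of matrix-vector products over the N x D feature matrix, which is why linear models scale comfortably to 25,088-dim features.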

To wrap up this section, I want you to keep in mind that the CNN itself is not capable of recognizing these new classes.

Instead, we are using the CNN as an intermediary feature extractor.

The downstream machine learning classifier will take care of learning the underlying patterns of the features extracted from the CNN.

The Food-5K dataset

The dataset we’ll be using here today is the Food-5K dataset, curated by the Multimedia Signal Processing Group (MSPG) of the Swiss Federal Institute of Technology.

The dataset, as the name suggests, consists of 5,000 images, belonging to two classes:

- Food

- Non-food

Our goal is to train a classifier such that we can distinguish between these two classes.

MSPG has provided us with pre-split training, validation, and testing sets. We’ll be using these splits both in this guide on transfer learning via feature extraction and in the rest of the tutorials in this series.

Downloading the Food-5K dataset

Due to the unreliability of the Food-5K download method originally posted at the following password-protected FTP site:

- Host: tremplin.epfl.ch

- Username: FoodImage@grebvm2.epfl.ch

- Password: Cahc1moo

…I’ve now included the dataset directly here:

Project structure

Before moving on, go ahead and:

- Find the “Downloads” section of this blog post and grab the code.

- Download the Food-5K dataset using the link directly above.

You’ll then have two .zip files. First, extract transfer-learning-keras.zip. Inside, you’ll find an empty folder named Food-5K/. Second, place the Food-5K.zip file inside that folder and extract it.

Once you’ve completed those steps, you’ll be presented with the following directory structure:

```
$ tree --dirsfirst --filelimit 10
.
├── Food-5K
│   ├── evaluation [1000 entries]
│   ├── training [3000 entries]
│   ├── validation [1000 entries]
│   └── Food-5K.zip
├── dataset
├── output
├── pyimagesearch
│   ├── __init__.py
│   └── config.py
├── build_dataset.py
├── extract_features.py
└── train.py

7 directories, 6 files
```

As you can see, Food-5K/ contains evaluation/, training/, and validation/ sub-directories (these will appear after you extract Food-5K.zip). The training/ sub-directory contains 3,000 .jpg image files, while evaluation/ and validation/ contain 1,000 each.

Our dataset/ directory, while empty now, will soon contain the Food-5K images in a more organized form (to be discussed in the section, “Building our dataset for feature extraction”).

Upon successfully executing today’s Python scripts, the output/ directory will house our extracted features (stored in three separate .csv files) as well as our label encoder and model (both of which are in .cpickle format). These files are intentionally not included in the .zip; you must follow this tutorial to create them.

Our Python scripts include:

- pyimagesearch/config.py: Our custom configuration file will help us manage our dataset, class names, and paths. It is written in Python directly so that we can use os.path to build OS-specific formatted file paths directly in the script.

- build_dataset.py: Using the configuration, this script will create an organized dataset on disk, making it easy to extract features from.

- extract_features.py: The transfer learning magic begins here. This Python script will use a pre-trained CNN to extract raw features, storing the results in a .csv file. The label encoder .cpickle file will also be output via this script.

- train.py: Our training script will train a Logistic Regression model on top of the previously computed features. We will evaluate and save the resulting model as a .cpickle.

The config.py and build_dataset.py scripts will be re-used in the rest of the series on transfer learning so make sure you pay close attention to them!

Our configuration file

Let’s get started by reviewing our configuration file.

Open up config.py in the pyimagesearch submodule and insert the following code:

```python
# import the necessary packages
import os

# initialize the path to the *original* input directory of images
ORIG_INPUT_DATASET = "Food-5K"

# initialize the base path to the *new* directory that will contain
# our images after computing the training and testing split
BASE_PATH = "dataset"
```

We begin with a single import. We’ll use the os module (Line 2) in this config to concatenate paths properly.

The ORIG_INPUT_DATASET is the path to the original input dataset (i.e., where you downloaded and unarchived the Food-5K dataset).

The next path, BASE_PATH, is where our organized dataset will live (the result of executing build_dataset.py).

Note: The directory structure is not especially useful for this particular post, but it will be later in the series once we get to fine-tuning. Again, I consider organizing datasets in this manner a “best practice” for reasons you’ll see in this series.

Let’s specify more dataset configs as well as our class labels and batch size:

```python
# define the names of the training, testing, and validation
# directories
TRAIN = "training"
TEST = "evaluation"
VAL = "validation"

# initialize the list of class label names
CLASSES = ["non_food", "food"]

# set the batch size
BATCH_SIZE = 32
```

The names of the training, evaluation, and validation directories are specified on Lines 13-15.

The CLASSES are specified in list form on Line 18. As previously mentioned, we’ll be working with "food" and "non_food" images.

When extracting features, we’ll break our data into bite-sized chunks called batches. The BATCH_SIZE is specified on Line 21.

Finally, we can build the rest of our paths:

```python
# initialize the label encoder file path and the output directory to
# where the extracted features (in CSV file format) will be stored
LE_PATH = os.path.sep.join(["output", "le.cpickle"])
BASE_CSV_PATH = "output"

# set the path to the serialized model after training
MODEL_PATH = os.path.sep.join(["output", "model.cpickle"])
```

Our label encoder path is concatenated on Line 25 where the result of joining the paths is output/le.cpickle on Linux/Mac or output\le.cpickle on Windows.
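If you want to see the OS-specific behavior for yourself, Python ships both path flavors as the standard modules `posixpath` and `ntpath`, and `os.path` is simply whichever one matches your platform. A quick check using the same file name as our config:

```python
import ntpath     # Windows path rules, importable on any OS
import os
import posixpath  # POSIX path rules, importable on any OS

# os.path is posixpath on Linux/macOS and ntpath on Windows, which is why
# config.py's os.path.sep.join(...) produces the platform's separator.
print(posixpath.join("output", "le.cpickle"))  # output/le.cpickle
print(ntpath.join("output", "le.cpickle"))     # output\le.cpickle

# For plain components like these, sep.join and os.path.join agree
print(os.path.sep.join(["output", "le.cpickle"]) == os.path.join("output", "le.cpickle"))  # True
```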

The extracted features will be stored as CSV files (one per data split) inside the directory specified by BASE_CSV_PATH.

Lastly, we assemble the path to our exported model file in MODEL_PATH.

Building our dataset for feature extraction

Before we can extract features from our set of input images, let’s take the time to organize our images on disk.

I prefer to have my dataset on disk organized in the format of:

dataset_name/class_label/example_of_class_label.jpg

Maintaining this directory structure:

- Not only keeps our dataset organized on disk…

- …but also enables us to utilize Keras’ flow_from_directory function when we get to fine-tuning later in this series of tutorials.

Since the Food-5K dataset also provides pre-supplied data splits, our final directory structure will have the form:

dataset_name/split_name/class_label/example_of_class_label.jpg

Let’s go ahead and build our dataset + directory structure now.

Open up the build_dataset.py file and insert the following code:

```python
# import the necessary packages
from pyimagesearch import config
from imutils import paths
import shutil
import os

# loop over the data splits
for split in (config.TRAIN, config.TEST, config.VAL):
    # grab all image paths in the current split
    print("[INFO] processing '{} split'...".format(split))
    p = os.path.sep.join([config.ORIG_INPUT_DATASET, split])
    imagePaths = list(paths.list_images(p))
```

Our packages are imported on Lines 2-5. We’ll use our config (Line 2) throughout this script to recall our settings. The other three imports (paths, shutil, and os) will allow us to traverse directories, create folders, and copy files.

On Line 8 we begin looping over our training, testing, and validation splits.

Lines 11 and 12 create a list of all imagePaths in the split.

From there we’ll go ahead and loop over the imagePaths:

```python
    # loop over the image paths
    for imagePath in imagePaths:
        # extract class label from the filename
        filename = imagePath.split(os.path.sep)[-1]
        label = config.CLASSES[int(filename.split("_")[0])]

        # construct the path to the output directory
        dirPath = os.path.sep.join([config.BASE_PATH, split, label])

        # if the output directory does not exist, create it
        if not os.path.exists(dirPath):
            os.makedirs(dirPath)

        # construct the path to the output image file and copy it
        p = os.path.sep.join([dirPath, filename])
        shutil.copy2(imagePath, p)
```

For each imagePath in the split, we proceed to:

- Extract the class label from the filename (Lines 17 and 18).

- Construct the path to the output directory based on the BASE_PATH, split, and label (Line 21).

- Create dirPath (if necessary) via Lines 24 and 25.

- Copy the image into the destination path (Lines 28 and 29).
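The filename-to-directory mapping is the heart of this script, so here it is isolated as a small, testable function. `output_path_for` is a hypothetical helper written for illustration (it is not part of the downloadable code), and it assumes, as the parsing on Lines 17 and 18 implies, that every Food-5K filename begins with its class index followed by an underscore; `1_42.jpg` and `0_7.jpg` are made-up examples.

```python
import os

CLASSES = ["non_food", "food"]  # same order as config.CLASSES
BASE_PATH = "dataset"           # same as config.BASE_PATH

def output_path_for(image_path, split):
    """Hypothetical helper mirroring build_dataset.py: the integer prefix
    of the filename indexes CLASSES, which picks the label directory
    under BASE_PATH/split/."""
    filename = os.path.basename(image_path)
    label = CLASSES[int(filename.split("_")[0])]
    return os.path.join(BASE_PATH, split, label, filename)

print(output_path_for(os.path.join("Food-5K", "training", "1_42.jpg"), "training"))
# dataset/training/food/1_42.jpg on Linux/macOS
```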

Now that build_dataset.py has been coded, use the “Downloads” section of the tutorial to download an archive of the source code.

You can then execute build_dataset.py using the following command:

```
$ python build_dataset.py
[INFO] processing 'training split'...
[INFO] processing 'evaluation split'...
[INFO] processing 'validation split'...
```

Here you can see that our script executed successfully.

To verify your directory structure on disk, use the ls command:

```
$ ls dataset/
evaluation  training  validation
```

Inside the dataset directory, we have our training, evaluation, and validation splits.

And inside each of those directories, we have class label directories:

```
$ ls dataset/training/
food  non_food
```
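If you would rather verify the result from Python than eyeball `ls` output, a short sketch like the one below walks `dataset/` and counts the images in each split/class directory. The `count_images` function and its extension list are assumptions for illustration, not part of the tutorial's code.

```python
import os
from collections import Counter

def count_images(base_path, exts=(".jpg", ".jpeg", ".png")):
    """Return a Counter mapping (split, class_label) -> number of images,
    assuming the base_path/split/class_label/image.jpg layout."""
    counts = Counter()
    for root, _dirs, files in os.walk(base_path):
        rel = os.path.relpath(root, base_path).split(os.sep)
        if len(rel) != 2:  # only look at split/class_label directories
            continue
        n = sum(f.lower().endswith(exts) for f in files)
        if n:
            counts[(rel[0], rel[1])] += n
    return counts

if __name__ == "__main__":
    for (split, label), n in sorted(count_images("dataset").items()):
        print(f"{split}/{label}: {n}")
```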

Extracting features from our dataset using Keras and pre-trained CNNs

Let’s move on to the actual feature extraction component of transfer learning.

All code used for feature extraction using a pre-trained CNN will live inside extract_features.py — open up that file and insert the following code:

```python
# import the necessary packages
from sklearn.preprocessing import LabelEncoder
from tensorflow.keras.applications import VGG16
from tensorflow.keras.applications.vgg16 import preprocess_input
from tensorflow.keras.preprocessing.image import img_to_array
from tensorflow.keras.preprocessing.image import load_img
from pyimagesearch import config
from imutils import paths
import numpy as np
import pickle
import random
import os

# load the VGG16 network and initialize the label encoder
print("[INFO] loading network...")
model = VGG16(weights="imagenet", include_top=False)
le = None
```

On Lines 2-12, all the packages necessary for extracting features are imported. Most notably, this includes VGG16.

VGG16 is the convolutional neural network (CNN) we are using for transfer learning (Line 3).

On Line 16, we load the model while specifying two parameters:

- weights="imagenet": Pre-trained ImageNet weights are loaded for transfer learning.

- include_top=False: We do not include the fully-connected head with the softmax classifier. In other words, we chop off the head of the network.

With weights dialed in and by loading our model without the head, we are now ready for transfer learning. We will use the output values of the network directly, storing the results as feature vectors.
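Where does the 7 x 7 x 512 output come from? VGG16's convolutions use 3x3 kernels with "same" padding, so only its five 2x2, stride-2 max-pooling layers shrink the spatial size, and the final block has 512 filters. A back-of-the-envelope check for our 224x224 inputs:

```python
# Spatial size after VGG16's convolutional base (include_top=False).
# Convolutions keep height/width unchanged; each of the five max-pooling
# layers (the last one is named block5_pool in Keras) halves them.
input_size = 224
num_pools = 5
final_filters = 512  # filters in VGG16's block 5 convolutions

spatial = input_size // (2 ** num_pools)
feature_dim = spatial * spatial * final_filters
print(spatial, feature_dim)  # 7 25088
```

If you instead construct the network with `VGG16(weights="imagenet", include_top=False, input_shape=(224, 224, 3))`, Keras should report `model.output_shape` as `(None, 7, 7, 512)`; without `input_shape`, the spatial dimensions are left as `None` because the convolutional base accepts arbitrary image sizes.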

Finally, our label encoder is initialized on Line 17.

Let’s loop over our data splits:

```python
# loop over the data splits
for split in (config.TRAIN, config.TEST, config.VAL):
    # grab all image paths in the current split
    print("[INFO] processing '{} split'...".format(split))
    p = os.path.sep.join([config.BASE_PATH, split])
    imagePaths = list(paths.list_images(p))

    # randomly shuffle the image paths and then extract the class
    # labels from the file paths
    random.shuffle(imagePaths)
    labels = [p.split(os.path.sep)[-2] for p in imagePaths]

    # if the label encoder is None, create it
    if le is None:
        le = LabelEncoder()
        le.fit(labels)

    # open the output CSV file for writing
    csvPath = os.path.sep.join([config.BASE_CSV_PATH,
        "{}.csv".format(split)])
    csv = open(csvPath, "w")
```

Looping over each split (training, testing, and validation) begins on Line 20.

First, we grab all imagePaths for the split (Lines 23 and 24).

Paths are randomly shuffled via Line 28, and from there, our class labels are extracted from the paths themselves (Line 29).

If necessary, our label encoder is instantiated and fitted (Lines 32-34), ensuring we can convert the string class labels to integers.
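One detail worth knowing: `LabelEncoder` assigns integers in sorted (alphabetical) order of the class names, so here `food` becomes 0 and `non_food` becomes 1, the opposite of the order in `config.CLASSES`. That is harmless in this pipeline, since `config.CLASSES` is only used to name directories, but it matters if you ever mix the two. The sketch below reproduces both steps with plain NumPy on a few hypothetical paths; `np.unique(..., return_inverse=True)` is used as a stand-in for what `LabelEncoder.fit` and `transform` compute.

```python
import os
import numpy as np

# Hypothetical paths following the dataset/split/class_label/file.jpg layout
image_paths = [
    os.path.join("dataset", "training", "non_food", "0_10.jpg"),
    os.path.join("dataset", "training", "food", "1_3.jpg"),
    os.path.join("dataset", "training", "food", "1_7.jpg"),
]

# The class label is the parent directory name (index -2 after splitting)
labels = [p.split(os.path.sep)[-2] for p in image_paths]

# np.unique returns the sorted unique labels and, with return_inverse=True,
# each label's index into that sorted array (alphabetical class order)
classes, encoded = np.unique(labels, return_inverse=True)
print(classes.tolist(), encoded.tolist())  # ['food', 'non_food'] [1, 0, 0]
```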

Next, we construct the path to output our CSV files (Lines 37-39). We will have three CSV files, one for each data split. Each CSV will have N rows, one for each of the images in the data split.

The next step is to loop over our imagePaths in BATCH_SIZE chunks:

```python
    # loop over the images in batches
    for (b, i) in enumerate(range(0, len(imagePaths), config.BATCH_SIZE)):
        # extract the batch of images and labels, then initialize the
        # list of actual images that will be passed through the network
        # for feature extraction
        print("[INFO] processing batch {}/{}".format(b + 1,
            int(np.ceil(len(imagePaths) / float(config.BATCH_SIZE)))))
        batchPaths = imagePaths[i:i + config.BATCH_SIZE]
        batchLabels = le.transform(labels[i:i + config.BATCH_SIZE])
        batchImages = []
```

To create our batches of imagePaths, we use Python’s range function. The function accepts three parameters: start, stop, and step. You can read more about range in this detailed explanation.

Our batches will step through the entire list of imagePaths. The step is our batch size (32 unless you adjust it in the configuration settings).

On Lines 48 and 49 the current batch of image paths and labels are extracted using array slicing. Our batchImages list is then initialized on Line 50.
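The batching arithmetic is worth sanity-checking against the log output shown when we run the script. This pure-Python sketch (the `batch_slices` generator is hypothetical) reproduces the loop's `range`-based slicing for the Food-5K split sizes: 3,000 training images and 1,000 evaluation images.

```python
BATCH_SIZE = 32  # matches config.BATCH_SIZE in this tutorial

def batch_slices(n_items, batch_size):
    """Yield (batch_number, start, stop) for consecutive batches, the same
    way extract_features.py slices imagePaths[i:i + batch_size]."""
    for b, i in enumerate(range(0, n_items, batch_size)):
        yield b + 1, i, min(i + batch_size, n_items)

for split, n_images in [("training", 3000), ("evaluation", 1000)]:
    batches = list(batch_slices(n_images, BATCH_SIZE))
    _, start, stop = batches[-1]
    print(split, "batches:", len(batches), "last batch size:", stop - start)
# training batches: 94 last batch size: 24
# evaluation batches: 32 last batch size: 8
```

The final batch is simply smaller than `BATCH_SIZE`, because Python slicing past the end of a list is safe.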

Let’s go ahead and populate our batchImages now:

```python
        # loop over the images and labels in the current batch
        for imagePath in batchPaths:
            # load the input image using the Keras helper utility
            # while ensuring the image is resized to 224x224 pixels
            image = load_img(imagePath, target_size=(224, 224))
            image = img_to_array(image)

            # preprocess the image by (1) expanding the dimensions and
            # (2) subtracting the mean RGB pixel intensity from the
            # ImageNet dataset
            image = np.expand_dims(image, axis=0)
            image = preprocess_input(image)

            # add the image to the batch
            batchImages.append(image)
```

Looping over batchPaths (Line 53), we will load each image, preprocess it, and gather it into batchImages.

The image itself is loaded on Line 56.

Preprocessing includes:

- Resizing to 224×224 pixels via the target_size parameter on Line 56.

- Converting to array format (Line 57).

- Adding a batch dimension (Line 62).

- Mean subtraction (Line 63).
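For reference, Keras documents VGG16's `preprocess_input` as converting images from RGB to BGR and then zero-centering each color channel with respect to ImageNet, without scaling. The NumPy sketch below is our own approximation of that behavior (the mean values are the ImageNet BGR channel means used by Keras' "caffe"-style preprocessing); in real code, call `preprocess_input` itself. It also shows how `np.expand_dims` followed by `np.vstack` turns a list of single images into one batch array.

```python
import numpy as np

# ImageNet per-channel means in BGR order (approximation of what Keras'
# VGG16 preprocess_input subtracts; use the real function in practice)
IMAGENET_BGR_MEAN = np.array([103.939, 116.779, 123.68], dtype="float32")

def vgg16_style_preprocess(batch_rgb):
    """batch_rgb: float array of shape (N, H, W, 3), RGB order, 0-255."""
    batch_bgr = batch_rgb[..., ::-1]      # reverse channels: RGB -> BGR
    return batch_bgr - IMAGENET_BGR_MEAN  # zero-center each channel

# Two hypothetical 224x224 images whose every pixel is R=200, G=100, B=50
pixel = np.array([200, 100, 50], dtype="float32")
images = [np.ones((224, 224, 3), dtype="float32") * pixel for _ in range(2)]

# Give each image a batch axis, then stack them, as extract_features.py does
batch = np.vstack([np.expand_dims(img, axis=0) for img in images])
print(batch.shape)  # (2, 224, 224, 3)

out = vgg16_style_preprocess(batch)
# Each pixel becomes BGR [50, 100, 200] minus the means, about [-53.9, -16.8, 76.3]
print(out[0, 0, 0])
```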

If these preprocessing steps appear foreign, please refer to Deep Learning for Computer Vision with Python.

Finally, the image is added to the batch via Line 66.

Now we will pass the batch of images through our network to extract features:

```python
        # pass the images through the network and use the outputs as
        # our actual features, then reshape the features into a
        # flattened volume
        batchImages = np.vstack(batchImages)
        features = model.predict(batchImages, batch_size=config.BATCH_SIZE)
        features = features.reshape((features.shape[0], 7 * 7 * 512))
```

Our batch of images is sent through the network via Lines 71 and 72.

Keep in mind that we have removed the fully-connected layer head of the network. Instead, the forward propagation stops at the max-pooling layer. We will treat the output of the max-pooling layer as a list of features, also known as a “feature vector”.

The output of the max-pooling layer has shape (batch_size, 7, 7, 512). We can thus reshape the features into a NumPy array of shape (batch_size, 7 * 7 * 512), i.e., (batch_size, 25088), treating the output of the CNN as one feature vector per image.

Let’s wrap up this script:

```python
        # loop over the class labels and extracted features
        for (label, vec) in zip(batchLabels, features):
            # construct a row that consists of the class label and
            # extracted features
            vec = ",".join([str(v) for v in vec])
            csv.write("{},{}\n".format(label, vec))

    # close the CSV file
    csv.close()

# serialize the label encoder to disk
f = open(config.LE_PATH, "wb")
f.write(pickle.dumps(le))
f.close()
```

Maintaining our batch efficiency, the features and associated class labels are written to our CSV file (Lines 76-80).

Inside the CSV file, the class label is the first field in each row (enabling us to easily extract it from the row during training). The feature vec follows.
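Here is a small round-trip sketch of that row format: `format_row` mirrors the writing code above, and `parse_row` mirrors the parsing done in `train.py`. Both helper names are hypothetical, and the three-element vector stands in for a real 25,088-dim feature vector.

```python
import numpy as np

def format_row(label, vec):
    """Build one CSV line in the tutorial's format: the label first,
    then the flattened feature values, all comma-separated."""
    return "{},{}\n".format(label, ",".join(str(v) for v in vec))

def parse_row(line):
    """Inverse of format_row, mirroring load_data_split in train.py."""
    row = line.strip().split(",")
    return row[0], np.array(row[1:], dtype="float")

vec = np.array([0.0, 1.5, 0.25], dtype="float32")
line = format_row(1, vec)
print(repr(line))               # '1,0.0,1.5,0.25\n'

label, parsed = parse_row(line)
print(label, parsed.tolist())   # 1 [0.0, 1.5, 0.25]
```

Note that the label comes back as the string `"1"`; that is fine for scikit-learn, which accepts string class labels.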

Each CSV file will be closed via Line 83. Recall that upon completion we will have one CSV file per data split.

Finally, we can dump the label encoder to disk (Lines 86-88).

Let’s go ahead and extract features from our dataset using the VGG16 network pre-trained on ImageNet.

Use the “Downloads” section of this tutorial to download the source code, and from there, execute the following command:

```
$ python extract_features.py
[INFO] loading network...
[INFO] processing 'training split'...
...
[INFO] processing batch 92/94
[INFO] processing batch 93/94
[INFO] processing batch 94/94
[INFO] processing 'evaluation split'...
...
[INFO] processing batch 30/32
[INFO] processing batch 31/32
[INFO] processing batch 32/32
[INFO] processing 'validation split'...
...
[INFO] processing batch 30/32
[INFO] processing batch 31/32
[INFO] processing batch 32/32
```

On an NVIDIA K80 GPU it took 2m55s to extract features from the 5,000 images in the Food-5K dataset.

You can use a CPU instead, but it will take quite a bit longer.

Implementing our training script

The final step for transfer learning via feature extraction is to implement a Python script that will take our extracted features from the CNN and then train a Logistic Regression model on top of the features.

Again, keep in mind that our CNN did not predict anything! Instead, the CNN was treated as an arbitrary feature extractor.

We inputted an image to the network, it was forward propagated, and then we extracted the layer outputs from the max-pooling layer — these outputs serve as our feature vectors.

To see how we can train a model on these feature vectors, open up the train.py file and let’s get to work:

```python
# import the necessary packages
from sklearn.linear_model import LogisticRegression
from sklearn.metrics import classification_report
from pyimagesearch import config
import numpy as np
import pickle
import os

def load_data_split(splitPath):
    # initialize the data and labels
    data = []
    labels = []

    # loop over the rows in the data split file
    for row in open(splitPath):
        # extract the class label and features from the row
        row = row.strip().split(",")
        label = row[0]
        features = np.array(row[1:], dtype="float")

        # update the data and label lists
        data.append(features)
        labels.append(label)

    # convert the data and labels to NumPy arrays
    data = np.array(data)
    labels = np.array(labels)

    # return a tuple of the data and labels
    return (data, labels)
```

On Lines 2-7 we import our required packages. Notably, we’ll use LogisticRegression as our machine learning classifier. Fewer imports are required for our training script as compared to extracting features. This is partly because the training script itself is actually simpler.

Let’s define a function named load_data_split on Line 9. This function is responsible for loading all data and labels given the path of a data split CSV file (the splitPath parameter).

Inside the function, we start by initializing our data and labels lists (Lines 11 and 12).

From there we open the CSV and loop over all rows beginning on Line 15. In the loop, we:

- Load all comma-separated values from the row into a list (Line 17).

- Grab the class label via Line 18 (it is the first value in the list).

- Extract all features from the row (Line 19). These are all values in the list except the class label. The result is our feature vector.

- From there, we append the feature vector and label to the data and labels lists, respectively (Lines 22 and 23).

Finally, the data and labels are returned to the calling function (Line 30).

With the load_data_split function ready to go, let’s put it to work by loading our data:

```python
# derive the paths to the training and testing CSV files
trainingPath = os.path.sep.join([config.BASE_CSV_PATH,
    "{}.csv".format(config.TRAIN)])
testingPath = os.path.sep.join([config.BASE_CSV_PATH,
    "{}.csv".format(config.TEST)])

# load the data from disk
print("[INFO] loading data...")
(trainX, trainY) = load_data_split(trainingPath)
(testX, testY) = load_data_split(testingPath)

# load the label encoder from disk
le = pickle.loads(open(config.LE_PATH, "rb").read())
```

Lines 33-41 load our training and testing feature data from disk. We’re using our function from the previous code block to handle the loading process.

Line 44 loads our label encoder.

With our data in memory, we’re now ready to train our machine learning classifier:

```python
# train the model
print("[INFO] training model...")
# multi_class is omitted: "auto" is already the default, and newer
# scikit-learn releases deprecate passing it explicitly
model = LogisticRegression(solver="lbfgs", max_iter=150)
model.fit(trainX, trainY)

# evaluate the model
print("[INFO] evaluating...")
preds = model.predict(testX)
print(classification_report(testY, preds, target_names=le.classes_))

# serialize the model to disk
print("[INFO] saving model...")
f = open(config.MODEL_PATH, "wb")
f.write(pickle.dumps(model))
f.close()
```

Lines 48-50 are responsible for initializing and training our Logistic Regression model.

Note: To learn more about Logistic Regression and other machine learning algorithms in detail, be sure to refer to PyImageSearch Gurus, my flagship computer vision course and community.

Lines 54 and 55 facilitate evaluating the model on the testing set and printing classification statistics in the terminal.
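If you are curious what `classification_report` is computing, the per-class numbers are precision, recall, and their harmonic mean (F1). The sketch below computes them by hand on a tiny hypothetical set of predictions. `per_class_report` is an illustrative helper, and it returns 0.0 where a ratio is undefined, which is only one possible convention (scikit-learn exposes this choice through its `zero_division` parameter).

```python
def per_class_report(y_true, y_pred, labels):
    """Compute (precision, recall, F1) for each class label."""
    report = {}
    for c in labels:
        pairs = list(zip(y_true, y_pred))
        tp = sum(t == c and p == c for t, p in pairs)  # correctly predicted c
        fp = sum(t != c and p == c for t, p in pairs)  # predicted c, wrong
        fn = sum(t == c and p != c for t, p in pairs)  # missed c
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        report[c] = (precision, recall, f1)
    return report

y_true = ["food", "food", "food", "non_food", "non_food"]
y_pred = ["food", "food", "non_food", "non_food", "non_food"]
for label, (p, r, f) in per_class_report(y_true, y_pred, ["food", "non_food"]).items():
    print(label, f"precision={p:.2f} recall={r:.2f} f1={f:.2f}")
# food: precision 1.00, recall 0.67, f1 0.80
# non_food: precision 0.67, recall 1.00, f1 0.80
```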

Finally, the model is output in Python’s pickle format (Lines 59-61).

That’s a wrap for our training script! As you’ve learned, writing code for training a Logistic Regression model on top of feature data is very straightforward. In the next section, we will run the training script.

If you are wondering how we would handle so much feature data that it can’t fit into memory all at once, stay tuned for next week’s tutorial.

Note: This tutorial is long enough as is, so I haven’t covered how to tune the hyperparameters of the Logistic Regression model, something I definitely recommend doing to ensure you obtain the highest accuracy possible. If you’re interested in learning more about transfer learning and how to tune hyperparameters during feature extraction, be sure to refer to Deep Learning for Computer Vision with Python where I cover the techniques in more detail.

Training a model on the extracted features

At this point, we are ready to perform the final step of transfer learning via feature extraction with Keras.

Let’s briefly review what we have done so far:

- Downloaded the Food-5K dataset (5,000 images belonging to two classes, “food” and “non-food”).

- Restructured the original directory structure of the dataset in a format more suitable for transfer learning (in particular, fine-tuning which we’ll be covering later in this series).

- Extracted features from the images using VGG16 pre-trained on ImageNet.

And now, we’re going to train a Logistic Regression model on top of these extracted features.

Again, keep in mind that VGG16 was not trained to recognize the “food” versus “non-food” classes. Instead, it was trained to recognize 1,000 ImageNet classes.

But, by leveraging:

- Feature extraction with VGG16

- And applying a Logistic Regression classifier on top of those extracted features

…we will be able to recognize the new classes, even though VGG16 was never trained to recognize them!

Go ahead and use the “Downloads” section of this tutorial to download the source code to this guide.

From there, open up a terminal and execute the following command:

```
$ python train.py
[INFO] loading data...
[INFO] training model...
[INFO] evaluating...
              precision    recall  f1-score   support

        food       0.99      0.98      0.98       500
    non_food       0.98      0.99      0.99       500

    accuracy                           0.98      1000
   macro avg       0.99      0.98      0.98      1000
weighted avg       0.99      0.98      0.98      1000

[INFO] saving model...
```

Training on my machine took only 27 seconds, and as you can see from our output, we are obtaining 98% accuracy on the testing set, with per-class precision and recall of 98-99%!

When should I use transfer learning and feature extraction?

Transfer learning via feature extraction is often one of the easiest methods to obtain a baseline accuracy in your own projects.

Whenever I am confronted with a new deep learning project, I often throw feature extraction with Keras at it just to see what happens:

- In some cases, the accuracy is sufficient.

- In others, it requires me to tune the hyperparameters to my Logistic Regression model or try another pre-trained CNN.

- And in other situations, I need to explore fine-tuning or even training from scratch with a custom CNN architecture.

Regardless, in the best case, transfer learning via feature extraction gives me good accuracy and the project can be completed.

And in the worst case, I’ll gain a baseline to beat with my future experiments.


Summary

Today marked the start of our series on transfer learning with Keras and Deep Learning.

The two primary forms of transfer learning via deep learning are:

- Feature extraction

- Fine-tuning

The focus of today’s tutorial was on feature extraction, the process of treating a pre-trained network as an arbitrary feature extractor.

The steps to perform transfer learning via feature extraction include:

- Starting with a pre-trained network (typically on a dataset such as ImageNet or COCO; large enough for the model to learn discriminative filters).

- Allowing an input image to forward propagate to an arbitrary (pre-specified) layer.

- Taking the output of that layer and treating it as a feature vector.

- Training a “standard” machine learning model on the dataset of extracted features.

The benefit of performing transfer learning via feature extraction is that we do not need to train (or re-train) our neural network.

Instead, the network serves as a black box feature extractor.

Those extracted features, which are assumed to be non-linear in nature (since they were extracted from a CNN), are then passed into a linear model for classification.

If you’re interested in learning more about transfer learning, feature extraction, and fine-tuning, be sure to refer to my book, Deep Learning for Computer Vision with Python, where I cover the topic in more detail.

I hope you enjoyed today’s post! Stay tuned for next week when we discuss how to work with feature extraction when our dataset is too large to fit into memory.


Adrian,

FTP credentials for the EPFL site are not working. Is there a way for you to upload your dataset somewhere and share the link with us?

Thanks,

Ravi

Thanks for mentioning it Ravi. The dataset is ~450MB. Let me see what I can do — I host my code/datasets on Amazon S3 but a 450MB file could get expensive really quickly.

EDIT: Are you sure you’re using FTP (and not SFTP)? I made that mistake once.

Thanks Adrian. I am using FTP. I tried two different FTP clients, WinSCP and FileZilla. It didn’t work on either of them.

Were you able to download the dataset? If yes, can you share it? I tried using FileZilla (FTP) but it didn’t work (connection timed out).

I had a similar problem. I changed my network settings to disable “Internet Protocol Version 6 (TCP/IPv6)” and then used the ftp command in the terminal, and it worked.

Awesome, thank you for sharing!

I also had some problems downloading the dataset; I was able to do it with ftp from the terminal.

Lode also provided the solution using wget in this comment.

Thanks Lode!

I’ll also add that you should try the dataset host’s recommended FileZilla instructions. I found that no matter whether I used wget or FileZilla, I had to try the connection twice. Their FTP server is buggy (that’s my conclusion, since I’ve never had this problem before)!

Thanks for another great tutorial.

Will you be covering transfer learning with a CNN/LSTM combination, transfer learning from text data to images, etc.?

Your book is really detailed on the topic.

Thanks

Thanks Abkul. I’ve considered doing some tutorials related to LSTMs but I cannot guarantee when that will be.

Hi Adrian,

Have you covered the CNN/LSTM combination part in any of your tutorials? If yes, can you kindly share the link? If no, when will you be covering that part? :p

I have not, but it’s on my short list of topics to cover.

As always, very detailed and awesome tutorial. Will definitely try this right away.

Thanks Allan!

I understand why the input has to be split between training, evaluation, and validation, but once the model is trained, is it possible to throw the evaluation and validation images into the training pool for a few more cycles of training, to train using all the images? Has there been any study measuring the benefit of doing this? I’d really like to use all the input data for training.

Typically that isn’t going to help very much (if at all).

This is a great and easy-to-understand tutorial about transfer learning. Thank you very much! I have a question, though: how can we use transfer learning if it is a regression problem and not a classification problem? Is it possible to extract features and then use them to train a linear regression model?

I actually cover how to use CNNs for regression here.

If you’d like to perform fine-tuning but with regression you should wait until the post in two weeks. I’ll be doing fine-tuning for classification but you’ll be able to swap out the activation in the final FC layer using the instructions above.

Great Article as usual

I can’t wait for part 2

Thanks Walid!

From far away in Africa: in fact, I’ve never seen computer vision lectures and applications on the internet as detailed as yours. I’m really happy; more grease to your effort, Dr.

Thank you for the kind words, Benya. I really appreciate that 🙂

You are a great teacher! I appreciate your dedication.

Thanks Ninan 🙂

Is this faster / more accurate than doing everything in tensorflow? It looks like the training is faster, what about prediction time? Also, how is the object detection handled?

I’m not sure what you mean by “faster/more accurate”. I recommend you read this post on Keras vs. TensorFlow first (and why that’s the wrong question to be asking).

@adrian hoo : Keras is an API which uses Tensorflow or Theano as the core framework.

Hi Adrian.

Thank you for your good tutorials.

Your tutorials are the best educational material on computer vision I have ever seen.

I ran your code successfully.

But in the last step (saving the model in pickle format) I faced this problem:

ConvergenceWarning: lbfgs failed to converge. Increase the number of iterations.

What is the problem? Is it about hyperparameter tuning?

Thank you.

Thanks Naser, I’m glad you are enjoying the tutorials.

The message is just a warning from scikit-learn, you can safely ignore it.

Good morning, excellent tutorial as usual. I had a problem with multi_class being set to ‘auto’. I set it to ‘ovr’ and it worked fine, and I got the same precision scores as the example you showed. Waiting patiently for the Kickstarter project to arrive in the fall. Congrats on your HUGE subscription.

Thanks Don 🙂

Yeah, I had to do the same, and then it worked. Thanks for this. What does this option do vs. the other? Is there a reason why ‘auto’ didn’t work? I like ‘auto’ settings 🙂

First of all, thank you for the great tutorial, sir. You’re the best!

I have a question about extract_features.py: it displays the error “Allocation of 411041792 exceeds 10% of the memory”. What do I need to do to solve this problem? Does this kind of error have anything to do with the size of the images?

Is that a warning from TensorFlow? If so, ignore it.

Hi Adrian, can you recommend some good books on Bayesian deep learning?

I am unable to download the dataset using the methods mentioned above. Please provide a direct link to the dataset.

See my reply to Ravi.

Thank you for this great tutorial. I always enjoy reading your articles. Would you consider doing a semi-supervised learning tutorial in a future post? Thank you.

Thanks for the suggestion, Gery. I’ll consider doing such a tutorial but cannot guarantee if/when.

Dear Adrian

Amazing knowledge transfer, once again! I wish to throw a random image at the model and have it make a prediction, but I have problems getting it right.

How may I do it?

Please advise.

Kind Regards

Lyron

Make sure you read the “What’s next — where do I learn more about transfer learning and feature extraction?”.

The short answer is that the blog post is long enough/detailed enough as is. If you want to learn how to make predictions using your own input images you should refer to Deep Learning for Computer Vision with Python where I cover the method in detail.

Hi Adrian,

thanks for a great tutorial.

Is it possible to validate results on a video? and how to add bounding boxes with accuracy of prediction? I cannot seem to find any information regarding this. I guess you can extract frames from a video, analyze it and put it back into a video, but is there a better way?

This blog post performs classification. For bounding boxes you need to perform object detection. Take a look at this tutorial to get started with object detection.

Secondly, if you want to perform classification on video, you would loop over each frame of the video, apply classification, and write the frame back to disk.

Can I run this code, and its further parts, on Windows 10 via Visual Studio? Or is it better to do it on a virtual machine with Linux?

Best wishes!

Artur

ps. I’m a beginner, and I know only basics of Python and C#

Provided your Windows machine is configured for computer vision and deep learning, yes, this code will run on Windows.

However.

I do not support Windows here on the PyImageSearch blog. I strongly recommend you use Linux instead.

For the food/non-food classes, would it work if we only trained on the food class? If an image does not contain food, then it is not food.

No, you need two classes to train the model.

People who are struggling to download the food 5k dataset on Mac just navigate to the terminal and run this command: ‘networksetup -setv6off Wi-Fi’. After this use the wget command Adrian has stated above and it should start to download! Remember to turn IPv6 back on afterwards 🙂

Amazing, thanks Sean!

Hey Adrian,

After doing the training as instructed, I got a row with ‘accuracy’ and results didn’t visually tally with your final output. Something I missed?

What version of Keras, TensorFlow, and scikit-learn are you using?

Hello Adrian ,

Thank you for great article – really concise and easy to understand.

What is your opinion: which CNN model is best for extracting features to be used for transfer learning: VGG16, ResNet, ...?

What do you think about using Google’s EfficientNet for feature extraction? It seems to be the best-tuned CNN model.

I am talking about using it for a dataset of images just like you used in the article.

Vasil

There is no single “best” network for feature extraction and transfer learning but typically VGG16 and ResNet work very well for transfer learning.

Can we use cv2 to resize the image instead of NumPy?

Keras is being used to resize the image here (not NumPy) but yes, you can use OpenCV via the “cv2.resize” function.

Hi Adrian! Thanks for your posts; they’ve been amazing. I was wondering – Is it possible to do image data augmentation if I’m also doing transfer learning? I’m using fit_generator and getting the error “you must compile your model before using it.”

Yes, you can absolutely perform data augmentation when doing transfer learning. Your error here doesn’t have to do with that though (you’re forgetting to run the “.compile” method before training).

Why have you added an extra dimension?

Did you apply the VGG16 model just to reduce dimensions?

If so, can we use PCA or SVD?

I’m not sure what you mean by “added an extra dimension” — which line of code are you referring to?

Line 62 of extract_features.py.

The additional dimension is for the batch. It has the shape (batch_size, height, width, num_channels)
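To make the batch dimension concrete, here is a small NumPy sketch. The 224x224x3 shape is assumed as a typical VGG16 input size, and the zero-filled arrays are hypothetical stand-ins for real preprocessed images.

```python
import numpy as np

# A single preprocessed RGB image: (height, width, num_channels).
# 224x224 is assumed here as a typical VGG16 input size.
image = np.zeros((224, 224, 3), dtype="float32")

# Keras models predict on batches, so add a leading batch axis.
batch = np.expand_dims(image, axis=0)
print(batch.shape)  # (1, 224, 224, 3)

# Several images can be stacked into one batch the same way.
images = [np.zeros((224, 224, 3), dtype="float32") for _ in range(8)]
stacked = np.vstack([np.expand_dims(im, axis=0) for im in images])
print(stacked.shape)  # (8, 224, 224, 3)
```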

Hi,

So you saved the features from the CNN to a CSV file, which is obviously a huge file. My concern is that when I load the features into trainX to train the LR model, I run out of RAM.

So what should I do in this case? I extracted the features in batches of 15 (the total dataset is 10,100 images), stored them in an array, and trained the LR model in batches. Will this work?

You can use incremental learning.

Hi Adrian,

This post contains labelled data/supervised learning.

What if we have unsupervised data, i.e., unlabeled images contained in a single folder? How would you cluster those images and assign them a label/tag?

Sorry, I don’t have any unsupervised deep learning tutorials. Perhaps I’ll cover it in the future.

Great tutorial, Adrian. Thank you.

You are welcome!

Thanks for the tutorial,

I am new to Python, and I do not know what causes this problem in Python 3.6, but the line

imagePaths = list(paths.list_images(p))

had a problem with the Food-5K directory name, and renaming it to Food solved my problem.

Another way to download the dataset is to use the link below:

https://www.kaggle.com/tohidul/food11/downloads/food11.zip

Thanks, I didn’t realize that the dataset was also on Kaggle!

Excellent post. Thanks for sharing it with us.

If it is a multi-class classification problem, what changes need to be made to the same project?

See this tutorial.

Such a great tutorial, Adrian. Thank you so much! I would like to test the trained model on an input image, as with other models. How can I do that?

Take a look at Deep Learning for Computer Vision with Python, which shows you how to use trained and fine-tuned models for prediction on new images.

Thank you, Adrian, for this amazing post. The size of the extracted features in the CSV file is way bigger than the size of the original dataset. How can I reduce the size of the extracted features? Can we extract features using our own CNN architecture instead of a standard architecture like ResNet?

1. I guess the bigger question is why is the large feature dataset a problem? Storage is “cheap”, I wouldn’t worry about that.

2. Secondly, yes, you can absolutely perform feature extraction using your own custom CNNs. Deep Learning for Computer Vision with Python will teach you how to do that.

Thank you Adrian for this amazing work. I always enjoy & get benefit from your tutorials 🙂

I have three questions. First, how do I get the output of an intermediate layer? If I drop some layers (model.drop), should I add a new layer instead?

Second, the intermediate layers may produce a huge feature vector; how can I reduce it?

Third, what is the difference between using model.predict() and model.output?

Thank you so much.

Hey Hind — take a look at Deep Learning for Computer Vision with Python where I discuss the answers to those questions.

First, I would like to say thanks for your great Machine Learning posts!

I have a question about transfer learning. Let’s say I’ve trained and saved models from a series of training runs, such as cat vs. dog, frog vs. toad, and dolphin vs. whale. How do I use transfer learning to combine all these models together, so the program will be able to tell all the mentioned animals apart?

Best regards,

Stonez

Unfortunately, you can’t reliably do that. You need to have the training data available for each of the classes when either training from scratch or fine-tuning.

Hi Adrian

Great post as always. I will try your model, but I was wondering: if the input image contains, for example, people eating food, will this model be able to detect that there is food in it?

Thank you so much Dr Adrian

You are welcome!

Hello, how can I use the model generated before training? I need to use the model on another dataset to extract features.

I assume you’re referring to feature extraction for transfer learning? If so, refer to Deep Learning for Computer Vision with Python where I cover that topic in detail.

Hello Adrian,

Thank you, as always, for this very helpful tutorial. I have the following question: we are taking a pre-trained CNN and using it as a feature extractor to extract features for new classes. This feature extractor was learned in a supervised manner. We could use an autoencoder (unsupervised) as a feature extractor in the same manner. Is there any comparison of results between the two methods?

Hi, this is a really good tutorial; however, I have a few queries:

1) Looking at the feature vectors saved in the CSV, most of the feature values extracted this way are equal to zero. Why is that?

2) What if I want to reduce the number of these extracted features, or what if I want to extract features from the second-to-last conv block (i.e., with the shape 14x14x512)?

Thanks in advance

Many features will be zero since specific neurons were not activated. The ReLU function will clamp those values down to zero.
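A tiny NumPy illustration of that clamping, using made-up pre-activation values: in VGG16 the convolutional layers use ReLU activations, so the max-pooled outputs used as features are non-negative and often exactly zero. The `relu` helper below is a hypothetical stand-in written with `np.maximum`, not Keras's own implementation.

```python
import numpy as np


def relu(x):
    # ReLU keeps positive values and clamps everything else to zero.
    return np.maximum(x, 0)


# Made-up pre-activation values for a handful of neurons (hypothetical).
pre = np.array([-2.5, 0.0, 1.3, -0.7, 4.2, -0.1])
post = relu(pre)

print(post)
# Four of the six neurons end up exactly zero.
print("fraction of zeros: {:.2f}".format(np.mean(post == 0)))
```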

If you need additional help with transfer learning, I would suggest you read Deep Learning for Computer Vision with Python, where I cover transfer learning in detail.