In this tutorial, you will learn how to get started with your NVIDIA Jetson Nano, including:

- First boot

- Installing system packages and prerequisites

- Configuring your Python development environment

- Installing Keras and TensorFlow on the Jetson Nano

- Changing the default camera

- Classification and object detection with the Jetson Nano

I’ll also provide my commentary along the way, including what tripped me up when I set up my Jetson Nano, ensuring you avoid the same mistakes I made.

By the time you’re done with this tutorial, your NVIDIA Jetson Nano will be configured and ready for deep learning!

To learn how to get started with the NVIDIA Jetson Nano, just keep reading!

Getting started with the NVIDIA Jetson Nano

In the first part of this tutorial, you will learn how to download and flash the NVIDIA Jetson Nano .img file to your micro-SD card. I’ll then show you how to install the required system packages and prerequisites.

From there you will configure your Python development environment and learn how to install Keras and the Jetson Nano-optimized version of TensorFlow on your device.

I’ll then show you how to access the camera on your Jetson Nano and perform image classification and object detection on the Nano.

We’ll then wrap up the tutorial with a brief discussion on the Jetson Nano — a full benchmark and comparison between the NVIDIA Jetson Nano, Google Coral, and Movidius NCS will be published in a future blog post.

Before you get started with the Jetson Nano

Before you can even boot up your NVIDIA Jetson Nano, you need three things:

- A micro-SD card (minimum 16GB)

- A 5V 2.5A MicroUSB power supply

- An Ethernet cable

I really want to stress that 16GB is the bare minimum for your micro-SD card. The first time I configured my Jetson Nano I used a 16GB card, but that space was eaten up fast, particularly when I installed the Jetson Inference library, which downloads a few gigabytes of pre-trained models.

I, therefore, recommend a 32GB micro-SD card for your Nano.

Secondly, for the 5V 2.5A MicroUSB power supply, NVIDIA’s documentation specifically recommends this one from Adafruit.

Finally, you will need an Ethernet cable when working with the Jetson Nano, which I find really, really frustrating.

The NVIDIA Jetson Nano is marketed as being a powerful IoT and edge computing device for Artificial Intelligence…

…and if that’s the case, why is there not a WiFi adapter on the device?

I don’t understand NVIDIA’s decision there and I don’t believe it should be up to the end user of the product to “bring their own WiFi adapter”.

If the goal is to bring AI to IoT and edge computing, then there should be WiFi.

But I digress.

You can read more about NVIDIA’s recommendations for the Jetson Nano here.

Download and flash the .img file to your micro-SD card

Before we can get started installing any packages or running any demos on the Jetson Nano, we first need to download the Jetson Nano Developer Kit SD Card Image from NVIDIA’s website.

NVIDIA provides documentation for flashing the .img file to a micro-SD card for Windows, macOS, and Linux — you should choose the flash instructions appropriate for your particular operating system.

First boot of the NVIDIA Jetson Nano

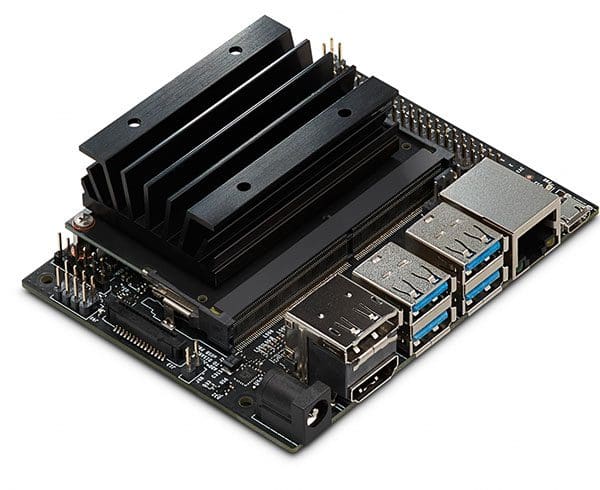

After you’ve downloaded and flashed the .img file to your micro-SD card, insert the card into the micro-SD card slot.

I had a hard time finding the card slot. It’s actually underneath the heat sink, right where my finger is pointing:

I think NVIDIA could have made the slot a bit more obvious, or at least better documented it on their website.

After sliding the micro-SD card home, connect your power supply and boot.

Assuming your Jetson Nano is connected to an HDMI output, you should see the following (or similar) displayed on your screen:

The Jetson Nano will then walk you through the install process, including setting your username/password, timezone, keyboard layout, etc.

Installing system packages and prerequisites

In the remainder of this guide, I’ll be showing you how to configure your NVIDIA Jetson Nano for deep learning, including:

- Installing system package prerequisites.

- Installing Keras and TensorFlow on the Jetson Nano.

- Installing the Jetson Inference engine.

Let’s get started by installing the required system packages:

$ sudo apt-get install git cmake
$ sudo apt-get install libatlas-base-dev gfortran
$ sudo apt-get install libhdf5-serial-dev hdf5-tools
$ sudo apt-get install python3-dev

Provided you have a good internet connection, the above commands should only take a few minutes to finish up.

Configuring your Python environment

The next step is to configure our Python development environment.

Let’s first install pip, Python’s package manager:

$ wget https://bootstrap.pypa.io/get-pip.py
$ sudo python3 get-pip.py
$ rm get-pip.py

We’ll be using Python virtual environments in this guide to keep our Python development environments independent and separate from each other.

Using Python virtual environments is a best practice and will help you avoid having to maintain a separate micro-SD card for each development environment you want to use on your Jetson Nano.

To manage our Python virtual environments we’ll be using virtualenv and virtualenvwrapper which we can install using the following command:

$ sudo pip install virtualenv virtualenvwrapper

Once we’ve installed virtualenv and virtualenvwrapper we need to update our ~/.bashrc file. I’m choosing to use nano but you can use whatever editor you are most comfortable with:

$ nano ~/.bashrc

Scroll down to the bottom of the ~/.bashrc file and add the following lines:

# virtualenv and virtualenvwrapper
export WORKON_HOME=$HOME/.virtualenvs
export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3
source /usr/local/bin/virtualenvwrapper.sh

After adding the above lines, save and exit the editor.

Next, we need to reload the contents of the ~/.bashrc file using the source command:

$ source ~/.bashrc

We can now create a Python virtual environment using the mkvirtualenv command — I’m naming my virtual environment deep_learning, but you can name it whatever you would like:

$ mkvirtualenv deep_learning -p python3

Installing TensorFlow and Keras on the NVIDIA Jetson Nano

Before we can install TensorFlow and Keras on the Jetson Nano, we first need to install NumPy.

First, make sure you are inside the deep_learning virtual environment by using the workon command:

$ workon deep_learning
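If you’re ever unsure whether workon actually activated the environment, a quick Python check can tell you. This is a minimal sketch using only the standard library, and the file name check_env.py is hypothetical. It relies on the fact that inside a virtual environment sys.prefix differs from sys.base_prefix (older virtualenv releases set sys.real_prefix instead, so it checks for that too).

```python
# check_env.py (hypothetical name): report whether we are inside a virtualenv
import os
import sys


def in_virtualenv():
    """Return True if this interpreter appears to be running inside a virtual environment."""
    # virtualenv < 20 sets sys.real_prefix; venv and virtualenv >= 20 instead
    # make sys.prefix differ from sys.base_prefix.
    if hasattr(sys, "real_prefix"):
        return True
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


if __name__ == "__main__":
    print("Python executable:", sys.executable)
    print("Inside a virtual environment:", in_virtualenv())
    # The activate script that workon runs exports VIRTUAL_ENV in the shell.
    print("VIRTUAL_ENV:", os.environ.get("VIRTUAL_ENV", "(not set)"))
```

Run it with python check_env.py after workon deep_learning; the executable path should point somewhere under ~/.virtualenvs/deep_learning.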

From there, you can install NumPy:

$ pip install numpy

Installing NumPy on my Jetson Nano took ~10-15 minutes, as it had to be compiled on the system (there are currently no pre-built versions of NumPy for the Jetson Nano).

The next step is to install Keras and TensorFlow on the Jetson Nano. You may be tempted to do a simple pip install tensorflow-gpu — do not do this!

Instead, NVIDIA has provided an official release of TensorFlow for the Jetson Nano.

You can install the official Jetson Nano TensorFlow by using the following command:

$ pip install --extra-index-url https://developer.download.nvidia.com/compute/redist/jp/v42 tensorflow-gpu==1.13.1+nv19.3

Installing NVIDIA’s tensorflow-gpu package took ~40 minutes on my Jetson Nano.
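The +nv19.3 part of that version pin is a PEP 440 “local version label”: 1.13.1 is the upstream TensorFlow release, and nv19.3 identifies NVIDIA’s build of it. If you later want to confirm which build ended up in your virtual environment, pip show tensorflow-gpu prints the installed version, and a small helper can split it apart. This is a sketch; treating any label that starts with “nv” as NVIDIA’s build is my own heuristic, not an official rule.

```python
def split_local_version(version):
    """Split a PEP 440 version such as '1.13.1+nv19.3' into (public, local).

    Everything after '+' is the local version label; it is None when absent.
    """
    public, _, local = version.partition("+")
    return public, (local or None)


def looks_like_nvidia_build(version):
    """Heuristic (assumption): a local label starting with 'nv' marks NVIDIA's wheel."""
    _, local = split_local_version(version)
    return local is not None and local.startswith("nv")


if __name__ == "__main__":
    for v in ("1.13.1+nv19.3", "1.13.1"):
        print(v, split_local_version(v), looks_like_nvidia_build(v))
```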

The final step here is to install SciPy and Keras:

$ pip install scipy
$ pip install keras

These installs took ~35 minutes.
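Once everything is installed, it’s worth confirming that each package imports cleanly from inside the deep_learning environment before moving on. Here’s a minimal sketch using only the standard library. It assumes each package exposes a __version__ attribute (NumPy, SciPy, TensorFlow, and Keras all do), and it only catches ImportError, so an install that is broken in some other way will still raise.

```python
import importlib


def check_packages(names):
    """Map each module name to its __version__, a placeholder, or None if it fails to import."""
    results = {}
    for name in names:
        try:
            module = importlib.import_module(name)
        except ImportError:
            results[name] = None  # not installed in this environment
            continue
        results[name] = getattr(module, "__version__", "installed (no __version__)")
    return results


if __name__ == "__main__":
    # Run this inside the deep_learning virtual environment.
    for name, version in check_packages(["numpy", "scipy", "tensorflow", "keras"]).items():
        print("{:<12} {}".format(name, version or "NOT FOUND"))
```

Importing tensorflow can take a little while on the Nano, so don’t be alarmed if the script pauses for a bit.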

Compiling and installing Jetson Inference on the Nano

The Jetson Nano .img already has JetPack installed, so we can jump immediately to building the Jetson Inference engine.

The first step is to clone down the jetson-inference repo:

$ git clone https://github.com/dusty-nv/jetson-inference
$ cd jetson-inference
$ git submodule update --init

We can then configure the build using cmake.

$ mkdir build
$ cd build
$ cmake ..

There are two important things to note when running cmake:

- The cmake command will ask for root permissions, so don’t walk away from the Nano until you’ve provided your root credentials.

- During the configure process, cmake will also download a few gigabytes of pre-trained sample models. Make sure you have a few GB to spare on your micro-SD card! (This is also why I recommend a 32GB micro-SD card instead of a 16GB card.)
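Because cmake pulls down several gigabytes of models, checking free space before you run it can save you a failed build. The sketch below uses shutil.disk_usage from the standard library; the 5 GiB threshold is an assumed safety margin, not a number from NVIDIA, so adjust it to taste.

```python
import shutil

GIB = 1024 ** 3


def free_gib(path="/"):
    """Return the free space, in GiB, on the filesystem that contains `path`."""
    return shutil.disk_usage(path).free / GIB


def enough_space(path="/", required_gib=5.0):
    """True if at least `required_gib` GiB are free (5 GiB is an assumed margin)."""
    return free_gib(path) >= required_gib


if __name__ == "__main__":
    print("Free space on /: {:.1f} GiB".format(free_gib("/")))
    if not enough_space("/"):
        print("Warning: the jetson-inference model download may not fit.")
```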

After cmake has finished configuring the build, we can compile and install the Jetson Inference engine:

$ make
$ sudo make install

Compiling and installing the Jetson Inference engine on the Nano took just over 3 minutes.

What about installing OpenCV?

I decided to cover installing OpenCV on a Jetson Nano in a future tutorial. There are a number of cmake configurations that need to be set to take full advantage of OpenCV on the Nano, and frankly, this post is long enough as is.

Again, I’ll be covering how to configure and install OpenCV on a Jetson Nano in a future tutorial.

Running the NVIDIA Jetson Nano demos

When using the NVIDIA Jetson Nano you have two options for input camera devices:

- A CSI camera module, such as the Raspberry Pi camera module (which is compatible with the Jetson Nano, by the way)

- A USB webcam

I’m currently using all of my Raspberry Pi camera modules for my upcoming book, Raspberry Pi for Computer Vision, so I decided to use my Logitech C920, which is plug-and-play compatible with the Nano (you could use the newer Logitech C960 as well).

The examples included with the Jetson Nano Inference library can be found in jetson-inference:

- detectnet-camera: Performs object detection using a camera as an input.

- detectnet-console: Also performs object detection, but using an input image rather than a camera.

- imagenet-camera: Performs image classification using a camera.

- imagenet-console: Classifies an input image using a network pre-trained on the ImageNet dataset.

- segnet-camera: Performs semantic segmentation from an input camera.

- segnet-console: Also performs semantic segmentation, but on an image.

- A few other examples are included as well, including deep homography estimation and super resolution.

However, in order to run the camera examples with a USB webcam, we need to slightly modify their source code.

In each example you’ll see that the DEFAULT_CAMERA value is set to -1, implying that an attached CSI camera should be used.

However, since we are using a USB camera, we need to change the DEFAULT_CAMERA value from -1 to 0 (or whatever the correct /dev/video V4L2 camera is).
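If you’re not sure which index to use, you can list the /dev/videoN device nodes that V4L2 has created. A minimal sketch, assuming the usual /dev/video0, /dev/video1, ... naming; with a single USB webcam attached you would typically expect to see just index 0.

```python
import glob
import re


def video_device_indices(paths=None):
    """Return the sorted integer indices of /dev/videoN device nodes."""
    if paths is None:
        paths = glob.glob("/dev/video*")
    indices = []
    for path in paths:
        match = re.fullmatch(r"/dev/video(\d+)", path)
        if match:
            indices.append(int(match.group(1)))
    return sorted(indices)


if __name__ == "__main__":
    found = video_device_indices()
    if found:
        print("V4L2 camera indices:", found)
    else:
        print("No /dev/video* devices found; is the webcam plugged in?")
```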

Luckily, this change is super easy to do!

Let’s start with image classification as an example.

First, change directory into ~/jetson-inference/imagenet-camera:

$ cd ~/jetson-inference/imagenet-camera

From there, open up imagenet-camera.cpp:

$ nano imagenet-camera.cpp

You’ll then want to scroll down to approximately Line 37 where you’ll see the DEFAULT_CAMERA value:

#define DEFAULT_CAMERA -1 // -1 for onboard camera, or change to index of /dev/video V4L2 camera (>=0)

Simply change that value from -1 to 0:

#define DEFAULT_CAMERA 0 // -1 for onboard camera, or change to index of /dev/video V4L2 camera (>=0)

From there, save and exit the editor.
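If you’d rather not hunt for the right line by hand (you’ll make the same edit to detectnet-camera.cpp later), the change can also be scripted. The following is a hypothetical helper, not part of jetson-inference: it rewrites the number after #define DEFAULT_CAMERA and leaves the trailing comment alone. Keep a copy of the original .cpp files in case the regular expression doesn’t match your version of the source.

```python
import re
import sys

# Matches lines such as "#define DEFAULT_CAMERA -1 // -1 for onboard camera ..."
DEFINE_RE = re.compile(r"^(#define\s+DEFAULT_CAMERA\s+)(-?\d+)", re.MULTILINE)


def set_default_camera(source, index):
    """Return (new_source, count) with every DEFAULT_CAMERA value replaced by `index`."""
    # A function replacement avoids surprises like "\10" being read as a backreference.
    return DEFINE_RE.subn(lambda m: m.group(1) + str(index), source)


def patch_file(path, index=0):
    """Rewrite the DEFAULT_CAMERA define in a C++ file in place; True if the file changed."""
    with open(path) as f:
        original = f.read()
    updated, count = set_default_camera(original, index)
    if count == 0 or updated == original:
        return False
    with open(path, "w") as f:
        f.write(updated)
    return True


if __name__ == "__main__":
    # e.g. python set_camera.py imagenet-camera.cpp ../detectnet-camera/detectnet-camera.cpp
    for path in sys.argv[1:]:
        print(path, "patched" if patch_file(path, 0) else "unchanged")
```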

After editing the C++ file you will need to recompile the example which is as simple as:

$ cd ../build
$ make
$ sudo make install

Keep in mind that make is smart enough to not recompile the entire library. It will only recompile files that have changed (in this case, the ImageNet classification example).
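The rule make follows is, at its core, a timestamp comparison: a target is rebuilt when it’s missing or older than something it depends on. The sketch below illustrates just that rule in Python. It’s a simplification, since the Makefiles that cmake generates also track header dependencies and intermediate object files.

```python
import os
import tempfile


def needs_rebuild(target, sources):
    """Simplified make-style rule: rebuild if `target` is missing or older than any source.

    Real make walks the whole dependency graph that cmake generated (headers,
    object files, libraries); this only compares modification times.
    """
    if not os.path.exists(target):
        return True
    target_mtime = os.path.getmtime(target)
    return any(os.path.getmtime(src) > target_mtime for src in sources)


def touch(path, mtime):
    """Create or truncate `path`, then set its access and modification times to `mtime`."""
    with open(path, "w"):
        pass
    os.utime(path, (mtime, mtime))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "imagenet-camera.cpp")
        obj = os.path.join(tmp, "imagenet-camera.o")
        touch(src, 1000)
        print(needs_rebuild(obj, [src]))  # True: the target does not exist yet
        touch(obj, 2000)
        print(needs_rebuild(obj, [src]))  # False: the target is newer than the source
        touch(src, 3000)
        print(needs_rebuild(obj, [src]))  # True: the source was edited after the build
```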

Once compiled, change to the aarch64/bin directory and execute the imagenet-camera binary:

$ cd aarch64/bin/

$ ./imagenet-camera

imagenet-camera

args (1): 0 [./imagenet-camera]

[gstreamer] initialized gstreamer, version 1.14.1.0

[gstreamer] gstCamera attempting to initialize with GST_SOURCE_NVCAMERA

[gstreamer] gstCamera pipeline string:

v4l2src device=/dev/video0 ! video/x-raw, width=(int)1280, height=(int)720, format=YUY2 ! videoconvert ! video/x-raw, format=RGB ! videoconvert !appsink name=mysink

[gstreamer] gstCamera successfully initialized with GST_SOURCE_V4L2

imagenet-camera: successfully initialized video device

width: 1280

height: 720

depth: 24 (bpp)

imageNet -- loading classification network model from:

-- prototxt networks/googlenet.prototxt

-- model networks/bvlc_googlenet.caffemodel

-- class_labels networks/ilsvrc12_synset_words.txt

-- input_blob 'data'

-- output_blob 'prob'

-- batch_size 2

[TRT] TensorRT version 5.0.6

[TRT] detected model format - caffe (extension '.caffemodel')

[TRT] desired precision specified for GPU: FASTEST

[TRT] requested fasted precision for device GPU without providing valid calibrator, disabling INT8

[TRT] native precisions detected for GPU: FP32, FP16

[TRT] selecting fastest native precision for GPU: FP16

[TRT] attempting to open engine cache file networks/bvlc_googlenet.caffemodel.2.1.GPU.FP16.engine

[TRT] loading network profile from engine cache... networks/bvlc_googlenet.caffemodel.2.1.GPU.FP16.engine

[TRT] device GPU, networks/bvlc_googlenet.caffemodel loaded

Here you can see that the GoogLeNet model is loaded into memory, after which inference starts:

Image classification is running at ~10 FPS on the Jetson Nano at 1280×720.

IMPORTANT: If this is the first time you are loading a particular model, it could take 5-15 minutes to load.

Internally, the Jetson Nano Inference library is optimizing and preparing the model for inference. This only has to be done once so subsequent runs of the program will be significantly faster (in terms of model loading time, not inference).
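Judging by the log above, the optimized model is cached next to the original as a file whose name starts with the model’s file name and ends in .engine. Assuming that naming holds for other models too, you can check ahead of time whether a run will hit the slow first-time optimization. Run it from build/aarch64/bin, since the networks/ paths in the log appear to be relative to that directory.

```python
import glob
import os


def cached_engines(model_path):
    """Return TensorRT engine cache files stored next to `model_path`, sorted by name.

    Assumption based on the log above: the cache file name is the model file
    name plus a suffix ending in '.engine'.
    """
    pattern = glob.escape(model_path) + "*.engine"
    return sorted(glob.glob(pattern))


if __name__ == "__main__":
    model = os.path.join("networks", "bvlc_googlenet.caffemodel")
    engines = cached_engines(model)
    if engines:
        print("Cached engine(s) found; loading should be quick:", engines)
    else:
        print("No cached engine; expect the slow first-time optimization.")
```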

Now that we’ve tried image classification, let’s look at the object detection example on the Jetson Nano which is located in ~/jetson-inference/detectnet-camera/detectnet-camera.cpp.

Again, if you are using a USB webcam you’ll want to edit approximately Line 39 of detectnet-camera.cpp and change DEFAULT_CAMERA from -1 to 0 and then recompile via make (again, only necessary if you are using a USB webcam).

After compiling you can find the detectnet-camera binary in ~/jetson-inference/build/aarch64/bin.

Let’s go ahead and run the object detection demo on the Jetson Nano now:

$ ./detectnet-camera

detectnet-camera

args (1): 0 [./detectnet-camera]

[gstreamer] initialized gstreamer, version 1.14.1.0

[gstreamer] gstCamera attempting to initialize with GST_SOURCE_NVCAMERA

[gstreamer] gstCamera pipeline string:

v4l2src device=/dev/video0 ! video/x-raw, width=(int)1280, height=(int)720, format=YUY2 ! videoconvert ! video/x-raw, format=RGB ! videoconvert !appsink name=mysink

[gstreamer] gstCamera successfully initialized with GST_SOURCE_V4L2

detectnet-camera: successfully initialized video device

width: 1280

height: 720

depth: 24 (bpp)

detectNet -- loading detection network model from:

-- prototxt networks/ped-100/deploy.prototxt

-- model networks/ped-100/snapshot_iter_70800.caffemodel

-- input_blob 'data'

-- output_cvg 'coverage'

-- output_bbox 'bboxes'

-- mean_pixel 0.000000

-- class_labels networks/ped-100/class_labels.txt

-- threshold 0.500000

-- batch_size 2

[TRT] TensorRT version 5.0.6

[TRT] detected model format - caffe (extension '.caffemodel')

[TRT] desired precision specified for GPU: FASTEST

[TRT] requested fasted precision for device GPU without providing valid calibrator, disabling INT8

[TRT] native precisions detected for GPU: FP32, FP16

[TRT] selecting fastest native precision for GPU: FP16

[TRT] attempting to open engine cache file networks/ped-100/snapshot_iter_70800.caffemodel.2.1.GPU.FP16.engine

[TRT] loading network profile from engine cache... networks/ped-100/snapshot_iter_70800.caffemodel.2.1.GPU.FP16.engine

[TRT] device GPU, networks/ped-100/snapshot_iter_70800.caffemodel loaded

Here you can see that we are loading a model named ped-100 used for pedestrian detection (I’m actually not sure what the specific architecture is as it’s not documented on NVIDIA’s website — if you know what architecture is being used, please leave a comment on this post).

Below you can see an example of myself being detected using the Jetson Nano object detection demo:

According to the output of the program, we’re obtaining ~5 FPS for object detection on 1280×720 frames when using the Jetson Nano. Not too bad!
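For a sense of what these numbers mean per frame: 10 FPS leaves a budget of 1000 / 10 = 100 ms per frame, and 5 FPS leaves 200 ms. When you start timing your own scripts, a small counter like the one below is enough. It’s a generic sketch, not the mechanism the jetson-inference demos use to report their FPS, and the class name FPSMeter is just for illustration.

```python
import time


class FPSMeter:
    """Average frames per second over the frames recorded since start()."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock  # injectable clock, which makes the class easy to test
        self._start = None
        self._frames = 0

    def start(self):
        self._start = self._clock()
        self._frames = 0
        return self

    def update(self):
        self._frames += 1

    def fps(self):
        elapsed = self._clock() - self._start
        return self._frames / elapsed if elapsed > 0 else 0.0


def ms_per_frame(fps):
    """Convert a (non-zero) frame rate into the per-frame time budget in milliseconds."""
    return 1000.0 / fps


if __name__ == "__main__":
    meter = FPSMeter().start()
    for _ in range(30):
        time.sleep(0.01)  # stand-in for grabbing a frame and running inference
        meter.update()
    fps = meter.fps()
    print("{:.1f} FPS, {:.1f} ms per frame".format(fps, ms_per_frame(fps)))
```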

How does the Jetson Nano compare to the Movidius NCS or Google Coral?

This tutorial is simply meant to be a getting started guide for your Jetson Nano — it is not meant to compare the Nano to the Coral or NCS.

I’m in the process of comparing each of the respective embedded systems and will be providing a full benchmark/comparison in a future blog post.

In the meantime, take a look at the following guides to help you configure your embedded devices and start running benchmarks of your own:

- Getting started with Google Coral’s TPU USB Accelerator

- OpenVINO, OpenCV, and Movidius NCS on the Raspberry Pi

How do I deploy custom models to the Jetson Nano?

One of the benefits of the Jetson Nano is that once you compile and install a library with GPU support (compatible with the Nano, of course), your code will automatically use the Nano’s GPU for inference.

For example:

Earlier in this tutorial, we installed Keras + TensorFlow on the Nano. Any Python scripts that leverage Keras/TensorFlow will automatically use the GPU.

And similarly, any pre-trained Keras/TensorFlow models we use will also automatically use the Jetson Nano GPU for inference.
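If you want to verify that TensorFlow can actually see the Nano’s GPU, you can ask it to list the devices it has found. This sketch uses device_lib from tensorflow.python.client, which is an internal module rather than a documented public API, so treat it as a quick diagnostic. It returns None when TensorFlow isn’t importable.

```python
def list_tf_devices():
    """Return TensorFlow's local device names, or None if TensorFlow is not importable."""
    try:
        # Internal (but long-standing) TF helper for enumerating local devices.
        from tensorflow.python.client import device_lib
    except ImportError:
        return None
    return [d.name for d in device_lib.list_local_devices()]


if __name__ == "__main__":
    devices = list_tf_devices()
    if devices is None:
        print("TensorFlow is not installed in this environment")
    else:
        # With NVIDIA's build working you would expect a GPU entry such as '/device:GPU:0'.
        print(devices)
```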

Pretty awesome, right?

Provided the Jetson Nano supports a given deep learning library (Keras, TensorFlow, Caffe, Torch/PyTorch, etc.), we can easily deploy our models to the Jetson Nano.

The problem here is OpenCV.

OpenCV’s Deep Neural Network (dnn) module does not support NVIDIA GPUs, including the Jetson Nano.

OpenCV is working to provide NVIDIA GPU support for their dnn module. Hopefully, it will be released by the end of the summer/autumn.

But until then we cannot leverage OpenCV’s easy to use cv2.dnn functions.

If using the cv2.dnn module is an absolute must for you right now, then I would suggest taking a look at Intel’s OpenVINO toolkit, the Movidius NCS, and their other OpenVINO-compatible products, all of which are optimized to work with OpenCV’s deep neural network module.

If you’re interested in learning more about the Movidius NCS and OpenVINO (including benchmark examples), be sure to refer to this tutorial.


Summary

In this tutorial, you learned how to get started with the NVIDIA Jetson Nano.

Specifically, you learned how to install the required system packages, configure your development environment, and install Keras and TensorFlow on the Jetson Nano.

We wrapped up by learning how to change the default camera and perform image classification and object detection on the Jetson Nano using the pre-supplied scripts.

I’ll be providing a full comparison and benchmarks of the NVIDIA Jetson Nano, Google Coral, and Movidius NCS in a future tutorial.

To be notified when future tutorials are published here on PyImageSearch (including the Jetson Nano vs. Google Coral vs. Movidius NCS benchmark), be sure to join the PyImageSearch Newsletter!


“I decided to cover installing OpenCV on a Jetson Nano in a future tutorial”.

Looking forward 🙂 !

P.S.

It seems like I’ve been looking forward to new posts on this site for the last 5 years already.

Thanks for keeping me motivated for such a long time.

Thanks Yurii 🙂

Hello Adrian

Very nice tutorial. This was the first time in my life I used Linux Ubuntu and the first time on a Jetson Nano. I was able to follow all the above steps and ran the program for ImageNet. It ran perfectly the first time when I followed your instructions. But how do I run it again? How do I switch to the next example model to run? Can you please help me with the correct command I need to type in the Ubuntu terminal?

A Tegra-optimised version of OpenCV is a part of JetPack, right?

What timing… I just finished listening to your podcast interview on Super Data Science where you made your predictions about the next best thing and you mentioned the NVIDIA Jetson Nano…. It definitely blows the Raspberry Pi out of the water!

Guess I will be shelling out $99 soon to get on the wagon.

If you are interested in embedding computer vision/deep learning I think it’s worth the investment. I also think NVIDIA did a pretty good job nailing the price point as well.

Wow another timely post!

My Jetson Nano is still on backorder, but this will get me jumpstarted when it finally arrives.

I hope you enjoy hacking with it when it arrives!

Great post, Adrian.

I too am really excited about embedded computer vision and deep learning. There will likely be explosive growth and some amazing opportunities in the very near future.

Now I’m trying to decide whether to buy a Jetson or a Google Coral! Or both…

One interesting point you hit upon — current camera libraries aren’t very flexible if you want to use different cameras (or even the full capabilities of a given USB camera) and will reduce you to tears if you (for example) have more than one USB camera connected to your system at a time. I’d love to see a better solution.

OpenCV does a fairly reasonable job for at least accessing the camera. The problem becomes programmatically adjusting any settings on the camera sensor. OpenCV provides “.get” and “.set” methods for various parameters but not all of them will be supported by a given camera. It can be a royal pain to work with.

Hi Adrian

Thanks for this blog, it’s really helpful. I’ve had the kit for about a week now and was struggling to get started with it. I have a few questions.

1. How can we write our own code to run the detection process?

2. I see that all the demos are in C++, so is there no support for Python?

3. Are you planning to write another blog post on writing Python code for detection?

Thanks

Hey AR, I’m actually covering all of these topics inside Raspberry Pi for Computer Vision. If you’d like to learn how to use your Nano from within Python projects (image classification, object detection, etc.), I would definitely suggest pre-ordering a copy.

You’ll probably want to add these things to complete the “on the edge module”:

Intel Dual Band Wireless-Ac 8265 BT/WF module

Noctua NF-A4x20 5V PWM, Premium Quiet Fan, 4-Pin, 5V Version (40x20mm, Brown)

5V 5A AC to DC Power Supply Adapter Converter Charger 5.5×2.1mm

2 x 6dBi RP-SMA Dual Band 2.4GHz 5GHz + 2 x 35cm M.2(NGFF)Cable Antenna Mod Kit

128GB Ultra microSDXC UHS-I Memory Card with Adapter – C10 (at least 64GB)

4 x M3-0.6x16mm or 4x 13mm

Thanks for documenting this, I have my devices on order from SEEED, hopefully arriving soon. Do you plan on a similar getting started guide for Coral and the TPU?

Regarding the lack of Wi-Fi, the issue is certification, and every country has different regulations. Therefore the systems that do ship with Wi-Fi have soldered-down parts and on-board antennae, similar to the newer Pi and Pi Zero boards. In the US there are also FCC restrictions on the number of “prototype” and “pre-production” devices a company can ship (usually in the hundreds), along with labelling issues and $$$ fines for non-compliance. That further complicates things, and gaining Wi-Fi certification takes time.

As an independent user I can add a Wi-Fi card and any old antennae to a system, and even if my unshielded RF-emitting device now causes interference, it’s not as big an issue.

I fully expect any real edge device built using this system would be in a case, shielded, with Wi-Fi, and with a totally separate certification.

I actually already did one for the Google Coral USB Accelerator. I may do the TPU dev board later.

Also, thanks for the clarification regarding the WiFi certification, I hadn’t thought of it through that lens 🙂

Hi Adrian,

Cool tutorial! Great Job!

I just ported the ZMQ host to the Jetson Nano (without a Python virtualenv due to an OpenCV issue). I can’t wait for your new tutorials; maybe one day we can receive images from a Pi over the internet and run inference using the CUDA resources on the Nano.

Thanks a lot!!

Thanks Rean, enjoy hacking with your Nano!

Awesome as always Adrian!

Thanks Joseph!

Please, I’m waiting for the Jetson Nano to perform decent face recognition and use its full potential, as so far dlib is not working, OpenCV dnn is performing badly, and their built-in face inference is a joke… Please help, and great work Adrian.

Hey Karthik — I don’t have any tutorials right now for face recognition on the Nano. I’ll consider it though, thanks for the suggestion!

Yes, it would be good to include face recognition as Karthik suggested.

thanks a lot 🙂

Nano is ever on my desk 🙂

You’re welcome, Jerome! Enjoy the guide and let me know if you have any issues with it 🙂

“I’m actually not sure what the specific architecture is as it’s not documented on NVIDIA’s website — if you know what architecture is being used, please leave a comment on this post”

It is using the DetectNet model, which is trained using DIGITS/Caffe in the full tutorial which covers training + inferencing: https://github.com/dusty-nv/jetson-inference/blob/master/docs/detectnet-training.md

You can read more about the DetectNet architecture here: https://devblogs.nvidia.com/detectnet-deep-neural-network-object-detection-digits/

Awesome, thank you for clarifying the model architecture Dustin! 🙂

I love my Nano and NVIDIA has code and plans for two ground robots you can make: JetBot and Kaya. I built and installed OpenCV 4.1.0, by using the following cmake command:

cmake -D CMAKE_BUILD_TYPE=RELEASE \
-D CMAKE_INSTALL_PREFIX=/usr/local \
-D INSTALL_PYTHON_EXAMPLES=ON \
-D INSTALL_C_EXAMPLES=OFF \
-D OPENCV_ENABLE_NONFREE=ON \
-D OPENCV_EXTRA_MODULES_PATH=<path to your opencv_contrib/modules folder> \
-D PYTHON_EXECUTABLE=~/.virtualenvs/cv/bin/python \
-D BUILD_EXAMPLES=ON ..

make -j4

sudo make install

sudo ldconfig

Thanks for sharing the install configuration, Andrew!

I used a different one to get everything running with CUDA.

cmake -D CMAKE_BUILD_TYPE=RELEASE \
-D CMAKE_INSTALL_PREFIX=/usr/local \
-D WITH_CUDA=ON \
-D ENABLE_FAST_MATH=1 \
-D CUDA_FAST_MATH=1 \
-D WITH_CUBLAS=1 \
-D WITH_GSTREAMER=ON \
-D WITH_LIBV4L=ON \
-D OPENCV_ENABLE_NONFREE=ON \
-D OPENCV_EXTRA_MODULES_PATH=../../opencv_contrib/modules \
-D BUILD_EXAMPLES=OFF ..

It required a 64GB card minimum for me, and I had to use a swap usb drive since the memory requirements redlined. Jetsonhacks has a good video on the swap drive. Installing OpenCV required 17.8GB of space beyond the opencv zip download, it was incredible.

Final symbolic link was different too:

`ln -s /usr/local/lib/python3.6/site-packages/cv2/python-3.6/cv2.so cv2.so`

Wow, thanks for sharing this Rich!

Great! yes looking forward for more NANO articles, particularly using OpenCV DNN for CUDA, as I see lot of OpenCV in your articles, but using it in Jetson platform will be a challenge due to the lack of support, as your code runs on frugal powered Pi, I can’t imagine what you can achieve with NANO.

One of the problems you’ll run into is that the “dnn” (i.e., Deep Neural Network) module of OpenCV does not yet support CUDA. Ideally it will soon but it’s one of the current limitations of OpenCV. You’ll need to use whatever library the model was trained on to access the GPU and then just use OpenCV to facilitate reading frames/images, image processing, etc.

That’s one aspect that the Movidius NCS really has going for it — basically one line of code added to the script and you’re able to perform faster inference. (tutorial here if you’re interested)

Hello Adrian,

I wonder how difficult it is to set up OpenVINO with the Jetson Nano. I’d like to do some work with the emotion recognition sample available for OpenVINO. Any suggestions about emotion recognition would be greatly appreciated.

Thank you for your tutorial!

I don’t know about OpenVINO but as far as emotion recognition goes, I cover that inside Deep Learning for Computer Vision with Python.

The renders of the module show that there is a WiFi on the module, but not populated. Therefore we will see a connected version of it, just not on the dev board for now.

Got it, that makes sense.

Thanks a lot. Adrian. There will be a new “toy” for me. Ha Ha!

Thanks Lucas.

Hi Adrian,

Thanks a lot for the new post, that’s excellent, please continue this post.

I want to deploy ssd_mobilenet on the Jetson, and I want to use TensorRT for this. What should I do? How do I convert a TensorFlow Object Detection API .pb file to TensorRT format? In this code you use the TensorRT library, right? Do you write TensorRT code for this, or does cv.dnn use TensorRT by default?

I’ll be covering how to train your own object detectors, convert them, and then deploy them to the Nano inside Raspberry Pi for Computer Vision.

Maybe this could help: https://www.dlology.com/blog/how-to-run-keras-model-on-jetson-nano/

I was investigating this, and found a way to use the TensorRT “create_inference_graph” method.

Let me know if it helps !

Thanks for sharing, Vivek!

I am really impressed that you keep your blog updated so that new technologies are always covered. Thank you Adrian, and keep going, please :).

Thanks Mehdi, I really appreciate that 🙂

Hi Adrian,

A great article. I tested it on my Jetson nano and its fantastic. Thank you for making things easy. Eagerly waiting for content on jetson nano in RPi for CV ebook.

Kudos.

Paawan.

Thanks Paawan — and congrats on getting the example to run on your Nano 🙂

I love you man

Your tutorials are always in the right place and always at the right time

Thanks, I’m glad you’re enjoying the guides 🙂

WOW!

Because of you I bought the Jetson Nano today and started to play with it.

First of all, thanks a lot for your work and for your help.

One question: how can I connect an RTSP camera rather than a home camera?

I would like to play with a home security camera rather than a room camera.

And thanks a lot for your efforts.

Thanks Zeev, I’m glad you liked the guide. I hope you enjoy hacking with the Nano!

As far as RTSP goes, I don’t really like working with that protocol. See this tutorial for my suggested way to stream frames and consume them.

About the OpenCV issue:

On the Nano you should install OpenCV 4 (how lucky that I bought your e-book for that).

For the install script, just use the following link:

https://github.com/AastaNV/JEP/tree/master/script

OpenCV should be able to decode rtsp streams using:

vs=cv2.VideoCapture(rtspURL)

ret, frame = vs.read()

As long as the rtspURL plays in VLC.
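The two lines above read a single frame. A minimal sketch of a full read loop, assuming OpenCV's `cv2.VideoCapture` semantics (`read()` returns an `(ok, frame)` pair), might look like this. `read_stream` is a hypothetical helper name, and the capture object is passed in so the loop can be tried without a camera.

```python
def read_stream(capture, max_frames):
    """Read up to max_frames frames from an OpenCV-style capture object.

    `capture` can be anything exposing read() -> (ok, frame) and release(),
    such as cv2.VideoCapture(rtsp_url). The loop stops as soon as read()
    reports failure, which is typically how a dropped stream shows up.
    """
    frames = []
    try:
        while len(frames) < max_frames:
            ok, frame = capture.read()
            if not ok:
                # No frame was returned: end of stream or lost connection.
                break
            frames.append(frame)
    finally:
        # Always free the underlying decoder/network resources.
        capture.release()
    return frames
```

With OpenCV available, `read_stream(cv2.VideoCapture(rtsp_url), 100)` would return up to 100 frames, where `rtsp_url` is a placeholder for your camera's address. In a real application you would process each frame inside the loop rather than keep them all, since full-resolution frames add up quickly in the Nano's shared RAM.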

On a Pi3B+ with an NCS, using RTSP streams drops my FPS from about 6.5 to 4.5. Perhaps the Nano can do better.

On various systems I’ve used OpenCV versions 3.4.2 to 4.1.0, although certainly not all the variations in between 🙂

My project is here: https://github.com/wb666greene/AI_enhanced_video_security/blob/master/README.md

Any reason that script can’t be modified to build 4.1.0 instead of 4.0.0?

I try to avoid building OpenCV whenever possible, but key parts (for us) of OpenVINO have been Open Sourced by Intel (announced today https://github.com/opencv/dldt/blob/2019/inference-engine/README.md ) so I’m looking into the possibility of changing the x86-64 build to try compiling for arm64 of the Jetson Nano.

CMake projects generally give me fits, so I’m not really expecting success, but I feel it’s worth a try tomorrow while I’m stuck at home waiting for package deliveries.

Excuse me, have you successfully built the dldt project on the Jetson Nano? Can you please tell me how you solved the OpenCV problem?

Hi Adrian, very good article. It’s really helpful.

I have some questions.

I am using a Logitech C170 camera. Is it compatible with the Jetson Nano?

Error message: “fail to capture”.

Can you please help me with that?

I haven’t tried the Logitech C170 so I’m not sure. I used a Logitech C920 and it worked out of the box.

Is there anyone out there who actually understands how to use the TensorRT Python API so that this awesome tutorial would be more helpful in the real world? I blame NVIDIA for the poor docs, but there seems to be hardly any support for non-C++ coders.

You couldn’t actually use this to generate predictions that are usable in your python code, like, take this bounding box and send it somewhere. You could do this if it was all python code.

Any ideas Adrian or others how to do this?

Hey Rich — all of those questions are being addressed inside Raspberry Pi for Computer Vision. I’ll likely do TensorRT + Python blog posts as well.

Does the Jetson Nano not come with OpenCV preinstalled? I have a Jetson Xavier, and with the JetPack SDK installer it was one of the additional libraries pushed to the device when flashing it. If it does come preinstalled on the Nano, why can’t you use that version? I was able to get this real-time object detection library to work on my Jetson with the preinstalled OpenCV: https://github.com/ayooshkathuria/pytorch-yolo-v3. There was some custom stuff I had to do because I am using some Jetson-specific cameras (https://www.e-consystems.com/nvidia-cameras/jetson-agx-xavier-cameras/four-synchronized-4k-cameras.asp), but it is performing well with GPU support. If it does not come preinstalled, maybe you could get some help from this post, which you may already be aware of: https://www.jetsonhacks.com/2018/11/08/build-opencv-3-4-on-nvidia-jetson-agx-xavier-developer-kit/

It does come pre-installed. If you want to use a virtualenv, though, I believe you have to build all non-core modules yourself (though you might be able to symlink your install?).

Mine seems to have come with OpenCV 3.3.1, which is close to the minimum version required to support the dnn module.

I plan to try and install OpenVINO later today, which will install a “local” OpenCV 4.1.0 with OpenVINO mods to the dnn module.

I tried installing the Coral TPU and all looked to go fine, but running the parrot demo fails with:

File "/usr/local/lib/python3.6/dist-packages/edgetpu/swig/edgetpu_cpp_wrapper.py", line 18, in swig_import_helper

fp, pathname, description = imp.find_module('_edgetpu_cpp_wrapper', [dirname(__file__)])

File "/usr/lib/python3.6/imp.py", line 297, in find_module

raise ImportError(_ERR_MSG.format(name), name=name)

ImportError: No module named '_edgetpu_cpp_wrapper'

I’m going to poke around a bit more before giving up and trying OpenVINO.

I’ve got the Coral TPU running on my Jetson; I found the solution via Google:

cd /usr/local/lib/python3.6/dist-packages/edgetpu/swig/

sudo cp _edgetpu_cpp_wrapper.cpython-35m-aarch64-linux-gnu.so _edgetpu_cpp_wrapper.so

Details were here: https://github.com/f0cal/google-coral/issues/7

Seems the install script fails to create the required symlink for arm64.
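The fix above copies the aarch64 build of the wrapper to the name Python looks for. A minimal Python sketch of the same repair done as a symlink (which is what the install script apparently should have created) is below. It assumes the python3.6 `dist-packages` path from the traceback, `link_edgetpu_wrapper` is a hypothetical helper name, and it needs root privileges (for example `sudo python3`) because that directory lives under /usr/local.

```python
import glob
import os

# Path taken from the traceback above; adjust it if your Python version differs.
SWIG_DIR = "/usr/local/lib/python3.6/dist-packages/edgetpu/swig"


def link_edgetpu_wrapper(swig_dir=SWIG_DIR):
    """Make _edgetpu_cpp_wrapper.so point at the aarch64 build if it is missing."""
    link_path = os.path.join(swig_dir, "_edgetpu_cpp_wrapper.so")
    if os.path.lexists(link_path):
        # Already present (file or link): nothing to do.
        return link_path
    pattern = os.path.join(
        swig_dir, "_edgetpu_cpp_wrapper.cpython-*-aarch64-linux-gnu.so")
    candidates = sorted(glob.glob(pattern))
    if not candidates:
        raise FileNotFoundError("no aarch64 wrapper found in " + swig_dir)
    # Relative target, equivalent to running `ln -s <build>.so _edgetpu_cpp_wrapper.so`
    # from inside swig_dir.
    os.symlink(os.path.basename(candidates[0]), link_path)
    return link_path
```

A symlink keeps a single copy of the library, so reinstalling the package updates both names at once; the `cp` approach from the comment works just as well if you prefer a plain file.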

Hi Adrian. As always, thanks for the amazing tutorial. I am building a computer vision application based on face detection, so I really need the cv2.dnn module. As you mentioned, this won’t be available on NVIDIA GPUs until the end of the summer. I also have an Intel NCS, but not a Raspberry Pi. So I was wondering whether it is possible to use the Jetson Nano + NCS with the cv2.dnn module.

If this is not possible I will start using a Raspberry Pi + NCS.

Thanks

That may be possible but I have not tried.

… why use the way-overpowered cv2.dnn when you can use a simple Haar cascade for face detection? Perhaps you’re talking about facial recognition?

Hey Adrian!

I found this script for building OpenCV on Jetson Nano. The NVIDIA peeps linked to it. Have you had a chance to try it already?

https://devtalk.nvidia.com/default/topic/1049972/opencv-cuda-python-with-jetson-nano/

https://github.com/AastaNV/JEP/blob/master/script/install_opencv4.0.0_Nano.sh

Thanks!

Alex

I have not tried yet. It’s on my todo list though 🙂

Hi Adrian! Thanks for the post; it was very helpful when setting up my Jetson Nano.

I had a few questions:

– My Jetson froze a few times while running install scripts for scipy and keras. I think it’s a RAM issue. Do you know if it’s possible/what the best way is to enable a swap file?

– When deploying custom models, do you know if it is faster to use TensorRT to accelerate the model rather than simply running it on Keras/Tensorflow?

– Finally, is there a reason why you use get-pip.py over apt-get install python3-pip?

Thanks so much for the post!

See the other comments regarding SciPy. Try creating a swapfile.

TensorRT should theoretically be faster but I haven’t confirmed that yet.

Hi Adrian,

Is there anything one can do if the Jetson Nano keeps hanging on ‘Running setup.py install for scipy’?

It hangs on that for some time and then the whole thing freezes up. Am I supposed to do something with a swapfile?

Thank you!

See the other comments — try creating a swapfile.

Hi Adrian, after reading lots of articles and your post about the Jetson Nano, I’m very confused about whether I should buy a Raspberry Pi for development or save money for the Jetson Nano. I’m afraid the community support for the Jetson is not as large as for the Raspberry Pi.

Thanks for all your articles, I learn a lot from them :))

It really depends on what you are trying to build and the goals of the project. Could you describe what you would like the RPi or Nano to be doing? What types of models do you want to run?

Hi. Great article. However, there is a point you may have overlooked. In order to get the SciPy install to work I had to create a swapfile first. Part of the install uses all the available RAM (about 110MB of extra RAM is required). Otherwise my Nano crashed, and not very gracefully.

Thanks for sharing. I didn’t need a swapfile though, strange!

Hello

Just in case someone has the same problem as me.

I followed the tutorial and when I tried to install scipy:

$ pip install scipy

My Nano hangs after about 10 minutes.

I saw that my Nano had no swapfile configured, so I created one and tried to install SciPy again, and then it worked. SciPy is installed.

Do you think I should remove the swapfile after installing everything? I know it lives on the SD card…

Thanks for sharing, Jorge! How large was your swapfile?
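Several readers only discovered the missing swap after the SciPy build froze the Nano. A minimal sketch for checking beforehand, assuming a Linux kernel that exposes `/proc/swaps` (whose `Size` column is reported in KiB), could look like this; both function names are hypothetical.

```python
def total_swap_kib(proc_swaps_text):
    """Sum the Size column (KiB) of /proc/swaps-formatted text."""
    total = 0
    # The first line is the header: Filename Type Size Used Priority.
    for line in proc_swaps_text.strip().splitlines()[1:]:
        fields = line.split()
        if len(fields) >= 3:
            total += int(fields[2])
    return total


def read_total_swap_kib(path="/proc/swaps"):
    """Read the live swap configuration (Linux only)."""
    with open(path) as handle:
        return total_swap_kib(handle.read())
```

Running `print(read_total_swap_kib() / 1024.0)` on the Nano prints the configured swap in MiB. If it reports 0, a swapfile can be created with the standard `fallocate`, `mkswap`, and `swapon` tools before rerunning `pip install scipy`.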

Hi Adrian,

Is the Jetson Nano meant to be used only for computer vision tasks, or can I use it in place of a GPU to train models? Thanks for the tutorials, I’m always learning something new.

No, you don’t use the Jetson Nano for training, only for inference.

Hi Adrian, I am having a problem during the SciPy installation. It gets stuck forever; do you know what the problem could be?

Thanks

It’s not stuck. SciPy will take a while to compile and install.

I am working my way through the installation (for the second day) because the system keeps locking up. It just freezes, even the System Monitor. I am running it low power, with one browser page, one terminal, and one System Monitor window open. It eventually recovers, but this happens so often the system is almost useless. Nothing seems to interrupt it, but if the screen saver comes on, the picture will swap every couple minutes (this happens when I am away) but getting the prompt to unlock it is hit and miss. When I pull the plug to hard reboot, the package has finished installing.

One different thing is that I am using a larger memory card (200 GB) but nothing warns me about that being a problem.

So far, system updated, scipy and TF installed, and watching nothing happen for the last 20 minutes while installing scipy.

Update, it appears that running at 4k UHD is what the problem was. When I downgraded resolution to 1920, it became completely stable and let me finish the install.

Thanks for sharing, Bob!

In your examples you run the binaries from ~/jetson-inference/build/aarch64/bin

But sudo make install puts copies of them into /usr/local/bin and so you can just run them from anywhere

The problem is you need to tell them where to get the libjetson-inference.so library

Add this to your ~/.bashrc file

export LD_LIBRARY_PATH=$LD_LIBRARY_PATH:/usr/local/lib

Nice, thanks for sharing Rob!
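To confirm that the `export` line above actually makes `libjetson-inference.so` visible, a small check over the colon-separated `LD_LIBRARY_PATH` entries can help. This is a minimal sketch with a hypothetical helper name; it only inspects `LD_LIBRARY_PATH`, whereas the real dynamic loader also consults its cache and default directories.

```python
import os


def find_shared_library(name, search_path=None):
    """Return the first LD_LIBRARY_PATH directory containing `name`, or None.

    Only LD_LIBRARY_PATH is searched here; the real dynamic loader also
    consults its cache and default directories.
    """
    if search_path is None:
        search_path = os.environ.get("LD_LIBRARY_PATH", "")
    for directory in search_path.split(":"):
        # Empty entries (e.g. from a leading ':') are skipped for simplicity.
        if directory and os.path.isfile(os.path.join(directory, name)):
            return directory
    return None
```

After running `source ~/.bashrc`, `find_shared_library("libjetson-inference.so")` should return `/usr/local/lib` if `sudo make install` placed the library there.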

My Jetson Nano gets stuck on the ‘pip install scipy’ command.

Does anyone have the same problem?

Make sure you read the other comments on the post. Try creating a swap file as other readers suggested.

Just managed to install SciPy on an RPi 3 Model B+. It crashed once after 20 minutes and I went through the comments to see if anyone had run into it before. I ran pip install scipy again after a cold boot and waited 70 minutes for it to complete successfully. I was planning to increase the default swap space from 100 MB to 1024 MB if it had failed.

Hello Adrian, thank you for everything you do. I have a problem: I installed the image on a 64GB card, but when I power on the Nano the screen stays black and only the green power LED stays on. I used a 64GB Kingston card and followed the NVIDIA instructions.

I have not encountered that error before so I unfortunately do not know. Try reflashing the .img file. I would also post the issue on the official Jetson Nano forums.

Hi Adrian,

I have the same question: which one should I purchase, a Jetson Nano or an RPi + accelerator?

1. I’m doing a school IoT project on real-time video object detection, especially instance segmentation, and would like to use Mask R-CNN or another instance segmentation model for this purpose.

I would like to measure the speed and accuracy as a reference/comparison with my other method implemented on an IoT device.

So I hope it can handle my task.

2. Do you think it’ll be very hard for me to use Jetson because the community for Jetson is smaller than Raspberry Pi?

3. If I purchase Raspberry Pi 3 B+, which one do you recommend, Google coral or Intel NCS?

Thank you very much!


The Jetson community is smaller, but keep in mind that while the RPi community is huge, not all of the RPi community performs CV and DL. My recommendation for you would probably be a Jetson Nano. It’s faster and has a dedicated onboard GPU.

I am a Raspberry Pi enthusiast, strictly with Python. (I tried an Orange Pi for better hardware, but quit in frustration due to its incompatibility with the RPi packages, e.g., GPIO for LEDs and servos, i.e., robotics.)

Any insight for running RPI code, unmodified, on the Jetson Nano?

Seems like I’ll run into the same limitations.

https://github.com/NVIDIA/jetson-gpio/issues/1

(note: maybe this will be in your upcoming comparison between Raspberry, Coral, and Jetson? – I’ll wait)

What do you mean by “unmodified” RPi code?

Hey Bingxin Hou,

I have a similar task to do. I just wanted to know whether you were able to run an instance segmentation model on the Jetson Nano.

Thanks Adrian!

I’m still trying to follow your instructions above. The “nano ~/.bashrc” command returned “bash: nano: command not found”. Also, I am still searching for .bashrc and can’t find it anywhere.

Your thoughts?

Derek

That’s definitely not correct — try re-flashing your Nano .img file to your micro-SD card, I think you may have messed up your paths.

I have the same issue although I have tried several times (re-flashing).

I think there is something wrong with the image. Mine is “jetson-nano-sd-card-image-r3223” from 19.11.2019.

Besides, I have unstable internet connection and I can’t use SSH from a PC, it says “connection refused”

A side question: I am using a 128GB SD card. Would that matter?

I asked the same question at the Jetson-Nano official forum, no response yet.

Any pointers would be greatly appreciated.

Regarding “The ‘nano ~/.bashrc’ returned ‘bash: nano: command not found’”:

Happened to me too: it turns out nano just isn’t installed (try the ‘nano’ command in your terminal).

‘sudo apt-get install nano’ should do it if you encounter this problem.

I have to use “sudo -H” for the pip install jumpy and I still get an error stating “Error: uff 0.5.5 ..protobuf≥3.3.0 required”

Is that a typo? Do you mean “numpy”?

sudo -H seems to be required for me too, otherwise I get error “Could not build wheels…”

Seems to be installing fine now with sudo -H

Great intro as always, thanks,

Johann

My Jetson arrived yesterday, without this great tutorial I’d have been getting nowhere fast!

Very smooth installation and setup with your instructions.

However ~5fps “person detection” with C++ code is most unimpressive compared to what I’ve gotten on Pi3B+ with NCS and the overhead of servicing multiple cameras, or your Coral tutorial, both running Python code.

Maybe Jetson does better on other networks, but this is my main concern at the moment.

I’m sorry, I think I posted this in the Coral TPU tutorial by mistake, although it is relevant there as well.

I am less than impressed with the Jetson Nano Cuda/GPU “person detection” in this tutorial, ~5fps seems hardly worthwhile compared to previous tutorials on the Pi3B+ with Coral TPU or OpenVINO

But the Jetson CPU makes a great host for the Coral TPU!

Running your Coral tutorial detect_video.py modified for timing and using a USB webcam I get the following results with my HP HD usb webcam:

Pi3B+ ~6.5 fps (Raspbian, 32-bit, USB2)

Odroid XU-4 ~19.8 fps (Mate16, 32-bit, USB3)

i3-4025 ~46.4 fps (Mate16, 32-bit, USB3)

Nano ~44.5 fps (Ubuntu18, 64-bit, USB3)

I know my HP webcam is not doing 40+ fps, imutils is returning duplicate frames, but the inference loop is processing that many images per second.

The Tegra arm64 might just be the best bang/buck “sleeper” among the small, low power Pi-like computers. My i3 Mini-PC needs a 60W power supply the others are under 25W.

Thanks for sharing these numbers, Wally! I really appreciate it (as I’m sure other readers do as well).
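Wally's numbers count how many images the inference loop processes per second, which can exceed the webcam's real frame rate when the threaded reader hands back duplicate frames. A minimal sketch of such a loop counter, in the spirit of the FPS helper used in these tutorials (the `LoopFPS` class here is a hypothetical stand-in, not the imutils API), is below; the clock is injectable so the arithmetic can be checked without a camera.

```python
import time


class LoopFPS:
    """Count loop iterations and report them per second of wall-clock time."""

    def __init__(self, clock=time.perf_counter):
        self._clock = clock
        self._start = None
        self._end = None
        self._frames = 0

    def start(self):
        self._start = self._clock()
        return self

    def update(self):
        # Call once per processed (inferred) frame, even if it is a duplicate.
        self._frames += 1

    def stop(self):
        self._end = self._clock()

    def fps(self):
        elapsed = self._end - self._start
        return self._frames / elapsed if elapsed > 0 else 0.0
```

Calling `start()` before the detection loop, `update()` after each inference, and `stop()` afterwards gives the inference rate; measuring the camera's own rate would additionally require skipping frames identical to the previous one.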

Hi Adrian, going forward with the Jetson Nano setup, I am stuck on the SciPy installation. I followed your instructions and successfully installed NumPy and TensorFlow, but now I’m stuck on SciPy. I am including the error below. Any help will be very useful.

ERROR: Could not build wheels for scipy which use PEP 517 and cannot be installed directly

Hi Adithya, did you solve it?

Have you figured out how to solve that problem? I’m having the same issue here and don’t know how to deal with it.

I already tried pip install pep517 and it is still not working.

Hi, I do not know if you solved the problem, but I had the same error and I could install it in the following way

$ pip install --no-binary :all: scipy

source: https://github.com/pypa/pip/issues/6222

pip install pep517

Then sudo apt upgrade.

It worked for me; I had the same error.

Great tutorial, Adrian; congratulations on your work.

I am thinking of taking a master’s degree in computer vision and image processing. Would you accept being my advisor? It would be like winning the Mega-Sena in Brazil. lol

I look forward to your tutorial on installing OpenCV on a Jetson Nano.

I’m flattered that you would want me to be your advisor; however, I am not affiliated with any university so I cannot take on MSc or PhD students.

OK Adrian, understood!

Hi Adrian,

Great article again. Thanks and congrats.

I got a problem after cmake has finished configuring the build. I typed “make” but I got the following error:

make: *** No targets specified and no makefile found. Stop.

When I checked the content of the build folder, I realized that there is no makefile indeed. What may be the possible cause and what should I do?

The “cmake” step failed. Go back to the cmake step and examine it for errors.

Thanks Adrian. I was able to resolve this

Congrats on resolving the issue, Gopal!

Hello Adrian, I was able to install Keras following your instructions. However, when I ran classify.py (taken from one of your CNN Keras classifier tutorials), I got the following error on the Jetson Nano: “… tensorflow.python.framework.errors_impl.InternalError: Dst tensor is not initialized”. The error is quite long, but I have pasted the main part here. I am completely lost. From a Google search, it seems the cause is insufficient GPU memory. Please help me overcome this.

This is great article, easy to follow, helped with the initial setup. Waiting for the opencv article.

Thanks Vignesh!

Hi Adrian Rosebrock

I follow all your articles. They are crisp and clear and have helped me a lot with my projects. Thanks!!!

Recently, I bought the Jetson developer kit and sadly I used a 16GB card and ran out of storage. Haha, I should have gone through your article first.

So, what should I do to increase the storage?

1. I understand that my kernel is on the present SD card (16GB). How can I use a new SD card with more storage? Should I reflash, and what are the steps involved in using a new SD card? I tried flashing a 512GB SD card and inserting it into the Nano board (which had already been set up with the 16GB card), but the reinstallation didn’t happen.

2. What requirements should I consider when buying a new SD card (storage, type)?

It sounds like you weren’t too far along in the install/config process so I would recommend starting with a new, larger SD card and then a fresh install.

Thanks so much. I’m a hw guy but was able to make my way through and am up and running.

Thanks Jeff, I’m glad you liked the tutorial!

Wow! as usual amazing tutorial and just on time!

Thank you so much Adrian!

p.s.

Maybe it will help someone:

I had permission problems with all the installations in the virtual env, and to fix it I just had to add “sudo” before each one; then the installations went perfectly 🙂

I forgot to add to the fix: a “sudo apt-get update” was needed just after creating the virtual env and before installing the rest of its packages.

I installed Pytorch using the prebuilt wheel provided by NVIDIA https://devtalk.nvidia.com/default/topic/1049071/jetson-nano/pytorch-for-jetson-nano/. When I move my models & tensors to the GPU, predictions become very slow. Should I switch to TF / Keras? Or am I missing something…

Sorry, I haven’t used PyTorch with the Nano so I’m not sure what the issue is there.

Hey Adrian, what exactly do the system package prereqs do?

As soon as the camera screen opens, the Nano shuts down automatically.

Please help.

When I had this problem, it was due to not enough current coming from the power supply. A 5V, 2A USB power supply will not be enough to power the GPU, and when it tries, the entire board loses power. I switched to a 5V, 4A supply through the barrel jack and had no more problems.

I ran through all of this tutorial and hooked up a PiCamera (v2) instead of a USB webcam, and got 40-55 fps at 1360×768 running object detection (using unmodified imagenet-camera)

Thanks for your clear instructions and many tutorials.

Hi Adrian ,

Thanks for the nice post. I completed the installation successfully following your steps, and when I started running

./detectnet-camera

the Jetson Nano board restarts. I also tried adding swap memory, but nothing helps.

I am using a CSI camera (Pi Camera v2). Please share your thoughts on this.

I think this part is wrong…

“#define DEFAULT_CAMERA -1 // -1 for onboard camera, or change to index of /dev/video V4L2 camera (>=0)” You say we can find it in the .cpp file, but this code is Python code; C doesn’t have “#” comments. Also, I looked in the imagenet-camera.cpp file (which, by the way, is in /jetson-inference/examples) and there was nothing about default camera 0. But it was in the .py file with the same name in the python folder.

The “#” is not a comment. It’s part of the “#define” statement. Give the file another look.

Adrian

The latest version of the inference engine code does not have the #define camera statement and also has Python examples. You can now set the camera input for either the Python or the C++ example from the command line. I might be mistaken, but I think that is the source of the confusion here.

Ah okay, that makes sense. Thanks for the clarification Steven!

Thanks Steven for the clarification, I had the same confusion, now with the latest release of the code you don’t have to change the code to change cameras.

The command (for USB camera) from ~/jetson-inference/build/aarch64/bin is:

./imagenet-camera --camera /dev/video0
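The `--camera` argument takes a V4L2 device node, and which `/dev/videoN` your USB webcam gets depends on what other cameras are attached. A minimal sketch for listing the candidates in numeric order, assuming the usual `/dev/videoN` naming (`list_video_devices` is a hypothetical helper name), is:

```python
import glob
import os
import re

_VIDEO_NODE = re.compile(r"video(\d+)$")


def list_video_devices(dev_dir="/dev"):
    """Return V4L2 device nodes such as /dev/video0, sorted by numeric index."""
    found = []
    for path in glob.glob(os.path.join(dev_dir, "video*")):
        match = _VIDEO_NODE.search(os.path.basename(path))
        if match:
            found.append((int(match.group(1)), path))
    # Sort on the integer index so video10 comes after video2.
    return [path for _, path in sorted(found)]
```

If `list_video_devices()` shows more than one node, try each path with `./imagenet-camera --camera <path>` until the feed from the intended camera appears.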

Yo Adrian. Check out NVIDIA DeepStream 4.0. A tutorial on installing that on the Nano would be really helpful. With DeepStream, you can do 4K at 60fps with the Jetson Nano while detecting.

Yes!! Adrian! Please cover it on the Nano and on Ubuntu as well!! This would be a lifesaver for the community!

Hi Adrian, thanks for this tutorial!! I’m looking forward to integrating this into my robot.

Do you have any plans for writing tutorials or a book for integrating this into ROS or other robot control systems? I’m planning on taking Udacity’s robotics nanodegree but I don’t know how good it is and I already know your books are awesome, so I’ll hold off if you’re going to release one soon.

Thanks again,

Robert

I’ve considered doing something related to ROS but haven’t committed yet. Maybe in the future but I cannot guarantee it.

Hello Adrian, thank you for your awesome tutorial.

I have a question: could I use GStreamer in the OpenCV install? I have a 2080 Ti GPU and I want to use GStreamer to accelerate frame grabbing and processing for multiple cameras on my GPU.

How can I use hardware decoding with OpenCV and Python?

For example, I have 10 cameras at 4K resolution.

Yes, you can use gstreamer with OpenCV. I don’t have any tutorials on it at the moment but I’ll definitely consider it for a future post.

Hi Adrian,

A while ago, I had a Lego League Robotics Team to whom I wrote weekly notes on AI. The notes were all conceptual. We did no programming except in the simple Mindstorms Robotics language. Later on, I got a Mindsensors kit and did the simple Python programming necessary to get a robot to follow a wall or a black line on the floor, but nothing more sophisticated. I would have liked to build a robot with a simple visual or IR-based navigation system, but that was way beyond my knowledge level.

So now NVIDIA has launched the Nano, and that appears to be a robust platform on which to build a robot using the Mindstorms mechanical kit and the Nano as its brain. The only problem is: “How to program it?” (That seems to be your expertise.)

The Mindstorms kit came with fairly specific programming instructions for the beginner. I think any directed effort created by you would need that level of detail. Let me know if you are willing to collaborate in this project.

Jim Noon

Thanks for the comment. I don’t have any experience with the Mindstorms kits, so unfortunately I don’t have any input there.

Hi Adrian, thank you very much. Just a small clarification: after the last update there is no constant for the camera ID; it must now be set via command-line arguments.

For instance, if you are using a USB cam, the command should be:

./imagenet-camera --camera /dev/video0

Again thank you for the great tutorial

Sorry, did not see the previous reply

Hello Adrian,

I have found your tutorial on Jetson nano very well explained, I just have purchased a Jetson nano module to learn/study machine learning /deep learning, and I happened see your offer, and books.

Question: does your course include practical applications focused on the Jetson Nano and RPi?

Or do I have to learn to use the Jetson module in order to implement your class examples?

Thank you,

Hey Adrian, yes: Raspberry Pi for Computer Vision is compatible with both the RPi and Jetson Nano. I’ve included instructions on how to use the Nano for CV and DL. Code is included as well.

Thank you for the excellent tutorial. I bumped into an error while I was following your instructions. Right after the command ./imagenet-camera, it pops up the error: ‘imagenet-camera: camera open for streaming

GST_ARGUS: Creating output stream

CONSUMER: Waiting until producer is connected…

GST_ARGUS: Available Sensor modes :

Segmentation fault (core dumped).’ Please help me fix the error. Thank you.

I found the solution. Type ./imagenet-camera --camera /dev/video0 and it will work.

Congrats on resolving the issue!

didn’t solve 🙁

./imagenet-camera --camera /dev/video0

“--”

now it worked

Hi Adrian, can I use the Jetson Nano for training a DNN model if the dataset is small, say 500MB?

Technically yes but I really don’t recommend it. The Nano isn’t meant for training anything substantial, it really should be used for inference.

Adrian,

So you’re telling me if I upload a python script along with a keras h5 file and execute that ANYWHERE in a directory on the Jetson Nano, the system will automatically use the GPU to speed up the processing? Or is there an additional step that I may be missing to take advantage of the 128 cores?

I thought there was an extra step that required you to build the code into the system?

You would need to ensure you have the Jetson Nano “tensorflow-gpu” package installed, but yes, once you have loaded the trained Keras model file the GPU on the Jetson Nano would be used for inference.

Thanks for the response pal.

Keep getting a seg fault when I run the detectnet and the imagenet.

Hello:

Do you have the tutorial now available on how to install OpenCV on the Jetson Nano?

Sorry no, not yet.

Nice post, very helpful.

Thank you very much

I have one question: how is the performance of OpenCV on the Jetson Nano? Does it run in real time?

I received the Jetson Nano the other day and managed to install/build OpenCV 4.1.1 with CUDA support.

I then tried a Python script that I have running on an Odroid N2 as well as on an “old retired” Lenovo laptop running Debian.

In the Python script I use YOLOv3 (full) and Darknet to check pictures for persons. On my laptop it takes just under 1 second, on the Odroid around 10 seconds, and on the Jetson about 4 seconds.

For my application, detecting people entering our premises and notifying me via the iPhone, I assume all are acceptable but I had higher expectations using the jetson

Also testing with the yolov3-tiny was for me a no-go since then it did not detect persons in some pictures where the full version was successful

I guess, without knowing, that I’m not using the power of the jetson correctly, maybe would be better to use TensorRT api like in this project: https://github.com/mosheliv/tensortrt-yolo-python-api

But I have not reached that far (yet)

Thank you Adrian, and keep going, please !!

We are waiting for the OpenCV install guide for the Jetson Nano.

That post will likely be published in January/February.

Hi Adrian,

Thanks for the interesting post. I have a concern about the CSI module, which only returns 8-bit (0-255) images. I wonder if there is any way I can modify it to read 10-bit images.

Thanh

How can I access the Jetson Nano remotely from Windows without connecting a mouse, keyboard, and monitor, using only a network cable?

See this tutorial on remote development.

Great tutorial. Two things.

First I don’t know if it has changed or not since the writing of this tuto but after my first boot, nano wasn’t installed so I had to install it.

Second, numpy used PEP 517 for building its wheel and there was a conflict with .cache/pip so I had to

sudo rm -rf ~/.cache/pip

as in the OpenCV for RPi tutorial.

Looking forward to the next tutorials on the Jetson Nano.

Hi Adrian, thank you for this tutorial. Everything works flawlessly except that my CSI camera feed is upside down (I’m using an IMX219-160). Any suggestions?

Hello, sorry to bother you, I found the solution: apparently, because I use an IMX219, I needed to change the flip mode to 0 (the default is 2) in gstCamera.cpp and rebuild it. Now it’s working perfectly.

Any suggestions for making detectnet more precise or accurate?

PS: Sorry, I forgot to mention that I’m using a Jetson Nano rev. B01 as my platform.
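The flip-mode fix above edits jetson-inference's gstCamera.cpp. If you read the CSI camera from OpenCV instead, the same setting is commonly passed through a GStreamer pipeline string. The sketch below builds one in the widely used `nvarguscamerasrc ! nvvidconv` form; it assumes OpenCV was built with GStreamer support, `csi_pipeline` is a hypothetical helper name, and the resolution and frame-rate defaults are illustrative. For `nvvidconv`, `flip-method=0` means no rotation and `flip-method=2` rotates the image by 180 degrees.

```python
def csi_pipeline(width=1280, height=720, framerate=30, flip_method=0):
    """Build a GStreamer pipeline string for a CSI camera on a Jetson."""
    if flip_method not in range(8):
        # nvvidconv accepts flip-method values 0 through 7.
        raise ValueError("flip_method must be between 0 and 7")
    return (
        "nvarguscamerasrc ! "
        "video/x-raw(memory:NVMM), width=(int){w}, height=(int){h}, "
        "format=(string)NV12, framerate=(fraction){fps}/1 ! "
        "nvvidconv flip-method={flip} ! "
        "video/x-raw, width=(int){w}, height=(int){h}, format=(string)BGRx ! "
        "videoconvert ! video/x-raw, format=(string)BGR ! appsink"
    ).format(w=width, h=height, fps=framerate, flip=flip_method)
```

`cv2.VideoCapture(csi_pipeline(flip_method=0), cv2.CAP_GSTREAMER)` would then open the camera; check `isOpened()` before reading, since a wrong sensor mode or a missing GStreamer backend makes the open fail silently.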

Can we host a Flask web application on Jetson Nano? I just wanted to know if we could deploy Flask web application into production and give the whole Jetson device to the user, is it feasible? Or is there a better solution?

Technically you could, but the Nano isn’t really meant to be a web server. As long as there wasn’t a lot of load I suppose it wouldn’t be an issue.