One of my favorite photography techniques is long exposure, the process of creating a photo that shows the effect of passing time, something that traditional photography does not capture.

When applying this technique, water becomes silky smooth, stars in a night sky leave light trails as the earth rotates, and car headlights/taillights illuminate highways in a single band of continuous motion.

Long exposure is a gorgeous technique, but in order to capture these types of shots, you need to take a methodical approach: mounting your camera on a tripod, applying various filters, computing exposure values, etc. Not to mention, you need to be a skilled photographer!

As a computer vision researcher and developer, I know a lot about processing images — but let’s face it, I’m a terrible photographer.

Luckily, there is a way to simulate long exposures by applying image/frame averaging. By averaging the images captured from a mounted camera over a given period of time, we can (in effect) simulate long exposures.

And since videos are just a series of images, we can easily construct long exposures from the frames by averaging all frames in the video together. The result is stunning long exposure-esque images, like the one at the top of this blog post.

To learn more about long exposures and how to simulate the effect with OpenCV, just keep reading.

Long exposure with OpenCV and Python

This blog post is broken down into three parts.

In the first part of this blog post, we will discuss how to simulate long exposures via frame averaging.

From there, we’ll write Python and OpenCV code that can be used to create long exposure-like effects from input videos.

Finally, we’ll apply our code to some example videos to create gorgeous long exposure images.

Simulating long exposures via image/frame averaging

Simulating long exposures via averaging is hardly a new idea.

In fact, if you browse popular photography websites, you’ll find tutorials on how to manually create these types of effects using your camera and tripod (a great example of such a tutorial can be found here).

Our goal today is to simply implement this approach so we can automatically create long exposure-like images from an input video using Python and OpenCV. Given an input video, we’ll average all frames together (weighting them equally) to create the long exposure effect.
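To make the equal-weight average concrete, here is a minimal NumPy sketch that runs on synthetic data rather than a real video. The tiny 4x4 grayscale "frames" and the average_frames helper are illustrative assumptions and are not part of the script we build below.

```python
import numpy as np


def average_frames(frames):
    """Average a sequence of same-sized frames, weighting each one equally."""
    # stack into one (num_frames, height, width) float array, then take
    # the mean along the frame axis
    stack = np.stack([f.astype("float") for f in frames], axis=0)
    return stack.mean(axis=0)


# build four synthetic 4x4 frames: a static background of 50 with one
# bright "moving" pixel (value 250) that shifts one column per frame
frames = []
for i in range(4):
    frame = np.full((4, 4), 50, dtype="uint8")
    frame[0, i] = 250
    frames.append(frame)

avg = average_frames(frames)
print(avg[0])  # top row: the moving pixel is smeared evenly across the row
print(avg[1])  # any other row: the static background is unchanged
```

The static pixels keep their value while the moving pixel is spread along its path, which is the same behavior we expect from rocks versus rushing water in a real video.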

Note: You can also create this long exposure effect using multiple images, but since a video is just a sequence of images, it’s easier to demonstrate this technique using video. Just keep this in mind when applying the technique to your own files.

As we’ll see, the code itself is quite simple but has a beautiful effect when applied to videos captured using a tripod, ensuring there is no camera movement in between frames.

Implementing long exposure simulation with OpenCV

Let’s begin by opening a new file named long_exposure.py , and inserting the following code:

# import the necessary packages
import argparse
import imutils
import cv2

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-v", "--video", required=True,
    help="path to input video file")
ap.add_argument("-o", "--output", required=True,
    help="path to output 'long exposure'")
args = vars(ap.parse_args())

Lines 2-4 handle our imports — you’ll need imutils and OpenCV.

In case you don’t already have imutils installed in your environment, simply use pip :

$ pip install --upgrade imutils

If you don’t have OpenCV configured and installed, head on over to my OpenCV 3 tutorials page and select the guide that is appropriate for your system.

We parse our two command line arguments on Lines 7-12:

--video: The path to the video file.

--output: The path + filename to the output “long exposure” file.
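If you would like to see what the parsed arguments look like without running the full script, the sketch below feeds the same parser an explicit argument list. Both paths are placeholders chosen for illustration, so substitute your own.

```python
import argparse

# same parser definition as in the script
ap = argparse.ArgumentParser()
ap.add_argument("-v", "--video", required=True,
    help="path to input video file")
ap.add_argument("-o", "--output", required=True,
    help="path to output 'long exposure'")

# pass an explicit argument list instead of reading sys.argv; both
# paths are hypothetical placeholders
args = vars(ap.parse_args(["--video", "videos/example.mov",
    "--output", "example.png"]))

# vars() turns the parsed namespace into a plain dictionary
print(args["video"])
print(args["output"])
```

Because vars() returns an ordinary dictionary, the rest of the script can look up args["video"] and args["output"] by key.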

Next, we will perform some initialization steps:

# initialize the Red, Green, and Blue channel averages, along with
# the total number of frames read from the file
(rAvg, gAvg, bAvg) = (None, None, None)
total = 0

# open a pointer to the video file
print("[INFO] opening video file pointer...")
stream = cv2.VideoCapture(args["video"])
print("[INFO] computing frame averages (this will take awhile)...")

On Line 16 we initialize RGB channel averages which we will later merge into the final long exposure image.

We also initialize a count of the total number of frames on Line 17.

For this tutorial, we are working with a video file that contains all of our frames, so it is necessary to open a file pointer to the video capture stream on Line 21.

Now let’s begin our loop which will calculate the average:

# loop over frames from the video file stream
while True:
    # grab the frame from the file stream
    (grabbed, frame) = stream.read()

    # if the frame was not grabbed, then we have reached the end of
    # the file
    if not grabbed:
        break

    # otherwise, split the frame into its respective channels
    (B, G, R) = cv2.split(frame.astype("float"))

In our loop, we will grab frames from the stream (Line 27) and split the frame into its respective BGR channels (Line 35). Notice the exit condition squeezed in between — if a frame is not grabbed from the stream we are at the end of the video file and we will break out of the loop (Lines 31 and 32).

In the remainder of the loop we’ll perform the running average computation:

    # if the frame averages are None, initialize them
    if rAvg is None:
        rAvg = R
        bAvg = B
        gAvg = G

    # otherwise, compute the weighted average between the history of
    # frames and the current frames
    else:
        rAvg = ((total * rAvg) + (1 * R)) / (total + 1.0)
        gAvg = ((total * gAvg) + (1 * G)) / (total + 1.0)
        bAvg = ((total * bAvg) + (1 * B)) / (total + 1.0)

    # increment the total number of frames read thus far
    total += 1

If this is the first iteration, we set the initial RGB averages to the corresponding first frame channels grabbed on Lines 38-41 (it is only necessary to do this on the first go-around, hence the if-statement).

Otherwise, we’ll compute the running average for each channel on Lines 45-48. The averaging computation is quite simple — we take the total number of frames times the channel-average, add the respective channel, and then divide that result by the floating point total number of frames (we add 1 to the total in the denominator because this is a fresh frame). We store the calculations in respective RGB channel average arrays.
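As a sanity check on the update rule, the sketch below applies the same running-average formula to a handful of random arrays and compares the result with a plain mean over all of them. The random 3x3 arrays are a hypothetical stand-in for one color channel, and the variable names avg, C, and channel_frames are chosen here for illustration only.

```python
import numpy as np

# hypothetical stand-in for one color channel across five frames
rng = np.random.default_rng(42)
channel_frames = [rng.integers(0, 256, size=(3, 3)).astype("float")
    for _ in range(5)]

avg = None
total = 0

for C in channel_frames:
    # first frame: the average is just the frame itself
    if avg is None:
        avg = C

    # later frames: weight the history by the number of frames seen so
    # far, add the new frame, and divide by the new frame count
    else:
        avg = ((total * avg) + (1 * C)) / (total + 1.0)

    total += 1

# averaging every frame in one shot should give the same result, up to
# floating point rounding
batch_mean = np.mean(channel_frames, axis=0)
print(np.allclose(avg, batch_mean))
```

The advantage of the running form is memory: only one averaged array per channel is kept around, no matter how many frames the video contains.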

Finally, we increment the total number of frames, allowing us to maintain our running average (Line 51).

Once we have looped over all frames in the video file, we can merge the (average) channels into one image and write it to disk:

# merge the RGB averages together and write the output image to disk
avg = cv2.merge([bAvg, gAvg, rAvg]).astype("uint8")
cv2.imwrite(args["output"], avg)

# do a bit of cleanup on the file pointer
stream.release()

On Line 54, we utilize the handy cv2.merge function while specifying each of our channel averages in a list. Since these arrays contain floating point numbers (as they are averages across all frames), we tack on the astype("uint8") to convert pixels to integers in the range [0-255].
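One detail worth knowing about the conversion: NumPy's astype("uint8") drops the fractional part of each value rather than rounding it. The difference is at most one intensity level per pixel, so it is unlikely to be visible, but the small sketch below shows both behaviors on made-up values. The rounding variant is an optional alternative, not what the script above does.

```python
import numpy as np

# made-up float averages, standing in for a tiny averaged channel
avg = np.array([[12.2, 127.9], [200.6, 254.99]])

# what the script does: the fractional part is simply dropped
truncated = avg.astype("uint8")

# optional alternative: round to the nearest integer first, and clip to
# the valid 8-bit range as a safeguard before casting
rounded = np.clip(np.rint(avg), 0, 255).astype("uint8")

print(truncated)
print(rounded)
```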

We write the avg image to disk on the subsequent Line 55 using the command line argument path + filename. We could also display the image to our screen via cv2.imshow , but since it can take a lot of CPU time to process a video file, we’ll simply save the image to disk just in case we want to save it as our desktop background or share it with our friends on social media.

The final step in this script is to perform cleanup by releasing our video stream pointer (Line 58).

Long exposure and OpenCV results

Let’s see our script in action by processing three sample videos. Note that each video was captured by a camera mounted on a tripod to ensure stability.

Note: The videos I used to create these examples do not belong to me and were licensed from the original creators; therefore, I cannot include them along with the source code download of this blog post. Instead, I have provided links to the original videos should you wish to replicate my results.

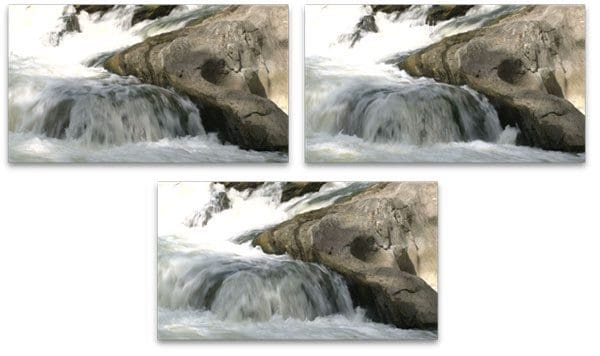

Our first example is a 15-second video of water rushing over rocks — I have included a sample of frames from the video below:

To create our long exposure effect, just execute the following command:

$ time python long_exposure.py --video videos/river_01.mov --output river_01.png
[INFO] opening video file pointer...
[INFO] computing frame averages (this will take awhile)...

real    2m1.710s
user    0m50.959s
sys     0m40.207s

Notice how the water has been blended into a silky form due to the averaging process.

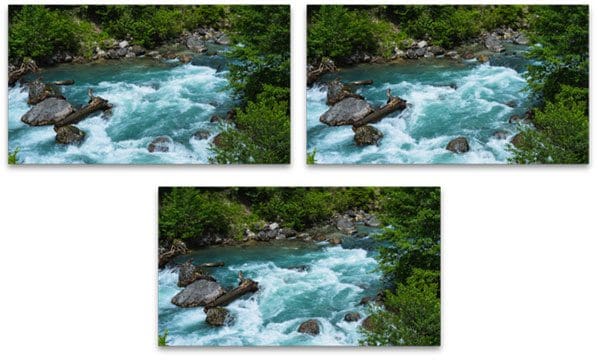

Let’s continue with a second example of a river, again, with a montage of frames displayed below:

The following command was used to generate the long exposure image:

$ time python long_exposure.py --video videos/river_02.mov --output river_02.png
[INFO] opening video file pointer...
[INFO] computing frame averages (this will take awhile)...

real    0m57.881s
user    0m27.207s
sys     0m21.792s

Notice how the stationary rocks remain unchanged, but the rushing water is averaged into a continuous sheet, thus mimicking the long exposure effect.

This final example is my favorite as the color of the water is stunning, giving a stark contrast between the water itself and the forest:

When generating the long exposure with OpenCV, it gives the output a surreal, dreamlike feeling:

$ time python long_exposure.py --video videos/river_03.mov --output river_03.png
[INFO] opening video file pointer...
[INFO] computing frame averages (this will take awhile)...

real    3m17.212s
user    1m11.826s
sys     0m56.707s

Different outputs can be constructed by sampling the frames from the input video at regular intervals rather than averaging all frames. This is left as an exercise to you, the reader, to implement.
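If you want a starting point for that exercise, here is one possible sketch, not a definitive solution. The average_every_nth helper and its step parameter are names invented for this example, and it works on any iterable of NumPy frames. It is demonstrated on synthetic single-channel frames so it runs without a video file.

```python
import numpy as np


def average_every_nth(frames, step):
    """Average only every `step`-th frame from an iterable of frames."""
    avg = None
    used = 0

    for (i, frame) in enumerate(frames):
        # skip frames that do not fall on the sampling interval
        if i % step != 0:
            continue

        frame = frame.astype("float")

        # same running-average update as the main script, but counting
        # only the frames that were actually sampled
        if avg is None:
            avg = frame
        else:
            avg = ((used * avg) + frame) / (used + 1.0)

        used += 1

    return (avg, used)


# ten synthetic 2x2 frames with intensities 0, 10, 20, ..., 90
frames = [np.full((2, 2), 10 * i, dtype="uint8") for i in range(10)]

# keep frames 0, 3, 6, and 9 only
(avg, used) = average_every_nth(frames, step=3)
print(used)
print(avg)
```

To use this with a real video, you would feed it the frames returned by stream.read() in the loop above. A color frame can be passed in whole, since the update works element-wise on arrays of any shape.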

Summary

In today’s blog post we learned how to simulate long exposure images using OpenCV and image processing techniques.

To mimic a long exposure, we applied frame averaging, the process of averaging a set of images together. We made the assumption that our input images/video were captured using a mounted camera (otherwise the resulting output image would be distorted).

While this is not a true “long exposure”, the effect is quite (visually) similar. And more importantly, this allows you to apply the long exposure effect without (1) being an expert photographer or (2) having to invest in expensive cameras, lenses, and filters.
