Table of Contents

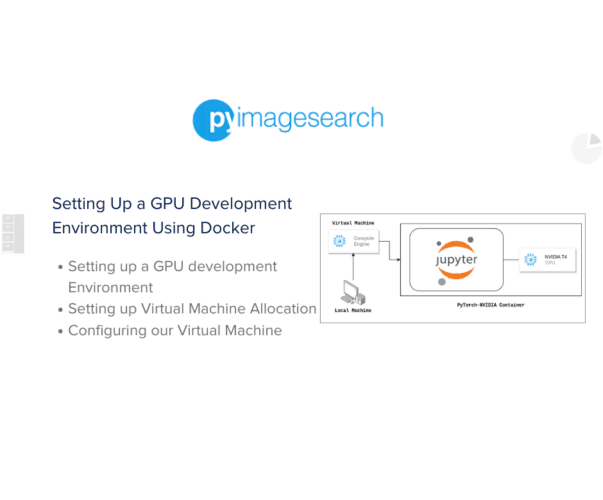

Setting Up a GPU Development Environment Using Docker

In this tutorial, you will learn how to set up a Graphics Processing Unit (GPU) development environment using Docker for machine learning.

This lesson is the last of a 3-part series on Docker for Machine Learning:

- Getting Started with Docker for Machine Learning

- Getting Used to Docker for Machine Learning

- Setting Up a GPU Development Environment Using Docker (this tutorial)

To learn how to set up your GPU development environment, just keep reading.


Introduction

Docker is steadily becoming ubiquitous in the modern development ecosystem. It has become the go-to tool for packaging a complete environment into a single unit, which spares developers much of the burden of checking software compatibility on every machine. As a result, many open-source repositories and large-scale projects have adopted it to distribute their environments.

Today, we will look at the following:

- Starting from scratch with a plain Virtual Machine on a Cloud Provider

- Setting up a Docker Container on the Virtual Machine

- Exposing ports on the container to allow network ingress/egress

- Connecting from a local machine to the Virtual Machine

By the end of this tutorial, you should be able to spin up and connect to a Docker-backed, GPU-enabled environment that is ready to use for any project.

A Quick Recap

We have covered a lot of ground in this series. If you missed the earlier posts, check them out below!

- Getting Started with Docker for Machine Learning: Covers all the basics of containerization and key gotchas

- Getting Used to Docker for Machine Learning: Covers how to use Docker and experiment with its Command Line Interface

As a quick summary, those posts covered the following:

- Basics of Containers

- What can Containers do?

- What makes Containers tick?

- What is Docker?

- What are some common utilities of the Docker CLI that we need to manipulate containers?

- What do a sample Dockerfile, image, and container look like?

- How can we push an image to Docker Hub?

- How can we pull an image from Docker Hub?

Containers are simple all-in-one packages that encapsulate everything you need to set up an environment, including files, dependencies, drivers, and more.

You can use Docker to create, manage, and run containers locally on your system. It can also be used on cloud providers (e.g., AWS, GCP, Azure, DigitalOcean) to set up containerized workflows.

Setting Up Our Virtual Machine Allocation

For this blog, we will use the Google Cloud Platform (GCP) to host a GPU-enabled Virtual Machine. To get started, log onto GCP.

Note: This tutorial requires a billing-enabled GCP account. You may incur some charges, although new GCP accounts receive a 90-day free credit of USD 300. This tutorial should cost no more than about USD 10.

- Navigate over to the GCP Compute Engine service and enter the VM Instances section.

- Click CREATE INSTANCE, give your instance a suitable name, and then click GPUs under Machine configuration. Select NVIDIA T4 as the GPU type with one GPU, and choose n1-standard-4 as the machine type.

- Scroll down to the Boot disk option and click on CHANGE. You will now get a pop-up with some options.

- In the pop-up, choose the following options:

  - Under Operating system, choose Container-Optimized OS

  - Set the size to 128 GB

  - Click SELECT and continue

- Within Firewall, enable the Allow HTTP traffic and Allow HTTPS traffic options.

- In summary, our instance will have the following:

  - An NVIDIA T4 GPU (the same accelerator a standard GPU-enabled Google Colab notebook has)

  - 4 vCPUs

  - 15 GB of memory

  - Container-Optimized OS (an OS image for GCP VMs that is optimized for running containers)

- Click CREATE to create the GPU-enabled VM instance.

- Now, search Firewall – VPC Network within the top navigation search bar and click the first option

- Click on Create a firewall rule and enter the wizard

- Enter the following details in the wizard:

  - Name: Give it a suitable name. We chose default-allow-jupyter.

  - Targets: Set All instances in the network for now. To restrict the rule to specific instances, choose Specified target tags instead and add the same network tag to those instances.

  - Source IPv4 ranges: Set 0.0.0.0/0 to accept traffic from any source IP address. If you are on an enterprise network and are sure that your traffic will be routed only through it, enter that network's IP range instead.

  - Protocols and ports: Select the TCP option and enter port 8080. This exposes only port 8080 of every instance in the network to external traffic. Do the same for UDP. When we start the Jupyter server later, we must make sure it uses port 8080 by passing the --port flag.


Awesome! We now have our entire system set up. We have an NVIDIA T4 GPU-enabled instance with Container OS installed and port 8080 exposed for external traffic. This is where we will run our Docker containers with full GPU acceleration.
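If you prefer the command line, the console steps above can be approximated with the gcloud CLI. This is a minimal sketch rather than the method used in this tutorial: it assumes gcloud is installed and authenticated against a project with billing and GPU quota, and the instance name gpu-dev, the zone us-central1-a, and the two function names are hypothetical placeholders. The http-server and https-server network tags are what the console's Allow HTTP/HTTPS traffic checkboxes apply, and --maintenance-policy=TERMINATE is set because VMs with attached GPUs cannot live-migrate during host maintenance.

```shell
#!/usr/bin/env bash
# Sketch: CLI approximation of the console steps. Names and zone are placeholders.

INSTANCE_NAME="${INSTANCE_NAME:-gpu-dev}"   # hypothetical instance name
ZONE="${ZONE:-us-central1-a}"               # assumption: a zone that offers T4 GPUs

# Create an n1-standard-4 VM with one T4 GPU, a 128 GB Container-Optimized OS
# boot disk, and the network tags used by the default HTTP/HTTPS rules.
create_gpu_vm() {
  gcloud compute instances create "$INSTANCE_NAME" \
    --zone="$ZONE" \
    --machine-type=n1-standard-4 \
    --accelerator=type=nvidia-tesla-t4,count=1 \
    --maintenance-policy=TERMINATE \
    --image-family=cos-stable \
    --image-project=cos-cloud \
    --boot-disk-size=128GB \
    --tags=http-server,https-server
}

# Allow inbound TCP and UDP traffic on port 8080 from any IPv4 address.
# No target tags are given, so the rule applies to all instances in the network.
create_jupyter_firewall_rule() {
  gcloud compute firewall-rules create default-allow-jupyter \
    --network=default \
    --direction=INGRESS \
    --action=ALLOW \
    --rules=tcp:8080,udp:8080 \
    --source-ranges=0.0.0.0/0
}
```

After sourcing the file, run create_gpu_vm and then create_jupyter_firewall_rule. Wrapping each command in a function keeps the flags in one place, so changing the zone or GPU type means editing a single line.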

Configuring Our Virtual Machine

To enable GPU acceleration, the NVIDIA GPU drivers must be installed on the host. Fortunately, Google provides a utility that simplifies this on Container-Optimized OS.

- SSH into the virtual machine. Go back to the GCP Compute Engine service and click the SSH button next to your instance. This opens an SSH session in a new browser window. The browser-based SSH client handles steps such as key transfer internally, which simplifies access.

- Enter the following commands. (Note: The driver installation and the mount commands need to be run every time the instance restarts, so it is convenient to put them in a startup script that runs them on every boot.)

sudo cos-extensions install gpu: This runs Google's utility that installs the NVIDIA CUDA driver.

```
sudo mount --bind /var/lib/nvidia /var/lib/nvidia
sudo mount -o remount,exec /var/lib/nvidia
/var/lib/nvidia/bin/nvidia-smi
```

- These commands remount the driver directory with execute permission and then run nvidia-smi to verify the driver installation. Its output should list the T4 GPU, confirming that the VM can now detect it.
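Because the driver installation and mounts have to be repeated after every restart, one option is to store them in the instance's startup-script metadata, which Compute Engine runs as root on each boot (so sudo is dropped). This is a hedged sketch: the function names and the file name gpu-startup.sh are hypothetical, and attaching the script assumes gcloud is available on the machine you run it from (for example, Cloud Shell) rather than on the VM itself.

```shell
#!/usr/bin/env bash
# Sketch: persist the GPU driver setup as a Compute Engine startup script.

# Write the startup script to the given path. Startup scripts run as root,
# so the tutorial's commands are used without sudo.
write_gpu_startup_script() {
  local path="$1"
  cat > "$path" <<'EOF'
#!/bin/bash
# Install the NVIDIA driver on Container-Optimized OS.
cos-extensions install gpu
# Remount the driver directory with exec so nvidia-smi and the driver binaries can run.
mount --bind /var/lib/nvidia /var/lib/nvidia
mount -o remount,exec /var/lib/nvidia
EOF
}

# Attach the script to an existing instance as its startup-script metadata.
attach_startup_script() {
  local instance="$1" zone="$2" path="$3"
  gcloud compute instances add-metadata "$instance" \
    --zone="$zone" \
    --metadata-from-file=startup-script="$path"
}
```

For example: write_gpu_startup_script gpu-startup.sh && attach_startup_script gpu-dev us-central1-a gpu-startup.sh, using your own instance name and zone. After the next restart, /var/lib/nvidia/bin/nvidia-smi should work without rerunning the commands by hand.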

```
docker run -it -d -p 8080:8080 \
  --volume /var/lib/nvidia/lib64:/usr/local/nvidia/lib64 \
  --volume /var/lib/nvidia/bin:/usr/local/nvidia/bin \
  --device /dev/nvidia0:/dev/nvidia0 \
  --device /dev/nvidia-uvm:/dev/nvidia-uvm \
  --device /dev/nvidiactl:/dev/nvidiactl \
  --ipc=host \
  --ulimit memlock=-1 \
  --ulimit stack=67108864 \
  nvcr.io/nvidia/pytorch:23.07-py3
```

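This starts a detached, GPU-enabled container from NVIDIA's PyTorch image. The --volume flags mount the host's NVIDIA driver libraries and binaries into the container, the --device flags expose the GPU device files, and -p 8080:8080 publishes container port 8080 on the VM. The --ipc=host and --ulimit flags (unlimited locked memory and a 64 MiB stack, since 67108864 = 64 × 1024 × 1024) are the settings NVIDIA recommends for its PyTorch containers.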

docker ps: This lists the running containers and gives us the container ID that we will use to start a shell session.

docker exec -it a8c bash: This enters the Docker container and runs the bash command to start a shell inside it. Replace a8c with the first few characters of your own container ID.

jupyter notebook --port=8080 --no-browser: This starts a Jupyter Notebook server inside the container, which you can then reach from your local machine's browser.

We have now started the Jupyter Notebook server inside the container that is running on a GCP VM.
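Instead of copying the container ID from docker ps by hand, you can look it up by image with the ancestor filter. The sketch below does that, checks that PyTorch inside the container can see the GPU, and then starts Jupyter; run it on the VM. The function names are hypothetical, and so are two flags: --ip=0.0.0.0 and --allow-root are not part of the original command. Jupyter binds to localhost and refuses to run as root by default, so these flags may be needed for the server to be reachable through the published port; they are harmless if the image's Jupyter configuration already sets them.

```shell
#!/usr/bin/env bash
# Sketch: locate the PyTorch container, check GPU access, then start Jupyter.
# Run these functions on the VM, where Docker and the container are running.

IMAGE="nvcr.io/nvidia/pytorch:23.07-py3"

# Print the ID of the first running container started from $IMAGE.
find_container_id() {
  docker ps --quiet --filter "ancestor=${IMAGE}" | head -n 1
}

# Ask PyTorch inside the container whether CUDA is usable; prints True or False.
check_gpu() {
  local cid="$1"
  docker exec "$cid" python -c "import torch; print(torch.cuda.is_available())"
}

# Start Jupyter in the foreground on port 8080 inside the container.
# Assumption: --ip=0.0.0.0 and --allow-root are added to the original command.
start_jupyter() {
  local cid="$1"
  docker exec -it "$cid" jupyter notebook --port=8080 --no-browser \
    --ip=0.0.0.0 --allow-root
}
```

For example, cid="$(find_container_id)" followed by check_gpu "$cid" should print True; start_jupyter "$cid" then prints the server URL with its login token.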

- Go back to the GCP Console. Your instance lists an External IP address. Copy it into a new browser tab and append :8080, so the URL looks like http://34.67.21.67:8080 (with your own IP address).

- The new tab shows a prompt for a password or token. Go back to the terminal and copy the token from the URL that Jupyter printed at startup (the value after token=). Paste it into the password field and log in.

- You will now see the Jupyter Home page, which is ready for use!
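The external IP address can also be read with gcloud instead of the console. This is a minimal sketch, assuming gcloud is authenticated and using placeholder instance and zone names; the function names are hypothetical. The --format expression prints the NAT IP of the instance's first network interface, which is the address the console shows as External IP.

```shell
#!/usr/bin/env bash
# Sketch: build the Jupyter URL for the VM from its external IP.

# Print the external (NAT) IP of the instance's first network interface.
get_external_ip() {
  local instance="$1" zone="$2"
  gcloud compute instances describe "$instance" \
    --zone="$zone" \
    --format='get(networkInterfaces[0].accessConfigs[0].natIP)'
}

# Print the URL to open in the browser, using port 8080 as in the tutorial.
jupyter_url() {
  local ip
  ip="$(get_external_ip "$1" "$2")"
  printf 'http://%s:8080\n' "$ip"
}
```

For example, jupyter_url gpu-dev us-central1-a prints a URL of the form http://<external-ip>:8080. To recover the token later, running jupyter notebook list inside the container (or jupyter server list on newer Jupyter versions) prints the running server's URL including its token.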

Congratulations! You have successfully set up an NVIDIA PyTorch container with CUDA 12.1 set up inside it, without any hassle. Use this notebook environment to run whatever you like!


Summary

This blog post concludes our series on Docker for Machine Learning. We accomplished the following tasks:

- Set up a Virtual Machine on GCP

- Set up Firewall Rules to allow Jupyter ports

- SSH into the Virtual Machine

- Start an NVIDIA PyTorch container on the VM and publish port 8080

- Open a shell inside the container with docker exec

- Start a Jupyter Server on the container

- Log into the server from your local machine’s web browser!

This will now allow you to run any script or notebook within the container, be it something as simple as running an MNIST Training example, training Stable Diffusion models, or hosting a server for inference! Godspeed!

Citation Information

Mukherjee, S. “Setting Up a GPU Development Environment Using Docker,” PyImageSearch, P. Chugh, A. R. Gosthipaty, S. Huot, K. Kidriavsteva, and R. Raha, eds., 2023, https://pyimg.co/8bf3a

@incollection{Mukherjee_2023_Docker4ML-P3,

author = {Suvaditya Mukherjee},

title = {Setting Up a {GPU} Development Environment Using {Docker}},

booktitle = {PyImageSearch},

editor = {Puneet Chugh and Aritra Roy Gosthipaty and Susan Huot and Kseniia Kidriavsteva and Ritwik Raha},

year = {2023},

url = {https://pyimg.co/8bf3a},

}
