Table of Contents

- Text Detection and OCR with Microsoft Cognitive Services

- Microsoft Cognitive Services for OCR

- Obtaining Your Microsoft Cognitive Services Keys

- Configuring Your Development Environment

- Having Problems Configuring Your Development Environment?

- Project Structure

- Creating Our Configuration File

- Implementing the Microsoft Cognitive Services OCR Script

- Microsoft Cognitive Services OCR Results

- Summary

Text Detection and OCR with Microsoft Cognitive Services

This lesson is part of a 3-part series on Text Detection and OCR:

- Text Detection and OCR with Amazon Rekognition API

- Text Detection and OCR with Microsoft Cognitive Services (today’s tutorial)

- Text Detection and OCR with Google Cloud Vision API

In this tutorial, you will:

- Learn how to obtain your MCS API keys

- Create a configuration file to store your subscription key and API endpoint URL

- Implement a Python script to make calls to the MCS OCR API

- Review the results of applying the MCS OCR API to sample images

To learn about text detection and OCR, just keep reading.

Text Detection and OCR with Microsoft Cognitive Services

In our previous tutorial, you learned how to use the Amazon Rekognition API to OCR images. The hardest part of using the Amazon Rekognition API was obtaining your API keys. However, once you had your API keys, it was smooth sailing.

This tutorial focuses on a different cloud-based API called Microsoft Cognitive Services (MCS), part of Microsoft Azure. Like Amazon Rekognition API, MCS is also capable of high OCR accuracy — but unfortunately, the implementation is slightly more complex (as is both Microsoft’s login and admin dashboard).

We prefer the Amazon Web Services (AWS) Rekognition API over MCS, both for the admin dashboard and the API itself. However, if you are already ingrained into the MCS/Azure ecosystem, you should consider staying there. The MCS API isn’t that hard to use (it’s just not as straightforward as Amazon Rekognition API).

Microsoft Cognitive Services for OCR

We’ll start this tutorial with a review of how you can obtain your MCS API keys. You will need these API keys to request the MCS API to OCR images.

Once we have our API keys, we’ll review our project directory structure and then implement a Python configuration file to store our subscription key and OCR API endpoint URL.

With our configuration file implemented, we’ll move on to creating a second Python script, this one acting as a driver script that:

- Imports our configuration file

- Loads an input image to disk

- Packages the image into an API call

- Makes a request to the MCS OCR API

- Retrieves the results

- Annotates our output image

- Displays the OCR results to our screen and terminal

Let’s dive in!

Obtaining Your Microsoft Cognitive Services Keys

Before proceeding to the rest of the sections, be sure to obtain the API keys by following the instructions shown here.

Configuring Your Development Environment

To follow this guide, you need to have the OpenCV and Azure Computer Vision libraries installed on your system.

Luckily, both are pip-installable:

$ pip install opencv-contrib-python $ pip install azure-cognitiveservices-vision-computervision

If you need help configuring your development environment for OpenCV, we highly recommend that you read our pip install OpenCV guide — it will have you up and running in a matter of minutes.

Having Problems Configuring Your Development Environment?

All that said, are you:

- Short on time?

- Learning on your employer’s administratively locked system?

- Wanting to skip the hassle of fighting with the command line, package managers, and virtual environments?

- Ready to run the code right now on your Windows, macOS, or Linux system?

Then join PyImageSearch University today!

Gain access to Jupyter Notebooks for this tutorial and other PyImageSearch guides that are pre-configured to run on Google Colab’s ecosystem right in your web browser! No installation required.

And best of all, these Jupyter Notebooks will run on Windows, macOS, and Linux!

Project Structure

We first need to review our project directory structure.

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

The directory structure for our MCS OCR API is similar to the structure of the Amazon Rekognition API project in the previous tutorial:

|-- config | |-- __init__.py │ |-- microsoft_cognitive_services.py |-- images | |-- aircraft.png | |-- challenging.png | |-- park.png | |-- street_signs.png |-- microsoft_ocr.py

Inside the config, we have our microsoft_cognitive_services.py file, which stores our subscription key and endpoint URL (i.e., the URL of the API we’re submitting our images to).

The microsoft_ocr.py script will take our subscription key and endpoint URL, connect to the API, submit the images in our images directory for OCR, and display our screen results.

Creating Our Configuration File

Ensure you have followed Obtaining Your Microsoft Cognitive Services Keys to obtain your subscription keys to the MCS API. From there, open the microsoft_cognitive_services.py file and update your SUBSCRPTION_KEY:

# define our Microsoft Cognitive Services subscription key SUBSCRIPTION_KEY = "YOUR_SUBSCRIPTION_KEY" # define the ACS endpoint ENDPOINT_URL = "YOUR_ENDPOINT_URL"

You should replace the string "YOUR_SUBSCRPTION_KEY" with your subscription key obtained from Obtaining Your Microsoft Cognitive Services Keys.

Additionally, ensure you double-check your ENDPOINT_URL. At the time of this writing, the endpoint URL points to the most recent version of the MCS API; however, as Microsoft releases new API versions, this endpoint URL may change, so it’s worth double-checking.

Implementing the Microsoft Cognitive Services OCR Script

Now, let’s learn how to submit images for text detection and OCR to the MCS API.

Open the microsoft_ocr.py script in the project directory structure and insert the following code:

# import the necessary packages from config import microsoft_cognitive_services as config from azure.cognitiveservices.vision.computervision import ComputerVisionClient from azure.cognitiveservices.vision.computervision.models import OperationStatusCodes from msrest.authentication import CognitiveServicesCredentials import argparse import time import sys import cv2

Note on Line 2 that we import our microsoft_cognitive_services configuration to supply our subscription key and endpoint URL. Then, we’ll use the azure and msrest Python packages to send requests to the API.

Next, let’s define draw_ocr_results, a helper function used to annotate our output images with the OCR’d text:

def draw_ocr_results(image, text, pts, color=(0, 255, 0)): # unpack the points list topLeft = pts[0] topRight = pts[1] bottomRight = pts[2] bottomLeft = pts[3] # draw the bounding box of the detected text cv2.line(image, topLeft, topRight, color, 2) cv2.line(image, topRight, bottomRight, color, 2) cv2.line(image, bottomRight, bottomLeft, color, 2) cv2.line(image, bottomLeft, topLeft, color, 2) # draw the text itself cv2.putText(image, text, (topLeft[0], topLeft[1] - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.8, color, 2) # return the output image return image

Our draw_ocr_results function has four parameters:

image: The input image that we will draw on.text: The OCR’d text.pts: The top-left, top-right, bottom-right, and bottom-left (x, y)-coordinates of the text ROIcolor: The BGR color we’re using to draw on theimage

Lines 13-16 unpack our bounding box coordinates. From there, Lines 19-22 draw the bounding box surrounding the text in the image. We then draw the OCR’d text itself on Lines 25 and 26.

We wrap up this function by returning the output image to the calling function.

We can now parse our command line arguments:

# construct the argument parser and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", required=True,

help="path to input image that we'll submit to Microsoft OCR")

args = vars(ap.parse_args())

# load the input image from disk, both in a byte array and OpenCV

# format

imageData = open(args["image"], "rb").read()

image = cv2.imread(args["image"])

We only need a single argument here, --image, which is the path to the input image on disk. We read this image from disk, both as a binary byte array (so we can submit it to the MCS API, and then again in OpenCV/NumPy format (so we can draw on/annotate it).

Let’s now construct a request to the MCS API:

# initialize the client with endpoint URL and subscription key

client = ComputerVisionClient(config.ENDPOINT_URL,

CognitiveServicesCredentials(config.SUBSCRIPTION_KEY))

# call the API with the image and get the raw data, grab the operation

# location from the response, and grab the operation ID from the

# operation location

response = client.read_in_stream(imageData, raw=True)

operationLocation = response.headers["Operation-Location"]

operationID = operationLocation.split("/")[-1]

Lines 43 and 44 initialize the Azure Computer Vision client. Note that we are supplying our ENDPOINT_URL and SUBSCRIPTION_KEY here — now is a good time to go back to microsoft_cognitive_services.py and ensure you have correctly inserted your subscription key (otherwise, the request to the MCS API will fail).

We then submit the image for OCR to the MCS API on Lines 49-51.

We now have to wait and poll for results from the MCS API:

# continue to poll the Cognitive Services API for a response until

# we get a response

while True:

# get the result

results = client.get_read_result(operationID)

# check if the status is not "not started" or "running", if so,

# stop the polling operation

if results.status.lower() not in ["notstarted", "running"]:

break

# sleep for a bit before we make another request to the API

time.sleep(10)

# check to see if the request succeeded

if results.status == OperationStatusCodes.succeeded:

print("[INFO] Microsoft Cognitive Services API request succeeded...")

# if the request failed, show an error message and exit

else:

print("[INFO] Microsoft Cognitive Services API request failed")

print("[INFO] Attempting to gracefully exit")

sys.exit(0)

I’ll be honest — polling for results is not my favorite way to work with an API. It requires more code, it’s a bit more tedious, and it can be potentially error-prone if the programmer isn’t careful to break out of the loop properly.

Of course, there are pros to this approach, including maintaining a connection, submitting larger chunks of data, and having results returned in batches rather than all at once.

Regardless, this is how Microsoft has implemented its API, so we must play by their rules.

Line 55 starts a while loop that continuously checks for responses from the MCS API (Line 57).

If we do not find the status in the ["notstarted", "running"]break from the loop and process our results (Lines 61 and 62).

If the above condition is not met, we sleep for a small amount of time and then poll again (Line 65).

Line 68 checks if the request has succeeded, if it has then we can safely continue to process our results. Otherwise, if the request hasn’t succeeded, then we have no OCR results to show (since the image could not be processed), and then we exit gracefully from our script (Lines 72-75).

Provided our OCR request succeeded, let’s now process the results:

# make a copy of the input image for final output

final = image.copy()

# loop over the results

for result in results.analyze_result.read_results:

# loop over the lines

for line in result.lines:

# extract the OCR'd line from Microsoft's API and unpack the

# bounding box coordinates

text = line.text

box = list(map(int, line.bounding_box))

(tlX, tlY, trX, trY, brX, brY, blX, blY) = box

pts = ((tlX, tlY), (trX, trY), (brX, brY), (blX, blY))

# draw the output OCR line-by-line

output = image.copy()

output = draw_ocr_results(output, text, pts)

final = draw_ocr_results(final, text, pts)

# show the output OCR'd line

print(text)

cv2.imshow("Output", output)

# show the final output image

cv2.imshow("Final Output", final)

cv2.waitKey(0)

Line 78 initializes our final output image with all text drawn.

We start looping through all lines of OCR’d text on Line 81. On Line 83, we start looping through all lines of the OCR’d text. We extract the OCR’d text and bounding box coordinates for each line, then construct a list of the top-left, top-right, bottom-right, and bottom-left corners, respectively (Lines 86-89).

We then draw the OCR’d text line-by-line on the output and final image (Lines 92-94). We display the current line of text on our screen and terminal (Lines 97 and 98) — the final output image, with all OCR’d text drawn on it, is displayed on Lines 101 and 102.

Microsoft Cognitive Services OCR Results

Let’s now put the MCS OCR API to work for us. Open a terminal and execute the following command:

$ python microsoft_ocr.py --image images/aircraft.png [INFO] making request to Microsoft Cognitive Services API... WARNING! LOW FLYING AND DEPARTING AIRCRAFT BLAST CAN CAUSE PHYSICAL INJURY

Figure 2 shows the output of applying the MCS OCR API to our aircraft warning sign. If you recall, this is the same image we used in a previous tutorial when applying the Amazon Rekognition API. Therefore, I included the same image in this tutorial to demonstrate that the MCS OCR API can correctly OCR this image.

Let’s try a different image, this one containing several challenging pieces of text:

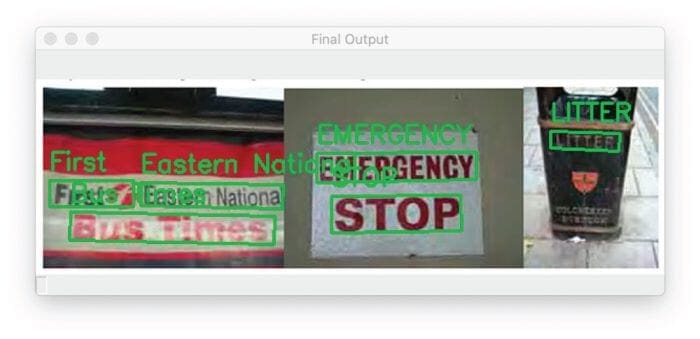

$ python microsoft_ocr.py --image images/challenging.png [INFO] making request to Microsoft Cognitive Services API... LITTER EMERGENCY First Eastern National Bus Times STOP

Figure 3 shows the results of applying the MCS OCR API to our input image — and as we can see, MCS does a great job OCR’ing the image.

On the left, we have a sample image from the First Eastern National bus timetable (i.e., the schedule of when a bus will arrive). The document is printed with a glossy finish (likely to prevent water damage). Still, the image has a significant reflection due to the gloss, particularly in the “Bus Times” text. Still, the MCS OCR API can correctly OCR the image.

In the middle, the “Emergency Stop” text is highly pixelated and low-quality, but that doesn’t phase the MCS OCR API! It’s able to correctly OCR the image.

Finally, the right shows a trash can with the text “Litter.” The text is tiny, and due to the low-quality image, it is challenging to read without squinting a bit. That said, the MCS OCR API can still OCR the text (although the text at the bottom of the trash can is illegible — neither human nor API could read that text).

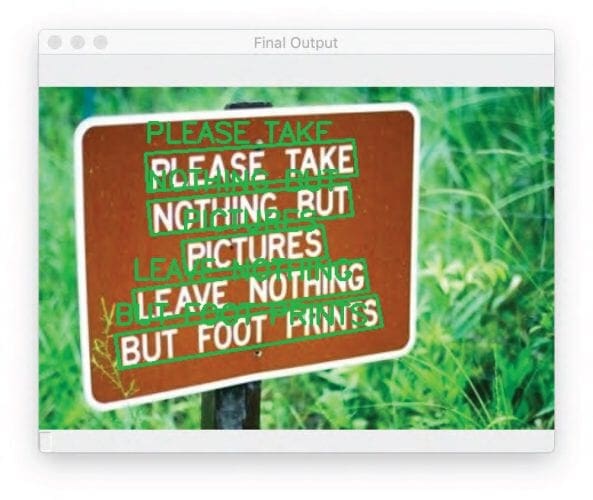

The next sample image contains a national park sign shown in Figure 4:

The MCS OCR API can OCR each sign line-by-line (Figure 4). We can also compute rotated text bounding box/polygons for each line.

$ python microsoft_ocr.py --image images/park.png [INFO] making request to Microsoft Cognitive Services API... PLEASE TAKE NOTHING BUT PICTURES LEAVE NOTHING BUT FOOT PRINTS

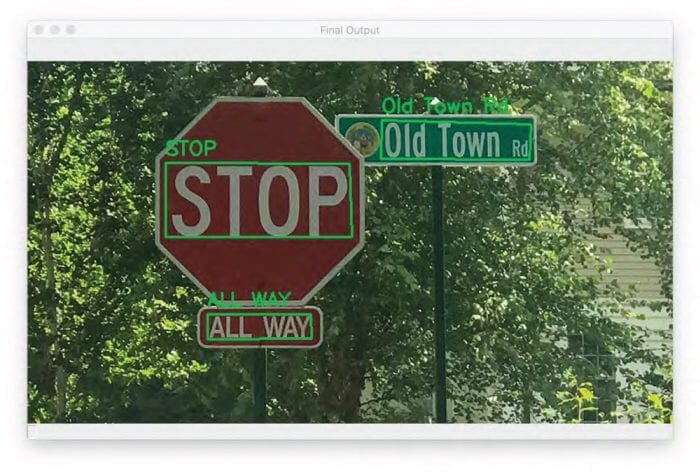

The final example we have contains traffic signs:

$ python microsoft_ocr.py --image images/street_signs.png [INFO] making request to Microsoft Cognitive Services API... Old Town Rd STOP ALL WAY

Figure 5 shows that we can correctly OCR each piece of text on both the stop sign and street name sign.

What's next? We recommend PyImageSearch University.

86+ total classes • 115+ hours hours of on-demand code walkthrough videos • Last updated: January 2026

★★★★★ 4.84 (128 Ratings) • 16,000+ Students Enrolled

I strongly believe that if you had the right teacher you could master computer vision and deep learning.

Do you think learning computer vision and deep learning has to be time-consuming, overwhelming, and complicated? Or has to involve complex mathematics and equations? Or requires a degree in computer science?

That’s not the case.

All you need to master computer vision and deep learning is for someone to explain things to you in simple, intuitive terms. And that’s exactly what I do. My mission is to change education and how complex Artificial Intelligence topics are taught.

If you're serious about learning computer vision, your next stop should be PyImageSearch University, the most comprehensive computer vision, deep learning, and OpenCV course online today. Here you’ll learn how to successfully and confidently apply computer vision to your work, research, and projects. Join me in computer vision mastery.

Inside PyImageSearch University you'll find:

- ✓ 86+ courses on essential computer vision, deep learning, and OpenCV topics

- ✓ 86 Certificates of Completion

- ✓ 115+ hours hours of on-demand video

- ✓ Brand new courses released regularly, ensuring you can keep up with state-of-the-art techniques

- ✓ Pre-configured Jupyter Notebooks in Google Colab

- ✓ Run all code examples in your web browser — works on Windows, macOS, and Linux (no dev environment configuration required!)

- ✓ Access to centralized code repos for all 540+ tutorials on PyImageSearch

- ✓ Easy one-click downloads for code, datasets, pre-trained models, etc.

- ✓ Access on mobile, laptop, desktop, etc.

Summary

In this tutorial, you learned about Microsoft Cognitive Services (MCS) OCR API. Despite being slightly harder to implement and use than the Amazon Rekognition API, the Microsoft Cognitive Services OCR API demonstrated that it’s quite robust and able to OCR text in many situations, including low-quality images.

When working with low-quality images, the MCS API shined. Typically, I recommend you programmatically detect and discard low-quality images (as we did in a previous tutorial). However, if you find yourself in a situation where you have to work with low-quality images, it may be worth your while to use the Microsoft Azure Cognitive Services OCR API.

Citation Information

Rosebrock, A. “Text Detection and OCR with Microsoft Cognitive Services,” PyImageSearch, D. Chakraborty, P. Chugh, A. R. Gosthipaty, S. Huot, K. Kidriavsteva, R. Raha, and A. Thanki, eds., 2022, https://pyimg.co/0r4mt

@incollection{Rosebrock_2022_OCR_MCS,

author = {Adrian Rosebrock},

title = {Text Detection and {OCR} with {M}icrosoft Cognitive Services},

booktitle = {PyImageSearch},

editor = {Devjyoti Chakraborty and Puneet Chugh and Aritra Roy Gosthipaty and Susan Huot and Kseniia Kidriavsteva and Ritwik Raha and Abhishek Thanki},

year = {2022},

note = {https://pyimg.co/0r4mt},

}

Unleash the potential of computer vision with Roboflow - Free!

- Step into the realm of the future by signing up or logging into your Roboflow account. Unlock a wealth of innovative dataset libraries and revolutionize your computer vision operations.

- Jumpstart your journey by choosing from our broad array of datasets, or benefit from PyimageSearch’s comprehensive library, crafted to cater to a wide range of requirements.

- Transfer your data to Roboflow in any of the 40+ compatible formats. Leverage cutting-edge model architectures for training, and deploy seamlessly across diverse platforms, including API, NVIDIA, browser, iOS, and beyond. Integrate our platform effortlessly with your applications or your favorite third-party tools.

- Equip yourself with the ability to train a potent computer vision model in a mere afternoon. With a few images, you can import data from any source via API, annotate images using our superior cloud-hosted tool, kickstart model training with a single click, and deploy the model via a hosted API endpoint. Tailor your process by opting for a code-centric approach, leveraging our intuitive, cloud-based UI, or combining both to fit your unique needs.

- Embark on your journey today with absolutely no credit card required. Step into the future with Roboflow.

To download the source code to this post (and be notified when future tutorials are published here on PyImageSearch), simply enter your email address in the form below!

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!

Comment section

Hey, Adrian Rosebrock here, author and creator of PyImageSearch. While I love hearing from readers, a couple years ago I made the tough decision to no longer offer 1:1 help over blog post comments.

At the time I was receiving 200+ emails per day and another 100+ blog post comments. I simply did not have the time to moderate and respond to them all, and the sheer volume of requests was taking a toll on me.

Instead, my goal is to do the most good for the computer vision, deep learning, and OpenCV community at large by focusing my time on authoring high-quality blog posts, tutorials, and books/courses.

If you need help learning computer vision and deep learning, I suggest you refer to my full catalog of books and courses — they have helped tens of thousands of developers, students, and researchers just like yourself learn Computer Vision, Deep Learning, and OpenCV.

Click here to browse my full catalog.