Computer Vision and Deep Learning for Government

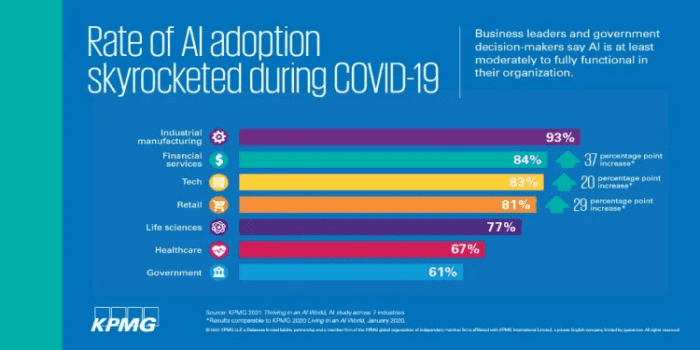

Due to its ability to learn and predict from observed data or behavior, deep learning has revolutionized big industries and businesses (Figure 1). According to Yann LeCun, the impact of deep learning in the industry began in the early 2000s. By then, convolutional neural networks (CNNs) had already processed an estimated 10% to 20% of all the checks written in the United States. Advancement in hardware (e.g., GPUs, TPUs, Cloud compute) has further accelerated this interest in using deep learning for industry applications.

The government sector is arguably the largest of these, since its services and policies affect the entire public. Government agencies therefore invest heavily in artificial intelligence (AI) to improve decision-making. Deep learning helps governments operate more efficiently, improving outcomes while keeping costs down. Applications include identifying tax-evasion patterns, tracking the spread of infectious diseases, and improving surveillance and security.

This series will teach you computer vision (CV) and deep learning (DL) for Industrial and Big Business Applications. This post covers the benefits, applications, challenges, and tradeoffs of using deep learning in the government sector.

This lesson is the 1st in the 5-lesson course: CV and DL for Industrial and Big Business Applications 101.

- Computer Vision and Deep Learning for Government (this tutorial)

- Computer Vision and Deep Learning for Customer Service

- Computer Vision and Deep Learning for Banking and Finance

- Computer Vision and Deep Learning for Agriculture

- Computer Vision and Deep Learning for Electricity

To learn about Computer Vision and Deep Learning for Government, just keep reading.

Computer Vision and Deep Learning for Government

Benefits

The benefits of using deep learning in the public sector are three-fold:

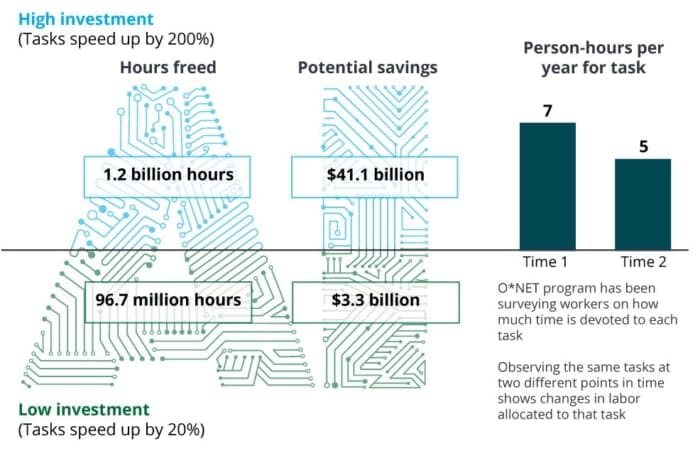

- Operational efficiency: Deloitte (Figure 2) has estimated that automation could save U.S. Government employees between 96.7 million and 1.2 billion hours a year, for potential savings of between $3.3 billion and $41.1 billion a year. While this could lead the government to reduce its headcount, employees can instead be redeployed into more rewarding work that requires lateral thinking, empathy, and creativity.

- Improved services: Because AI systems can personalize content, they can help the government deliver more personalized services in areas like education.

- Better decision-making: Because the government collects large amounts of data every day, it can use deep learning to analyze that data, improve its services, make better decisions and policies, and cut costs.

Applications

Federal Cyber Defense

The government sector is one of the most targeted industries for cyber attacks. According to a report, it has been identified among the five most-attacked industries over the past five years, alongside the healthcare, manufacturing, financial services, and transportation sectors. A leak of confidential data and blueprints to hackers can jeopardize an entire nation’s security. Figure 3 displays a cyber incident report for different countries.

With attacks becoming more sophisticated every day, governments need technology that can defend against them. AI therefore has considerable scope for building defenses that keep out data and network attacks.

AI can map events and use data models to identify patterns over time and classify old and new cyber attacks without requiring any updates. It can monitor network points, emails, user behavior, and other factors to prevent cyber threats like phishing, social engineering, unauthorized access, IT breaches, etc.
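As a rough illustration of identifying patterns over time, the sketch below flags unusual activity with a simple z-score baseline. This is a toy statistical stand-in, not a deep learning model and not any vendor's method; the function name `flag_anomalies`, the login counts, and the threshold of 3 standard deviations are all assumptions made for illustration.

```python
from statistics import mean, stdev

def flag_anomalies(history, recent, threshold=3.0):
    """Return indices in `recent` that deviate strongly from `history`.

    history: baseline observations (hypothetical hourly failed-login counts)
    recent: new observations to score against the baseline
    threshold: number of standard deviations treated as anomalous (assumed)
    """
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # Flat baseline: anything different from the baseline value is flagged.
        return [i for i, x in enumerate(recent) if x != mu]
    return [i for i, x in enumerate(recent) if abs(x - mu) / sigma > threshold]

# Hypothetical hourly failed-login counts for one account.
baseline = [4, 5, 3, 6, 5, 4, 5, 6, 4, 5]
incoming = [5, 4, 48, 6]
suspicious = flag_anomalies(baseline, incoming)
print(suspicious)  # only the spike of 48 is flagged
```

A production system would learn a much richer notion of "normal" across many signals, but the structure is the same: model past behavior, then score new events against it.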

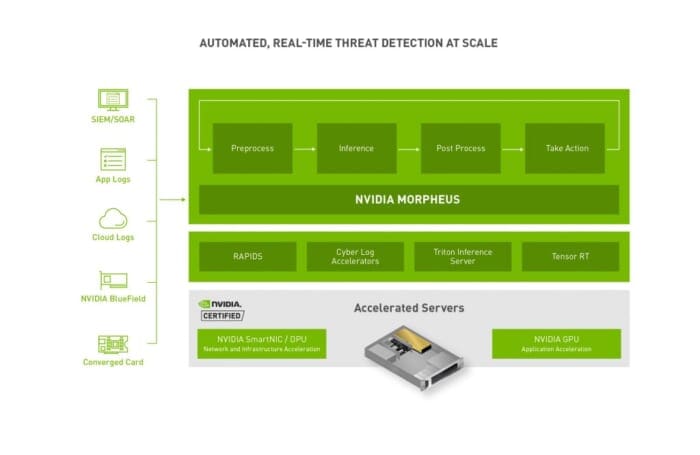

Recently, NVIDIA and Booz Allen Hamilton teamed up to build machine learning and deep learning solutions that detect cyber threats faster and more efficiently. Their approach first reads network data from sensor technologies, processes it, and uses AI to analyze the data in real time.

It augments established cyber defenses with AI to proactively detect adversarial attacks at the edge or in the data center using real-time network data. This enables enterprises to keep up with advancing adversaries, reduce false positives in AI-only defense tools, and create greater efficiencies without completely overhauling defense systems.

NVIDIA Morpheus (Figure 4) is a machine learning framework that identifies, captures, and takes action on cyber threats and anomalies that are new or previously difficult to identify. These include leaks of unencrypted data, phishing attacks, and malware. It analyzes security data without compromising cost or performance by applying real-time telemetry, policy enforcement, and edge processing.

Traffic Congestion

Traffic congestion is a serious problem in many urban areas and is difficult to predict. Last year, motorists in the UK lost 178 hours to road congestion, costing £1,317 ($1,608.1) per person and setting back the country’s economy by almost £8 billion overall.

An AI-powered system can help reduce congestion considerably. Because it accounts for details such as roads, peak hours, and commuter convenience, it can help commuters plan their journeys around live system updates (Figure 5).

The system can

- analyze traffic patterns in different lanes and regions

- assess critical information about road conditions and accidents

- identify the least-efficient vehicles, track their paths, and change signals ahead of them

These capabilities can help drivers significantly reduce their commuting time, plan their journeys ahead to avoid congestion, and minimize fuel consumption.

Siemens Mobility recently built an AI-based monitoring system that processes video feeds from traffic cameras. It automatically detects traffic anomalies and alerts traffic management authorities. The system effectively estimates road traffic density to modulate the traffic signals accordingly for smoother movement.
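One simple way to picture modulating signals by traffic density is to split a fixed signal cycle across approaches in proportion to their estimated density. The sketch below is an illustrative assumption, not Siemens Mobility's algorithm; `allocate_green_time`, the 120-second cycle, the 10-second minimum, and the density values are all hypothetical.

```python
def allocate_green_time(densities, cycle_seconds=120, min_green=10):
    """Split a signal cycle across approaches in proportion to traffic density.

    densities: mapping of approach name -> estimated density (any consistent unit)
    cycle_seconds: total green time to distribute (assumed value)
    min_green: floor so that no approach is starved (assumed value)
    """
    if min_green * len(densities) > cycle_seconds:
        raise ValueError("cycle too short for the requested minimum green time")

    # Reserve the minimum for every approach, then share the rest by density.
    spare = cycle_seconds - min_green * len(densities)
    total = sum(densities.values())
    plan = {}
    for approach, density in densities.items():
        share = density / total if total > 0 else 1 / len(densities)
        plan[approach] = min_green + spare * share
    return plan

# Hypothetical densities estimated from camera feeds at one intersection.
plan = allocate_green_time({"north": 30, "south": 10, "east": 40, "west": 20})
for approach, seconds in plan.items():
    print(f"{approach}: {seconds:.0f}s green")
```

The busiest approach receives the longest green phase, while the minimum keeps lightly used approaches from waiting indefinitely.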

A new machine-learning algorithm, TranSEC, has been designed to address urban traffic congestion. The tool uses traffic data collected via Uber drivers and other publicly available sensors to map street-level traffic flow over time. It uses the computing resources at a national laboratory to create a big picture of city traffic using machine learning tools (Figure 6).

Identification of Criminals

With facial recognition systems getting better each day, governments can leverage the data in closed-circuit televisions (CCTVs) and other sources to identify on-the-run criminals in public places throughout a region where the suspect is expected to be.

Because every person has distinctive facial features, an AI system can compare any detected face with a query image and return a similarity score. To track a suspect, the police first feed an image of that person into the AI-powered surveillance system. The system then compares every face its cameras detect against the query image and returns possible matches.
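The matching step can be sketched as a similarity search over face embeddings. The example below assumes that some face encoder (not shown) has already turned each face into a numeric vector; cosine similarity, the 0.8 cutoff, the camera IDs, and the tiny 4-dimensional vectors are illustrative assumptions rather than details of any deployed system.

```python
import math

def cosine_similarity(a, b):
    """Cosine similarity between two equal-length embedding vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def rank_matches(query, gallery, min_score=0.8):
    """Return (face_id, score) pairs scoring at least min_score, best first."""
    scored = [(face_id, cosine_similarity(query, emb))
              for face_id, emb in gallery.items()]
    hits = [(face_id, score) for face_id, score in scored if score >= min_score]
    return sorted(hits, key=lambda pair: pair[1], reverse=True)

# Hypothetical 4-dimensional embeddings; real encoders emit far longer vectors.
query_embedding = [0.9, 0.1, 0.3, 0.2]
detected_faces = {
    "camera_12_face_a": [0.88, 0.12, 0.31, 0.18],
    "camera_07_face_b": [0.1, 0.9, 0.2, 0.4],
    "camera_03_face_c": [0.7, 0.3, 0.4, 0.1],
}
matches = rank_matches(query_embedding, detected_faces)
for face_id, score in matches:
    print(f"{face_id}: {score:.3f}")
```

The cutoff controls the tradeoff between missed matches and false alarms, which is why possible matches are typically reviewed by a person rather than acted on automatically.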

The India-based startup, Artificial Intelligence Based Human Efface Detection (ABHED), has developed a proprietary artificial intelligence technology stack that includes advanced image analysis, a language- and text-independent speaker identification engine, facial recognition, and text-processing APIs for named entity recognition and summarization. The application can integrate with the first information report (FIR) database and biometric information, including voice, fingerprints, and facial images. The algorithms can perform a deep facial analysis within milliseconds with almost 95% accuracy.

The company has also developed SmartGlass (Figure 7), a pair of glasses with a built-in camera. The glasses use the startup’s facial recognition technology to identify individuals in a crowd. As law enforcement officers look at the people around them, faces are recognized using the information stored in the database, and the results are displayed on the glasses in real time.

DNA Analysis for Criminal Justice

Another potential application area in criminal justice is DNA analysis. DNA analysis produces large amounts of complex data in an electronic format, some of which may be beyond human analysis.

Researchers at Syracuse University partnered with the Onondaga County Center for Forensic Sciences and the New York City Office of Chief Medical Examiner’s Department of Forensic Biology to investigate a novel machine learning-based method of mixture deconvolution. The team used data mining and AI algorithms to separate and identify individual DNA profiles to minimize the potential weaknesses inherent in using one approach in isolation.

Tracking Disease Spreads

COVID has taught us that infectious diseases pose an enormous threat to global population health. It is important to confront such threats before they turn into pandemics. Because AI can tackle problems that are beyond human capacity, it offers huge potential for public health practitioners and policymakers to revolutionize healthcare and population health.

The following are some ways the government can leverage AI to prevent the spread of infectious diseases and take proactive measures:

- Building an AI algorithm that identifies patients with similar symptoms across different locations and raises a warning before things turn serious (e.g., BlueDot, Figure 8).

- Building a graph and using machine learning algorithms like graph neural networks to identify contacts with a known virus carrier.

- Building a system that collects data about known viruses, animal populations, human demographics, and social practices to predict hotspots where a new disease could emerge. This can be used to take proactive steps and prevent the outbreak.
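The contact-graph idea in the list above can be sketched with plain graph traversal. Note that this is a breadth-first search, not a graph neural network: it only finds who is within a given number of contact hops of a known carrier, which is the kind of structure a learned model could then score. The names, the contact records, and the two-hop limit are hypothetical.

```python
from collections import deque

def trace_contacts(contact_graph, carrier, max_hops=2):
    """Breadth-first search returning {person: hops_from_carrier}.

    contact_graph: mapping of person -> list of people they were in contact with
    carrier: the known virus carrier to start from
    max_hops: how many contact hops to follow (assumed value)
    """
    hops = {carrier: 0}
    queue = deque([carrier])
    while queue:
        person = queue.popleft()
        if hops[person] == max_hops:
            continue  # do not expand beyond the hop limit
        for contact in contact_graph.get(person, ()):
            if contact not in hops:
                hops[contact] = hops[person] + 1
                queue.append(contact)
    del hops[carrier]  # report only the contacts, not the carrier
    return hops

# Hypothetical contact records.
contacts = {
    "ana": ["ben", "cy"],
    "ben": ["ana", "dee"],
    "cy": ["ana"],
    "dee": ["ben", "eli"],
    "eli": ["dee"],
}
exposed = trace_contacts(contacts, "ana")
print(exposed)
```

Direct contacts come back at one hop and their contacts at two, so follow-up can be prioritized by distance from the carrier.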

Besides that, the government can extract meaningful information, such as geopolitical events and users’ reactions, from the Twitter stream to track and forecast public behavior during disease epidemics.

Public Relations

By using deep learning algorithms, the government can better interact with the public; understand their queries, requirements, and complaints; get feedback; and proactively address them. The power of deep learning can be used to analyze Twitter, Facebook, and LinkedIn posts and identify ones related to a complaint, feedback, or query.

Chatbots can be widely used in several government activities. They are used to schedule meetings, answer public queries, direct requests to the appropriate areas within the government, assist in filling out forms, help the recruitment team, etc.

Improvements in speech-to-text translation systems using machine learning and deep learning can remove the communication barriers between citizens and government officials by providing real-time translation in public service settings.

Challenges and Tradeoffs

Unemployment

Rising unemployment is a potential tradeoff of adopting artificial intelligence. Governments should be concerned about an increase in unemployment as the use of deep learning systems grows. To mitigate this risk, the government should redeploy its employees into more subjective, high-value, creative tasks or help them move to the private sector.

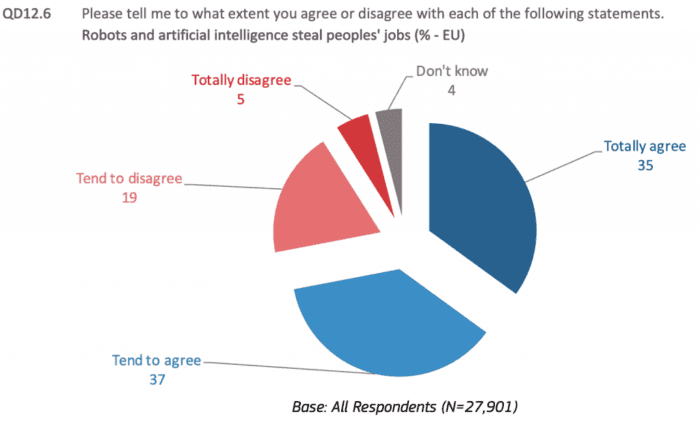

According to the European Commission’s Eurobarometer survey that presents European citizens’ thoughts on the influence of digitalization and automation on daily life (Figure 9):

- 74% agree that due to the use of robots and artificial intelligence, more jobs will disappear than new jobs will be created.

- 72% agree that robots and artificial intelligence steal people’s jobs.

- 44% of currently working respondents think their current job could at least partly be done by a robot or artificial intelligence.

Fairness

A deep learning model is only as good as its data, and because people create that data, human biases can carry over into the model. Biases can also arise from distortions in the algorithm or from how humans interpret the data.

Ensuring bias-free decisions in deep learning systems deployed for government tasks is especially important when protected classes of individuals are involved and disparate impacts on those legally protected classes must be avoided. A critical step in establishing fairness in AI systems is to evaluate the model on fairness metrics.

Probably the best way to ensure fairness is to enforce predictive equality. Predictive equality means that the algorithm's decisions are equally good or equally bad across specific subgroups. That is, the rate of false positives or false negatives is the same for each group.
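A check of this kind can be sketched by computing error rates separately for each subgroup. The records below are made up for illustration, and `error_rates_by_group` is a hypothetical helper, not part of any fairness library.

```python
def error_rates_by_group(records):
    """Compute false positive and false negative rates per group.

    records: iterable of (group, actual, predicted) tuples with 0/1 labels.
    """
    counts = {}
    for group, actual, predicted in records:
        c = counts.setdefault(group, {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
        if actual == 1:
            c["pos"] += 1
            if predicted == 0:
                c["fn"] += 1  # missed a true positive
        else:
            c["neg"] += 1
            if predicted == 1:
                c["fp"] += 1  # flagged a true negative
    rates = {}
    for group, c in counts.items():
        rates[group] = {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
    return rates

# Hypothetical evaluation records: (group, actual label, predicted label).
records = [
    ("A", 0, 0), ("A", 0, 0), ("A", 0, 0), ("A", 0, 1),
    ("A", 1, 1), ("A", 1, 1), ("A", 1, 1), ("A", 1, 0),
    ("B", 0, 0), ("B", 0, 0), ("B", 0, 1), ("B", 0, 1),
    ("B", 1, 1), ("B", 1, 1), ("B", 1, 1), ("B", 1, 0),
]
rates = error_rates_by_group(records)
print(rates)
```

In this toy data, group B's false positive rate is twice group A's, so the model would fail a predictive-equality check even though the false negative rates match.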

The following are some ways to measure and address the fairness issue in deep learning systems.

- Willful blindness is an approach where you deliberately mask the variables in the data that represent or correlate with race, gender, caste, and other socioeconomic variables. The result is an algorithm that is merely unaware of these variables rather than one that actively accounts for fairness.

However, this kind of fairness comes at the cost of reduced performance, as masking variables reduces the quality of the data used to train deep learning models. In other words, a model might be accurate for the larger population but not for subsets of the population with less data.

- Demographic and statistical parity is another way of ensuring fairness in AI algorithms, where you select an equal number of data points from the minority and majority subsets of the data. This ensures that each group carries the same weight during the training process. A similar approach is to set different thresholds for different subsets to ensure parity in the outcomes for each group.
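The per-group threshold idea can be sketched as follows: choose each group's cutoff so that roughly the same fraction of that group is selected. The scores, group names, and 50% target rate are hypothetical, and `threshold_for_rate` is an illustrative helper rather than an established API.

```python
def threshold_for_rate(scores, target_rate):
    """Pick the score cutoff that selects roughly `target_rate` of a group."""
    ranked = sorted(scores, reverse=True)
    k = round(target_rate * len(ranked))
    if k <= 0:
        return float("inf")  # select nobody
    return ranked[k - 1]     # scores >= this cutoff are selected

def selection_rate(scores, threshold):
    """Fraction of scores at or above the threshold."""
    return sum(s >= threshold for s in scores) / len(scores)

# Hypothetical model scores for two subsets of the population.
scores_by_group = {
    "majority": [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2],
    "minority": [0.6, 0.5, 0.35, 0.2],
}

# A single shared cutoff selects the two groups at different rates.
shared = {g: selection_rate(s, 0.6) for g, s in scores_by_group.items()}

# Per-group cutoffs chosen to hit the same selection rate in each group.
thresholds = {g: threshold_for_rate(s, 0.5) for g, s in scores_by_group.items()}
adjusted = {g: selection_rate(scores_by_group[g], t) for g, t in thresholds.items()}
print(shared, thresholds, adjusted)
```

With tied scores the achieved rate can exceed the target slightly, and equalizing selection rates does not by itself equalize error rates, so this is one tool among several rather than a complete fix.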

Explainability

AI systems that can explain their decisions encourage adoption, since stakeholders can understand how and why decisions are reached.

Like fairness, explainability can also lead to performance tradeoffs. Simpler architectures and algorithms are more explainable than complex ones, but complex architectures might be more accurate and less biased. Figure 10 illustrates the concept of explainable AI.

Stability

Over time, the performance of AI systems can degrade because they were developed with data collected years earlier that no longer reflects current conditions. For example, a system built to track and identify the spread of diseases in the pre-COVID era might not be relevant now. Similarly, an AI-equipped cyber defense system might become less effective after some years as cyber attacks evolve.

To mitigate this issue, the systems should be updated regularly with recently collected data points. Incremental algorithms can make it easier for the system to adapt to new data without forgetting what it learned from older data.

To estimate the speed at which an algorithm degrades, one can test its performance on backward-looking data from different periods. If the model performs well on test data from a year ago but not on data from two years ago, then retraining the model at an interval somewhere between one and two years will likely help avoid degradation.
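The backward-looking test can be sketched by grouping evaluation results by the age of the data and finding where accuracy first drops below an acceptable level. The evaluation results, the 0.85 accuracy floor, and both helper names are hypothetical.

```python
def accuracy_by_age(evaluations):
    """Average accuracy per data age.

    evaluations: iterable of (age_in_months, correct) pairs, where `correct`
    is True when the model's prediction matched the label.
    """
    totals = {}
    for age, correct in evaluations:
        hit, seen = totals.get(age, (0, 0))
        totals[age] = (hit + int(correct), seen + 1)
    return {age: hit / seen for age, (hit, seen) in sorted(totals.items())}

def retrain_window(accuracy, min_accuracy):
    """Return (last acceptable age, first unacceptable age) in months."""
    last_ok = None
    for age, acc in sorted(accuracy.items()):
        if acc < min_accuracy:
            return last_ok, age
        last_ok = age
    return last_ok, None  # never dropped below the floor

# Hypothetical results: 10 test samples from 12 months ago, 10 from 24 months ago.
evaluations = (
    [(12, True)] * 9 + [(12, False)] * 1
    + [(24, True)] * 7 + [(24, False)] * 3
)
accuracy = accuracy_by_age(evaluations)
window = retrain_window(accuracy, min_accuracy=0.85)
print(accuracy, window)
```

Here accuracy holds at 12 months but falls below the floor at 24 months, which suggests a retraining interval somewhere between the two. Adding intermediate age buckets would narrow that window.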

Adoption

Adoption is a difficult challenge for governments that want to use AI in their applications. Because the public-sector workforce is considerably older than the private-sector workforce, cultural change is harder to implement. The fear of unemployment makes adoption even more challenging.

Since the government is accountable to the public, the KPIs for evaluating the performance of the AI algorithm are more subjective, complex, and activity-oriented than in the private sector, which is mostly driven by profits. Government watchdogs, labor unions, and opposition parties are stakeholders whose views of AI will shape how the public perceives AI in government. This can make the transformation more difficult.

Summary

Governments are heavily investing in deep learning to create improved services, make better decisions for the public, and reduce their operational costs by significant amounts. These applications include

- Cyber defense, where AI can be used to map events and use data models to identify patterns over time and classify old and new cyber attacks without requiring any updates

- Traffic congestion, where deep learning algorithms can analyze traffic patterns, road conditions, and accidents to help drivers reduce their commuting time and plan ahead of the journey

- Identifying criminals by matching their facial features with the query image

- Tracking the spread of infectious diseases by cross-checking patients with similar symptoms or predicting hotspots where a new disease could emerge

- Public relations, where the government can better interact with the public, understand their queries, requirements, and complaints, get feedback, and proactively address them

However, using AI in public sectors has its challenges and tradeoffs:

- Rising unemployment as the use of deep learning systems grows.

- Ensuring that a system is not biased with respect to socioeconomic variables (e.g., gender, caste, race).

- Making systems explainable so that stakeholders can understand how and why decisions are reached.

- Performance degradation of AI systems over time.

- Difficulty implementing cultural change, made harder by the fear of unemployment.

I hope this post helped you understand the benefits, applications, challenges, and tradeoffs of using deep learning in the public sector. Stay tuned for another lesson where we will discuss deep learning and computer vision applications for customer service.

Citation Information

Mangla, P. “Computer Vision and Deep Learning for Government,” PyImageSearch, P. Chugh, R. Raha, K. Kudriavtseva, and S. Huot, eds., 2022, https://pyimg.co/gratl

@incollection{Mangla_2022_Government,

author = {Puneet Mangla},

title = {Computer Vision and Deep Learning for Government},

booktitle = {PyImageSearch},

editor = {Puneet Chugh and Ritwik Raha and Kseniia Kudriavtseva and Susan Huot},

year = {2022},

note = {https://pyimg.co/gratl},

}
