In this tutorial, you will learn to use image super resolution.

This lesson is part of a 3-part series on Super Resolution:

- OpenCV Super Resolution with Deep Learning

- Image Super Resolution (this tutorial)

- Pixel Shuffle Super Resolution with TensorFlow, Keras, and Deep Learning

To learn how to use image super resolution, just keep reading.

Image Super Resolution

Just as deep learning and Convolutional Neural Networks have completely changed the landscape of art generated via deep learning methods, the same is true for super-resolution algorithms. However, it’s worth noting that the super-resolution sub-field of computer vision has been studied with more rigor. Previous methods are primarily example-based and tend to either:

- Use internal similarities of an input image to build the super-resolution output (Cui, 2014; Freedman and Fattal, 2011; Glasner et al., 2009)

- Learn low-resolution to high-resolution patches (Chang et al., 2004; Freeman et al., 2000; Jia et al., 2013)

- Use some variant of sparse-coding (Yang et al., 2010)

In this tutorial, we will implement the work of Dong et al. (2016). This method demonstrates that previous sparse-coding methods are effectively equivalent to applying deep Convolutional Neural Networks — the primary difference is that the method we are implementing is faster, produces better results, and is entirely end-to-end.

While there have been many super-resolution papers since the work of Dong et al. in 2016 (including a wonderful paper by Johnson et al. (2016) on framing super resolution as style transfer), the work of Dong et al. forms a foundation on which many other Super Resolution Convolutional Neural Networks (SRCNNs) are built.

Understanding SRCNNs

SRCNNs have numerous important characteristics. The most significant attributes are listed below:

- SRCNNs are fully convolutional (not to be confused with fully connected). We can input any image size (provided the width and height will tile) and run the SRCNN on it. This makes SRCNNs very fast.

- We train for filters, not for accuracy. In other lessons, we have been primarily concerned with training our CNNs to achieve as high accuracy as possible on a given dataset. In this context, we’re concerned with the actual filters learned by the SRCNN, which will enable us to upscale an image — the actual accuracy obtained on the training dataset to learn these filters is inconsequential.

- They do not require solving an optimization on usage. After an SRCNN has learned a set of filters, it can apply a simple forward pass to obtain the output super resolution image. We do not have to optimize a loss function on a per-image basis to obtain the output.

- They are totally end-to-end. Again, SRCNNs are totally end-to-end: input an image to the network and obtain the higher resolution output. There are no intermediary steps. Once training is complete, we are ready to apply super resolution.
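To make the "any input size" point concrete, here is a minimal NumPy sketch of a single-channel "valid" convolution, i.e., one with no zero-padding. It is not the SRCNN itself, and the helper name `conv2d_valid` is hypothetical. Like Keras's Conv2D, it computes a cross-correlation. The same 9×9 kernel slides over inputs of any height and width; only the output size changes, shrinking by `k - 1` pixels per axis.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Single-channel 2D cross-correlation with no zero-padding ("valid")."""
    kh, kw = kernel.shape
    h, w = image.shape
    out = np.zeros((h - kh + 1, w - kw + 1), dtype=np.float64)
    # visit only the positions where the kernel fits entirely inside the image
    for y in range(out.shape[0]):
        for x in range(out.shape[1]):
            out[y, x] = np.sum(image[y:y + kh, x:x + kw] * kernel)
    return out

# one 9x9 kernel, applied unchanged to two differently sized inputs
kernel = np.ones((9, 9)) / 81.0
for shape in [(33, 33), (50, 70)]:
    result = conv2d_valid(np.random.rand(*shape), kernel)
    print(shape, "->", result.shape)
# (33, 33) -> (25, 25)
# (50, 70) -> (42, 62)
```

Because nothing in the computation depends on a fixed input size, a network built only from such layers accepts arbitrary spatial dimensions.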

As mentioned above, the goal of our SRCNN is to learn a set of filters that allow us to map low-resolution inputs to a higher-resolution output. Therefore, instead of actual full-resolution images, we are going to construct two sets of image patches:

- A low-resolution patch that will be the input to the network

- A high-resolution patch that will be the target for the network to predict/reconstruct

In this way, our SRCNN will learn how to reconstruct high-resolution patches from low-resolution input ones.

In practice, this means that we:

- Build a dataset of low- and high-resolution input patches

- Train a network to learn to map the low-resolution patches to their high-resolution counterparts

- Create a script that loops over the input patches of low-resolution images, passes them through the network, and then creates the output high-resolution image from the predicted patches.

As we’ll see later in this tutorial, building an SRCNN is arguably easier than some of the other classification challenges we have tackled in other lessons.

Implementing SRCNNs

First, we’ll review the directory structure for this project, including any configuration files needed. From there, we’ll review a Python script used to build both our low-resolution and high-resolution patch datasets. We’ll then implement our SRCNN architecture itself and train it. Finally, we’ll utilize our trained model to apply SRCNNs to input images.

Configuring Your Development Environment

To follow this guide, you need to have the OpenCV library installed on your system.

Luckily, OpenCV is pip-installable:

$ pip install opencv-contrib-python

If you need help configuring your development environment for OpenCV, we highly recommend that you read our pip install OpenCV guide — it will have you up and running in a matter of minutes.


Project Structure

We first need to review our project directory structure.

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

Starting the Project

Let’s start by reviewing the directory structure for this project. The first step is to create a new file named srcnn.py inside the conv sub-module of pyimagesearch — this is where our Super Resolution Convolutional Neural Network will live:

--- pyimagesearch
|    |--- __init__.py
...
|    |--- nn
|    |    |--- __init__.py
|    |    |--- conv
|    |    |    |--- __init__.py
|    |    |    |--- alexnet.py
|    |    |    |--- dcgan.py
...
|    |    |    |--- srcnn.py
...

From there, we have the following directory structure for the project itself:

--- super_resolution
|    |--- build_dataset.py
|    |--- conf
|    |    |--- sr_config.py
|    |--- output/
|    |--- resize.py
|    |--- train.py
|    |--- uk_bench/

The sr_config.py file stores any configurations we’ll need. We’ll then use build_dataset.py to create our low-resolution and high-resolution patches for training.

Remark: Dong et al. refer to patches as sub-images instead. This attempt at clarification is meant to avoid any ambiguity regarding patches (which may imply overlapping ROIs in some computer vision literature). I will use both terms interchangeably as I believe the context of the work will define if a patch overlaps or not — both terms are totally valid.

From there, we have the train.py script that will actually train our network. And finally, we’ll implement resize.py to accept a low-resolution input image and create the high-resolution output.

The output directory will store

- our HDF5 training set of images

- the output model itself

Finally, the uk_bench directory will contain the example images from which we learn patterns.

Let’s go ahead and take a look at the sr_config.py file now:

# import the necessary packages
import os

# define the path to the input images we will be using to build the
# training crops
INPUT_IMAGES = "ukbench100"

# define the path to the temporary output directories
BASE_OUTPUT = "output"
IMAGES = os.path.sep.join([BASE_OUTPUT, "images"])
LABELS = os.path.sep.join([BASE_OUTPUT, "labels"])

# define the path to the HDF5 files
INPUTS_DB = os.path.sep.join([BASE_OUTPUT, "inputs.hdf5"])
OUTPUTS_DB = os.path.sep.join([BASE_OUTPUT, "outputs.hdf5"])

# define the path to the output model file and the plot file
MODEL_PATH = os.path.sep.join([BASE_OUTPUT, "srcnn.model"])
PLOT_PATH = os.path.sep.join([BASE_OUTPUT, "plot.png"])

Line 6 defines the path to the ukbench100 dataset, a subset of the larger UKBench dataset. Dong et al. experimented with both a 91-image training dataset and a much larger training set drawn from ImageNet. Replicating the work of an SRCNN trained on ImageNet is outside the scope of this lesson, so we'll stick with an image dataset closer to the size of Dong et al.'s first experiment.

Lines 9-11 build the path to a temporary output directory where we’ll be storing our low-resolution and high-resolution sub-images. Given the low- and high-resolution sub-images, we’ll generate output HDF5 datasets from them (Lines 14 and 15). Lines 18 and 19 then define the path to our output model file along with a training plot.

Let’s continue defining our configurations:

# initialize the batch size and number of epochs for training
BATCH_SIZE = 128
NUM_EPOCHS = 10

# initialize the scale (the factor in which we want to learn how to
# enlarge images by) along with the input width and height dimensions
# to our SRCNN
SCALE = 2.0
INPUT_DIM = 33

# the label size should be the output spatial dimensions of the SRCNN
# while our padding ensures we properly crop the label ROI
LABEL_SIZE = 21
PAD = int((INPUT_DIM - LABEL_SIZE) / 2.0)

# the stride controls the step size of our sliding window
STRIDE = 14

We’ll only be training for ten epochs as NUM_EPOCHS defines. Dong et al. found that training for longer can actually hurt performance (where “performance” here is defined as the quality of the output super-resolution image). Ten epochs should be sufficient for our SRCNN to learn a set of filters to map our low-resolution patches to their higher-resolution counterparts.

The SCALE (Line 28) defines the factor by which we are upscaling our images — here we are upscaling by 2x, but you could upscale by 3x or 4x as well.

The INPUT_DIM is the spatial width and height of our sub-windows (33×33 pixels). Our LABEL_SIZE is the output spatial dimensions of the SRCNN, while our PAD ensures we properly crop the label ROI when building our dataset and applying super resolution to input images.

Finally, the STRIDE controls the step size of our sliding window when creating sub-images. Dong et al. suggest a stride of 14 pixels for smaller image datasets and a stride of 33 pixels for larger datasets.
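To see what these settings mean in practice, the sketch below reproduces PAD and counts how many sub-windows the sliding window in build_dataset.py would visit. The helper `count_windows` is hypothetical: it mirrors the `range(0, dim - INPUT_DIM + 1, STRIDE)` loop bounds used later in this tutorial, and the 640×480 image size is just an illustrative assumption.

```python
# values copied from sr_config.py
INPUT_DIM = 33
LABEL_SIZE = 21
STRIDE = 14
PAD = int((INPUT_DIM - LABEL_SIZE) / 2.0)  # (33 - 21) / 2 = 6

def count_windows(h: int, w: int, input_dim: int = INPUT_DIM,
                  stride: int = STRIDE) -> int:
    """Count the sub-windows a sliding window with these bounds visits."""
    ys = range(0, h - input_dim + 1, stride)
    xs = range(0, w - input_dim + 1, stride)
    return len(ys) * len(xs)

print(PAD)                                 # 6
print(count_windows(480, 640))             # 1408 windows at stride 14
print(count_windows(480, 640, stride=33))  # 266 windows at stride 33
```

The smaller stride yields roughly five times as many (overlapping) training pairs from the same image, which is why it suits a small dataset.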

Before we get too far, you might be confused why the INPUT_DIM is larger than the LABEL_SIZE — isn’t the entire idea here to build a higher resolution output image? How is that possible when the outputs of our neural network are smaller than the inputs? The answer is twofold.

- As we'll see later in this lesson, our SRCNN contains no zero-padding. Zero-padding introduces border artifacts that would degrade the quality of our output image. Since we are not using zero-padding, our spatial dimensions will naturally shrink after each CONV layer.

- When applying super resolution to an input image (after training), we'll actually enlarge the input low-resolution image by a factor of SCALE; the network will then transform the low-resolution image at the higher scale into a high-resolution output image. If this process seems confusing, don't worry: the remaining sections in this tutorial will help make it clear.
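The shrinkage is easy to verify by hand. Each valid (unpadded) k×k convolution removes k − 1 pixels from each spatial dimension, so for the three kernels the SRCNN uses (9×9, 1×1, and 5×5), the numbers in sr_config.py follow directly:

```latex
\begin{aligned}
n_{\text{out}} &= n_{\text{in}} - (9 - 1) - (1 - 1) - (5 - 1) = n_{\text{in}} - 12, \\
\texttt{LABEL\_SIZE} &= 33 - 12 = 21, \\
\texttt{PAD} &= \frac{\texttt{INPUT\_DIM} - \texttt{LABEL\_SIZE}}{2} = \frac{33 - 21}{2} = 6.
\end{aligned}
```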

Building the Dataset

Let’s go ahead and build our training dataset for the SRCNN. Open build_dataset.py and insert the following code:

# import the necessary packages
from pyimagesearch.io import HDF5DatasetWriter
from conf import sr_config as config
from imutils import paths
from PIL import Image
import numpy as np
import shutil
import random
import PIL
import cv2
import os

# if the output directories do not exist, create them
for p in [config.IMAGES, config.LABELS]:
    if not os.path.exists(p):
        os.makedirs(p)

# grab the image paths and initialize the total number of crops
# processed
print("[INFO] creating temporary images...")
imagePaths = list(paths.list_images(config.INPUT_IMAGES))
random.shuffle(imagePaths)
total = 0

Lines 2-11 handle our imports. Notice how we’re once again using the HDF5DatasetWriter class to write our dataset to disk in HDF5 format. Our sr_config script is imported on Line 3, so we can access our specified values.

Lines 14-16 create temporary output directories to store our sub-windows. Once all sub-windows are generated, we’ll add them to the HDF5 dataset and delete the temporary directories. Lines 21-23 then grab the paths to our input images and initialize a counter to count the total number of sub-windows generated.

Let’s loop over each of the image paths:

# loop over the image paths
for imagePath in imagePaths:
    # load the input image
    image = cv2.imread(imagePath)

    # grab the dimensions of the input image and crop the image such
    # that it tiles nicely when we generate the training data +
    # labels
    (h, w) = image.shape[:2]
    w -= int(w % config.SCALE)
    h -= int(h % config.SCALE)
    image = image[0:h, 0:w]

    # to generate our training images we first need to downscale the
    # image by the scale factor...and then upscale it back to the
    # original size -- this process allows us to generate low
    # resolution inputs that we'll then learn to reconstruct the high
    # resolution versions from
    lowW = int(w * (1.0 / config.SCALE))
    lowH = int(h * (1.0 / config.SCALE))
    highW = int(lowW * (config.SCALE / 1.0))
    highH = int(lowH * (config.SCALE / 1.0))

    # perform the actual scaling
    scaled = np.array(Image.fromarray(image).resize((lowW, lowH),
        resample=PIL.Image.BICUBIC))
    scaled = np.array(Image.fromarray(scaled).resize((highW, highH),
        resample=PIL.Image.BICUBIC))

For each input image, we first load it from disk (Line 28) and then crop the image such that it tiles nicely when generating our sub-windows (Lines 33-36). If we did not take this step, our stride size would not fit, and we would crop patches outside of the image’s spatial dimensions.

To generate training data for our SRCNN, we need to:

- Downscale the original input image by a factor of SCALE (for SCALE = 2.0, we are halving the size of the input image)

- And then rescale it back to the original size

This process generates a low-resolution image with the same original spatial dimensions. We will learn how to reconstruct a high-resolution image from this low-resolution input.

Remark: I found that using OpenCV’s bilinear interpolation (recommended by Dong et al.) produced inferior results (it also introduced more code to perform the scaling). I opted for PIL/Pillow’s .resize function as it was easier to use and generated better results.
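For readers without Pillow at hand, the downscale-then-upscale degradation can be sketched in plain NumPy. This is a simplified stand-in, not the tutorial's method: it uses block averaging to downscale and nearest-neighbor repetition to upscale instead of bicubic resampling, so its pixel values will differ from build_dataset.py. The cropping and shape bookkeeping are the same. The function name `make_low_res` is hypothetical, and the sketch assumes an integer scale and an (H, W, C) image.

```python
import numpy as np

def make_low_res(image: np.ndarray, scale: int = 2) -> tuple:
    """Crop an (H, W, C) image so it tiles by `scale`, then degrade it.

    Simplified stand-in for the Pillow bicubic resize in build_dataset.py:
    block averaging downscales, nearest-neighbor repetition upscales.
    """
    h, w = image.shape[:2]
    # crop so both dimensions are divisible by the scale factor
    h -= h % scale
    w -= w % scale
    image = image[:h, :w]

    # downscale: average each (scale x scale) block of pixels per channel
    blocks = image.reshape(h // scale, scale, w // scale, scale, -1)
    low = blocks.mean(axis=(1, 3))

    # upscale back to the cropped size by repeating each low-res pixel
    scaled = low.repeat(scale, axis=0).repeat(scale, axis=1)
    return image, scaled.astype(image.dtype)

rng = np.random.default_rng(42)
original = rng.integers(0, 256, size=(101, 67, 3), dtype=np.uint8)
cropped, degraded = make_low_res(original, scale=2)
print(cropped.shape, degraded.shape)  # (100, 66, 3) (100, 66, 3)
```

The key property matches the tutorial: the degraded image has exactly the same spatial dimensions as the cropped original, so input and target patches can be cut from the same coordinates.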

We can now generate our sub-windows for both the inputs and targets:

    # slide a window from left-to-right and top-to-bottom
    for y in range(0, h - config.INPUT_DIM + 1, config.STRIDE):
        for x in range(0, w - config.INPUT_DIM + 1, config.STRIDE):
            # crop out the 'INPUT_DIM x INPUT_DIM' ROI from our
            # scaled image -- this ROI will serve as the input to our
            # network
            crop = scaled[y:y + config.INPUT_DIM,
                x:x + config.INPUT_DIM]

            # crop out the 'LABEL_SIZE x LABEL_SIZE' ROI from our
            # original image -- this ROI will be the target output
            # from our network
            target = image[
                y + config.PAD:y + config.PAD + config.LABEL_SIZE,
                x + config.PAD:x + config.PAD + config.LABEL_SIZE]

            # construct the crop and target output image paths
            cropPath = os.path.sep.join([config.IMAGES,
                "{}.png".format(total)])
            targetPath = os.path.sep.join([config.LABELS,
                "{}.png".format(total)])

            # write the images to disk
            cv2.imwrite(cropPath, crop)
            cv2.imwrite(targetPath, target)

            # increment the crop total
            total += 1

Lines 55 and 56 slide a window from left-to-right and top-to-bottom across our images. We crop the INPUT_DIM × INPUT_DIM sub-window on Lines 60 and 61. This 33×33 crop, taken from scaled (i.e., the low-resolution image), is the input to our neural network.

We also need a target for the SRCNN to predict (Lines 66-68) — the target is the LABEL_SIZE x LABEL_SIZE (21×21) output that the SRCNN will be trying to reconstruct. We write both the target and crop to disk on Lines 77 and 78.
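The important detail is alignment: each 21×21 target is the center of the 33×33 window it pairs with, offset by PAD on every side. The sketch below checks this on synthetic data. `extract_pairs` is a hypothetical helper that mirrors the loop above but returns pairs in memory instead of writing PNGs. For the check, the "degraded" image is simply a copy of the original.

```python
import numpy as np

INPUT_DIM, LABEL_SIZE, STRIDE = 33, 21, 14
PAD = (INPUT_DIM - LABEL_SIZE) // 2  # 6

def extract_pairs(image: np.ndarray, scaled: np.ndarray):
    """Yield (crop, target) pairs using the same bounds as build_dataset.py.

    `crop` comes from the degraded image; `target` is the centered
    LABEL_SIZE x LABEL_SIZE region of the original at the same location.
    """
    h, w = image.shape[:2]
    for y in range(0, h - INPUT_DIM + 1, STRIDE):
        for x in range(0, w - INPUT_DIM + 1, STRIDE):
            crop = scaled[y:y + INPUT_DIM, x:x + INPUT_DIM]
            target = image[y + PAD:y + PAD + LABEL_SIZE,
                           x + PAD:x + PAD + LABEL_SIZE]
            yield crop, target

# a synthetic 60x80 "original" and an undegraded stand-in copy
rng = np.random.default_rng(0)
original = rng.integers(0, 256, size=(60, 80, 3), dtype=np.uint8)
degraded = original.copy()

pairs = list(extract_pairs(original, degraded))
crop, target = pairs[0]
print(len(pairs), crop.shape, target.shape)  # 8 (33, 33, 3) (21, 21, 3)
# the target is exactly the center of its crop window
print(np.array_equal(target, crop[PAD:PAD + LABEL_SIZE, PAD:PAD + LABEL_SIZE]))  # True
```

Because a network without zero-padding maps a 33×33 input to a 21×21 output, this centered target is what lines up pixel-for-pixel with the network's prediction.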

The last step is to build two HDF5 datasets, one for the inputs and another for the outputs (i.e., the targets):

# grab the paths to the images
print("[INFO] building HDF5 datasets...")
inputPaths = sorted(list(paths.list_images(config.IMAGES)))
outputPaths = sorted(list(paths.list_images(config.LABELS)))

# initialize the HDF5 datasets
inputWriter = HDF5DatasetWriter((len(inputPaths), config.INPUT_DIM,
    config.INPUT_DIM, 3), config.INPUTS_DB)
outputWriter = HDF5DatasetWriter((len(outputPaths),
    config.LABEL_SIZE, config.LABEL_SIZE, 3), config.OUTPUTS_DB)

# loop over the images
for (inputPath, outputPath) in zip(inputPaths, outputPaths):
    # load the two images and add them to their respective datasets
    inputImage = cv2.imread(inputPath)
    outputImage = cv2.imread(outputPath)
    inputWriter.add([inputImage], [-1])
    outputWriter.add([outputImage], [-1])

# close the HDF5 datasets
inputWriter.close()
outputWriter.close()

# delete the temporary output directories
print("[INFO] cleaning up...")
shutil.rmtree(config.IMAGES)
shutil.rmtree(config.LABELS)

It’s important to note that the class label is irrelevant (hence the value of -1). The “class label” is technically the output sub-window that we train our SRCNN to reconstruct. We’ll be writing a custom generator in train.py to yield a tuple of both the input sub-windows and target output sub-windows.

After our HDF5 datasets are generated, Lines 108 and 109 delete the temporary output directories.

To generate our dataset, execute the following command:

$ python build_dataset.py
[INFO] creating temporary images...
[INFO] building HDF5 datasets...
[INFO] cleaning up...

After which, you can check your BASE_OUTPUT directory and find the inputs.hdf5 and outputs.hdf5 files:

$ ls ../datasets/ukbench/output/*.hdf5
inputs.hdf5 outputs.hdf5

The SRCNN Architecture

The SRCNN architecture we are implementing follows Dong et al. exactly, making it easy to implement. Open srcnn.py and insert the following code:

# import the necessary packages
from tensorflow.keras.models import Sequential
from tensorflow.keras.layers import Conv2D
from tensorflow.keras.layers import Activation
from tensorflow.keras import backend as K

class SRCNN:
    @staticmethod
    def build(width, height, depth):
        # initialize the model
        model = Sequential()
        inputShape = (height, width, depth)

        # if we are using "channels first", update the input shape
        if K.image_data_format() == "channels_first":
            inputShape = (depth, height, width)

        # the entire SRCNN architecture consists of three CONV =>
        # RELU layers with *no* zero-padding
        model.add(Conv2D(64, (9, 9), kernel_initializer="he_normal",
            input_shape=inputShape))
        model.add(Activation("relu"))
        model.add(Conv2D(32, (1, 1), kernel_initializer="he_normal"))
        model.add(Activation("relu"))
        model.add(Conv2D(depth, (5, 5),
            kernel_initializer="he_normal"))
        model.add(Activation("relu"))

        # return the constructed network architecture
        return model

Compared to other architectures, our SRCNN could not be more straightforward. The entire architecture consists of only three CONV => RELU layers with no zero-padding (we avoid using zero-padding to ensure we don’t introduce any border artifacts in the output image).

Our first CONV layer learns 64 filters, each of which is 9×9. This volume is fed into a second CONV layer where we learn 32 1×1 filters used to reduce dimensionality and learn local features. The final CONV layer learns a total of depth channels (which will be 3 for RGB images and 1 for grayscale), each of which is 5×5.

There are two important characteristics of this network architecture:

- It’s small and compact, meaning that it will be fast to train (remember, our goal isn’t to obtain higher accuracy in a classification sense — we’re more interested in the filters learned from the network, which will enable us to perform super resolution).

- It’s fully convolutional, making it (1) again, faster, and (2) possible for us to accept any input image size provided it tiles nicely.
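"Small and compact" can be quantified. A Conv2D layer with a k×k kernel, C_in input channels, and C_out filters has k·k·C_in·C_out weights plus C_out biases, and the Activation layers add no parameters. The short count below uses the layer shapes from SRCNN.build with depth=3. The helper `conv_params` is ours, and `model.summary()` should report the same total.

```python
def conv_params(kernel: int, in_ch: int, out_ch: int) -> int:
    """Weights plus biases of a square Conv2D layer."""
    return kernel * kernel * in_ch * out_ch + out_ch

depth = 3  # RGB input, as used in train.py
layers = [
    ("conv1 9x9, 64 filters", conv_params(9, depth, 64)),
    ("conv2 1x1, 32 filters", conv_params(1, 64, 32)),
    ("conv3 5x5, depth filters", conv_params(5, 32, depth)),
]
for name, count in layers:
    print(f"{name}: {count:,}")
total = sum(count for _, count in layers)
print(f"total: {total:,}")
# conv1 9x9, 64 filters: 15,616
# conv2 1x1, 32 filters: 2,080
# conv3 5x5, depth filters: 2,403
# total: 20,099
```

At roughly 20 thousand parameters, the whole network is orders of magnitude smaller than typical classification CNNs, which is why each training epoch in this tutorial takes only seconds.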

Training the SRCNN

Training our SRCNN is a fairly straightforward process. Open train.py and insert the following code:

# set the matplotlib backend so figures can be saved in the background
import matplotlib
matplotlib.use("Agg")

# import the necessary packages
from conf import sr_config as config
from pyimagesearch.io import HDF5DatasetGenerator
from pyimagesearch.nn.conv import SRCNN
from tensorflow.keras.optimizers import Adam
from tensorflow.keras.optimizers.schedules import InverseTimeDecay
import matplotlib.pyplot as plt
import numpy as np

Lines 2-12 handle our imports. We’ll need the HDF5DatasetGenerator to access our serialized HDF5 datasets along with our SRCNN implementation. However, a slight modification is required to work with our HDF5 dataset.

Keep in mind that we have two HDF5 datasets: the input sub-windows and the target output sub-windows. Our HDF5DatasetGenerator class is meant to work with only one HDF5 file, not two. Luckily, this is an easy fix with our super_res_generator function:

def super_res_generator(inputDataGen, targetDataGen):
    # start an infinite loop for the training data
    while True:
        # grab the next input images and target outputs, discarding
        # the class labels (which are irrelevant)
        inputData = next(inputDataGen)[0]
        targetData = next(targetDataGen)[0]

        # yield a tuple of the input data and target data
        yield (inputData, targetData)

This function requires two arguments, inputDataGen and targetDataGen, which are both assumed to be HDF5DatasetGenerator objects.

On Line 16, we start an infinite loop that will continue to loop over our training data. Calling next (a built-in Python function that returns the next item from a generator) on each object gives us the next batch. We discard the class labels (since we do not need them) and yield a tuple of the inputData and targetData.

We can now initialize our HDF5DatasetGenerator objects along with our model and optimizer:

# initialize the input images and target output images generators
inputs = HDF5DatasetGenerator(config.INPUTS_DB, config.BATCH_SIZE)
targets = HDF5DatasetGenerator(config.OUTPUTS_DB, config.BATCH_SIZE)

# initialize the model and optimizer; newer TensorFlow releases no
# longer accept Adam's "decay" argument, so an inverse time decay
# schedule applies the same per-update decay:
# lr = 0.001 / (1 + (0.001 / NUM_EPOCHS) * step)
print("[INFO] compiling model...")
schedule = InverseTimeDecay(initial_learning_rate=0.001, decay_steps=1,
    decay_rate=0.001 / config.NUM_EPOCHS)
opt = Adam(learning_rate=schedule)
model = SRCNN.build(width=config.INPUT_DIM, height=config.INPUT_DIM,
    depth=3)
model.compile(loss="mse", optimizer=opt)

While Dong et al. used RMSprop, I found that:

- Using Adam obtained better results with less hyperparameter tuning

- A little bit of learning rate decay yields better, more stable training
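How much decay is "a little bit"? The `decay` argument of older Keras optimizers shrank the learning rate after every update (batch), not every epoch, following lr_t = lr_0 / (1 + decay · t). The sketch below evaluates that formula with this tutorial's values: lr_0 = 0.001, decay = 0.001 / 10, and the 1,100 steps per epoch shown in the training log below. The helper `decayed_lr` is ours. `InverseTimeDecay(0.001, decay_steps=1, decay_rate=0.001 / 10)` from `tf.keras.optimizers.schedules` computes the same curve.

```python
def decayed_lr(step: int, lr0: float = 0.001, decay: float = 0.001 / 10) -> float:
    """Time-based decay applied once per optimizer update."""
    return lr0 / (1.0 + decay * step)

steps_per_epoch = 1100  # from the training log
for epoch in [0, 5, 10]:
    step = epoch * steps_per_epoch
    print(f"after epoch {epoch}: lr = {decayed_lr(step):.6f}")
# after epoch 0: lr = 0.001000
# after epoch 5: lr = 0.000645
# after epoch 10: lr = 0.000476
```

Over the full ten epochs, the learning rate roughly halves, which is a gentle decay.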

Finally, note that we’ll use mean squared error (MSE) loss rather than binary/categorical cross-entropy, since we are regressing pixel values rather than predicting class labels.
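MSE is simply the mean of the squared per-pixel differences. This sketch assumes HDF5DatasetGenerator is used without preprocessors, as in train.py, so pixels stay in [0, 255]. Under that assumption, the loss values in the training log are in squared intensity units. PSNR, the quality metric commonly reported in super-resolution work, is a log transform of the same quantity. The function names below are ours.

```python
import numpy as np

def mse(a: np.ndarray, b: np.ndarray) -> float:
    """Mean squared error between two images of the same shape."""
    # cast first: subtracting uint8 arrays directly would wrap around
    diff = a.astype(np.float64) - b.astype(np.float64)
    return float(np.mean(diff ** 2))

def psnr(a: np.ndarray, b: np.ndarray, max_val: float = 255.0) -> float:
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    err = mse(a, b)
    if err == 0:
        return float("inf")
    return float(10.0 * np.log10(max_val ** 2 / err))

reference = np.full((21, 21, 3), 100, dtype=np.uint8)
prediction = np.full((21, 21, 3), 110, dtype=np.uint8)
print(mse(reference, prediction))             # 100.0
print(round(psnr(reference, prediction), 2))  # 28.13
```

A uniform 10-intensity error gives an MSE of 100, so a final training loss in the 40s corresponds to an average per-pixel error of roughly 6 to 7 intensity levels on the training patches.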

We are now ready to train our model:

# train the model using our generators (model.fit accepts Python
# generators directly; fit_generator is deprecated in TensorFlow 2.x)
H = model.fit(
    super_res_generator(inputs.generator(), targets.generator()),
    steps_per_epoch=inputs.numImages // config.BATCH_SIZE,
    epochs=config.NUM_EPOCHS, verbose=1)

Our model will be trained for a total of NUM_EPOCHS (10 epochs, according to our configuration file). Notice how we use our super_res_generator to jointly yield training batches from both the inputs and targets generators, respectively.
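Because super_res_generator only calls `next()` on each generator and keeps index 0, its behavior can be checked without HDF5 files or Keras. The sketch below feeds it two hypothetical stand-in generators (`fake_batches`) that yield `(data, labels)` tuples with dummy -1 labels. That shape is assumed to match HDF5DatasetGenerator, not confirmed here. The pairing is only correct if both generators step through their files in lockstep, with the same batch size and ordering, which is worth re-checking if you modify HDF5DatasetGenerator.

```python
from itertools import count

def super_res_generator(inputDataGen, targetDataGen):
    # same logic as train.py: pull one batch from each generator,
    # keep index 0 (the data) and discard index 1 (the labels)
    while True:
        inputData = next(inputDataGen)[0]
        targetData = next(targetDataGen)[0]
        yield (inputData, targetData)

def fake_batches(prefix):
    """Hypothetical stand-in for HDF5DatasetGenerator.generator()."""
    for i in count():
        yield ([f"{prefix}{i}"], [-1])  # (data, dummy labels)

gen = super_res_generator(fake_batches("input"), fake_batches("target"))
for _ in range(3):
    print(next(gen))
# (['input0'], ['target0'])
# (['input1'], ['target1'])
# (['input2'], ['target2'])
```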

Our final code block handles saving our trained model to disk, plotting the loss, and closing our HDF5 datasets:

# save the model to file
print("[INFO] serializing model...")
model.save(config.MODEL_PATH, overwrite=True)

# plot the training loss
plt.style.use("ggplot")
plt.figure()
plt.plot(np.arange(0, config.NUM_EPOCHS), H.history["loss"],
    label="loss")
plt.title("Loss on super resolution training")
plt.xlabel("Epoch #")
plt.ylabel("Loss")
plt.legend()
plt.savefig(config.PLOT_PATH)

# close the HDF5 datasets
inputs.close()
targets.close()

Training the SRCNN architecture is as simple as executing the following command:

$ python train.py
[INFO] compiling model...
Epoch 1/10
1100/1100 [==============================] - 14s - loss: 243.1207
Epoch 2/10
1100/1100 [==============================] - 13s - loss: 59.0475
...
Epoch 9/10
1100/1100 [==============================] - 13s - loss: 47.1672
Epoch 10/10
1100/1100 [==============================] - 13s - loss: 44.7597
[INFO] serializing model...

Our model is now trained and ready to increase the resolution of new input images!

Increasing Image Resolution with SRCNNs

We are now ready to implement resize.py, the script responsible for constructing a high-resolution output image from a low-resolution input image. Open resize.py and insert the following code:

# import the necessary packages
from conf import sr_config as config
from tensorflow.keras.models import load_model
from PIL import Image
import numpy as np
import argparse
import PIL
import cv2

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--image", required=True,
    help="path to input image")
ap.add_argument("-b", "--baseline", required=True,
    help="path to baseline image")
ap.add_argument("-o", "--output", required=True,
    help="path to output image")
args = vars(ap.parse_args())

# load the pre-trained model
print("[INFO] loading model...")
model = load_model(config.MODEL_PATH)

Lines 11-18 parse our command line arguments. We’ll need three switches for this script:

- --image: The path to our input, a low-resolution image that we wish to upscale.

- --baseline: The path to the output baseline image after standard bicubic interpolation; this image gives us a baseline against which we can compare our SRCNN results.

- --output: The path to the output image after applying super resolution.

Line 22 then loads our serialized SRCNN from disk.

Next, let’s prepare our image for upscaling:

# load the input image, then grab the dimensions of the input image
# and crop the image such that it tiles nicely
print("[INFO] generating image...")
image = cv2.imread(args["image"])
(h, w) = image.shape[:2]
w -= int(w % config.SCALE)
h -= int(h % config.SCALE)
image = image[0:h, 0:w]

# resize the input image using bicubic interpolation then write the
# baseline image to disk
lowW = int(w * (1.0 / config.SCALE))
lowH = int(h * (1.0 / config.SCALE))
highW = int(lowW * (config.SCALE / 1.0))
highH = int(lowH * (config.SCALE / 1.0))
scaled = np.array(Image.fromarray(image).resize((lowW, lowH),
    resample=PIL.Image.BICUBIC))
scaled = np.array(Image.fromarray(scaled).resize((highW, highH),
    resample=PIL.Image.BICUBIC))
cv2.imwrite(args["baseline"], scaled)

# allocate memory for the output image
output = np.zeros(scaled.shape)
(h, w) = output.shape[:2]

We first load the image from disk on Line 27. Lines 28-31 crop our image such that it tiles nicely when applying our sliding window and passing the sub-images through our SRCNN. Lines 35-42 then downscale our input --image by a factor of SCALE and upscale it back using standard bicubic interpolation via PIL/Pillow’s resize function, and the result is written to disk as our baseline.

Upscaling our image by a factor of SCALE serves two purposes:

- It gives us a baseline of what standard upsizing will look like using traditional image processing.

- Our SRCNN expects an input that has already been upscaled to the target resolution (the low-resolution image at the higher scale); this scaled image serves that purpose.

Finally, Line 46 allocates memory for our output image.

We can now apply our sliding window:

# slide a window from left-to-right and top-to-bottom
for y in range(0, h - config.INPUT_DIM + 1, config.LABEL_SIZE):
    for x in range(0, w - config.INPUT_DIM + 1, config.LABEL_SIZE):
        # crop ROI from our scaled image
        crop = scaled[y:y + config.INPUT_DIM,
            x:x + config.INPUT_DIM].astype("float32")

        # make a prediction on the crop and store it in our output
        # image
        P = model.predict(np.expand_dims(crop, axis=0))
        P = P.reshape((config.LABEL_SIZE, config.LABEL_SIZE, 3))
        output[y + config.PAD:y + config.PAD + config.LABEL_SIZE,
            x + config.PAD:x + config.PAD + config.LABEL_SIZE] = P

For each stop along the way, in LABEL_SIZE steps, we crop out the sub-image from scaled (Lines 53 and 54). The spatial dimensions of crop match the input dimensions required by our SRCNN.

We then take the crop sub-image and pass it through our SRCNN for inference. The output of the SRCNN, P, has spatial dimensions LABEL_SIZE x LABEL_SIZE x CHANNELS, which is 21×21×3 — we then store the high-resolution prediction from the network in the output image.

Remark: For the sake of simplicity, I am processing one sub-image at a time via the .predict method. To achieve a faster throughput rate, especially on a GPU, you’ll want to batch process the crop sub-images. This can be accomplished by maintaining a list of (x, y)-coordinates for the batch that maps each sample in the batch to its corresponding output location.
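Following that suggestion, here is a minimal sketch of batched inference. It records every window's (x, y) coordinates, stacks the crops into batches, and writes each prediction back to its location. `upscale_batched` and `fake_predict` are hypothetical names. `fake_predict` stands in for the trained model by returning each crop's central 21×21 region, so the result can be checked without Keras. In resize.py you would pass `model.predict` instead.

```python
import numpy as np

INPUT_DIM, LABEL_SIZE = 33, 21
PAD = (INPUT_DIM - LABEL_SIZE) // 2

def fake_predict(batch: np.ndarray) -> np.ndarray:
    """Hypothetical stand-in for model.predict: returns each crop's center."""
    return batch[:, PAD:PAD + LABEL_SIZE, PAD:PAD + LABEL_SIZE, :]

def upscale_batched(scaled: np.ndarray, predict, batch_size: int = 64) -> np.ndarray:
    h, w = scaled.shape[:2]
    output = np.zeros(scaled.shape, dtype=np.float32)
    # record every window's (x, y) so predictions can be written back later
    coords = [(x, y)
              for y in range(0, h - INPUT_DIM + 1, LABEL_SIZE)
              for x in range(0, w - INPUT_DIM + 1, LABEL_SIZE)]
    for start in range(0, len(coords), batch_size):
        chunk = coords[start:start + batch_size]
        batch = np.stack([scaled[y:y + INPUT_DIM, x:x + INPUT_DIM]
                          for (x, y) in chunk]).astype("float32")
        preds = predict(batch)
        # map each prediction in the batch back to its output location
        for (x, y), P in zip(chunk, preds):
            output[y + PAD:y + PAD + LABEL_SIZE,
                   x + PAD:x + PAD + LABEL_SIZE] = P
    return output

rng = np.random.default_rng(0)
scaled = rng.integers(0, 256, size=(96, 120, 3)).astype(np.uint8)
output = upscale_batched(scaled, fake_predict, batch_size=16)
print(output.shape)  # (96, 120, 3)
```

The batch size only changes how many windows go through `predict` per call, not the final image, so a batch size of 1 reproduces the one-crop-at-a-time loop above.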

Our last step is to remove any black borders on the output image caused by padding and then save the image to disk:

# remove any of the black borders in the output image caused by the
# padding, then clip any values that fall outside the range [0, 255]
output = output[config.PAD:h - ((h % config.INPUT_DIM) + config.PAD),
    config.PAD:w - ((w % config.INPUT_DIM) + config.PAD)]
output = np.clip(output, 0, 255).astype("uint8")

# write the output image to disk
cv2.imwrite(args["output"], output)

At this point, we are done implementing the SRCNN pipeline! In our next section, we’ll apply resize.py to a few example input low-resolution images and compare our SRCNN results to traditional image processing.

Super Resolution Results

Now that we have (1) trained our SRCNN and (2) implemented resize.py, we are ready to apply super resolution to an input image. Open up a shell and execute the following command:

$ python resize.py --image jemma.png --baseline baseline.png \
    --output output.png
[INFO] loading model...
[INFO] generating image...

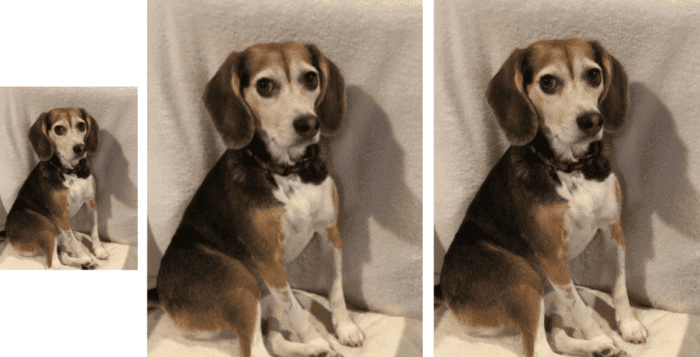

Figure 2 contains our output image. On the left is the input image we wish to increase the resolution of (125×166).

Then, in the middle, we have the input image resolution increased by 2x to 250×332 via standard bicubic interpolation. This image serves as our baseline. Notice how the image is low resolution, blurry, and, in general, visually unappealing.

Finally, on the right, we have the output image from the SRCNN. Here we can see that we have again increased the resolution by 2x, but this time the image is significantly less blurry and more aesthetically pleasing.

We can also increase our image resolution by higher multiples, provided we have trained our SRCNN to do so.


Summary

In this tutorial, we reviewed the concept of “Super Resolution” and then implemented Super Resolution Convolutional Neural Networks (SRCNN). After training our SRCNN, we applied super resolution to our input images.

Our implementation followed the work of Dong et al. (2016). While there have been many super resolution papers since then (and there will continue to be), Dong et al.’s paper is still one of the easiest ones to understand and implement, making it an excellent starting point for anyone interested in studying super resolution.

Citation Information

Rosebrock, A. “Image Super Resolution,” PyImageSearch, 2022, https://pyimg.co/jia4g

@article{Rosebrock_2022_ISR,
  author = {Adrian Rosebrock},
  title = {Image Super Resolution},
  journal = {PyImageSearch},
  year = {2022},
  note = {https://pyimg.co/jia4g},
}
