In this tutorial, you will learn how to use Keras and Mask R-CNN to perform instance segmentation (both with and without a GPU).

For developing and fine-tuning Mask R-CNN models, having access to rich, diverse datasets is critical. These datasets provide the foundational training material for the model, influencing its ability to accurately segment and recognize objects.


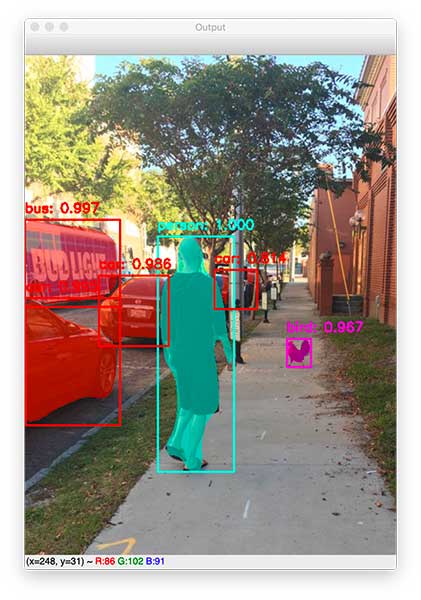

Using Mask R-CNN we can perform both:

- Object detection, giving us the (x, y)-coordinates of the bounding box for each object in an image.

- Instance segmentation, enabling us to obtain a pixel-wise mask for each individual object in an image.

An example of instance segmentation via Mask R-CNN can be seen in the image at the top of this tutorial — notice how we not only have the bounding box of the objects in the image, but we also have pixel-wise masks for each object as well, enabling us to segment each individual object (something that object detection alone does not give us).

Instance segmentation, along with Mask R-CNN, powers some of the recent advances in the “magic” we see in computer vision, including self-driving cars, robotics, and more.

In the remainder of this tutorial, you will learn how to use Mask R-CNN with Keras, including how to perform instance segmentation on your own images.

To learn more about Keras and Mask R-CNN, just keep reading!

Keras Mask R-CNN

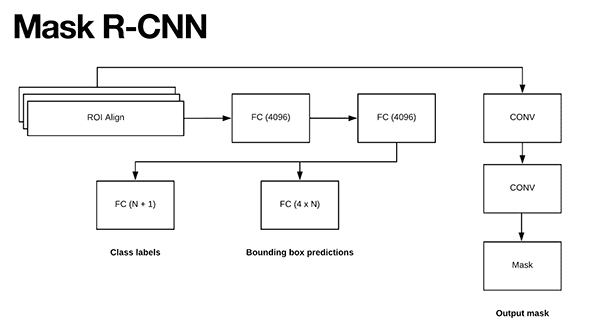

In the first part of this tutorial, we’ll briefly review the Mask R-CNN architecture. From there, we’ll review our directory structure for this project and then install Keras + Mask R-CNN on our system.

I’ll then show you how to implement Mask R-CNN and Keras using Python.

Finally, we’ll apply Mask R-CNN to our own images and examine the results.

I’ll also share resources on how to train a Mask R-CNN model on your own custom dataset.

The History of Mask R-CNN

The Mask R-CNN model for instance segmentation has evolved from three preceding architectures for object detection:

- R-CNN: An input image is presented to the network, Selective Search is run on the image, and then the output regions from Selective Search are used for feature extraction and classification using a pre-trained CNN.

- Fast R-CNN: Still uses the Selective Search algorithm to obtain region proposals, but adds the Region of Interest (ROI) Pooling module. Extracts a fixed-size window from the feature map and uses the features to obtain the final class label and bounding box. The benefit is that the network is now end-to-end trainable.

- Faster R-CNN: Introduces the Region Proposal Network (RPN) that bakes the region proposal directly into the architecture, alleviating the need for the Selective Search algorithm.

The Mask R-CNN algorithm builds on the previous Faster R-CNN, enabling the network to not only perform object detection but pixel-wise instance segmentation as well!

I’ve covered Mask R-CNN in-depth inside both:

- The “What is Mask R-CNN?” section of the Mask R-CNN with OpenCV post.

- My book, Deep Learning for Computer Vision with Python.

Please refer to those resources for more in-depth details on how the architecture works, including the ROI Align module and how it facilitates instance segmentation.

Project structure

Go ahead and use the “Downloads” section of today’s blog post to download the code and pre-trained model. Let’s inspect our Keras Mask R-CNN project structure:

    $ tree --dirsfirst
    .
    ├── images
    │   ├── 30th_birthday.jpg
    │   ├── couch.jpg
    │   ├── page_az.jpg
    │   └── ybor_city.jpg
    ├── coco_labels.txt
    ├── mask_rcnn_coco.h5
    └── maskrcnn_predict.py

    1 directory, 7 files

Our project consists of a testing images/ directory as well as three files:

- coco_labels.txt: A line-by-line listing of 81 class labels. The first label is the “background” class, so we typically say there are 80 classes.

- mask_rcnn_coco.h5: Our pre-trained Mask R-CNN model weights file, which will be loaded from disk.

- maskrcnn_predict.py: The Mask R-CNN demo script, which loads the labels and model/weights. From there, inference is run on a testing image provided via a command line argument. You may test with one of your own images or any in the images/ directory included with the “Downloads”.

Before we review today’s script, we’ll install Keras + Mask R-CNN and then we’ll briefly review the COCO dataset.

Installing Keras Mask R-CNN

The Keras + Mask R-CNN installation process is quite straightforward with pip, git, and setup.py. I recommend you install these packages in a dedicated virtual environment for today’s project so you don’t complicate your system’s package tree.

First, install the required Python packages:

    $ pip install numpy scipy
    $ pip install pillow scikit-image matplotlib imutils
    $ pip install "IPython[all]"
    $ pip install tensorflow # or tensorflow-gpu
    $ pip install keras h5py

Be sure to install tensorflow-gpu if you have a GPU, CUDA, and cuDNN installed on your machine.

From there, go ahead and install OpenCV, either via pip or compiling from source:

$ pip install opencv-contrib-python

Next, we’ll install the Matterport implementation of Mask R-CNN in Keras:

    $ git clone https://github.com/matterport/Mask_RCNN.git
    $ cd Mask_RCNN
    $ python setup.py install

Finally, fire up a Python interpreter in your virtual environment to verify that Mask R-CNN + Keras and OpenCV have been successfully installed:

    $ python
    >>> import mrcnn
    >>> import cv2
    >>>

Provided that there are no import errors, your environment is now ready for today’s blog post.
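If you would rather check the environment from a script than from the interpreter, a minimal sketch along these lines reports which modules can be located without actually importing them. The helper name find_missing and the exact list of modules are my own choices for illustration, not part of any package.

```python
import importlib.util


def find_missing(modules):
    """Return the names in `modules` that cannot be located on this system."""
    missing = []
    for name in modules:
        # find_spec returns None when a top-level module is not installed
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


if __name__ == "__main__":
    # top-level modules used by today's script (list assumed for this sketch)
    required = ["mrcnn", "cv2", "keras", "tensorflow", "numpy", "imutils"]
    missing = find_missing(required)
    if missing:
        print("[INFO] missing modules: {}".format(", ".join(missing)))
    else:
        print("[INFO] all required modules were found")
```

Locating a module is a weaker check than importing it, so a clean report here does not rule out version conflicts between the packages.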

Mask R-CNN and COCO

The Mask R-CNN model we’ll be using here today is pre-trained on the COCO dataset.

This dataset includes a total of 80 classes, plus one background class listed first, that you can detect and segment from an input image. I have included the labels file named coco_labels.txt in the “Downloads” associated with this post, but for convenience, I have listed them here for you:

- BG

- person

- bicycle

- car

- motorcycle

- airplane

- bus

- train

- truck

- boat

- traffic light

- fire hydrant

- stop sign

- parking meter

- bench

- bird

- cat

- dog

- horse

- sheep

- cow

- elephant

- bear

- zebra

- giraffe

- backpack

- umbrella

- handbag

- tie

- suitcase

- frisbee

- skis

- snowboard

- sports ball

- kite

- baseball bat

- baseball glove

- skateboard

- surfboard

- tennis racket

- bottle

- wine glass

- cup

- fork

- knife

- spoon

- bowl

- banana

- apple

- sandwich

- orange

- broccoli

- carrot

- hot dog

- pizza

- donut

- cake

- chair

- couch

- potted plant

- bed

- dining table

- toilet

- tv

- laptop

- mouse

- remote

- keyboard

- cell phone

- microwave

- oven

- toaster

- sink

- refrigerator

- book

- clock

- vase

- scissors

- teddy bear

- hair drier

- toothbrush
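Because the background class sits at index 0, a label's position in the file doubles as its integer class ID. Here is a small sketch of reading such a file and building a name-to-ID lookup. The load_labels helper and the four-line demo file are assumptions for illustration; the real coco_labels.txt contains all 81 entries listed above.

```python
import os
import tempfile


def load_labels(path):
    """Read one label per line and check that the background class is first."""
    with open(path) as f:
        names = f.read().strip().split("\n")
    if not names or names[0] != "BG":
        raise ValueError("expected the first label to be the background class 'BG'")
    return names


# write a tiny stand-in labels file so the sketch runs on its own
with tempfile.NamedTemporaryFile("w", suffix=".txt", delete=False) as f:
    f.write("BG\nperson\nbicycle\ncar\n")
    demo_path = f.name

names = load_labels(demo_path)
os.remove(demo_path)

# the index of each label is its class ID
name_to_id = {name: i for (i, name) in enumerate(names)}
print(name_to_id["car"])  # prints 3 for the demo file
```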

In the next section, we’ll learn how to use Keras and Mask R-CNN to detect and segment each of these classes.

Implementing Mask R-CNN with Keras and Python

Let’s get started implementing our Mask R-CNN segmentation script.

Open up maskrcnn_predict.py and insert the following code:

    # import the necessary packages
    from mrcnn.config import Config
    from mrcnn import model as modellib
    from mrcnn import visualize
    import numpy as np
    import colorsys
    import argparse
    import imutils
    import random
    import cv2
    import os

Lines 2-11 import our required packages.

The mrcnn imports are from Matterport’s implementation of Mask R-CNN. From mrcnn, we’ll use Config to create a custom subclass for our configuration, modellib to load our model, and visualize to draw our masks.

Let’s go ahead and parse our command line arguments:

    # construct the argument parse and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-w", "--weights", required=True,
        help="path to Mask R-CNN model weights pre-trained on COCO")
    ap.add_argument("-l", "--labels", required=True,
        help="path to class labels file")
    ap.add_argument("-i", "--image", required=True,
        help="path to input image to apply Mask R-CNN to")
    args = vars(ap.parse_args())

Our script requires three command line arguments:

- --weights: The path to our Mask R-CNN model weights pre-trained on COCO.

- --labels: The path to our COCO class labels text file.

- --image: Our input image path. We’ll be performing instance segmentation on the image provided via the command line.
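If command line arguments are new to you, it can help to see the parser run on an explicit list of strings instead of the real command line. The sketch below rebuilds the same three arguments inside a build_parser function (a name I made up for this example) and parses a hard-coded list, so you can experiment without a terminal.

```python
import argparse


def build_parser():
    """Create a parser with the same three required arguments as the script."""
    ap = argparse.ArgumentParser()
    ap.add_argument("-w", "--weights", required=True,
        help="path to Mask R-CNN model weights pre-trained on COCO")
    ap.add_argument("-l", "--labels", required=True,
        help="path to class labels file")
    ap.add_argument("-i", "--image", required=True,
        help="path to input image to apply Mask R-CNN to")
    return ap


# parse an explicit list rather than sys.argv; vars() turns the
# resulting namespace into a plain dictionary keyed by argument name
args = vars(build_parser().parse_args([
    "--weights", "mask_rcnn_coco.h5",
    "--labels", "coco_labels.txt",
    "--image", "images/couch.jpg",
]))
print(args["image"])
```

Since all three arguments are marked required=True, leaving any of them out makes argparse print a usage message and exit.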

Using the second argument, let’s go ahead and load our CLASS_NAMES and COLORS for each:

    # load the class label names from disk, one label per line
    CLASS_NAMES = open(args["labels"]).read().strip().split("\n")

    # generate random (but visually distinct) colors for each class label
    # (thanks to Matterport Mask R-CNN for the method!)
    hsv = [(i / len(CLASS_NAMES), 1, 1.0) for i in range(len(CLASS_NAMES))]
    COLORS = list(map(lambda c: colorsys.hsv_to_rgb(*c), hsv))
    random.seed(42)
    random.shuffle(COLORS)

Line 24 loads the COCO class label names directly from the text file into a list.

From there, Lines 28-31 generate random, distinct COLORS for each class label. The method comes from Matterport’s Mask R-CNN implementation on GitHub.
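To see why the colors come out distinct, here is the same idea wrapped in a standalone function: hues are spaced evenly around the color wheel at full saturation and brightness, then shuffled so neighboring class IDs do not get near-identical colors. The function name make_colors is hypothetical, and unlike the script this sketch shuffles with a private random.Random instance so the global random state is left untouched.

```python
import colorsys
import random


def make_colors(num_classes, seed=42):
    """Return `num_classes` RGB tuples with channel values in [0, 1]."""
    # evenly spaced hues, full saturation and value
    hsv = [(i / num_classes, 1.0, 1.0) for i in range(num_classes)]
    colors = [colorsys.hsv_to_rgb(*c) for c in hsv]

    # shuffle deterministically with a private generator (a choice made
    # for this sketch; the script seeds the global generator instead)
    rng = random.Random(seed)
    rng.shuffle(colors)
    return colors


colors = make_colors(81)
print(len(colors), colors[0])
```

Note that the values are floats in the range [0, 1], which is why the script later multiplies by 255 before handing a color to OpenCV.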

Let’s go ahead and construct our SimpleConfig class:

    class SimpleConfig(Config):
        # give the configuration a recognizable name
        NAME = "coco_inference"

        # set the number of GPUs to use along with the number of images
        # per GPU
        GPU_COUNT = 1
        IMAGES_PER_GPU = 1

        # number of classes (we would normally add +1 for the background
        # but the background class is *already* included in the class
        # names)
        NUM_CLASSES = len(CLASS_NAMES)

Our SimpleConfig class inherits from Matterport’s Mask R-CNN Config (Line 33).

The configuration is given a NAME (Line 35).

From there we set the GPU_COUNT and IMAGES_PER_GPU (i.e., batch). If you have a GPU and tensorflow-gpu installed then Keras + Mask R-CNN will automatically use your GPU. If not, your CPU will be used instead.

Note: I performed today’s experiment on a machine using a single Titan X GPU, so I set my GPU_COUNT = 1. While my 12GB GPU could technically handle more than one image at a time (either during training or during prediction as in this script), I decided to set IMAGES_PER_GPU = 1 as most readers will not have a GPU with as much memory. Feel free to increase this value if your GPU can handle it.

Our NUM_CLASSES is then set equal to the length of the CLASS_NAMES list (Line 45).
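One practical consequence of these two settings: as far as I can tell, the Matterport implementation derives its batch size as GPU_COUNT * IMAGES_PER_GPU and expects detect to receive exactly that many images per call. If you raise IMAGES_PER_GPU, you would therefore need to group your images accordingly. The sketch below is plain Python with hypothetical helper names, and padding the last batch by repeating its final element is just one possible choice.

```python
def effective_batch_size(gpu_count, images_per_gpu):
    """Number of images consumed per forward pass."""
    return gpu_count * images_per_gpu


def make_batches(items, batch_size):
    """Split `items` into lists of exactly `batch_size` elements.

    The last batch is padded by repeating its final element (an
    assumption made for this sketch); results for padded entries
    should be discarded by the caller.
    """
    batches = []
    for start in range(0, len(items), batch_size):
        batch = list(items[start:start + batch_size])
        while len(batch) < batch_size:
            batch.append(batch[-1])
        batches.append(batch)
    return batches


batch_size = effective_batch_size(gpu_count=1, images_per_gpu=2)
batches = make_batches(["a.jpg", "b.jpg", "c.jpg"], batch_size)
print(batches)
```

With the settings used in this post (one GPU, one image per GPU) the batch size is 1, which is why the script passes a single-element list to the model.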

Next, we’ll initialize our config and load our model:

    # initialize the inference configuration
    config = SimpleConfig()

    # initialize the Mask R-CNN model for inference and then load the
    # weights
    print("[INFO] loading Mask R-CNN model...")
    model = modellib.MaskRCNN(mode="inference", config=config,
        model_dir=os.getcwd())
    model.load_weights(args["weights"], by_name=True)

Line 48 instantiates our config.

Then, using our config, Lines 53-55 load our Mask R-CNN model pre-trained on the COCO dataset.

Let’s go ahead and perform instance segmentation:

    # load the input image, convert it from BGR to RGB channel
    # ordering, and resize the image
    image = cv2.imread(args["image"])
    image = cv2.cvtColor(image, cv2.COLOR_BGR2RGB)
    image = imutils.resize(image, width=512)

    # perform a forward pass of the network to obtain the results
    print("[INFO] making predictions with Mask R-CNN...")
    r = model.detect([image], verbose=1)[0]

Lines 59-61 load and preprocess our image. Our model expects images in RGB format, so we use cv2.cvtColor to swap the color channels (in contrast to OpenCV’s default BGR color channel ordering).
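The resize step keeps the aspect ratio: only the target width is given, and the height is scaled by the same ratio and truncated to an integer. A rough sketch of that arithmetic follows. The function name resized_dims is mine, and the 3024x4032 portrait photo is a hypothetical input rather than the size of any image in the “Downloads”.

```python
def resized_dims(height, width, target_width=512):
    """Return (new_height, new_width) after an aspect-preserving resize."""
    # scale factor that maps the original width onto the target width
    ratio = target_width / float(width)
    # the height is scaled by the same factor and truncated to an integer
    return (int(height * ratio), target_width)


# a hypothetical portrait photo, 4032 pixels tall and 3024 pixels wide
print(resized_dims(4032, 3024))  # prints (682, 512)
```

A (682, 512) result lines up with the "image shape: (682, 512, 3)" entries you will see in the terminal output later in this post.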

Line 65 then performs a forward pass of the image through the network to make both object detection and pixel-wise mask predictions.
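Before drawing anything, it helps to know what the results dictionary looks like. In the Matterport implementation, r holds four entries: rois with shape (N, 4) in (y1, x1, y2, x2) order, class_ids and scores with shape (N,), and masks with shape (H, W, N). The sketch below builds a synthetic dictionary with those shapes (no model required) and applies an optional score filter. The filter_detections helper and the 0.5 threshold are assumptions for illustration and are not part of the script.

```python
import numpy as np

# synthetic stand-in for the dictionary returned by model.detect
r = {
    "rois": np.array([[10, 20, 110, 220], [50, 60, 90, 100]]),
    "class_ids": np.array([1, 17]),
    "scores": np.array([0.98, 0.42], dtype="float32"),
    "masks": np.zeros((128, 256, 2), dtype=bool),
}


def filter_detections(r, min_score=0.5):
    """Keep only the detections whose score is at least `min_score`."""
    keep = r["scores"] >= min_score
    return {
        "rois": r["rois"][keep],
        "class_ids": r["class_ids"][keep],
        "scores": r["scores"][keep],
        # detections are stacked along the last axis of the mask array
        "masks": r["masks"][:, :, keep],
    }


filtered = filter_detections(r, min_score=0.5)
print(filtered["rois"].shape, filtered["masks"].shape)
```

The key detail is that the masks are indexed along the last axis, which is why the script uses r["masks"][:, :, i] to pull out the mask for detection i.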

The remaining two code blocks will process the results so that we can visualize the objects’ bounding boxes and masks using OpenCV:

    # loop over the detected object's bounding boxes and masks
    for i in range(0, r["rois"].shape[0]):
        # extract the class ID and mask for the current detection, then
        # grab the color to visualize the mask (in BGR format)
        classID = r["class_ids"][i]
        mask = r["masks"][:, :, i]
        color = COLORS[classID][::-1]

        # visualize the pixel-wise mask of the object
        image = visualize.apply_mask(image, mask, color, alpha=0.5)

In order to visualize the results, we begin by looping over object detections (Line 68). Inside the loop, we:

- Grab the unique classID integer (Line 71).

- Extract the mask for the current detection (Line 72).

- Determine the color used to visualize the mask (Line 73).

- Apply/draw our predicted pixel-wise mask on the object using a semi-transparent alpha channel (Line 76).
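The semi-transparent effect is ordinary alpha blending: inside the mask, each channel becomes a weighted mix of the original pixel and the class color, while pixels outside the mask are left alone. Here is a NumPy sketch of that idea. It is written in the spirit of the apply_mask helper rather than copied from it, the name blend_mask is my own, and this version returns a new array instead of modifying its input.

```python
import numpy as np


def blend_mask(image, mask, color, alpha=0.5):
    """Blend `color` into `image` wherever `mask` is True.

    image: HxWx3 uint8 array
    mask:  HxW boolean array
    color: three floats in [0, 1], in the same channel order as `image`
    """
    output = image.astype("float32")
    for c in range(3):
        output[:, :, c] = np.where(
            mask,
            # weighted mix of the original pixel and the color
            output[:, :, c] * (1 - alpha) + alpha * color[c] * 255,
            output[:, :, c])
    return output.astype("uint8")


# 2x2 gray image with only the top-left pixel inside the mask
image = np.full((2, 2, 3), 100, dtype="uint8")
mask = np.array([[True, False], [False, False]])
blended = blend_mask(image, mask, color=(1.0, 0.0, 0.0), alpha=0.5)
print(blended[0, 0], blended[0, 1])
```

With alpha=0.5 the masked pixel ends up halfway between its original value and the color, so the underlying object stays visible through the overlay.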

From here, we’ll draw bounding boxes and class label + score texts for each object in the image:

    # convert the image back to BGR so we can use OpenCV's drawing
    # functions
    image = cv2.cvtColor(image, cv2.COLOR_RGB2BGR)

    # loop over the predicted scores and class labels
    for i in range(0, len(r["scores"])):
        # extract the bounding box information, class ID, label, predicted
        # probability, and visualization color
        (startY, startX, endY, endX) = r["rois"][i]
        classID = r["class_ids"][i]
        label = CLASS_NAMES[classID]
        score = r["scores"][i]
        color = [int(c) for c in np.array(COLORS[classID]) * 255]

        # draw the bounding box, class label, and score of the object
        cv2.rectangle(image, (startX, startY), (endX, endY), color, 2)
        text = "{}: {:.3f}".format(label, score)
        y = startY - 10 if startY - 10 > 10 else startY + 10
        cv2.putText(image, text, (startX, y), cv2.FONT_HERSHEY_SIMPLEX,
            0.6, color, 2)

    # show the output image
    cv2.imshow("Output", image)
    cv2.waitKey()

Line 80 converts our image back to BGR (OpenCV’s default color channel ordering).

On Line 83 we begin looping over objects. Inside the loop, we:

- Extract the bounding box coordinates, classID, label, and score (Lines 86-89).

- Compute the color for the bounding box and text (Line 90).

- Draw each bounding box (Line 93).

- Concatenate the class/probability text (Line 94) and then draw it at the top of the image (Lines 95-97).

Once the process is complete, the resulting output image is displayed to the screen until a key is pressed (Lines 100-101).
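Two small details in this block are easy to trip over: the boxes arrive in (startY, startX, endY, endX) order while OpenCV's drawing functions take (x, y) points, and the label is placed 10 pixels above the box unless that would push it off the top of the image. The sketch below isolates both pieces of logic as plain functions so you can test them without OpenCV. The function names and the margin parameter are my own.

```python
def box_to_points(roi):
    """Convert a (startY, startX, endY, endX) box into two (x, y) corners."""
    (startY, startX, endY, endX) = roi
    return ((int(startX), int(startY)), (int(endX), int(endY)))


def label_origin(startX, startY, margin=10):
    """Pick where to draw the label text for a box.

    The text goes `margin` pixels above the top edge of the box, unless
    that would land too close to the top of the image, in which case it
    is drawn `margin` pixels below the top edge instead.
    """
    y = startY - margin if startY - margin > margin else startY + margin
    return (int(startX), int(y))


print(box_to_points((10, 20, 110, 220)))  # prints ((20, 10), (220, 110))
print(label_origin(20, 100))              # prints (20, 90)
print(label_origin(20, 15))               # prints (20, 25)
```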

Mask R-CNN and Keras results

Now that our Mask R-CNN script has been implemented, let’s give it a try.

Make sure you have used the “Downloads” section of this tutorial to download the source code.

You will need to understand command line arguments to run the code. If they are unfamiliar to you, read up on argparse and command line arguments before you try to execute the code.

When you’re ready, open up a terminal and execute the following command:

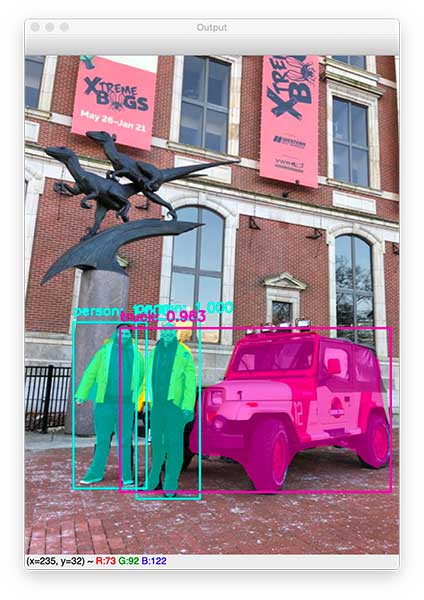

    $ python maskrcnn_predict.py --weights mask_rcnn_coco.h5 --labels coco_labels.txt \
        --image images/30th_birthday.jpg
    Using TensorFlow backend.
    [INFO] loading Mask R-CNN model...
    [INFO] making predictions with Mask R-CNN...
    Processing 1 images
    image shape: (682, 512, 3) min: 0.00000 max: 255.00000 uint8
    molded_images shape: (1, 1024, 1024, 3) min: -123.70000 max: 151.10000 float64
    image_metas shape: (1, 93) min: 0.00000 max: 1024.00000 float64
    anchors shape: (1, 261888, 4) min: -0.35390 max: 1.29134 float32

For my 30th birthday, my wife found a person to drive us around Philadelphia in a replica Jurassic Park jeep — here my best friend and I are outside The Academy of Natural Sciences.

Notice how we get not only a bounding box for each object (i.e., both people and the jeep), but also a pixel-wise mask!

Let’s give another image a try:

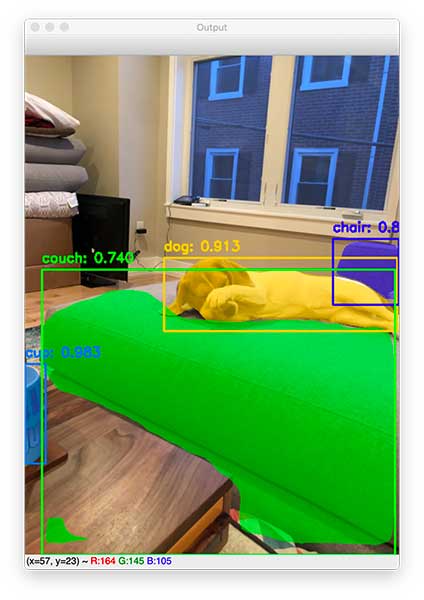

    $ python maskrcnn_predict.py --weights mask_rcnn_coco.h5 --labels coco_labels.txt \
        --image images/couch.jpg
    Using TensorFlow backend.
    [INFO] loading Mask R-CNN model...
    [INFO] making predictions with Mask R-CNN...
    Processing 1 images
    image shape: (682, 512, 3) min: 0.00000 max: 255.00000 uint8
    molded_images shape: (1, 1024, 1024, 3) min: -123.70000 max: 151.10000 float64
    image_metas shape: (1, 93) min: 0.00000 max: 1024.00000 float64
    anchors shape: (1, 261888, 4) min: -0.35390 max: 1.29134 float32

Here is a super adorable photo of my dog, Janie, lying on the couch:

- Despite the vast majority of the couch not being visible, the Mask R-CNN is still able to label it as such.

- The Mask R-CNN is correctly able to label the dog in the image.

- And even though my coffee cup is barely visible, Mask R-CNN is able to label the cup as well (if you look really closely you’ll see that my coffee cup is a Jurassic Park mug!)

The only part of the image that Mask R-CNN is not able to correctly label is the back part of the couch, which it mistakes for a chair. Looking at the image closely, you can see how Mask R-CNN made the mistake (the region does look quite chair-like versus being part of the couch).

Here’s another example of using Keras + Mask R-CNN for instance segmentation:

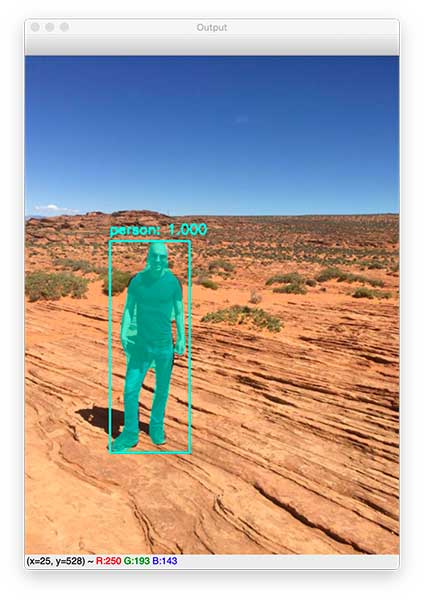

    $ python maskrcnn_predict.py --weights mask_rcnn_coco.h5 --labels coco_labels.txt \
        --image images/page_az.jpg
    Using TensorFlow backend.
    [INFO] loading Mask R-CNN model...
    [INFO] making predictions with Mask R-CNN...
    Processing 1 images
    image shape: (682, 512, 3) min: 0.00000 max: 255.00000 uint8
    molded_images shape: (1, 1024, 1024, 3) min: -123.70000 max: 149.10000 float64
    image_metas shape: (1, 93) min: 0.00000 max: 1024.00000 float64
    anchors shape: (1, 261888, 4) min: -0.35390 max: 1.29134 float32

A few years ago, my wife and I made a trip out to Page, AZ (this particular photo was taken just outside Horseshoe Bend) — you can see how the Mask R-CNN has not only detected me but also constructed a pixel-wise mask for my body.

Let’s apply Mask R-CNN to one final image:

    $ python maskrcnn_predict.py --weights mask_rcnn_coco.h5 --labels coco_labels.txt \
        --image images/ybor_city.jpg
    Using TensorFlow backend.
    [INFO] loading Mask R-CNN model...
    [INFO] making predictions with Mask R-CNN...
    Processing 1 images
    image shape: (688, 512, 3) min: 5.00000 max: 255.00000 uint8
    molded_images shape: (1, 1024, 1024, 3) min: -123.70000 max: 151.10000 float64
    image_metas shape: (1, 93) min: 0.00000 max: 1024.00000 float64
    anchors shape: (1, 261888, 4) min: -0.35390 max: 1.29134 float32

One of my favorite cities to visit in the United States is Ybor City. There’s just something I like about the area (and perhaps it’s that the roosters are protected in the city and free to roam around).

Here you can see me and such a rooster — notice how each of us is correctly labeled and segmented by the Mask R-CNN. You’ll also notice that the Mask R-CNN model was able to localize each of the individual cars and label the bus!

Can Mask R-CNN run in real-time?

At this point you’re probably wondering if it’s possible to run Keras + Mask R-CNN in real-time, right?

As you know from “The History of Mask R-CNN” section above, Mask R-CNN is based on the Faster R-CNN object detector.

Faster R-CNNs are incredibly computationally expensive, and when you add instance segmentation on top of object detection, the model only becomes more computationally expensive, therefore:

- On a CPU, a Mask R-CNN cannot run in real-time.

- But on a GPU, Mask R-CNN can get up to 5-8 FPS.

If you would like to run Mask R-CNN in semi-real-time, you will need a GPU.
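Throughput depends heavily on your hardware, so it is worth measuring on your own machine. Below is a minimal timing sketch: it calls a function a few times and reports calls per second. The measure_fps helper is hypothetical, and the demo times a short sleep as a stand-in. In the real script you would pass something like lambda: model.detect([image], verbose=0) instead.

```python
import time


def measure_fps(fn, num_runs=10, warmup=1):
    """Call `fn` repeatedly and return the average calls per second."""
    # warm-up calls are excluded from timing, since the first forward
    # pass of a network is often slower than the ones that follow
    for _ in range(warmup):
        fn()

    start = time.perf_counter()
    for _ in range(num_runs):
        fn()
    elapsed = time.perf_counter() - start

    # guard against a zero interval on very coarse clocks
    if elapsed <= 0:
        return float("inf")
    return num_runs / elapsed


# stand-in workload: each "frame" takes roughly 10 milliseconds
fps = measure_fps(lambda: time.sleep(0.01), num_runs=5)
print("[INFO] approx. FPS: {:.2f}".format(fps))
```

Keep in mind that this measures only the function you pass in, so image loading, resizing, and drawing would add to the per-frame cost in a full pipeline.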


Summary

In this tutorial, you learned how to use Keras + Mask R-CNN to perform instance segmentation.

Unlike object detection, which only gives you the bounding box (x, y)-coordinates for an object in an image, instance segmentation takes it a step further, yielding pixel-wise masks for each object.

Using instance segmentation we can actually segment an object from an image.

To perform instance segmentation we used the Matterport Keras + Mask R-CNN implementation.

We then created a Python script that:

- Constructed a configuration class for Mask R-CNN (both with and without a GPU).

- Loaded the Keras + Mask R-CNN architecture from disk

- Preprocessed our input image

- Detected objects/masks in the image

- Visualized the results

If you are interested in how to:

- Label and annotate your own custom image dataset

- And then train a Mask R-CNN model on top of your annotated dataset…

…then you’ll want to take a look at my book, Deep Learning for Computer Vision with Python, where I cover Mask R-CNN and annotation in detail.

I hope you enjoyed today’s post!

To download the source code (including the pre-trained Keras + Mask R-CNN model), just enter your email address in the form below! I’ll be sure to let you know when future tutorials are published here on PyImageSearch.
