The Ubuntu VirtualBox virtual machine that comes with my book, Deep Learning for Computer Vision with Python, includes all the necessary deep learning and computer vision libraries you need (such as Keras, TensorFlow, scikit-learn, scikit-image, OpenCV, etc.) pre-installed.

However, while the deep learning virtual machine is easy to use, it also has a number of drawbacks, including:

- Being significantly slower than executing instructions on your native machine.

- Being unable to access your GPU (and other peripherals attached to your host).

What the virtual machine has in convenience you end up paying for in performance. This makes it a great option for readers who are getting their feet wet, but if you want to dramatically boost speed while still maintaining the pre-configured environment, you should consider using Amazon Web Services (AWS) and my pre-built deep learning Amazon Machine Image (AMI).

Using the steps outlined in this tutorial you’ll learn how to log in to (or create) your AWS account, spin up a new instance (with or without a GPU), and launch my pre-configured deep learning image. This will enable you to enjoy the pre-built deep learning environment without sacrificing speed.

(2019-01-07) Release v2.1 of DL4CV: AMI version 2.1 is released with more environments to accompany bonus chapters of my deep learning book.

To learn how to use my deep learning AMI, just keep reading.

Pre-configured Amazon AWS deep learning AMI with Python

In this tutorial I will show you how to:

- Log in to (or create) your AWS account.

- Launch my pre-configured deep learning AMI.

- Log in to the server and execute your code.

- Stop the machine when you are done.

However, before we get too far I want to mention that:

- The deep learning AMI is Linux-based so I would recommend having some basic knowledge of Unix environments, especially the command line.

- AWS is not free and is billed at an hourly rate. Exactly how much the hourly rate is depends on which machine you choose to spin up (no GPU, one GPU, eight GPUs, etc.). For less than $1/hour you can use a machine with a GPU, which will dramatically speed up the training of deep neural networks. You pay only for the time the machine is running, and you can shut down your machine when you are done.

Step #1: Setup Amazon Web Services (AWS) account

In order to launch my pre-configured deep learning AMI you first need an Amazon Web Services account.

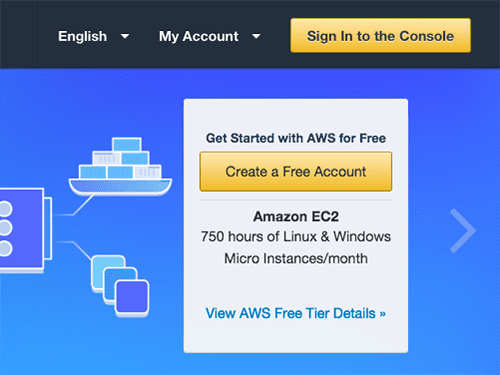

To start, head to the Amazon Web Services homepage and click the “Sign In to the Console” link:

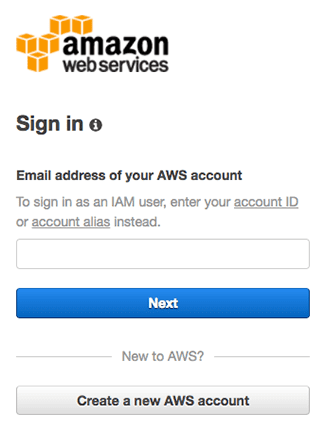

If you already have an account you can log in using your email address and password. Otherwise you will need to click the “Create a new AWS account” button and create your account:

I would encourage you to use an existing Amazon.com login as this will expedite the process.

Step #2: Select and launch your deep learning AWS instance

You are now ready to launch your pre-configured deep learning AWS instance.

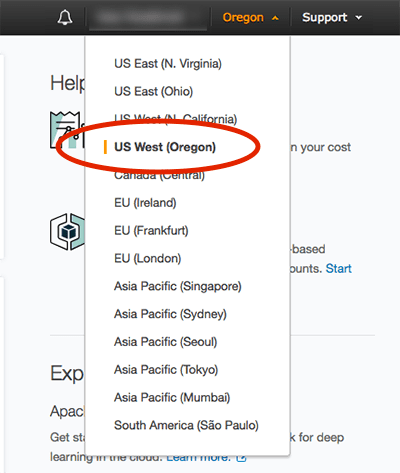

First, you should set your region/zone to “US West (Oregon)”. I created the deep learning AMI in the Oregon region so you’ll need to be in this region to find it, launch it, and access it:

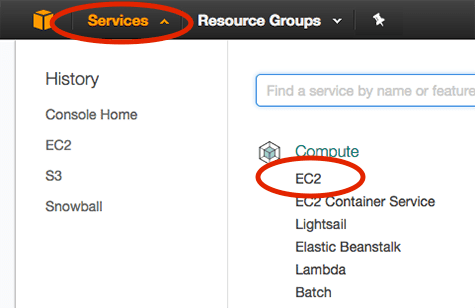

After you have set your region to Oregon, click the “Services” tab and then select “EC2” (Elastic Compute Cloud):

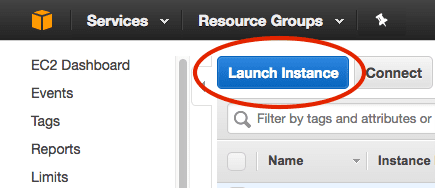

From there you should click the “Launch Instance” button:

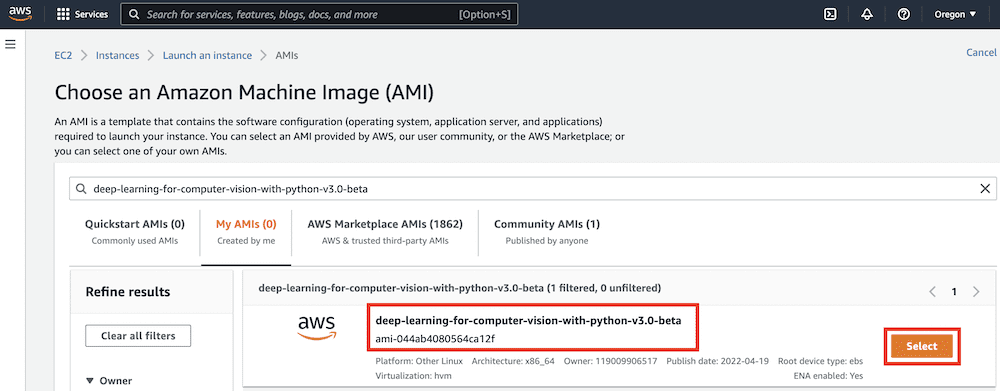

Then select “Community AMIs” and search for “deep-learning-for-computer-vision-with-python-v2.1 – ami-089c8796ad90c7807”:

Click “Select” next to the AMI.

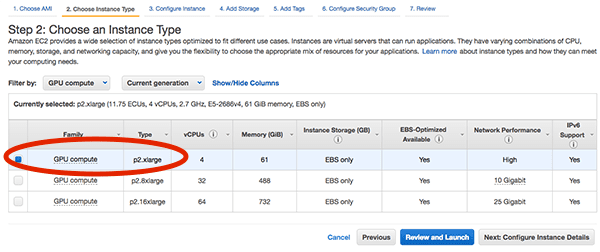

You are now ready to select your instance type. Amazon provides a huge number of virtual servers that are designed to run a wide array of applications. These instances have varying amounts of CPU power, storage, network capacity, and GPUs, so you should consider:

- What type of machine you would like to launch.

- Your particular budget.

GPU instances tend to cost much more than standard CPU instances. However, they can train deep neural networks in a fraction of the time. When you average out the amount of time it takes to train a network on a CPU versus on a GPU you may realize that using the GPU instance will save you money.

For CPU instances I recommend you use the “Compute optimized” c4.* instances. In particular, the c4.xlarge instance is a good option to get your feet wet.

If you would like to use a GPU, I would highly recommend the “GPU compute” instances. The p2.xlarge instance has a single NVIDIA K80 (12GB of memory).

The p2.8xlarge sports 8 GPUs, while the p2.16xlarge has 16 GPUs.

I have included the pricing (at the time of this writing) for each of the instances below:

- c4.xlarge: $0.199/hour

- p2.xlarge: $0.90/hour

- p2.8xlarge: $7.20/hour

- p2.16xlarge: $14.40/hour

As you can see, the GPU instances are much more expensive per hour; however, you are able to train networks in a fraction of the time, which can make them the more economical option overall. Because of this I recommend using the p2.xlarge instance if this is your first time using a GPU for deep learning.
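To make the cost trade-off concrete, here is a minimal sketch that compares the hourly prices listed above. The 10-hour job and the 10x GPU speedup are hypothetical numbers chosen purely for illustration; actual speedups depend on your network and dataset, and AWS prices change over time.

```python
# Hourly prices quoted in this post (USD); AWS pricing changes over time.
HOURLY_PRICE = {
    "c4.xlarge": 0.199,
    "p2.xlarge": 0.90,
    "p2.8xlarge": 7.20,
    "p2.16xlarge": 14.40,
}


def training_cost(instance_type, hours):
    """Return the cost in USD of running `instance_type` for `hours`."""
    return HOURLY_PRICE[instance_type] * hours


def break_even_speedup(gpu_type, cpu_type="c4.xlarge"):
    """Speedup a GPU instance needs over the CPU instance to cost the same."""
    return HOURLY_PRICE[gpu_type] / HOURLY_PRICE[cpu_type]


# Hypothetical job: 10 hours on the CPU instance, assumed 10x faster on one GPU.
cpu_hours = 10.0
assumed_speedup = 10.0
print(f"CPU cost: ${training_cost('c4.xlarge', cpu_hours):.2f}")
print(f"GPU cost: ${training_cost('p2.xlarge', cpu_hours / assumed_speedup):.2f}")
print(f"Break-even speedup: {break_even_speedup('p2.xlarge'):.2f}x")
```

At these prices, the p2.xlarge only needs to be roughly 4.5 times faster than the c4.xlarge for the total bill to come out the same.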

In the example screenshot below you can see that I have chosen the p2.xlarge instance:

(2019-01-07) Release v2.1 of DL4CV: AWS currently has their p2 instances under “GPU instances” rather than “GPU compute”.

Next, I can click “Review and Launch” followed by “Launch” to boot my instance.

After clicking “Launch” you’ll be prompted to select your key pair or create a new key pair:

If you have an existing key pair you can select “Choose an existing key pair” from the drop down. Otherwise you’ll need to select “Create a new key pair” and then download the pair. The key pair is used to log in to your AWS instance.

After acknowledging and accepting the login note from Amazon your instance will start to boot. Scroll down to the bottom of the page and click “View Instances”. It will take a minute or so for your instance to boot.

Once the instance is online you’ll see the “Instance State” column change to “running” for the instance.

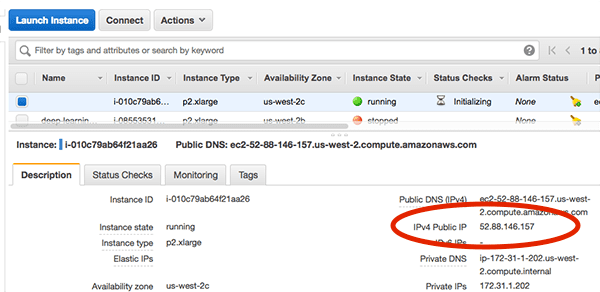

Select it and you’ll be able to view information on the instance, including the IP address:

Here you can see that my IP address is 52.88.146.157. Your IP address will be different.

Fire up a terminal and you can SSH into your AWS instance:

$ ssh -i EC2KeyPair.pem ubuntu@52.88.146.157

You’ll want to update the command above to:

- Use the filename you created for the key pair.

- Use the IP address of your instance.
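If you reconnect often, the two substitutions above can be wrapped in a small helper. This is only a sketch: `build_ssh_command` is a hypothetical name of my own choosing, and the key file and IP address are the placeholder values from this post.

```python
import shlex


def build_ssh_command(key_path, ip_address, user="ubuntu"):
    """Return the ssh command as an argument list for the given key and host."""
    return ["ssh", "-i", key_path, f"{user}@{ip_address}"]


# Placeholder values: substitute your own key file and instance IP.
command = build_ssh_command("EC2KeyPair.pem", "52.88.146.157")
print(shlex.join(command))
```

The list form can be passed directly to `subprocess.run(command)` if you would rather launch the session from Python. If ssh complains that the key file permissions are too open, restricting the file with `chmod 400` is the usual fix.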

Step #3: (GPU only & only for AMI version 1.0 and 1.2) Re-install NVIDIA deep learning driver

(2019-01-07) Release v2.1 of DL4CV: This step is not required for AMI version 2.1. Neither a driver update nor a reboot is required. Just launch and go. However, take note of the nvidia-smi command below as it is useful to verify driver operation.

If you selected a GPU instance you will need to:

- Reboot your AMI via the command line

- Reinstall the NVIDIA driver

These two steps are needed because instances launched from a pre-configured AMI can potentially restart with a slightly different kernel, causing the Nouveau (default) driver to be loaded instead of the NVIDIA driver.

To avoid this situation you can either:

- Reboot your system now, essentially “locking in” the current kernel, and then reinstall the NVIDIA driver once.

- Reinstall the NVIDIA driver each time you launch/reboot your instance from the AWS admin.

Both methods have their pros and cons, but I would recommend the first one.

To start, reboot your instance via the command line:

$ sudo reboot

Your SSH connection will terminate during the reboot process.

Once the instance has rebooted, re-SSH into the instance, and reinstall the NVIDIA kernel drivers. Luckily this is easy as I have included the driver file in the home directory of the instance.

If you list the contents of the installers directory you’ll see three files:

$ ls -l installers/

total 1435300

-rwxr-xr-x 1 root root 1292835953 Sep 6 14:03 cuda-linux64-rel-8.0.61-21551265.run

-rwxr-xr-x 1 root root 101033340 Sep 6 14:03 cuda-samples-linux-8.0.61-21551265.run

-rwxr-xr-x 1 root root 75869960 Sep 6 14:03 NVIDIA-Linux-x86_64-375.26.run

Change directory into installers and then execute the following command:

$ cd installers

$ sudo ./NVIDIA-Linux-x86_64-375.26.run --silent

Follow the prompts on screen (including overwriting any existing NVIDIA driver files) and your NVIDIA deep learning driver will be installed.

You can validate the NVIDIA driver installed successfully by running the nvidia-smi command:

$ nvidia-smi

Wed Sep 13 12:51:43 2017

+-----------------------------------------------------------------------------+

| NVIDIA-SMI 375.26 Driver Version: 375.26 |

|-------------------------------+----------------------+----------------------+

| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

|===============================+======================+======================|

| 0 Tesla K80 Off | 0000:00:1E.0 Off | 0 |

| N/A 43C P0 59W / 149W | 0MiB / 11439MiB | 97% Default |

+-------------------------------+----------------------+----------------------+

+-----------------------------------------------------------------------------+

| Processes: GPU Memory |

| GPU PID Type Process name Usage |

|=============================================================================|

| No running processes found |

+-----------------------------------------------------------------------------+
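The same driver check can be scripted from Python, which is handy at the top of a training script. The sketch below assumes `nvidia-smi` is on your `PATH` and uses its `--query-gpu` and `--format=csv,noheader` options; the helper names `parse_gpu_csv` and `list_gpus` are my own for this example.

```python
import shutil
import subprocess

QUERY = ["nvidia-smi", "--query-gpu=name,driver_version,memory.total",
         "--format=csv,noheader"]


def parse_gpu_csv(text):
    """Parse `nvidia-smi --format=csv,noheader` output into a list of dicts."""
    gpus = []
    for line in text.strip().splitlines():
        name, driver, memory = [field.strip() for field in line.split(",")]
        gpus.append({"name": name, "driver": driver, "memory": memory})
    return gpus


def list_gpus():
    """Return GPU info, or an empty list if nvidia-smi is missing or fails."""
    if shutil.which("nvidia-smi") is None:
        return []
    result = subprocess.run(QUERY, capture_output=True, text=True)
    if result.returncode != 0:
        return []
    return parse_gpu_csv(result.stdout)


print(list_gpus() or "No NVIDIA GPU/driver detected")
```

An empty result on a GPU instance suggests the NVIDIA driver is not loaded, which is the situation this step is meant to fix.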

Step #4: Access deep learning Python virtual environments on AWS

(2019-01-07) Release v2.1 of DL4CV: Version 2.1 of the AMI has the following environments: dl4cv, mxnet, tfod_api, retinanet, mask_rcnn. Ensure that you’re working in the correct environment that corresponds to the DL4CV book chapter you’re studying. Additionally, be sure to refer to the DL4CV companion website for more information on these virtual environments.

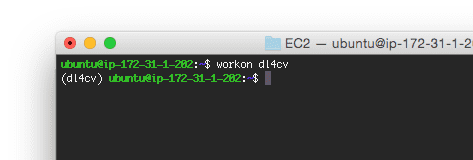

You can access our deep learning and computer vision libraries by using the workon dl4cv command to enter the Python virtual environment:

Notice that my prompt now has the text (dl4cv) preceding it, implying that I am inside the dl4cv Python virtual environment.

You can run pip freeze to see all the Python libraries installed.

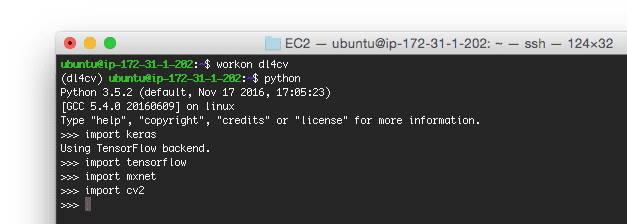

I have included a screenshot below demonstrating how to import Keras, TensorFlow, mxnet, and OpenCV from a Python shell:

If you run into an error importing mxnet, simply recompile it:

$ cd ~/mxnet

$ make -j4 USE_OPENCV=1 USE_BLAS=openblas USE_CUDA=1 USE_CUDA_PATH=/usr/local/cuda USE_CUDNN=1

This is due to the NVIDIA kernel driver issue I mentioned in Step #3. You only need to recompile mxnet once, and only if you receive an error at import.
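If you would rather test the imports in one pass than type them into a Python shell, a short script along these lines may help. It is a sketch rather than part of the AMI: `check_imports` is a hypothetical helper, and I am assuming the usual import names (`keras`, `tensorflow`, `mxnet`, and `cv2` for OpenCV). Run it inside the appropriate virtual environment.

```python
import importlib


def check_imports(module_names):
    """Try importing each module; map name -> version string or error text."""
    report = {}
    for name in module_names:
        try:
            module = importlib.import_module(name)
            report[name] = getattr(module, "__version__", "unknown version")
        except Exception as error:  # ImportError, or a driver/library load failure
            report[name] = f"FAILED: {error}"
    return report


# Import names assumed: keras, tensorflow, mxnet, and cv2 (OpenCV).
for name, status in check_imports(["keras", "tensorflow", "mxnet", "cv2"]).items():
    print(f"{name}: {status}")
```

A `FAILED` entry for mxnet is the cue to recompile it as described above.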

The code + datasets to Deep Learning for Computer Vision with Python are not included on the pre-configured AMI by default (as the AMI is publicly available and can be used for tasks other than reading through Deep Learning for Computer Vision with Python).

To upload the code from the book on your local system to the AMI I would recommend using the scp command:

$ scp -i EC2KeyPair.pem ~/Desktop/sb_code.zip ubuntu@52.88.146.157:~

Here I am specifying:

- The path to the .zip file of the Deep Learning for Computer Vision with Python code + datasets.

- The IP address of my Amazon instance.

From there the .zip file is uploaded to my home directory.

You can then unzip the archive and execute the code:

$ unzip sb_code.zip

$ cd sb_code/chapter12-first_cnn/

$ workon dl4cv

$ python shallownet_animals.py --dataset ../datasets/animals

Using TensorFlow backend.

[INFO] loading images...

...

Epoch 100/100

2250/2250 [==============================] - 0s - loss: 0.3429 - acc: 0.8800 - val_loss: 0.7278 - val_acc: 0.6720

[INFO] evaluating network...

precision recall f1-score support

cat 0.67 0.52 0.58 262

dog 0.59 0.64 0.62 249

panda 0.75 0.87 0.81 239

avg / total 0.67 0.67 0.67 750

Step #5: Stop your deep learning AWS instance

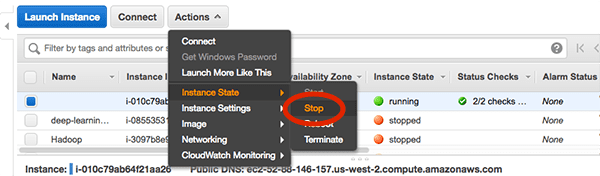

Once you are finished working with your AMI head back to the “Instances” menu item on your EC2 dashboard and select your instance.

With your instance selected click “Actions => Instance State => Stop”:

This process will shutdown your deep learning instance (and you will no longer be billed hourly for it).

If you wanted to instead delete the instance you would select “Terminate”. Deleting an instance destroys all of your data, so be sure you’ve put your trained models back on your laptop if needed. Terminating an instance also stops you from incurring any further charges for the instance.
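Stopping and terminating can also be done programmatically. The sketch below assumes the `boto3` library (not mentioned in this post) and configured AWS credentials; the two helper names and the instance ID are hypothetical. It relies on the EC2 client's `stop_instances` and `terminate_instances` calls, with the client passed in as an argument so the helpers themselves stay easy to test.

```python
def stop_instance(ec2_client, instance_id):
    """Ask EC2 to stop the instance and return its reported new state."""
    response = ec2_client.stop_instances(InstanceIds=[instance_id])
    return response["StoppingInstances"][0]["CurrentState"]["Name"]


def terminate_instance(ec2_client, instance_id):
    """Ask EC2 to terminate (delete) the instance; its data is destroyed."""
    response = ec2_client.terminate_instances(InstanceIds=[instance_id])
    return response["TerminatingInstances"][0]["CurrentState"]["Name"]


# Usage (requires `pip install boto3` and configured AWS credentials):
#   import boto3
#   ec2 = boto3.client("ec2", region_name="us-west-2")  # US West (Oregon)
#   print(stop_instance(ec2, "i-0123456789abcdef0"))    # hypothetical instance ID
```

As with the console, prefer stopping over terminating unless you have already copied your trained models off the machine.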

Troubleshooting and FAQ

In this section I detail answers to frequently asked questions and problems regarding the pre-configured deep learning AMI.

How do I execute code from Deep Learning for Computer Vision with Python from the deep learning AMI?

Please see the “Step #4: Access deep learning Python virtual environments on AWS” section above. The gist is that you will upload a .zip of the code to your AMI via the scp command. An example command can be seen below:

$ scp -i EC2KeyPair.pem path/to/code.zip ubuntu@your_aws_ip_address:~

Can I use a GUI/window manager with my deep learning AMI?

No, the AMI is terminal only. I would suggest using the deep learning AMI if you are:

- Comfortable with Unix environments.

- Have experience using the terminal.

Otherwise I would recommend the deep learning virtual machine that is part of Deep Learning for Computer Vision with Python instead.

That said, it is possible to use X11 forwarding with the AMI. When you SSH into the machine, just provide the -X flag like this:

$ ssh -X -i EC2KeyPair.pem ubuntu@52.88.146.157

How can I use a GPU instance for deep learning?

Please see the “Step #2: Select and launch your deep learning AWS instance” section above. When selecting your Amazon EC2 instance choose a p2.* (i.e., “GPU compute” or “GPU instances”) instance. These instances have one, eight, and sixteen GPUs, respectively.

Summary

In today’s blog post you learned how to use my pre-configured AMI for deep learning in the Amazon Web Services ecosystem.

The benefit of using my AMI over the pre-configured virtual machine is that:

- Amazon Web Services and the Elastic Compute Cloud ecosystem give you a huge range of systems to choose from, including CPU-only, single GPU, and multi-GPU.

- You can scale your deep learning environment to multiple machines.

- You retain the ability to use pre-configured deep learning environments but still get the benefit of added speed via dedicated hardware.

The downside is that AWS:

- Costs money (typically an hourly rate).

- Can be daunting for those who are new to Unix environments.

After you have gotten your feet wet with deep learning using my virtual machine I would highly recommend that you try AWS out as well — you’ll find that the added speed improvements are worth the extra cost.

To learn more, take a look at my new book, Deep Learning for Computer Vision with Python.
