Have you ever had a horrible case of eyelid twitching? One where your eyelid just won’t stop spazzing out, no matter what you do?

That’s currently what’s going on with me — and it’s been going on for over two weeks. As you can imagine, I’m not exactly happy.

However, to combat this persistent, often distracting nuisance, I’ve started sleeping more regularly, drinking substantially less caffeine (I was only drinking two cups of coffee a day to begin with), and consuming next to no alcohol. Which is a real challenge when you enjoy a frothy beer as much as I do.

More sleep has certainly helped. And I don’t miss the occasional beer.

But man, these caffeine headaches are the worst.

Luckily, I woke up this morning to an email that practically cured me of my caffeine-free woes and interminable eyelid twitching.

Opening my inbox, I found an excellent question from dedicated PyImageSearch reader Sriharsha Annamaneni, who wrote in and asked:

Hi Adrian,

In one of Jitendra Malik’s YouTube videos, he explains superpixels. I did not understand it completely. Can you explain it on your website?

Thank you,

Sriharsha Annamaneni

Great question, Sriharsha. I’d be happy to discuss superpixels, and I’m sure a ton of other PyImageSearch readers would be interested too.

But before we dive into some code, let’s discuss what exactly a superpixel is and why it has important applications in the computer vision domain.

OpenCV and Python versions:

This example will run on Python 2.7/Python 3.4+ and OpenCV 2.4.X/OpenCV 3.0+.

So, What’s a Superpixel?

Take a second, clear your mind, and consider how we represent images.

Images are represented as a grid of pixels, in either single or multiple channels.

We take these M x N pixel grids and then apply algorithms to them, such as face detection and recognition, template matching, and even deep learning applied directly to the raw pixel intensities.
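
To make the pixel grid idea concrete, here is a minimal NumPy sketch. The array sizes and pixel values are made up for illustration and are not taken from the example image in this post:

```python
import numpy as np

# a hypothetical 4 x 6 grayscale image: one intensity value per pixel
gray = np.zeros((4, 6), dtype="uint8")

# a hypothetical 4 x 6 RGB image: three channel values per pixel
rgb = np.zeros((4, 6, 3), dtype="uint8")

# set the pixel at row 1, column 2 to pure red
rgb[1, 2] = (255, 0, 0)

print(gray.shape)  # (M, N)
print(rgb.shape)   # (M, N, channels)
print(rgb[1, 2])   # a single pixel is nothing more than three numbers
```

Taken on its own, that last pixel tells us very little about what the image actually contains.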

The problem is that our pixel grid is by no means a natural representation of an image.

For example, consider the image below:

If I were to take a single pixel from the image on the left (highlighted by the red arrow) and then showed it to you on the right, could you reasonably tell me that (1) that pixel came from a Velociraptor and (2) that single pixel actually holds some sort of semantic meaning?

Unless you really, really want to play devil’s advocate, the answer is a resounding no. A single pixel, standing alone by itself, is not a natural representation of an image.

Taken as a whole, this grouping of pixels as a pixel grid is simply an artifact of an image — part of the process of capturing and creating digital images.

That leads us to superpixels.

Intuitively, it would make more sense to explore both the perceptual and the semantic meaning of an image, and that meaning emerges when we group pixels locally.

When we perform this type of local grouping of pixels on our pixel grid, we arrive at superpixels.

These superpixels carry more perceptual and semantic meaning than their simple pixel grid counterparts.

Benefits of Superpixels

To summarize the work of Dr. Xiaofeng Ren, we can see that a mapping from pixel grids to superpixels would (ideally) hold the desirable properties of:

- Computational efficiency: While it may be (somewhat) computationally expensive to compute the actual superpixel groupings, it allows us to reduce the complexity of the images themselves from hundreds of thousands of pixels to only a few hundred superpixels. Each of these superpixels will then contain some sort of perceptual, and ideally, semantic value.

- Perceptual meaningfulness: Instead of only examining a single pixel in a pixel grid, which carries very little perceptual meaning, pixels that belong to a superpixel group share some sort of commonality, such as similar color or texture distribution.

- Oversegmentation: Most superpixel algorithms oversegment the image. This means that most of the important boundaries in the image are found, but at the expense of generating many insignificant boundaries. While this may sound like a problem or a deterrent to using superpixels, it’s actually a positive: the end product of this oversegmentation is that very few (or no) pixels are lost in the mapping from pixel grid to superpixels.

- Graphs over superpixels: Dr. Ren refers to this concept as “representationally efficient”. To make this more concrete, imagine constructing a graph over a 50,000 x 50,000 pixel grid, where each pixel represents a node in the graph. That leads to a very large representation. However, suppose we instead applied superpixels to the pixel grid space, leaving us with an (arbitrary) 200 superpixels. In this representation, constructing a graph over the 200 superpixels is substantially more efficient.
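
To illustrate the efficiency and graph points above, here is a small NumPy-only sketch. The helper names `superpixel_mean_colors` and `superpixel_adjacency` are hypothetical (they are not part of scikit-image), and the 4 x 4 label map is hand-made rather than the output of a real superpixel algorithm:

```python
import numpy as np


def superpixel_mean_colors(image, labels):
    """Return an array of shape (num_superpixels, channels) of mean colors."""
    # remap arbitrary label values to 0..K-1 so they can index an array
    _, flat = np.unique(labels.ravel(), return_inverse=True)
    counts = np.bincount(flat)
    pixels = image.reshape(-1, image.shape[-1])
    sums = np.stack([np.bincount(flat, weights=pixels[:, c])
                     for c in range(pixels.shape[1])], axis=1)
    return sums / counts[:, None]


def superpixel_adjacency(labels):
    """Return the set of (a, b) label pairs, a < b, that share a border."""
    edges = set()
    # compare each pixel with its right neighbor, then its lower neighbor
    for a, b in ((labels[:, :-1], labels[:, 1:]),
                 (labels[:-1, :], labels[1:, :])):
        touching = a != b
        pairs = zip(a[touching].tolist(), b[touching].tolist())
        edges.update((min(p, q), max(p, q)) for p, q in pairs)
    return edges


# toy 4 x 4 "image" split into three hand-made superpixels
labels = np.array([[1, 1, 2, 2],
                   [1, 1, 2, 2],
                   [3, 3, 3, 3],
                   [3, 3, 3, 3]])
image = np.zeros((4, 4, 3))
image[labels == 1] = (1.0, 0.0, 0.0)
image[labels == 2] = (0.0, 1.0, 0.0)
image[labels == 3] = (0.0, 0.0, 1.0)

mean_colors = superpixel_mean_colors(image, labels)
edges = superpixel_adjacency(labels)

print(mean_colors)    # 3 rows instead of 16 pixels
print(sorted(edges))  # a graph with 3 nodes instead of 16
```

The same idea scales up: a graph built over a few hundred superpixel labels has far fewer nodes and edges than one built over every pixel.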

Example: Simple Linear Iterative Clustering (SLIC)

As always, a PyImageSearch blog post wouldn’t be complete without an example and some code.

Ready?

Open up your favorite editor, create slic.py, and let’s get coding:

```python
# import the necessary packages
from skimage.segmentation import slic
from skimage.segmentation import mark_boundaries
from skimage.util import img_as_float
from skimage import io
import matplotlib.pyplot as plt
import argparse

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-i", "--image", required=True, help="Path to the image")
args = vars(ap.parse_args())

# load the image and convert it to a floating point data type
image = img_as_float(io.imread(args["image"]))

# loop over the number of segments
for numSegments in (100, 200, 300):
    # apply SLIC and extract (approximately) the supplied number
    # of segments
    segments = slic(image, n_segments=numSegments, sigma=5)

    # show the output of SLIC
    fig = plt.figure("Superpixels -- %d segments" % (numSegments))
    ax = fig.add_subplot(1, 1, 1)
    ax.imshow(mark_boundaries(image, segments))
    plt.axis("off")

# show the plots
plt.show()
```

On Lines 2-7 we import the packages we’ll be using for this example. To perform the SLIC superpixel segmentation, we will be using the scikit-image implementation, which we import on Line 2. To draw the actual superpixel segmentations, scikit-image provides us with a mark_boundaries function, which we import on Line 3.

From there, we import a utility function, img_as_float, on Line 4. As the name suggests, it converts an image from an unsigned 8-bit integer to a floating point data type, with all pixel values scaled to the range [0, 1].
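
Here is a quick sketch of what that conversion does, using a tiny made-up array in place of a real image:

```python
import numpy as np
from skimage.util import img_as_float

# three hypothetical 8-bit pixel intensities: black, mid-gray, white
pixels = np.array([[0, 128, 255]], dtype="uint8")

# rescale from the integer range [0, 255] to the float range [0, 1]
scaled = img_as_float(pixels)

print(pixels.dtype, "->", scaled.dtype)
print(scaled)
```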

Line 5 imports the io sub-package of scikit-image which is used for loading and saving images.

We’ll also make use of matplotlib to plot our results and argparse to parse our command line arguments.

Lines 10-12 handle parsing our command line arguments. We need only a single switch, --image, which is the path to where our image resides on disk.

We then load this image and convert it from an unsigned 8-bit integer to a floating point data type on Line 15.

Now the interesting stuff happens.

We start looping over our number of superpixel segments on Line 18. In this case, we’ll be examining three increasing numbers of segments: 100, 200, and 300, respectively.

We perform the SLIC superpixel segmentation on Line 21. The slic function takes only a single required parameter, which is the image we want to perform superpixel segmentation on.

However, the slic function also provides many optional parameters, which I’ll only cover a sample of here.

The first is the n_segments argument, which defines (approximately) how many superpixel segments we want to generate. This value defaults to 100 segments.

We then supply sigma, which is the width of the Gaussian smoothing kernel applied to the image prior to segmentation.

Other optional parameters can be utilized in the function, such as max_iter, which is the maximum number of iterations for k-means (renamed max_num_iter in more recent scikit-image releases), compactness, which balances color-space proximity with image-space proximity, and convert2lab, which determines whether the input image should be converted to the L*a*b* color space prior to forming superpixels (in nearly all cases, having convert2lab set to True is a good idea).
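
As a rough sketch of how these parameters fit together, the snippet below runs slic on a synthetic image (a stand-in I made up so the example runs without a file on disk). The n_segments, compactness, and sigma values are illustrative choices, not recommendations:

```python
import numpy as np
from skimage.segmentation import slic

# build a synthetic 120 x 160 RGB image (a stand-in for a real photo):
# a horizontal color gradient with a bright square in the middle
rows, cols = 120, 160
image = np.zeros((rows, cols, 3), dtype="float64")
image[..., 0] = np.linspace(0.0, 1.0, cols)  # red ramps up left to right
image[..., 2] = 0.5                          # constant blue
image[40:80, 60:100] = (1.0, 1.0, 0.2)       # bright square

# request roughly 50 superpixels
segments = slic(image, n_segments=50, compactness=10, sigma=1)

# the result is a 2D label map with one integer label per pixel
print(segments.shape)
print(len(np.unique(segments)), "superpixels found")
```

Note that n_segments is only a target: the number of labels actually returned can differ from the number requested.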

Now that we have our segments, we display them using matplotlib in Lines 24-27.

In order to draw the segmentations, we make use of the mark_boundaries function which simply takes our original image and overlays our superpixel segments.
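
To see what mark_boundaries returns, here is a minimal sketch on a tiny all-black image with a hand-made label map of two regions (both are made up for illustration). It relies on the function's default boundary color, which is yellow:

```python
import numpy as np
from skimage.segmentation import mark_boundaries

# a tiny all-black RGB image and a label map with two side-by-side regions
image = np.zeros((6, 8, 3), dtype="float64")
labels = np.ones((6, 8), dtype=int)
labels[:, 4:] = 2

# overlay the region boundaries on a copy of the image
overlay = mark_boundaries(image, labels)

# boundary pixels are painted with the default color (1, 1, 0)
is_yellow = np.all(overlay == (1.0, 1.0, 0.0), axis=-1)
print(overlay.shape)
print(int(is_yellow.sum()), "pixels were painted as boundary")
```

The output has the same shape as the input image, so it can be passed straight to imshow.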

Finally, our results are displayed on Line 30.

Now that our code is done, let’s see what our results look like.

Fire up a shell and execute the following command:

$ python slic.py --image raptors.png

If all goes well, you should see the following image:

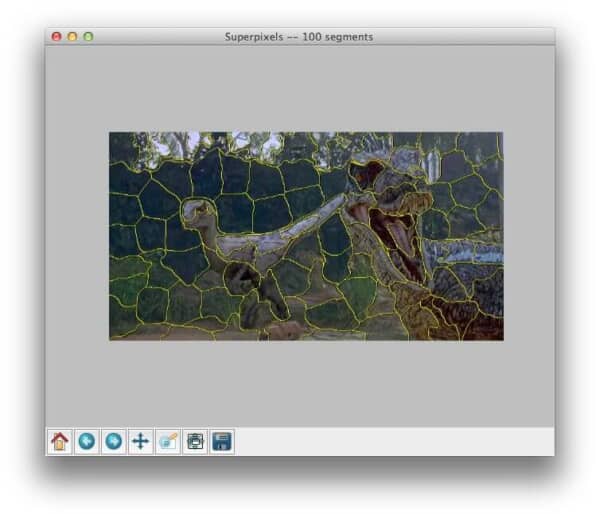

In this image, we have found (approximately) 100 superpixel segmentations. Notice how locally similar regions of the image, such as the scales of the Velociraptor and the shrubbery are grouped in similar superpixels.

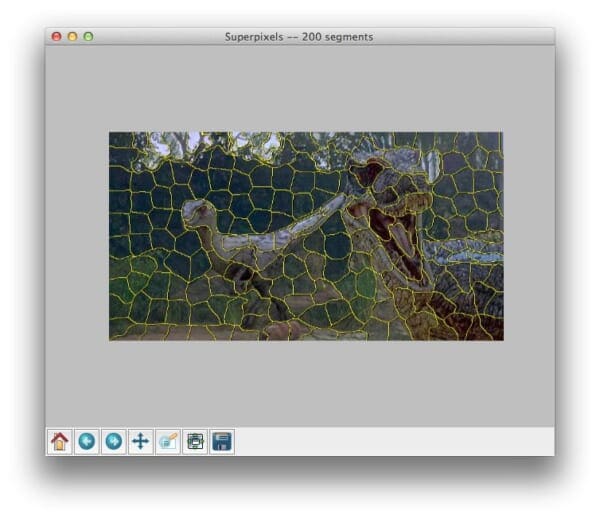

Now, let’s increase to 200 superpixel segments:

Same story here — local regions with similar color and texture distributions are part of the same superpixel group.

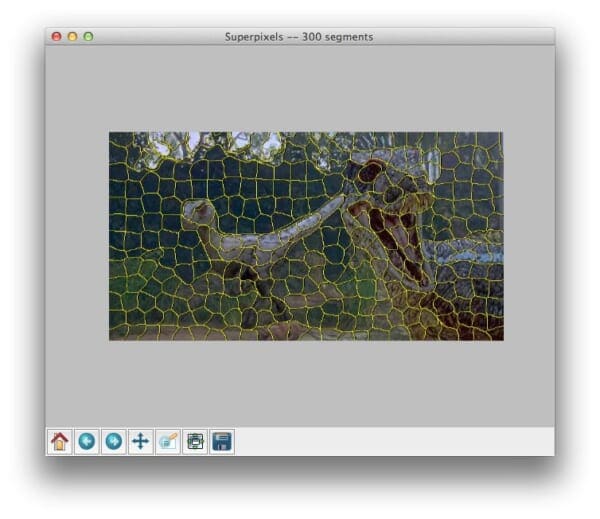

Finally, let’s generate a very dramatic oversegmentation of the image using 300 super pixels:

Notice how, as the number of segments increases, the segments also become more rectangular and grid-like. This is not a coincidence, and it can be further controlled by the optional compactness parameter of slic.
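
One rough way to explore the effect of compactness is to count how many pixels sit on a superpixel border at different settings. The sketch below does this on a synthetic noisy gradient (a made-up stand-in image), and the two compactness values are arbitrary picks for comparison:

```python
import numpy as np
from skimage.segmentation import find_boundaries, slic

# synthetic stand-in image: a smooth gradient plus seeded random noise
rng = np.random.default_rng(0)
rows, cols = 100, 100
image = np.zeros((rows, cols, 3), dtype="float64")
image[..., 1] = np.linspace(0.0, 1.0, rows)[:, None]
image += 0.2 * rng.random(image.shape)
image = np.clip(image, 0.0, 1.0)

boundary_counts = {}
for compactness in (1, 100):
    segments = slic(image, n_segments=100, compactness=compactness)
    # count the pixels that sit on a border between two superpixels
    boundary_counts[compactness] = int(find_boundaries(segments).sum())

print(boundary_counts)
```

Higher compactness values give more weight to spatial proximity, so you would typically expect straighter, more grid-like borders there and more ragged ones at low compactness. The exact counts depend on the image and on your scikit-image version.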

Summary

In this blog post I explained what superpixel segmentation is and how it has many benefits in the computer vision world.

For example, working with superpixels instead of the standard pixel grid space yields us computational efficiency, perceptual meaningfulness, oversegmentation, and efficient graph representations across regions of the image.

Finally, I showed you how to utilize the Simple Linear Iterative Clustering (SLIC) algorithm to apply superpixel segmentation to your own images.

This certainly won’t be the last time we discuss superpixels on this blog, so look forward to more posts in the future!
