In this tutorial, you will learn how to apply neural style transfer to both images and real-time video using OpenCV, Python, and deep learning. By the end of this guide, you’ll be able to generate beautiful works of art with neural style transfer.

The original neural style transfer algorithm was introduced by Gatys et al. in their 2015 paper, A Neural Algorithm of Artistic Style (in fact, this is the exact algorithm that I teach you how to implement and train from scratch inside Deep Learning for Computer Vision with Python).

In 2016, Johnson et al. published Perceptual Losses for Real-Time Style Transfer and Super-Resolution, which frames neural style transfer as a super-resolution-like problem using perceptual loss. The end result is a neural style transfer algorithm which is up to three orders of magnitude faster than the Gatys et al. method (there are a few downsides though, and I’ll be discussing them later in the guide).

In the rest of this post you will learn how to apply the neural style transfer algorithm to your own images and video streams.

To learn how to apply neural style transfer using OpenCV and Python, just keep reading!

Neural Style Transfer with OpenCV

In the remainder of today’s guide I will be demonstrating how you can apply the neural style transfer algorithm using OpenCV and Python to generate your own works of art.

The method I’m discussing here today is capable of running in near real-time on a CPU and is fully capable of obtaining super real-time performance on your GPU.

We’ll start with a brief discussion of neural style transfer, including what it is and how it works.

From there we’ll utilize OpenCV and Python to actually apply neural style transfer.

What is neural style transfer?

Neural style transfer is the process of:

- Taking the style of one image

- And then applying it to the content of another image

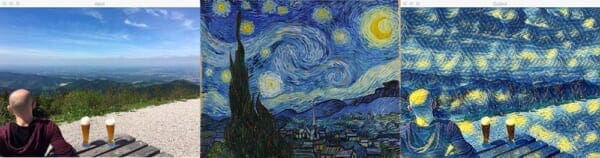

An example of the neural style transfer process can be seen in Figure 1. On the left we have our content image — a serene view of myself enjoying a beer on top of a mountain in the Black Forest of Germany, overlooking the town of Baden.

In the middle is our style image, Vincent van Gogh’s famous The Starry Night.

And on the right is the output of applying the style of van Gogh’s Starry Night to the content of my photo of Germany’s Black Forest. Notice how we have retained the content of the rolling hills, forest, myself, and even the beers, but have applied the style of Starry Night — it’s as if Van Gogh had applied his masterful paint strokes to our scenic view!

The question is, how do we define a neural network to perform neural style transfer?

Is that even possible?

You bet it is — and we’ll be discussing how neural style transfer is made possible in the next section.

How does neural style transfer work?

At this point you’re probably scratching your head and thinking something along the lines of: “How do we define a neural network to perform style transfer?”

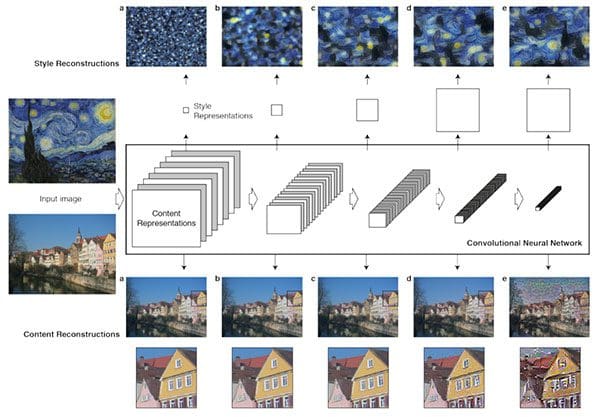

Interestingly, the original 2015 paper by Gatys et al. proposed a neural style transfer algorithm that does not require a new architecture at all. Instead, we can take a pre-trained network (typically one trained on ImageNet), define a loss function that will enable us to achieve our end goal of style transfer, and then optimize over that loss function.

Therefore, the question isn’t “What neural network do we use?” but rather “What loss function do we use?”

The answer is a three-component loss function, including:

- Content loss

- Style loss

- Total-variation loss

Each component is individually computed and then combined in a single meta-loss function. By minimizing the meta-loss function we will be in turn jointly optimizing the content, style, and total-variation loss as well.
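To make the three components a bit more concrete, here is a minimal NumPy sketch of how such a combined loss could be assembled. Treat it as an illustration rather than the exact formulation from Gatys et al.: the function names, the weights alpha, beta, and gamma, and the use of plain arrays standing in for CNN feature maps are all my own assumptions, and a real implementation would typically sum the content and style terms over several network layers.

```python
import numpy as np

def gram_matrix(features):
    # features: (channels, height, width) feature map
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    # channel-to-channel correlations, normalized by the number of entries
    return flat @ flat.T / (c * h * w)

def content_loss(gen_feats, content_feats):
    # mean squared difference between the two feature maps
    return float(np.mean((gen_feats - content_feats) ** 2))

def style_loss(gen_feats, style_feats):
    # mean squared difference between the two Gram matrices
    diff = gram_matrix(gen_feats) - gram_matrix(style_feats)
    return float(np.mean(diff ** 2))

def total_variation_loss(image):
    # image: (channels, height, width); penalize differences between
    # vertically and horizontally adjacent pixels
    dh = np.abs(image[:, 1:, :] - image[:, :-1, :]).sum()
    dw = np.abs(image[:, :, 1:] - image[:, :, :-1]).sum()
    return float(dh + dw)

def total_loss(gen_img, gen_feats, content_feats, style_feats,
               alpha=1.0, beta=100.0, gamma=1e-4):
    # alpha, beta, and gamma are hypothetical weights chosen for illustration
    return (alpha * content_loss(gen_feats, content_feats)
            + beta * style_loss(gen_feats, style_feats)
            + gamma * total_variation_loss(gen_img))
```

In the Gatys et al. setting, the generated image itself is what gets optimized, so each term would be differentiated with respect to the pixels; the sketch above only shows how the scalar losses are combined.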

While the Gatys et al. method produced beautiful neural style transfer results, the problem was that it was quite slow.

Johnson et al. (2016) built on the work of Gatys et al., proposing a neural style transfer algorithm that is up to three orders of magnitude faster. The Johnson et al. method frames neural style transfer as a super-resolution-like problem based on perceptual loss functions.

While the Johnson et al. method is certainly fast, the biggest downside is that you cannot arbitrarily select your style images like you could in the Gatys et al. method.

Instead, you first need to explicitly train a network to reproduce the style of your desired image. Once the network is trained, you can then apply it to any content image you wish. You should see the Johnson et al. method as more of an “investment” in your style image: you had better like your style image, as you’ll be training your own network to reproduce its style on content images.

Johnson et al. provide documentation on how to train your own neural style transfer models on their official GitHub page.

Finally, it’s also worth noting that in Ulyanov et al.’s 2017 publication, Instance Normalization: The Missing Ingredient for Fast Stylization, it was found that swapping batch normalization for instance normalization (and applying instance normalization at both training and testing) leads to even faster real-time performance and arguably more aesthetically pleasing results as well.
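If the difference between the two normalization schemes is unclear, the following NumPy sketch may help. It is a simplified illustration under my own assumptions: both functions omit the learnable scale and shift parameters, and the batch normalization version ignores the running statistics a real layer would use at test time.

```python
import numpy as np

def batch_norm(x, eps=1e-5):
    # x: (batch, channels, height, width)
    # one mean/variance per channel, shared across every image in the batch
    mean = x.mean(axis=(0, 2, 3), keepdims=True)
    var = x.var(axis=(0, 2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def instance_norm(x, eps=1e-5):
    # one mean/variance per image and per channel, so each image is
    # normalized independently of the others in the batch
    mean = x.mean(axis=(2, 3), keepdims=True)
    var = x.var(axis=(2, 3), keepdims=True)
    return (x - mean) / np.sqrt(var + eps)
```

Because the instance normalization statistics depend only on the image being processed, the same computation can be applied unchanged at both training and testing, which is the behavior described above.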

I have included both the models used by Johnson et al. in their ECCV paper along with the Ulyanov et al. models in the “Downloads” section of this post — be sure to download them so you can follow along with the remainder of this guide.

And if you’re interested in learning more about how neural style transfer works, be sure to refer to my book, Deep Learning for Computer Vision with Python.

Project structure

Today’s project includes a number of files which you can grab from the “Downloads” section.

Once you’ve grabbed the scripts + models + images, you can inspect the project structure with the tree command:

$ tree --dirsfirst
.
├── images
│   ├── baden_baden.jpg
│   ├── giraffe.jpg
│   ├── jurassic_park.jpg
│   └── messi.jpg
├── models
│   ├── eccv16
│   │   ├── composition_vii.t7
│   │   ├── la_muse.t7
│   │   ├── starry_night.t7
│   │   └── the_wave.t7
│   └── instance_norm
│       ├── candy.t7
│       ├── feathers.t7
│       ├── la_muse.t7
│       ├── mosaic.t7
│       ├── starry_night.t7
│       ├── the_scream.t7
│       └── udnie.t7
├── neural_style_transfer.py
├── neural_style_transfer_examine.py
└── neural_style_transfer_video.py

4 directories, 18 files

Once you use the “Downloads” section of the blog post to grab the .zip, you won’t need to go hunting for anything else online. I’ve provided a handful of test images/ as well as a number of models/ that have already been trained by Johnson et al. You’ll also find three Python scripts to work with and we’ll be reviewing two of them today.

Implementing neural style transfer

Let’s get started implementing neural style transfer with OpenCV and Python.

Open up your neural_style_transfer.py file and insert the following code:

# import the necessary packages
import argparse
import imutils
import time
import cv2

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-m", "--model", required=True,
    help="neural style transfer model")
ap.add_argument("-i", "--image", required=True,
    help="input image to apply neural style transfer to")
args = vars(ap.parse_args())

First, we import our required packages and parse command line arguments.

Our notable imports are:

- imutils: This package is pip-installable via pip install --upgrade imutils. I recently released imutils==0.5.1, so don’t forget to upgrade!

- OpenCV: You need OpenCV 3.4 or better in order to use today’s code. You can install OpenCV 4 using my tutorials for Ubuntu and macOS.

We have two required command line arguments for this script:

- --model: The neural style transfer model path. I’ve included 11 pre-trained models for you to use in the “Downloads”.

- --image: Our input image which we’ll apply the neural style to. I’ve included 4 sample images. Feel free to experiment with your own as well!

You do not have to change the command line argument code — the arguments are passed and processed at runtime. If you aren’t familiar with how this works, be sure to read my command line arguments + argparse blog post.
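If you would like to see what the parsed arguments look like without opening a terminal, here is a small sketch. It reuses the same two arguments and passes an explicit list to parse_args, which stands in for what the shell would normally supply; the two paths are simply examples taken from the project structure.

```python
import argparse

ap = argparse.ArgumentParser()
ap.add_argument("-m", "--model", required=True,
    help="neural style transfer model")
ap.add_argument("-i", "--image", required=True,
    help="input image to apply neural style transfer to")

# an explicit list replaces sys.argv here, purely for demonstration
args = vars(ap.parse_args([
    "--model", "models/eccv16/the_wave.t7",
    "--image", "images/giraffe.jpg",
]))

# vars() turns the parsed namespace into a plain dictionary
print(args["model"])
print(args["image"])
```

The dictionary keys come from the long option names, which is why the script can later look up args["model"] and args["image"].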

Now comes the fun part — we’re going to load our image + model and then compute neural style transfer:

# load the neural style transfer model from disk
print("[INFO] loading style transfer model...")
net = cv2.dnn.readNetFromTorch(args["model"])

# load the input image, resize it to have a width of 600 pixels, and
# then grab the image dimensions
image = cv2.imread(args["image"])
image = imutils.resize(image, width=600)
(h, w) = image.shape[:2]

# construct a blob from the image, set the input, and then perform a
# forward pass of the network
blob = cv2.dnn.blobFromImage(image, 1.0, (w, h),
    (103.939, 116.779, 123.680), swapRB=False, crop=False)
net.setInput(blob)
start = time.time()
output = net.forward()
end = time.time()

In this code block we proceed to:

- Load a pre-trained neural style transfer model into memory as net (Line 17).

- Load the input image and resize it (Lines 21 and 22).

- Construct a blob by performing mean subtraction (Lines 27 and 28). Read about cv2.dnn.blobFromImage and how it works in my previous blog post.

- Perform a forward pass to obtain an output image (i.e., the result of the neural style transfer process) on Line 31. I’ve also surrounded this line with timestamps for benchmarking purposes.
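To demystify the blob construction step, here is a NumPy-only sketch of roughly what I understand cv2.dnn.blobFromImage to be doing for this particular call, where the scale factor is 1.0, the requested size matches the image, and swapRB=False. The helper name make_blob is hypothetical, and I have not verified that it matches OpenCV bit-for-bit, so treat it as an approximation for intuition.

```python
import numpy as np

# per-channel means in OpenCV's BGR order (same values as in the script)
MEAN_BGR = (103.939, 116.779, 123.680)

def make_blob(image_bgr):
    # image_bgr: (H, W, 3) array in BGR channel order
    # subtract the per-channel mean from every pixel
    blob = image_bgr.astype("float32") - np.array(MEAN_BGR, dtype="float32")
    # channels-last (H, W, 3) -> channels-first (3, H, W), then add a
    # leading batch dimension to get (1, 3, H, W)
    return blob.transpose(2, 0, 1)[np.newaxis, ...]

# a synthetic gray image stands in for a real photo
image = np.full((4, 6, 3), 128, dtype="uint8")
blob = make_blob(image)
print(blob.shape)
```

The leading batch dimension and channels-first layout are the reason the network output later needs to be reshaped and transposed before it can be displayed.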

Next, it is critical that we post-process the output image:

# reshape the output tensor, add back in the mean subtraction, and
# then swap the channel ordering
output = output.reshape((3, output.shape[2], output.shape[3]))
output[0] += 103.939
output[1] += 116.779
output[2] += 123.680
output /= 255.0
output = output.transpose(1, 2, 0)

For the particular image I’m using for this example, the output NumPy array will have the shape (1, 3, 452, 600):

- The 1 indicates that we passed a batch size of one (i.e., just our single image) through the network.

- OpenCV is using channels-first ordering here, indicating there are 3 channels in the output image.

- The final two values in the output shape are the number of rows (height) and number of columns (width).

We reshape the matrix to simply be (3, H, W) (Line 36) and then “de-process” the image by:

- Adding back in the mean values we previously subtracted (Lines 37-39).

- Scaling (Line 40).

- Transposing the matrix to be channels-last ordering (Line 41).
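The de-processing steps above can also be wrapped into a small reusable function. The sketch below is one possible way to do it, not part of the original script: the names deprocess and to_uint8 are hypothetical, and the clipping to [0, 1] and the 8-bit conversion are additions of mine that could be handy if you want to write the result to disk instead of only displaying it.

```python
import numpy as np

# per-channel means in BGR order (same values as in the script)
MEAN_BGR = (103.939, 116.779, 123.680)

def deprocess(output):
    # output: (1, 3, H, W) array, as produced by the forward pass
    # drop the batch dimension; astype makes a copy so the caller's
    # array is left untouched
    out = output[0].astype("float32")
    # add back the mean values, one per channel
    out += np.array(MEAN_BGR, dtype="float32").reshape(3, 1, 1)
    # scale to roughly [0, 1]
    out /= 255.0
    # channels-first (3, H, W) -> channels-last (H, W, 3)
    out = out.transpose(1, 2, 0)
    # assumption: clip stray values so later conversions are well-defined
    return np.clip(out, 0.0, 1.0)

def to_uint8(image01):
    # convert a [0, 1] float image to 8-bit pixel values
    return (image01 * 255.0).round().astype("uint8")

# a zero-filled array stands in for a real network output
fake_output = np.zeros((1, 3, 452, 600), dtype="float32")
result = deprocess(fake_output)
print(result.shape)
```

With a real network output, the array returned by to_uint8 could then be passed to cv2.imwrite to save the stylized image.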

The final step is to show the output of the neural style transfer process to our screen:

# show information on how long inference took
print("[INFO] neural style transfer took {:.4f} seconds".format(
    end - start))

# show the images
cv2.imshow("Input", image)
cv2.imshow("Output", output)
cv2.waitKey(0)

Neural style transfer results

In order to replicate my results, you will need to grab the “Downloads” for this blog post.

Once you’ve grabbed the files, open up terminal and execute the following command:

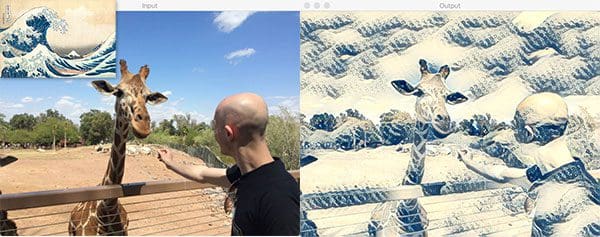

$ python neural_style_transfer.py --image images/giraffe.jpg \
    --model models/eccv16/the_wave.t7
[INFO] loading style transfer model...
[INFO] neural style transfer took 0.3152 seconds

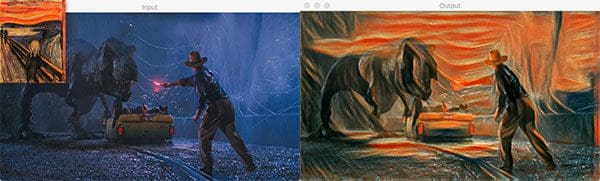

Now, simply change the command line arguments to use a screen capture from my favorite movie, Jurassic Park, as the content image, and then The Scream style model:

$ python neural_style_transfer.py --image images/jurassic_park.jpg \
    --model models/instance_norm/the_scream.t7
[INFO] loading style transfer model...
[INFO] neural style transfer took 0.1202 seconds

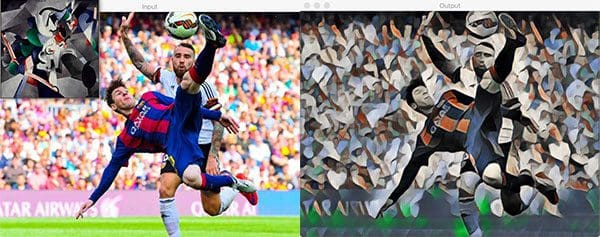

And changing the command line arguments in your terminal once more:

$ python neural_style_transfer.py --image images/messi.jpg \
    --model models/instance_norm/udnie.t7
[INFO] loading style transfer model...
[INFO] neural style transfer took 0.1495 seconds

Figure 5 is arguably my favorite — it just feels like it could be printed and hung on a wall in a sports bar.

In these three examples, we’ve created deep learning art! In the terminal output, the time elapsed to compute the output image is shown — each CNN model is a little bit different and you should expect different timings for each of the models.
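A single timestamp pair can be noisy, so if you want to compare models more fairly you could average over a few runs. The helper below is a small sketch of that idea; average_seconds is a hypothetical name, and the untimed warm-up call is my own assumption about how to keep one-off setup cost out of the measurement.

```python
import time

def average_seconds(fn, repeats=5):
    # call once without timing so any one-off setup cost is excluded
    fn()
    start = time.perf_counter()
    for _ in range(repeats):
        fn()
    # mean wall-clock time per call
    return (time.perf_counter() - start) / repeats

# a cheap stand-in workload; in the script you would pass a function
# that runs the network's forward pass instead
elapsed = average_seconds(lambda: sum(range(10000)), repeats=3)
print("{:.6f} seconds per call".format(elapsed))
```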

Challenge! Can you create fancy deep learning artwork with neural style transfer? I’d love to see you tweet your artwork results — just use the hashtag, #neuralstyletransfer and mention me in the tweet (@PyImageSearch). Also, be sure to give credit to the artists and photographers — tag them if they are on Twitter as well.

Real-time neural style transfer

Now that we’ve learned how to apply neural style transfer to single images, let’s learn how to apply the process to (near) real-time video as well.

The process is quite similar to performing neural style transfer on a static image. In this script, we’ll:

- Utilize a special Python iterator which will allow us to cycle over all available neural style transfer models in our models path.

- Start our webcam video stream; our webcam frames will be processed in (near) real-time. Slower systems may lag quite a bit for certain larger models.

- Loop over incoming frames.

- Perform neural style transfer on the frame, post-process the output, and display the result to the screen (you’ll recognize this from above as it is nearly identical).

- If the user presses the “n” key on their keyboard, we’ll utilize the iterator to cycle to the next neural style transfer model without having to stop/restart the script.

Without further ado, let’s get to it.

Open up your neural_style_transfer_video.py file and insert the following code:

# import the necessary packages
from imutils.video import VideoStream
from imutils import paths
import itertools
import argparse
import imutils
import time
import cv2

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-m", "--models", required=True,
    help="path to directory containing neural style transfer models")
args = vars(ap.parse_args())

We begin by importing required packages/modules.

From there, we just need the path to our models/ directory (a selection of models is included with today’s “Downloads”). The command line argument, --models, coupled with argparse, allows us to pass the path at runtime.

Next, let’s create our model path iterator:

# grab the paths to all neural style transfer models in our 'models'
# directory, provided all models end with the '.t7' file extension
modelPaths = paths.list_files(args["models"], validExts=(".t7",))
modelPaths = sorted(list(modelPaths))

# generate unique IDs for each of the model paths, then combine the
# two lists together
models = list(zip(range(0, len(modelPaths)), (modelPaths)))

# use the cycle function of itertools that can loop over all model
# paths, and then when the end is reached, restart again
modelIter = itertools.cycle(models)
(modelID, modelPath) = next(modelIter)

Once we begin processing frames in a while loop (to be covered in a few code blocks), an “n” keypress will load the “next” model in the iterator. This allows you to see the effect of each neural style model in your video stream without having to stop your script, change your model path, and then restart.

To construct our model iterator, we:

- Grab and sort paths to all neural style transfer models (Lines 18 and 19).

- Assign a unique ID to each (Line 23).

- Use itertools and cycle to create an iterator (Line 27). Essentially, cycle allows us to create a circular list which, when you reach the end of it, starts back at the beginning.

Calling the built-in next function on modelIter grabs our first modelID and modelPath (Line 28).
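To see the wrap-around behavior in isolation, here is a tiny standalone sketch. The three model paths are made up for illustration, and I use enumerate to pair each path with an ID, which should produce the same (ID, path) tuples as the zip and range combination in the script.

```python
import itertools

# hypothetical model paths, standing in for the real .t7 files
model_paths = ["models/a.t7", "models/b.t7", "models/c.t7"]

# pair each path with a unique ID
models = list(enumerate(model_paths))

# cycle() yields the items in order and starts over after the last one
model_iter = itertools.cycle(models)

# four calls to next() on a three-item cycle wrap back to the start
seen = [next(model_iter) for _ in range(4)]
print(seen[-1])  # prints (0, 'models/a.t7')
```

Since the iterator never runs out, the script can keep calling next on every “n” keypress without any bounds checking.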

If you are new to Python iterators or iterators in general (most programming languages implement them), then be sure to give this article by RealPython a read.

Let’s load the first neural style transfer model and initialize our video stream:

# load the neural style transfer model from disk
print("[INFO] loading style transfer model...")
net = cv2.dnn.readNetFromTorch(modelPath)

# initialize the video stream, then allow the camera sensor to warm up
print("[INFO] starting video stream...")
vs = VideoStream(src=0).start()
time.sleep(2.0)
print("[INFO] {}. {}".format(modelID + 1, modelPath))

On Line 32, we read the first neural style transfer model using its path.

Then on Lines 36 and 37, we initialize our video stream so we can grab frames from our webcam.

Let’s begin looping over frames:

# loop over frames from the video file stream
while True:
    # grab the frame from the threaded video stream
    frame = vs.read()

    # resize the frame to have a width of 600 pixels (while
    # maintaining the aspect ratio), and then grab the image
    # dimensions
    frame = imutils.resize(frame, width=600)
    orig = frame.copy()
    (h, w) = frame.shape[:2]

    # construct a blob from the frame, set the input, and then perform a
    # forward pass of the network
    blob = cv2.dnn.blobFromImage(frame, 1.0, (w, h),
        (103.939, 116.779, 123.680), swapRB=False, crop=False)
    net.setInput(blob)
    output = net.forward()

Our while loop begins on Line 41.

Lines 43-57 are nearly identical to the previous script we reviewed with the only exception being that we load a frame from the video stream rather than an image file on disk.

Essentially we grab the frame, preprocess it into a blob, and send it through the CNN. Be sure to scroll up to my previous explanation if you didn’t read it already.

There’s a lot of computation going on behind the scenes here in the CNN. If you’re curious how to train your own neural style transfer model with Keras, be sure to refer to my book, Deep Learning for Computer Vision with Python.

Next, we’ll post-process and display the output image:

    # reshape the output tensor, add back in the mean subtraction, and
    # then swap the channel ordering
    output = output.reshape((3, output.shape[2], output.shape[3]))
    output[0] += 103.939
    output[1] += 116.779
    output[2] += 123.680
    output /= 255.0
    output = output.transpose(1, 2, 0)

    # show the original frame along with the output neural style
    # transfer
    cv2.imshow("Input", frame)
    cv2.imshow("Output", output)
    key = cv2.waitKey(1) & 0xFF

Again, Lines 61-66 are identical to the static image neural style script above where I explained these lines in detail. These lines are critical to you seeing the correct result. Our output image is “de-processed” by reshaping, mean addition (since we subtracted the mean earlier), rescaling, and transposing.

The output of our neural style transfer is shown on Lines 70 and 71, where both the original and processed frames are displayed on the screen.

We also capture keypresses on Line 72. The keys are processed in the next block:

    # if the `n` key is pressed (for "next"), load the next neural
    # style transfer model
    if key == ord("n"):
        # grab the next neural style transfer model and load it
        (modelID, modelPath) = next(modelIter)
        print("[INFO] {}. {}".format(modelID + 1, modelPath))
        net = cv2.dnn.readNetFromTorch(modelPath)

    # otherwise, if the `q` key was pressed, break from the loop
    elif key == ord("q"):
        break

# do a bit of cleanup
cv2.destroyAllWindows()
vs.stop()

There are two keys that will cause different behaviors while the script is running:

- “n”: Grabs the “next” neural style transfer model path + ID and loads it (Lines 76-80). If we’ve reached the last model, the iterator will cycle back to the beginning.

- “q”: Pressing the “q” key will “quit” the while loop (Lines 83 and 84).

Cleanup is then performed on the remaining lines.

Real-time neural style transfer results

Once you’ve used the “Downloads” section of this tutorial to download the source code and neural style transfer models, you can execute the following command to apply style transfer to your own video streams:

$ python neural_style_transfer_video.py --models models

As you can see, it’s easy to cycle through the neural style transfer models using a single keypress.

I have included a short demo video of myself applying neural style transfer below:

Summary

In today’s blog post you learned how to apply neural style transfer to both images and video using OpenCV and Python.

Specifically, we utilized the models trained by Johnson et al. in their 2016 publication on neural style transfer — for your convenience, I have included the models in the “Downloads” section of this blog post.

I hope you enjoyed today’s tutorial on neural style transfer!

Be sure to use Twitter and the comments section to post links to your own beautiful works of art — I can’t wait to see them!
