Last week we learned a bit about Python virtual environments and how to access the RPi.GPIO and GPIO Zero libraries along with OpenCV.

Today we are going to build on that knowledge and create an “alarm” that triggers both an LED light to turn on and a buzzer to go off whenever a specific visual action takes place.

To accomplish this, we’ll utilize OpenCV to process frames from a video stream. Then, when a pre-defined event takes place (such as a green ball entering our field of view), we’ll utilize RPi.GPIO/GPIO Zero to trigger the alarm.

Keep reading to find out how it’s done!

OpenCV, RPi.GPIO, and GPIO Zero on the Raspberry Pi

In the remainder of this blog post, we’ll be using OpenCV and the RPi.GPIO/GPIO Zero libraries to interact with each other. We’ll use OpenCV to process frames from a video stream, and once a specific event happens, we’ll trigger an action on our attached TrafficHAT board.

Hardware

To get started, we’ll need a bit of hardware, including a:

- Raspberry Pi: I ended up going with the Raspberry Pi 3, but the Raspberry Pi 2 would also be an excellent choice for this project.

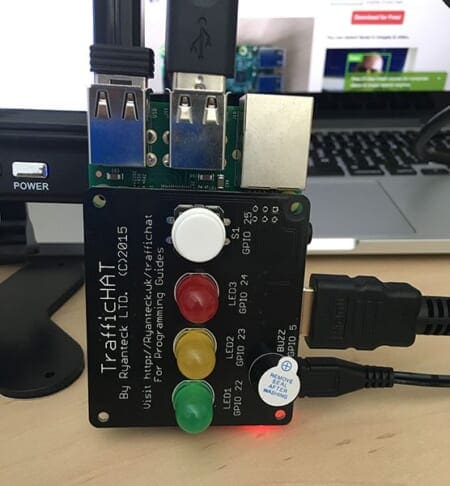

- TrafficHAT: I purchased the TrafficHAT from RyanTeck. It is a module for the Raspberry Pi consisting of three LED lights, a buzzer, and a push button. Given that I have very little experience with GPIO programming, this kit was an excellent starting point for me to get some exposure to GPIO. If you’re just getting started as well, be sure to take a look at the TrafficHAT.

You can see the TrafficHat itself in the image below:

Notice how the module simply sits on top of the Raspberry Pi — no breakout board, extra cables, or soldering required!

To trigger the alarm, we’ll be writing a Python script to detect this green ball in our video stream:

If this green ball enters the view of our camera, we’ll sound the alarm by ringing the buzzer and turning on the green LED light.

Below you can see an example of my setup:

Coding the alarm

Before we get started, make sure you have read last week’s blog post on accessing RPi.GPIO and GPIO Zero with OpenCV. This post provides crucial information on configuring your development environment, including an explanation of Python virtual environments, why we use them, and how to install RPi.GPIO and GPIO Zero such that they are accessible in the same virtual environment as OpenCV.

Once you’ve given the post a read, you should be prepared for this project. Open up a new file, name it pi_reboot_alarm.py, and let’s get coding:

# import the necessary packages
from __future__ import print_function
from imutils.video import VideoStream
from gpiozero import TrafficHat
import argparse
import imutils
import time
import cv2

# construct the argument parse and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-p", "--picamera", type=int, default=-1,
    help="whether or not the Raspberry Pi camera should be used")
args = vars(ap.parse_args())

Lines 2-8 import our required Python packages. We’ll be using the VideoStream class from our post on unifying picamera and cv2.VideoCapture into a single class with OpenCV. We’ll also be using imutils, a series of convenience functions used to make common image processing operations with OpenCV easier. If you don’t already have imutils installed on your system, you can install it using pip:

$ pip install imutils

Note: Make sure you install imutils into the same Python virtual environment as the one you’re using for OpenCV and GPIO programming!

Lines 11-14 then handle parsing our command line arguments. We only need a single switch here, --picamera, which is an integer indicating whether the Raspberry Pi camera module or a USB webcam should be used. If you’re using a USB webcam, you can ignore this switch (the USB webcam will be used by default). Otherwise, if you want to use the picamera module, then supply a value of --picamera 1 as a command line argument when you execute the script.
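To make the switch's behavior concrete, here is a minimal sketch of how the integer value turns into the boolean that is later handed to VideoStream. The helper names parse_camera_args and use_pi_camera are hypothetical and exist only for this illustration; the argument definition itself is copied from the script.

```python
import argparse


def parse_camera_args(argv):
    """Parse the --picamera switch from an explicit argument list."""
    ap = argparse.ArgumentParser()
    ap.add_argument("-p", "--picamera", type=int, default=-1,
        help="whether or not the Raspberry Pi camera should be used")
    return vars(ap.parse_args(argv))


def use_pi_camera(args):
    """Any value greater than zero selects the Pi camera module."""
    return args["picamera"] > 0


# no switch supplied: the default of -1 falls back to the USB webcam
print(use_pi_camera(parse_camera_args([])))
# "--picamera 1" selects the Raspberry Pi camera module
print(use_pi_camera(parse_camera_args(["--picamera", "1"])))
```

Passing an explicit list to parse_args (instead of reading sys.argv) is just a convenience so the behavior can be checked without launching the script.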

Now, let’s initialize some important variables:

# initialize the video stream and allow the camera sensor to
# warm up
print("[INFO] waiting for camera to warmup...")
vs = VideoStream(usePiCamera=args["picamera"] > 0).start()
time.sleep(2.0)

# define the lower and upper boundaries of the "green"
# ball in the HSV color space
greenLower = (29, 86, 6)
greenUpper = (64, 255, 255)

# initialize the TrafficHat and whether or not the LED is on
th = TrafficHat()
ledOn = False

Lines 19 and 20 access our VideoStream and allow the camera sensor to warm up.

We’ll be applying color thresholding to find the green ball in our video stream, so we initialize the lower and upper boundaries of the green ball pixel intensities in the HSV color space (be sure to see the “color thresholding” link for more information on how these values are defined — essentially, we are looking for all pixels that fall within this lower and upper range).
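One detail that is easy to miss when reading these boundaries: for 8-bit images, OpenCV stores hue in the range 0-179 (degrees divided by two), while saturation and value run from 0-255. The sketch below uses Python's standard colorsys module (not OpenCV) to approximate that scaling and check whether an RGB color falls inside the boundaries. The helpers rgb_to_opencv_hsv and in_green_range are hypothetical names, and the rounding may differ slightly from what cv2.cvtColor produces.

```python
import colorsys

greenLower = (29, 86, 6)
greenUpper = (64, 255, 255)


def rgb_to_opencv_hsv(r, g, b):
    """Approximate OpenCV's 8-bit HSV scaling for an RGB color (0-255)."""
    # colorsys works on floats in [0, 1] and returns h, s, v in [0, 1]
    h, s, v = colorsys.rgb_to_hsv(r / 255.0, g / 255.0, b / 255.0)
    return (int(round(h * 180)) % 180, int(round(s * 255)), int(round(v * 255)))


def in_green_range(hsv):
    """True if every channel lies within its lower/upper boundary."""
    return all(lo <= c <= hi for lo, c, hi in zip(greenLower, hsv, greenUpper))


print(rgb_to_opencv_hsv(0, 255, 0))                  # pure green
print(in_green_range(rgb_to_opencv_hsv(0, 255, 0)))  # inside the range
print(in_green_range(rgb_to_opencv_hsv(255, 0, 0)))  # red is outside
```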

Line 28 initializes the TrafficHat class used to interact with the TrafficHat module (although we could certainly use the RPi.GPIO library here as well). We then initialize a boolean variable (Line 29) used to indicate if the green LED is “on” (i.e., the green ball is in view of the camera).

Next, we have the main video processing loop of our script:

# loop over the frames from the video stream
while True:
    # grab the next frame from the video stream, resize the
    # frame, and convert it to the HSV color space
    frame = vs.read()
    frame = imutils.resize(frame, width=500)
    hsv = cv2.cvtColor(frame, cv2.COLOR_BGR2HSV)

    # construct a mask for the color "green", then perform
    # a series of dilations and erosions to remove any small
    # blobs left in the mask
    mask = cv2.inRange(hsv, greenLower, greenUpper)
    mask = cv2.erode(mask, None, iterations=2)
    mask = cv2.dilate(mask, None, iterations=2)

    # find contours in the mask and initialize the current
    # (x, y) center of the ball
    cnts = cv2.findContours(mask.copy(), cv2.RETR_EXTERNAL,
        cv2.CHAIN_APPROX_SIMPLE)
    cnts = imutils.grab_contours(cnts)
    center = None

On Line 32 we start an infinite loop that continuously reads frames from our video stream. We resize each frame to a width of 500 pixels (the smaller the frame is, the faster it is to process) and then convert it to the HSV color space.
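As a rough illustration of what resizing to a fixed width does to the frame dimensions, the sketch below scales the height by the same ratio as the width so the aspect ratio is preserved. resized_dims is a hypothetical helper written for this example; it mirrors the idea behind imutils.resize but is not its actual source, and the frame sizes are made up.

```python
def resized_dims(w, h, width=500):
    """Return the (width, height) of a frame after scaling to a target width."""
    ratio = width / float(w)
    return (width, int(h * ratio))


print(resized_dims(640, 480))    # a 640x480 frame
print(resized_dims(1280, 720))   # a 1280x720 frame
```

Fewer pixels per frame means less work for every later step: color conversion, thresholding, and contour detection.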

Line 42 applies color thresholding using the cv2.inRange function. All pixels in the range greenLower <= pixel <= greenUpper are set to white and all pixels outside this range are set to black. This enables us to create a mask representing the foreground (i.e., green ball, or lack thereof) in the frame. You can read more about color thresholding and ball detection here.
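To see what this thresholding does per pixel, here is a pure-Python sketch of the same idea on a tiny hand-made HSV "image" (a nested list rather than a NumPy array). in_range is a hypothetical stand-in written for illustration and is far slower than cv2.inRange; the pixel values are made up.

```python
greenLower = (29, 86, 6)
greenUpper = (64, 255, 255)


def in_range(hsv_image, lower, upper):
    """Return a mask: 255 where all channels are within bounds, else 0."""
    return [
        [255 if all(lo <= c <= hi for lo, c, hi in zip(lower, px, upper)) else 0
         for px in row]
        for row in hsv_image
    ]


# 2x2 example image of (H, S, V) pixels
hsv_image = [
    [(60, 200, 200), (0, 255, 255)],   # green-ish, red
    [(40, 50, 200), (64, 255, 255)],   # saturation too low, exactly on the upper bound
]

mask = in_range(hsv_image, greenLower, greenUpper)
print(mask)
```

Note that the bounds are inclusive, so a pixel sitting exactly on greenUpper still ends up white in the mask.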

Lines 43 and 44 perform a series of erosions and dilations to remove any small “blobs” (i.e., noise in the frame that does not correspond to the green ball) from the image.
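The effect of eroding and then dilating can be sketched without OpenCV. Passing None as the kernel to cv2.erode and cv2.dilate selects a 3x3 rectangular structuring element, so the hypothetical helpers below take the minimum (erosion) or maximum (dilation) over each pixel's 3x3 neighborhood. This is a simplified illustration: neighbors that fall outside the image are simply ignored, and the 8x8 mask is made up.

```python
def _apply_3x3(mask, pick):
    """Replace each pixel with pick() of its in-bounds 3x3 neighborhood."""
    h, w = len(mask), len(mask[0])
    return [
        [pick(mask[j][i]
              for j in range(max(0, y - 1), min(h, y + 2))
              for i in range(max(0, x - 1), min(w, x + 2)))
         for x in range(w)]
        for y in range(h)
    ]


def erode(mask, iterations=1):
    """Shrink white regions: a pixel survives only if all neighbors are white."""
    for _ in range(iterations):
        mask = _apply_3x3(mask, min)
    return mask


def dilate(mask, iterations=1):
    """Grow white regions: a pixel turns white if any neighbor is white."""
    for _ in range(iterations):
        mask = _apply_3x3(mask, max)
    return mask


# 8x8 mask: a 5x5 "ball" plus one isolated noise pixel in the corner
mask = [[0] * 8 for _ in range(8)]
for y in range(1, 6):
    for x in range(1, 6):
        mask[y][x] = 255
mask[7][7] = 255

# what we hope to get back: the ball without the noise pixel
ball_only = [row[:] for row in mask]
ball_only[7][7] = 0

cleaned = dilate(erode(mask, iterations=2), iterations=2)
print(cleaned == ball_only)
```

The erosions wipe out anything too small to survive them (the noise pixel), and the dilations grow the surviving region back to roughly its original size.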

From there, we apply contour detection on Lines 48-50, allowing us to find the outline of the ball in the mask.

We are now ready to perform a few checks, and if they pass, raise the alarm:

    # only proceed if at least one contour was found
    if len(cnts) > 0:
        # find the largest contour in the mask, then use
        # it to compute the minimum enclosing circle and
        # centroid
        c = max(cnts, key=cv2.contourArea)
        ((x, y), radius) = cv2.minEnclosingCircle(c)
        M = cv2.moments(c)
        center = (int(M["m10"] / M["m00"]), int(M["m01"] / M["m00"]))

        # only proceed if the radius meets a minimum size
        if radius > 10:
            # draw the circle and centroid on the frame
            cv2.circle(frame, (int(x), int(y)), int(radius),
                (0, 255, 255), 2)
            cv2.circle(frame, center, 5, (0, 0, 255), -1)

            # if the led is not already on, raise an alarm and
            # turn the LED on
            if not ledOn:
                th.buzzer.blink(0.1, 0.1, 10, background=True)
                th.lights.green.on()
                ledOn = True

Line 54 makes a check to ensure at least one contour was found. And if so, we find the largest contour in our mask (Line 58), which we assume is the green ball.

Note: This is a reasonable assumption to make since we presume there will be no other large, green objects in view of our camera.
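The "largest contour" selection is just Python's built-in max with an area function as the key. The sketch below substitutes a hand-written shoelace-formula helper (polygon_area, a hypothetical name) for cv2.contourArea and uses made-up contours given as plain lists of (x, y) points rather than the arrays OpenCV returns.

```python
def polygon_area(points):
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    # pair each vertex with the next one, wrapping around to the first
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


small = [(0, 0), (4, 0), (0, 4)]                    # triangle
large = [(10, 10), (30, 10), (30, 30), (10, 30)]    # square
cnts = [small, large]

# only proceed if at least one contour was found
if len(cnts) > 0:
    c = max(cnts, key=polygon_area)
    print(c is large, polygon_area(c))
```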

Once we have the largest contour, we compute the minimum enclosing circle of the region, along with the center (x, y)-coordinates — we’ll be using these values to actually highlight the area of the image that contains the ball.
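The centroid comes from the ratio of first-order to zeroth-order moments: cx = m10 / m00 and cy = m01 / m00. The following sketch computes those raw moments by summing over the white pixels of a small mask. That is a simplification (the script calls cv2.moments on a contour), and blob_centroid is a hypothetical helper that also guards against an empty region, where m00 would be zero.

```python
def blob_centroid(mask):
    """Centroid (cx, cy) of the non-zero pixels, or None if there are none."""
    m00 = m10 = m01 = 0
    for y, row in enumerate(mask):
        for x, value in enumerate(row):
            if value:
                m00 += 1   # zeroth moment: pixel count (area)
                m10 += x   # first moment along x
                m01 += y   # first moment along y
    if m00 == 0:
        return None
    return (int(m10 / m00), int(m01 / m00))


# made-up 8x8 mask with a white block spanning rows 2-4 and columns 3-7
mask = [[0] * 8 for _ in range(8)]
for y in range(2, 5):
    for x in range(3, 8):
        mask[y][x] = 255

print(blob_centroid(mask))
print(blob_centroid([[0, 0], [0, 0]]))
```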

In order to protect against false-positive detections, Line 64 makes a check to ensure the radius of the circle is sufficiently large (in this case, the radius must be greater than 10 pixels). If this check passes, we draw the circle and centroid on the frame (Lines 66-68).

Finally, if the LED light is not already on, we trigger the alarm by sounding the buzzer and lighting up the LED (Lines 72-75).

We have now reached our last code block:

    # if the ball is not detected, turn off the LED
    elif ledOn:
        th.lights.green.off()
        ledOn = False

    # show the frame to our screen
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the 'q' key is pressed, stop the loop
    if key == ord("q"):
        break

# do a bit of cleanup
cv2.destroyAllWindows()
vs.stop()

Lines 78-80 handle the case where no green ball is detected but the LED light is still on, indicating that we should turn the alarm off.
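The ledOn flag acts as a latch: the buzzer fires only on the transition from "no ball" to "ball", and the LED is switched off only on the reverse transition. Here is a hardware-free sketch of that logic. FakeHat and update_alarm are hypothetical names used purely for illustration (they record actions instead of driving GPIO pins), and the per-frame detection is reduced to a single boolean.

```python
class FakeHat:
    """Stand-in for the TrafficHat that records actions instead of using GPIO."""

    def __init__(self):
        self.events = []

    def alarm(self):
        self.events.append("buzz + green on")

    def clear(self):
        self.events.append("green off")


def update_alarm(detected, led_on, hat):
    """Return the new LED state, acting only when the state changes."""
    if detected and not led_on:
        hat.alarm()
        return True
    if not detected and led_on:
        hat.clear()
        return False
    return led_on


hat = FakeHat()
ledOn = False
# one boolean per frame: was the ball detected?
for detected in [False, True, True, True, False, True]:
    ledOn = update_alarm(detected, ledOn, hat)

print(hat.events)
```

Without the flag, the buzzer would be re-triggered on every frame in which the ball is visible.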

Now that we are done processing our frame, we can display it to our screen on Lines 83 and 84.

If the q key is pressed, we break from the loop and perform a bit of cleanup.
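The & 0xFF in the key handling keeps only the lowest byte of the value returned by cv2.waitKey, so the comparison with ord("q") still works if a backend sets higher bits, and the -1 returned when no key is pressed becomes 255. A quick sketch with made-up return values (is_quit is a hypothetical helper):

```python
def is_quit(raw_key):
    """True if the low byte of a waitKey-style return value is 'q'."""
    return (raw_key & 0xFF) == ord("q")


print(is_quit(ord("q")))   # plain key code
print(is_quit(0x100071))   # same key with extra high bits set
print(is_quit(-1))         # no key pressed: -1 & 0xFF == 255
```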

Arming the alarm

To execute our Python script, simply issue the following command:

$ python pi_reboot_alarm.py

If you want to utilize the Raspberry Pi camera module rather than a USB webcam, then just append the --picamera 1 switch:

$ python pi_reboot_alarm.py --picamera 1

Below you can find a sample image from my output where the green ball is not present:

As the above figure demonstrates, there is no green ball in the image — and thus, the green LED is off.

However, once the green ball enters the view of the camera, OpenCV detects it, thereby allowing us to utilize GPIO programming to light up the green LED:

The full output of the script can be seen in the video below:

Summary

In this blog post, we learned how to utilize both OpenCV and the RPi.GPIO/GPIO Zero libraries to interact with each other and accomplish a particular task. Specifically, we built a simple “alarm system” using a Raspberry Pi 3, a TrafficHAT module, and color thresholding to detect the presence of a green ball.

OpenCV was used to perform the core video processing and detect the ball. Once the ball was detected, we raised an alarm by buzzing the buzzer and lighting up an LED on the TrafficHat.

Overall, my goal in this blog post was to demonstrate a straightforward computer vision application that blends OpenCV and GPIO libraries together. I hope it serves as a starting point for your future projects!

Next week we’ll learn how to take this example alarm program and make it run as soon as the Raspberry Pi boots up!

See you next week…
