Introduction to Pandas read_csv

In this tutorial, we delve into the powerful data manipulation capabilities of Python’s Pandas library, specifically focusing on the pandas read_csv function. By the end of this tutorial, you will have a thorough understanding of the pd.read_csv function, a versatile tool in the arsenal of any data scientist or analyst.

The pandas read_csv function is one of the most commonly used pandas functions, particularly for data preprocessing. It is invaluable for tasks such as importing data from CSV files into the Python environment for further analysis. This function is capable of reading a CSV file from both your local machine and from a URL directly. What’s more, using pandas to read csv files comes with a plethora of options to customize your data loading process to fit your specific needs.

We will explore the different parameters and options available in the pd.read_csv function, learn how to handle large datasets, and deal with different types of data. Whether you’re a beginner just starting out or a seasoned data science professional, understanding the pandas read csv function is crucial to efficient data analysis.

Unleash the power of the pandas read_csv function, and redefine the way you handle, manipulate, and analyze data.

Things to Be Aware of When Using Pandas Concat

When using the pandas read_csv function (pd.read_csv) to read a CSV file into a DataFrame, there are several important things to be aware of:

- Delimiter and Encoding: Always specify the appropriate delimiter and encoding parameters when using

pandas read_csv. The default delimiter is a comma, but CSV files can also use other delimiters like tabs or semicolons. Additionally, ensure the encoding matches the file’s encoding to correctly read special characters. - Handling Missing Data: Be mindful of how missing data is represented in your CSV file. By default,

pandas read_csvconsiders empty strings, NA, and NULL values as missing data. You can customize how missing values are handled using parameters likena_valuesandkeep_default_na. - Parsing Dates and Times: When working with date and time data in CSV files, specify the

parse_datesparameter inpandas read_csvto ensure the correct parsing of date and time columns. This will allow you to work with the data as datetime objects in the DataFrame.

By paying attention to these key considerations when using pandas read_csv, you can effectively use Pandas to read csv files into DataFrames while ensuring data integrity and proper handling of various data types.

Configuring Your Development Environment

To follow this guide, you need to have the Pandas library installed on your system.

Luckily, Pandas is pip-installable:

python pip install pandas

If you need help configuring your development environment for Pandas, we highly recommend that you read our pip install Pandas guide — it will have you up and running in minutes.

Need Help Configuring Your Development Environment?

All that said, are you:

- Short on time?

- Learning on your employer’s administratively locked system?

- Wanting to skip the hassle of fighting with the command line, package managers, and virtual environments?

- Ready to run the code immediately on your Windows, macOS, or Linux system?

Then join PyImageSearch University today!

Gain access to Jupyter Notebooks for this tutorial and other PyImageSearch guides pre-configured to run on Google Colab’s ecosystem right in your web browser! No installation required.

And best of all, these Jupyter Notebooks will run on Windows, macOS, and Linux!

Project Structure

We first need to review our project directory structure.

Start by accessing this tutorial’s “Downloads” section to retrieve the source code and example images.

From there, take a look at the directory structure:

tree . --dirsfirst . ├── data │ ├── large_video_game_sales.csv │ ├── movie_ratings.csv │ ├── odd_delimiter_sales.csv │ └── video_game_sales.csv └── pandas_read_csv_examples.py 2 directories, 5 files

Simple Example of Using pandas read_csv

This example demonstrates how to use pandas.read_csv to load a simple dataset. We will use a CSV file that contains movie ratings. The goal is to load this data into a pandas DataFrame and print basic information about the data.

# First, let's import the pandas package.

import pandas as pd

# Now, let's load a CSV file named 'movie_ratings.csv. It is a dataset of movie ratings from your favorite film review website.

# The dataset includes the columns 'Title', 'Year', 'Rating', and 'Reviewer'.

# Load the CSV file into a DataFrame.

movie_ratings = pd.read_csv('.data/movie_ratings.csv')

# Let's take a peek at the first few rows of our movie ratings to make sure everything looks good.

print(movie_ratings.head())

# What about the basic info of our dataset? Let's check the data types and if there are missing values.

print(movie_ratings.info())

Line 2: First, we import the pandas package using the pd alias. This package provides data structures and data analysis tools.

Line 8: A CSV file named ‘movie_ratings.csv’ is loaded into a DataFrame called ‘movie_ratings’. This file likely contains movie rating data with columns like ‘Title’, ‘Year’, ‘Rating’, and ‘Reviewer’.

Line 11: The print(movie_ratings.head()) function is used to display the first few rows of the ‘movie_ratings’ DataFrame. This provides a quick look at the data.

Line 14: The print(movie_ratings.info()) function is used to display basic information about the ‘movie_ratings’ DataFrame. This includes data types of columns and the presence of any missing values. This helps in understanding the structure and completeness of the dataset.

When you run this code, you’ll see an output similar to the following:

Title Year Rating Reviewer 0 The Shawshank Redemption 1994 9.3 John Doe 1 The Godfather 1972 9.2 Jane Smith 2 The Dark Knight 2008 9.0 Emily Johnson 3 Pulp Fiction 1994 8.9 Mike Brown 4 The Lord of the Rings: The Return of the King 2003 8.9 Sarah Davis RangeIndex: 5 entries, 0 to 4 Data columns (total 4 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Title 5 non-null object 1 Year 5 non-null int64 2 Rating 5 non-null float64 3 Reviewer 5 non-null object dtypes: float64(1), int64(1), object(2) memory usage: 292.0+ bytes None

This output show how pandas read_csv handles importing a csv file and how to display information about the csv data. Now let’s move on to some more advanced capabilities.

Skipping Unnecessary Rows with skiprows

The skiprows parameter in pd.read_csv allows you to skip specific rows while reading a CSV file. This is particularly useful for ignoring metadata, extra headers, or any irrelevant rows at the beginning of the file. By skipping these rows, you can focus on the actual data and clean up your dataset for further analysis.

Here’s an example of using skiprows:

# Import the pandas library

import pandas as pd

# Example CSV file with metadata in the first two rows

# We will skip the first two rows to read the actual data

data = pd.read_csv(

'./data/video_game_sales_with_metadata.csv',

skiprows=2

)

# Display the first few rows of the cleaned data

print(data.head())

When to Use skiprows

- Ignoring metadata or additional headers at the start of the file.

- Cleaning up messy datasets with unnecessary rows.

The result of the above code will skip the first two lines, ensuring only the main data is loaded into the DataFrame.

Advanced pandas read_csv feature using mixed data types, handling dates, and missing values.

We will explore more advanced features of pd.read_csv, we’ll use a dataset that includes mixed data types, handling dates, and missing values. We’ll focus on a dataset about video game sales, which includes release dates, platforms, sales figures, and missing values in some entries. This will allow us to demonstrate how to handle these complexities using pd.read_csv.

# Import pandas to handle our data

import pandas as pd

#We have a CSV file 'video_game_sales.csv' that contains complex data types and missing values.

# We're going to specify data types for better memory management and parsing dates directly.

video_games = pd.read_csv(

'./data/video_game_sales.csv',

parse_dates=['Release_Date'], # Parsing date columns directly

dtype={

'Name': str,

'Platform': str,

'Year_of_Release': pd.Int32Dtype(), # Using pandas' nullable integer type

'Genre': str,

'Publisher': str,

'NA_Sales': float,

'EU_Sales': float,

'JP_Sales': float,

'Other_Sales': float,

'Global_Sales': float

},

na_values=['n/a', 'NA', '--'] # Handling missing values marked differently

)

# Let's display the first few rows to check our data

print(video_games.head())

# Show information to confirm our types and check for any null values

print(video_games.info())

Line 2: We import the pandas library, which is a powerful tool for data manipulation and analysis in Python.

Lines 6-22: We create a DataFrame named video_games by reading data from a CSV file named ‘video_game_sales.csv’ using the pd.read_csv function. The parse_dates parameter is set to ['Release_Date'], which instructs pandas to parse the ‘Release_Date’ column as a datetime object. The dtype parameter is specified as a dictionary to define the data types of various columns. This helps in optimizing memory usage and ensures that each column is read with the appropriate type:

'Name': str (string)'Platform': str (string)'Year_of_Release': pd.Int32Dtype() (nullable integer type from pandas)'Genre': str (string)'Publisher': str (string)'NA_Sales': float (floating-point number)'EU_Sales': float (floating-point number)'JP_Sales': float (floating-point number)'Other_Sales': float (floating-point number)'Global_Sales': float (floating-point number)

The na_values parameter is used to specify additional strings that should be recognized as NaN (missing values) in the dataset. In this case, ‘n/a’, ‘NA’, and ‘–‘ are treated as missing values.

Line 25: We print the first few rows of the DataFrame video_games using the head() method to get a quick look at the data and verify that it has been loaded correctly.

Line 28: We print the information about the DataFrame using the info() method. This provides a summary of the DataFrame, including the column names, data types, and the number of non-null values in each column. This is useful to confirm that the data types are correctly set and to check for any missing values.

When you run this code, you’ll see the following output:

Name Platform Release_Date Genre Publisher NA_Sales EU_Sales JP_Sales Other_Sales Global_Sales 0 The Legend of Zelda Switch 2017-03-03 Adventure Nintendo 4.38 2.76 1.79 0.65 9.58 1 Super Mario Bros. NES 1985-09-13 Platform Nintendo 29.08 3.58 6.81 0.77 40.24 2 Minecraft PC 2011-11-18 Sandbox Mojang 6.60 2.28 0.25 0.79 9.92 3 NaN PC 2003-05-15 Strategy Unknown NaN 0.50 0.10 0.05 0.65 4 The Witcher 3 PS4 2015-05-19 Role-Playing CD Projekt 2.90 3.30 0.30 1.00 7.50 RangeIndex: 5 entries, 0 to 4 Data columns (total 10 columns): # Column Non-Null Count Dtype --- ------ -------------- ----- 0 Name 4 non-null object 1 Platform 5 non-null object 2 Release_Date 5 non-null datetime64[ns] 3 Genre 5 non-null object 4 Publisher 5 non-null object 5 NA_Sales 4 non-null float64 6 EU_Sales 5 non-null float64 7 JP_Sales 5 non-null float64 8 Other_Sales 5 non-null float64 9 Global_Sales 5 non-null float64 dtypes: datetime64[ns](1), float64(5), object(4) memory usage: 532.0+ bytes None

This output illustrates how ‘pandas read_csv’ handles importing a csv file that contains multiple data types, datetime and treating specific strings like ‘n/a’, ‘NA’, and ‘–‘ as missing values.

Practical Tips for Using pandas read_csv

We’ll cover how to read large datasets with chunking and handle non-standard CSV files that use different delimiters or encodings. These examples are highly relevant to readers dealing with diverse and potentially challenging datasets.

Example 1: Reading Specific Columns

The usecols parameter is crucial for optimizing memory usage and performance when dealing with large datasets by allowing users to load only the necessary columns. This can significantly improve efficiency, especially when working with datasets containing numerous columns. Below is a simple example to demonstrate how to use the usecols parameter with pd.read_csv

# Import the pandas library

import pandas as pd

# Assume we have a CSV file named 'large_video_game_sales.csv'

# We only need the columns 'Name', 'Platform', and 'Global_Sales'

# Load specific columns using the usecols parameter

specific_columns = pd.read_csv(

'./data/large_video_game_sales.csv',

usecols=['Name', 'Platform', 'Global_Sales']

)

# Display the first few rows to verify the data

print(specific_columns.head())

Why Use usecols?

By specifying only the required columns using usecols, you can:

- Reduce memory consumption by avoiding loading unnecessary data.

- Speed up the data import process, making it more efficient for large datasets.

Example 2: Using index_col to Set a Column as the Index

The index_col parameter in pd.read_csv allows you to specify a column to be used as the index of the resulting DataFrame. This is particularly helpful when one column in your dataset contains unique identifiers or meaningful labels, such as IDs, dates, or names. By setting such a column as the index, you can simplify data referencing and improve the readability of your DataFrame.

Here’s how to use the index_col parameter:

# Import the pandas library

import pandas as pd

# Example CSV file with a column containing unique IDs

# Set the 'Game_ID' column as the index

data = pd.read_csv(

'./data/video_game_sales.csv',

index_col='Game_ID'

)

# Display the first few rows of the DataFrame

print(data.head())

Benefits of Using index_col

- Enables direct referencing of rows using meaningful identifiers.

- Improves DataFrame organization for operations like grouping or merging.

- Facilitates easier data manipulation and slicing.

Additional Notes

If your dataset contains multiple columns you’d like to use as a hierarchical index, you can pass a list of column names to index_col.

data = pd.read_csv('./data/video_game_sales.csv', index_col=['Platform', 'Year_of_Release'])

This feature is essential for organizing and working efficiently with your data, making index_col a vital tool in the data scientist’s arsenal.

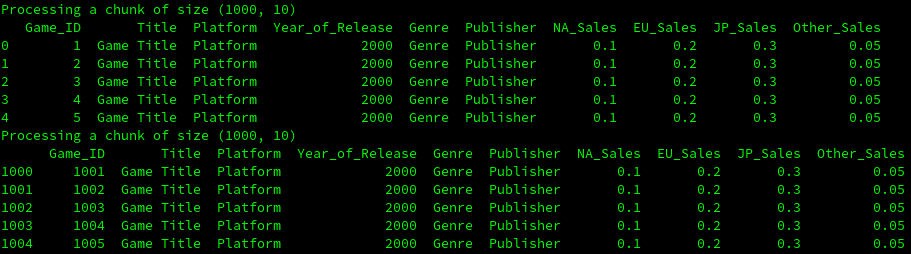

Example 3: Reading Large Datasets with Chunking

This technique is useful for managing memory when working with very large datasets. By specifying chunksize, pd.read_csv returns an iterable object, allowing you to process the data in manageable parts.

# Import the essential library

import pandas as pd

# Suppose we have a very large dataset 'large_video_game_sales.csv'.

# Reading it all at once might consume too much memory, so let's use chunking.

# We will read the file in chunks of 1000 rows at a time.

chunk_size = 1000

for chunk in pd.read_csv('large_video_game_sales.csv', chunksize=chunk_size):

# Process each chunk: here, we could filter data, perform calculations, or aggregate statistics.

# For demonstration, let's just print the size of each chunk and the first few rows.

print(f"Processing a chunk of size {chunk.shape}")

print(chunk.head())

The output will be displayed in chucks as follows:

Using the chuck parameter for pd.read_csv it allows you to more easily manage and process large amounts of csv data.

Example 4: Handling Non-Standard CSV Files

Handling files with different delimiters and encodings is common. Adjusting the delimiter and encoding parameters in pd.read_csv lets you adapt to these variations seamlessly.

Here is an example of non standard csv delimiter data being used in this example:

Game_ID;Title;Sales 1;Odd Game 1;500 2;Odd Game 2;400 3;Odd Game 3;300 4;Odd Game 4;200 5;Odd Game 5;100

# Reading a CSV file with non-standard delimiters and encoding issues can be tricky.

# Let's handle a file 'odd_delimiter_sales.csv' that uses semicolons ';' as delimiters and has UTF-16 encoding.

# Load the data with the correct delimiter and encoding

odd_sales_data = pd.read_csv('odd_delimiter_sales.csv', delimiter=';', encoding='utf-16')

# Let's check out the first few rows to ensure everything is loaded correctly.

print(odd_sales_data.head())

Line 5: On this line we utilize the pd.read_csv function from the pandas library to read a CSV file named ‘odd_delimiter_sales.csv’. The function is called with three arguments. The first argument is the file path as a string. The second argument specifies the delimiter used in the CSV file, which in this case is a semicolon (‘;’). The third argument sets the encoding to ‘UTF-16’ to handle any encoding issues.

On Line 8, we use the print function to display the first few rows of the DataFrame odd_sales_data. The head() method is called on odd_sales_data to retrieve the first five rows, which is the default behavior of this method. This allows us to verify that the data was loaded correctly.

When you run this code, you’ll see the following output:

Game_ID Title Sales 0 1 Odd Game 1 500 1 2 Odd Game 2 400 2 3 Odd Game 3 300 3 4 Odd Game 4 200 4 5 Odd Game 5 100

Now that you know the basics and some advanced techniques of how to use Pandas to read csv files. We are going to look into an alternative solution to pandas to read csv files.

Exploring Alternatives to Pandas read_csv

We are going to explore alternatives to using pandas read_csv, we’ll delve into using Dask. Dask is a powerful parallel computing library in Python that can handle large datasets efficiently, making it an excellent alternative for cases where Pandas might struggle with memory issues.

We will use the large dataset we created earlier for the chunking example (large_video_game_sales.csv) to demonstrate how Dask can be used for similar tasks but more efficiently in terms of memory management and parallel processing.

Using Dask to Read and Process Large Datasets

Here’s how you can use Dask to achieve similar functionality to pandas read_csv but with the capability to handle larger datasets more efficiently:

# First, we need to import Dask's DataFrame functionality

import dask.dataframe as dd

# We'll use the 'large_video_game_sales.csv' we prepared earlier.

# Load the dataset with Dask. It's similar to pandas but optimized for large datasets and parallel processing.

dask_df = dd.read_csv('large_video_game_sales.csv')

# For example, let's calculate the average North American sales and compute it.

na_sales_mean = dask_df['NA_Sales'].mean()

computed_mean = na_sales_mean.compute() # This line triggers the actual computation.

# Print the result

print("Average North American Sales:", computed_mean)

Why Dask is a Better Approach for Large Datasets

- Scalability: Dask can scale up to clusters of machines and handle computations on datasets that are much larger than the available memory, whereas pandas is limited by the size of the machine’s RAM.

- Lazy Evaluation: Dask operations are lazy, meaning they build a task graph and execute it only when you explicitly compute the results. This allows Dask to optimize the operations and manage resources more efficiently.

- Parallel Computing: Dask can automatically divide data and computation over multiple cores or different machines, providing significant speed-ups especially for large-scale data.

This makes Dask an excellent alternative to pd.read_csv when working with very large data sets or in distributed computing environments where parallel processing can significantly speed up data manipulations.

What's next? We recommend PyImageSearch University.

86+ total classes • 115+ hours hours of on-demand code walkthrough videos • Last updated: February 2026

★★★★★ 4.84 (128 Ratings) • 16,000+ Students Enrolled

I strongly believe that if you had the right teacher you could master computer vision and deep learning.

Do you think learning computer vision and deep learning has to be time-consuming, overwhelming, and complicated? Or has to involve complex mathematics and equations? Or requires a degree in computer science?

That’s not the case.

All you need to master computer vision and deep learning is for someone to explain things to you in simple, intuitive terms. And that’s exactly what I do. My mission is to change education and how complex Artificial Intelligence topics are taught.

If you're serious about learning computer vision, your next stop should be PyImageSearch University, the most comprehensive computer vision, deep learning, and OpenCV course online today. Here you’ll learn how to successfully and confidently apply computer vision to your work, research, and projects. Join me in computer vision mastery.

Inside PyImageSearch University you'll find:

- ✓ 86+ courses on essential computer vision, deep learning, and OpenCV topics

- ✓ 86 Certificates of Completion

- ✓ 115+ hours hours of on-demand video

- ✓ Brand new courses released regularly, ensuring you can keep up with state-of-the-art techniques

- ✓ Pre-configured Jupyter Notebooks in Google Colab

- ✓ Run all code examples in your web browser — works on Windows, macOS, and Linux (no dev environment configuration required!)

- ✓ Access to centralized code repos for all 540+ tutorials on PyImageSearch

- ✓ Easy one-click downloads for code, datasets, pre-trained models, etc.

- ✓ Access on mobile, laptop, desktop, etc.

Summary of pd.read_csv

In this tutorial, we delve into using pandas read_csv function to effectively manage CSV data. We start with a straightforward example, loading and examining a movie ratings dataset to demonstrate basic Pandas functions. We then advance to a video game sales dataset, where we explore more complex features such as handling mixed data types, parsing dates, and managing missing values.

We also provide practical advice on reading large datasets through chunking and tackling non-standard CSV files with unusual delimiters and encodings. These techniques are essential for dealing with diverse datasets efficiently.

Lastly, we introduce Dask as a robust alternative for processing large datasets, highlighting its advantages in scalability, lazy evaluation, and parallel computing. This makes Dask an excellent option for large-scale data tasks where Pandas may fall short.

This guide aims to equip you with the skills to enhance your data handling capabilities and tackle complex data challenges using Pandas. By mastering these steps, you’ll be well-equipped to handle CSV data efficiently in your data analysis projects. For more details on the pandas.read_csv function, refer to the official documentation.

To download the source code to this post (and be notified when future tutorials are published here on PyImageSearch), simply enter your email address in the form below!

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!

Comment section

Hey, Adrian Rosebrock here, author and creator of PyImageSearch. While I love hearing from readers, a couple years ago I made the tough decision to no longer offer 1:1 help over blog post comments.

At the time I was receiving 200+ emails per day and another 100+ blog post comments. I simply did not have the time to moderate and respond to them all, and the sheer volume of requests was taking a toll on me.

Instead, my goal is to do the most good for the computer vision, deep learning, and OpenCV community at large by focusing my time on authoring high-quality blog posts, tutorials, and books/courses.

If you need help learning computer vision and deep learning, I suggest you refer to my full catalog of books and courses — they have helped tens of thousands of developers, students, and researchers just like yourself learn Computer Vision, Deep Learning, and OpenCV.

Click here to browse my full catalog.