Table of Contents

- Thermal Vision: Night Object Detection with PyTorch and YOLOv5 (real project)

- Object Detection with Deep Learning Through PyTorch and YOLOv5

- Discovering FLIR Thermal Starter Dataset

- Thermal Object Detection Using PyTorch and YOLOv5

- Configuring Your Development Environment

- Having Problems Configuring Your Development Environment?

- Project Structure

- Summary

Thermal Vision: Night Object Detection with PyTorch and YOLOv5 (real project)

In today’s tutorial, you will detect objects in thermal images using Deep Learning with Python and OpenCV. As we have already seen, thermal cameras allow us to see in complete darkness, so we will learn how to detect objects regardless of the visible light conditions!

This lesson includes:

- Object Detection with Deep Learning through PyTorch and YOLOv5

- Discovering FLIR Thermal Starter Dataset

- Thermal Object Detection Using PyTorch and YOLOv5

This tutorial is the last of our 4-part course on Infrared Vision Basics:

- Introduction to Infrared Vision: Near vs. Mid-Far Infrared Images

- Thermal Vision: Measuring your First Temperature from an Image with Python and OpenCV

- Thermal Vision: Fever Detector with Python and OpenCV (starter project)

- Thermal Vision: Night Object Detection with PyTorch and YOLOv5 (real project) (today’s tutorial)

By the end of this lesson, you will know how to detect different objects in thermal images with Deep Learning in a quick, easy, and up-to-date way, using only four pieces of code!

To learn how to utilize YOLOv5 using your custom thermal imaging dataset, just keep reading.

Object Detection with Deep Learning Through PyTorch and YOLOv5

In our previous tutorial, we covered how we can apply, in a real solution, the temperature measured from a thermal image using Python, OpenCV, and a traditional Machine Learning method.

From that point on, and building on everything covered in this course, the PyImageSearch team encourages you to apply your imagination to any thermal imaging problem. Before you do, here is one more powerful, real-life example of combining Computer Vision and Thermal Imaging.

In this case, we will learn how computers can see in the dark and distinguish different object classes in real time.

Before starting this tutorial, for better comprehension, we encourage you to take the Torch Hub Series course at PyImageSearch University or gain some experience with PyTorch and Deep Learning. As in all PyImageSearch University courses, we will cover all aspects step by step.

As explained in Torch Hub Series #3: YOLOv5 and SSD — Models on Object Detection, YOLOv5 is the fifth version of YOLO (You Only Look Once, 2015; Figure 1), one of the most powerful state-of-the-art Convolutional Neural Network models for object detection. This fast object detector is usually trained on the COCO dataset, an open-access Microsoft RGB imaging database consisting of 330K images, 91 object classes, and 2.5 million labeled instances.

This strong combination makes YOLOv5 a very good fit for detecting objects even in our custom imaging datasets. To obtain a thermal object detector, we will use Transfer Learning (i.e., we will train the COCO-pre-trained YOLOv5 model on a real thermal imaging dataset collected specifically for self-driving car solutions).

Discovering FLIR Thermal Starter Dataset

The thermal imaging dataset that we are going to use to train our pre-trained YOLOv5 model is the free Teledyne FLIR ADAS Dataset.

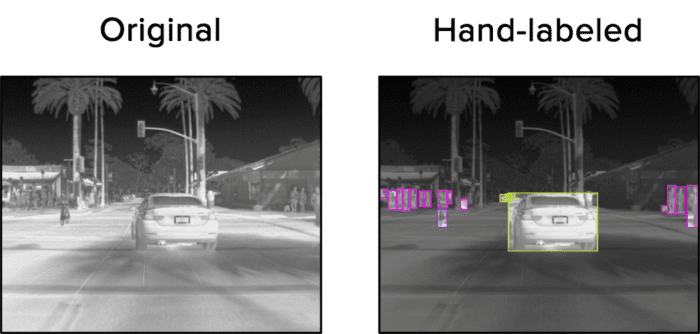

This dataset consists of 14,452 thermal images in gray8 and gray16 formats (the latter, as we have learned, lets us measure the temperature of any pixel). All 14,452 gray8 images, acquired on streets in California with a car-mounted thermal camera, are hand-labeled with bounding boxes, as Figure 2 shows. We will use these annotations (labels + bounding boxes) to detect the four object categories predefined in this dataset: car, person, bicycle, and dog.

Figure 2 caption (partial): car (yellow), person (pink), bicycle (purple), and dog (red).

A JSON file with the annotations in COCO format is provided. To simplify this tutorial, we give you the annotations in the YOLOv5 PyTorch format. You can find a labels folder with an individual annotation file for each gray8 image.
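If you prefer to start from the COCO-format JSON, the conversion to YOLOv5 labels is mostly a change of box convention. The sketch below assumes a COCO box given as `[x_min, y_min, width, height]` in pixels and produces a YOLOv5 label line `class x_center y_center width height`, normalized by the image size. The function names and the sample box are illustrative and are not part of the dataset tooling.

```python
def coco_to_yolo(bbox, img_w, img_h):
    """Convert a COCO box [x_min, y_min, w, h] in pixels to a YOLO box
    (x_center, y_center, w, h) with every value normalized to [0, 1]."""
    x_min, y_min, w, h = bbox
    x_center = (x_min + w / 2) / img_w
    y_center = (y_min + h / 2) / img_h
    return (x_center, y_center, w / img_w, h / img_h)


def yolo_label_line(class_id, bbox, img_w=640, img_h=512):
    """Build one line of a YOLOv5 label file for a single object."""
    x_c, y_c, w, h = coco_to_yolo(bbox, img_w, img_h)
    return f"{class_id} {x_c:.6f} {y_c:.6f} {w:.6f} {h:.6f}"


# Hypothetical 64 x 128 pixel box whose top-left corner is at (288, 192)
# in a 640 x 512 thermal frame; class id 1 is used only as an example.
print(yolo_label_line(1, [288, 192, 64, 128]))
# prints: 1 0.500000 0.500000 0.100000 0.250000
```

Each gray8 image then gets one text file with one such line per labeled object.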

We have also reduced the dataset to 1,772 images: 1,000 to train our pre-trained YOLOv5 model and 772 to validate it (i.e., roughly a 56-44% training-validation split). These images have been selected from the training portion of the original dataset.
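To make the split sizes concrete, here is a minimal sketch of a reproducible 1,000/772 split. It assumes a simple seeded shuffle; the tutorial does not describe how the images were actually selected, and the file names below are placeholders.

```python
import random


def split_train_val(items, n_train, seed=0):
    """Shuffle a copy of `items` with a fixed seed and cut it into
    a training list of `n_train` items and a validation list with the rest."""
    shuffled = sorted(items)          # sort first so the result is reproducible
    random.Random(seed).shuffle(shuffled)
    return shuffled[:n_train], shuffled[n_train:]


# Placeholder file names standing in for the 1,772 gray8 images.
names = [f"img_{i:05d}.jpeg" for i in range(1772)]
train, val = split_train_val(names, n_train=1000)

train_fraction = len(train) / len(names)
print(len(train), len(val), f"{train_fraction:.1%}")
```

With these sizes the training share is about 56%, which is where the approximate split quoted above comes from.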

Thermal Object Detection Using PyTorch and YOLOv5

Once we have learned all the concepts seen so far … let’s play!

Configuring Your Development Environment

To follow this guide, you need to have the OpenCV library installed on your system.

Luckily, OpenCV is pip-installable:

$ pip install opencv-contrib-python

If you need help configuring your development environment for OpenCV, we highly recommend that you read our pip install OpenCV guide — it will have you up and running in a matter of minutes.

Having Problems Configuring Your Development Environment?

All that said, are you:

- Short on time?

- Learning on your employer’s administratively locked system?

- Wanting to skip the hassle of fighting with the command line, package managers, and virtual environments?

- Ready to run the code right now on your Windows, macOS, or Linux system?

Then join PyImageSearch University today!

Gain access to Jupyter Notebooks for this tutorial and other PyImageSearch guides that are pre-configured to run on Google Colab’s ecosystem right in your web browser! No installation required.

And best of all, these Jupyter Notebooks will run on Windows, macOS, and Linux!

Project Structure

We first need to review our project directory structure.

Start by accessing this tutorial’s “Downloads” section to retrieve the source code and example images.

From there, take a look at the directory structure:

    $ tree --dirsfirst
    .
    └── yolov5
        ├── data
        ├── models
        ├── utils
        ├── CONTRIBUTING.md
        ├── Dockerfile
        ├── LICENSE
        ├── ...
        └── val.py

    1 directory, XX files

We set up this structure by cloning the official YOLOv5 repository.

    # clone the yolov5 repository from GitHub and install some necessary packages (requirements.txt file)
    !git clone https://github.com/ultralytics/yolov5
    %cd yolov5
    %pip install -qr requirements.txt

See the code on Lines 2 and 3 (the git clone and %cd commands).

Notice that we also installed the required libraries listed in the requirements.txt file (Line 4, the %pip command): Matplotlib, NumPy, OpenCV, PyTorch, etc.

In the yolov5 folder, we can find all the necessary files to use YOLOv5 in any of our projects:

- data: contains the information required to manage different datasets, such as COCO.

- models: contains all the YOLOv5 CNN structures in YAML format, a human-friendly data serialization language.

- utils: includes the Python files needed to manage the training, the dataset, the information visualization, and general project utilities.

The rest of the files in the yolov5 folder are required, but we will only run two of them:

- train.py: trains our model.

- detect.py: tests our model by inferring the detected objects.

Both files are part of the repository we cloned above.

The thermal_imaging_dataset folder includes our 1,772 gray8 thermal images. This folder contains the images (thermal_imaging_dataset/images) and the labels (thermal_imaging_dataset/labels), each split into training and validation sets in the train and val folders, respectively.
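Before training, it can be worth checking that every image has its label file. The sketch below assumes the layout described above (images/train, images/val, labels/train, labels/val) and that each label file shares its image's name with a .txt extension. The helper name and the list of image extensions are our own assumptions.

```python
from pathlib import Path

# Assumed image extensions; adjust to whatever the dataset actually uses.
IMAGE_EXTS = {".jpg", ".jpeg", ".png"}


def find_unlabeled(root, split):
    """Return the names of images in <root>/images/<split> that have no
    matching .txt file in <root>/labels/<split>."""
    root = Path(root)
    image_dir = root / "images" / split
    label_dir = root / "labels" / split
    missing = []
    for image_path in sorted(image_dir.iterdir()):
        if image_path.suffix.lower() not in IMAGE_EXTS:
            continue
        if not (label_dir / f"{image_path.stem}.txt").exists():
            missing.append(image_path.name)
    return missing


# Example usage (path is illustrative):
# for split in ("train", "val"):
#     print(split, find_unlabeled("thermal_imaging_dataset", split))
```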

The thermal_imaging_video_test.mp4 is the video file on which we will test our thermal object detection model. It contains 4,224 thermal frames acquired at 30 fps with scenes of streets and highways.
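As a quick sanity check, the frame count and frame rate quoted above give the length of the test video. This is plain arithmetic on the two numbers from the text.

```python
frames = 4224   # thermal frames in the test video
fps = 30        # acquisition rate in frames per second

seconds = frames / fps
minutes, remainder = divmod(seconds, 60)
print(f"{seconds:.1f} s = {int(minutes)} min {remainder:.1f} s")
# prints: 140.8 s = 2 min 20.8 s
```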

    # import PyTorch and check versions
    import torch
    from yolov5 import utils
    display = utils.notebook_init()

Open your yolov5.py file and import the required packages (Lines 7 and 8), then check your notebook setup (Line 9) if you are working with Jupyter Notebooks on Google Colab.

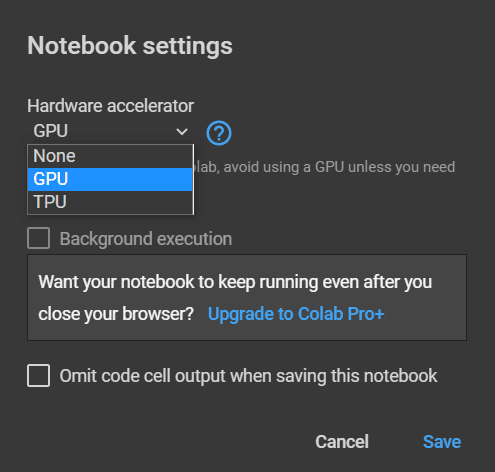

Check that your environment includes a GPU (Figure 3) so that the upcoming training process finishes in a reasonable time.
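You can also run the same check from code. The sketch below uses PyTorch's `torch.cuda.is_available()` and `torch.cuda.get_device_name()`. The helper name is ours, and the import is guarded so that the snippet still runs where PyTorch is not installed.

```python
def describe_device():
    """Return a short description of the device PyTorch would train on."""
    try:
        import torch  # installed through the YOLOv5 requirements.txt
    except ImportError:
        return "PyTorch is not installed in this environment"
    if torch.cuda.is_available():
        return f"GPU available: {torch.cuda.get_device_name(0)}"
    return "No GPU found, training would run on the CPU"


print(describe_device())
```

If the message reports no GPU on Google Colab, change the runtime type as described in the figure.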

Figure 3 caption (partial): Additional connection options and click View resources (top). Step 2: Check that your device has GPU RAM (middle); if not, follow the next step. Step 3: Click on Change runtime type (bottom-left). Step 4: Select GPU as a hardware accelerator (bottom-right).

Pre-Training

As we have already mentioned, we’ll use Transfer Learning to train our object detector model on our thermal imaging dataset using the YOLOv5 CNN architecture pre-trained on the COCO dataset as a starting point.

For this purpose, we select the YOLOv5s version of the pre-trained model because of its good trade-off between speed and accuracy.

Training

After setting up the environment and fulfilling all the requirements, let’s train our pre-trained model!

    # train pretrained YOLOv5s model on the custom thermal imaging dataset,
    # basic parameters:
    # - image size (img): image size of the thermal dataset is 640 x 512, 640 passed
    # - batch size (batch): 16 by default, 16 passed
    # - epochs (epochs): number of epochs, 30 passed
    # - dataset (data): dataset in .yaml file format, custom thermal image dataset passed
    # - pre-trained YOLOv5 model (weights): YOLOv5 model version, YOLOv5s (small version) passed
    !python train.py --img 640 --batch 16 --epochs 30 --data thermal_image_dataset.yaml --weights yolov5s.pt

On Line 18, after importing PyTorch and the YOLOv5 utils (Lines 7-9), we run the train.py file with the following parameters:

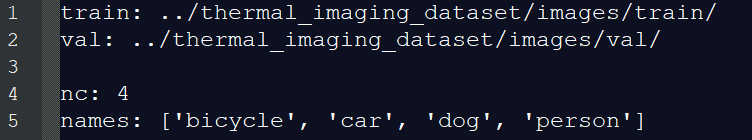

- img: size of the training images passed through our model. In our case, the thermal images have a 640x512 resolution, so we indicate the larger dimension, 640 pixels.

- batch: batch size. We set a batch size of 16 images.

- epochs: training epochs. After some tests, we settled on 30 epochs as a good number of passes over the training set.

- data: YAML dataset file. Figure 4 shows our dataset file. It points to the YOLOv5 dataset structure explained previously: thermal_imaging_dataset/images/train and thermal_imaging_dataset/labels/train for training, and thermal_imaging_dataset/images/val and thermal_imaging_dataset/labels/val for validation. It also indicates the number of classes, nc: 4, and the class names, names: ['bicycle', 'car', 'dog', 'person']. This YAML dataset file should be located in yolov5/data.

- weights: weights of the pre-trained model, in our case, YOLOv5s pre-trained on the COCO dataset. The yolov5s.pt file is the pre-trained model that contains these weights and is located in yolov5/models.

Figure 4 caption (partial): thermal_image_dataset.yaml. It contains the thermal imaging dataset path, the number of classes, and the class names.

That’s all we need to train our model!
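For readers who cannot see the figure, here is a rough sketch of what such a dataset file could look like, written out from Python. The `nc` and `names` values come from the text above. The `path`, `train`, and `val` entries are assumptions about where the dataset sits relative to the yolov5 folder, so adjust them to your own layout.

```python
from pathlib import Path

# Sketch of a YOLOv5 dataset file. The path values are assumptions,
# while nc and names are the values quoted in this tutorial.
DATASET_YAML = """\
path: ../thermal_imaging_dataset  # dataset root (assumed location)
train: images/train               # training images, relative to path
val: images/val                   # validation images, relative to path

nc: 4                             # number of classes
names: ['bicycle', 'car', 'dog', 'person']  # list index = class id
"""


def write_dataset_yaml(target_dir):
    """Write the dataset file into target_dir and return its path."""
    target = Path(target_dir) / "thermal_image_dataset.yaml"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_text(DATASET_YAML)
    return target


# Example usage from inside the yolov5 folder:
# write_dataset_yaml("data")
```

YOLOv5 finds the label files on its own by swapping images for labels in each image path, which is why only the image folders are listed.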

Let’s check out the results!

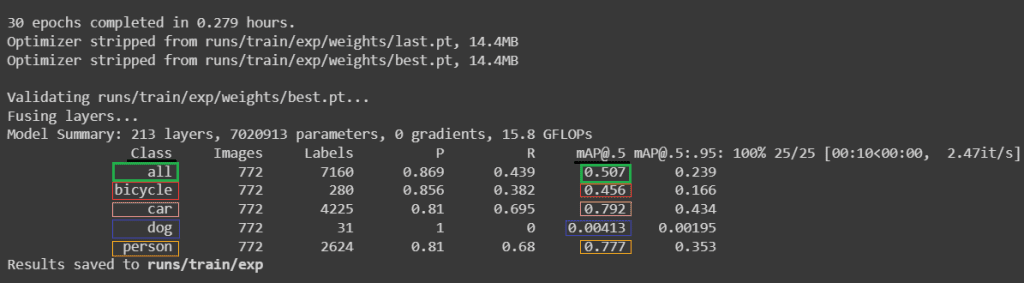

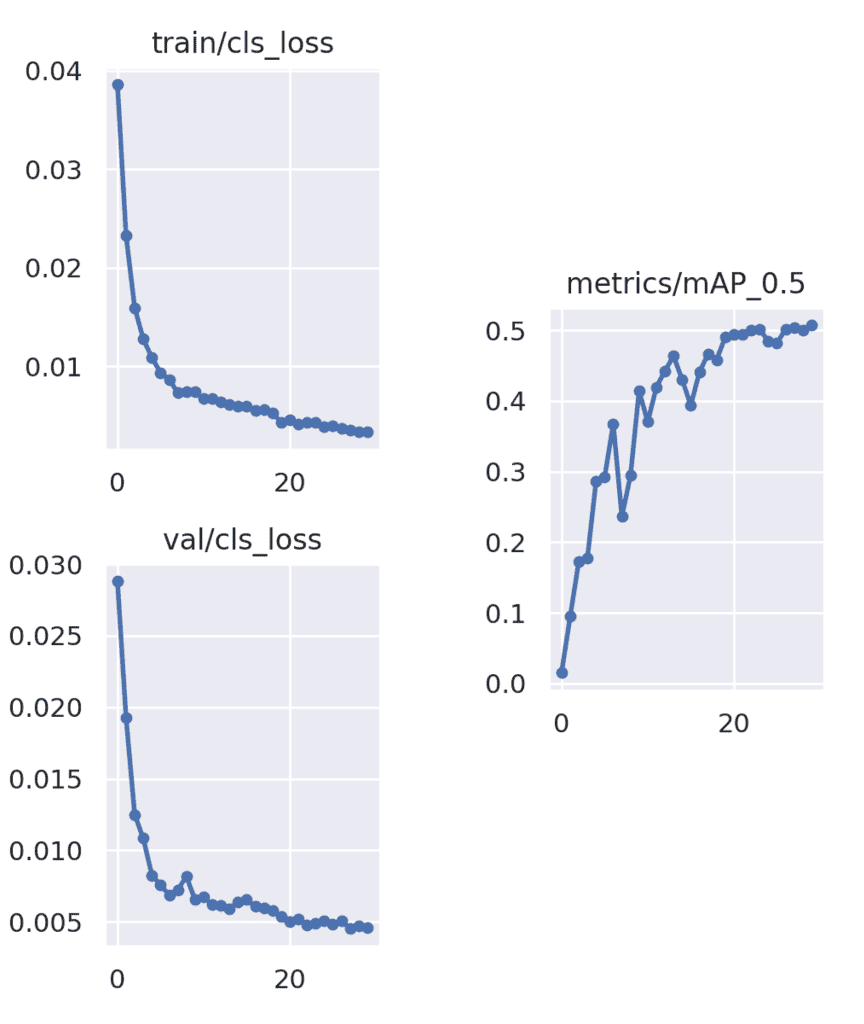

After 30 epochs, completed in 0.279 hours on an NVIDIA Tesla T4 GPU, our model has learned to detect the classes person, car, bicycle, and dog, achieving a mean Average Precision of 50.7%, mAP (IoU = 0.5) = 0.507, as Figure 5 shows. This value is the Average Precision averaged over all our classes, where a prediction counts as correct if its Intersection over Union (IoU, Figure 6) with the hand-labeled box is 0.5 or higher.

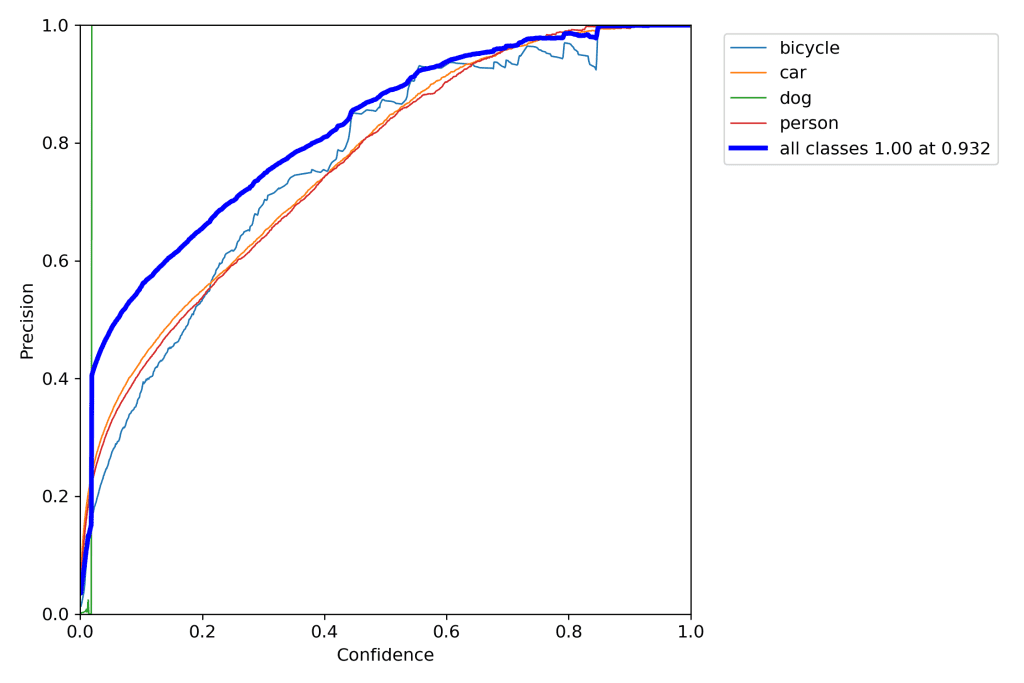

Figure caption (partial): bicycle (red), car (pink), dog (blue), and person (yellow). As you can deduce, our bicycle and dog classes are underrepresented, with mAP bicycle (IoU = 0.5) = 0.456 and mAP dog (IoU = 0.5) = 0.004, respectively.

As shown in Figure 6, the Intersection over Union (IoU) measures the overlap between the hand-labeled bounding box and the predicted one: the area of their intersection divided by the area of their union.

So, for our person class, our model reaches an Average Precision of 77.7%, counting a prediction as correct when its bounding box overlaps the hand-labeled one with an IoU of 50% or higher.
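To see how the 50% criterion works in practice, here is a minimal IoU sketch. It assumes boxes given as `(x1, y1, x2, y2)` corner coordinates in pixels; the two sample boxes are made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over Union of two boxes given as (x1, y1, x2, y2)."""
    # Corners of the overlapping rectangle (if there is one).
    ix1 = max(box_a[0], box_b[0])
    iy1 = max(box_a[1], box_b[1])
    ix2 = min(box_a[2], box_b[2])
    iy2 = min(box_a[3], box_b[3])

    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


hand_labeled = (100, 100, 200, 200)   # hypothetical ground-truth box
predicted = (150, 100, 250, 200)      # hypothetical prediction, shifted right

score = iou(hand_labeled, predicted)
print(f"IoU = {score:.3f}, correct at IoU >= 0.5: {score >= 0.5}")
# prints: IoU = 0.333, correct at IoU >= 0.5: False
```

Here half of each box overlaps the other, yet the IoU is only one third, so this prediction would not count as correct at the 0.5 threshold.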

Figure 7 compares two original images, their hand-labeled bounding boxes, and their predicted results.

Figure 7 caption (partial): car (pink), person (yellow), and bicycle (red).

Although it is beyond the scope of this tutorial, it is important to note that our dataset is highly unbalanced, with only 280 and 31 labels for our bicycle and dog classes, respectively. That is why we obtain mAP bicycle (IoU = 0.5) = 0.456 and mAP dog (IoU = 0.5) = 0.004, respectively.
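The text quotes per-class values for person, bicycle, and dog, but not for car. If we assume that the overall mAP is the plain (unweighted) mean of the four per-class values, we can estimate the missing one. This is a back-of-the-envelope estimate from rounded numbers, not a reported result.

```python
# Values quoted in this tutorial (rounded to three decimals).
reported = {"person": 0.777, "bicycle": 0.456, "dog": 0.004}
overall_map = 0.507
num_classes = 4

# Assumption: overall mAP = mean of the four per-class values,
# so the missing value is what is left after removing the known ones.
implied_car = round(num_classes * overall_map - sum(reported.values()), 3)
print(f"implied car value at IoU = 0.5: about {implied_car}")
```

Under that assumption, the car class lands close to the person class, which also shows how strongly the two underrepresented classes pull the average down.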

Finally, to verify our results, Figure 8 shows the Classification Loss during the training (top-left) and the validation (bottom-left) processes, and the mean Average Precision at IoU 50% (middle-right), mAP (IoU = 0.5) for all the classes through the 30 epochs.

But now, let’s test our model!

Testing

For this purpose, we will use the thermal_imaging_video_test.mp4, located at the project’s root, passing it through the layers of our model using the Python file detect.py.

    # test the trained model (best.pt) on a thermal imaging video,
    # parameters:
    # - trained model (weights): model trained in the previous step, runs/train/exp/weights/best.pt passed
    # - image size (img): frame size of the thermal video is 640 x 512, 640 passed
    # - confidence (conf): confidence threshold, only the inferences higher than this value will be shown, 0.35 passed
    # - video file (source): thermal imaging video, thermal_imaging_video.mp4 passed
    !python detect.py --weights runs/train/exp/weights/best.pt --img 640 --conf 0.35 --source ../thermal_imaging_video.mp4

Line 27 shows how to do it.

We run detect.py with the following parameters:

- weights: points to our trained model, whose computed weights are stored in the best.pt file (runs/train/exp/weights/best.pt).

- img: size of the test images passed through our model. In our case, the thermal frames from our video have a 640x512 resolution, so we indicate the larger dimension, 640 pixels.

- conf: confidence threshold for each detection. Only detections whose confidence is above this threshold are considered correct and therefore shown. We set the threshold at 35%.

- source: images to test the model on, in our case, the video file thermal_imaging_video.mp4.
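The effect of the conf parameter can be pictured with a tiny filter. This is only a sketch of the idea, not the code inside detect.py, and the detections listed are invented for the example.

```python
def filter_by_confidence(detections, conf_threshold=0.35):
    """Keep only the detections whose confidence is above the threshold."""
    return [d for d in detections if d["conf"] > conf_threshold]


# Hypothetical raw detections for a single thermal frame.
raw = [
    {"label": "car", "conf": 0.91},
    {"label": "person", "conf": 0.48},
    {"label": "bicycle", "conf": 0.35},
    {"label": "dog", "conf": 0.12},
]

shown = filter_by_confidence(raw, conf_threshold=0.35)
print([d["label"] for d in shown])
# prints: ['car', 'person']
```

Raising the threshold hides more uncertain boxes, while lowering it shows more detections at the cost of more false alarms.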

Let’s test it!

Figure 9 shows a GIF of our good results!

As we have indicated, the night object detection in this video was obtained with a 35% confidence threshold. To adjust this threshold, we should check the curve in Figure 10, where Precision is plotted against Confidence.
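A Precision-Confidence curve is built by asking, for each threshold, what fraction of the detections that survive it are correct. The sketch below illustrates this with a handful of invented detections, each marked as correct or not. It is not the YOLOv5 plotting code.

```python
def precision_at(detections, threshold):
    """Precision among detections whose confidence exceeds the threshold.
    Each detection is a (confidence, is_correct) pair. Returns None when
    no detection survives the threshold."""
    kept = [is_correct for conf, is_correct in detections if conf > threshold]
    if not kept:
        return None
    return sum(kept) / len(kept)


# Hypothetical detections: (confidence, matches a hand-labeled box?)
sample = [(0.9, True), (0.8, True), (0.6, False),
          (0.5, True), (0.4, False), (0.3, False)]

for threshold in (0.35, 0.55, 0.70):
    print(threshold, precision_at(sample, threshold))
```

In this toy example, precision rises from 0.6 at a threshold of 0.35 to 1.0 at 0.70, but fewer and fewer detections are kept, which is the trade-off to weigh when choosing the threshold.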

Summary

We would like to acknowledge the great work of Ultralytics. We found their train.py and detect.py files so great we included them in this post.

In this tutorial, we have learned how to detect different objects under any light condition, combining Thermal Vision and Deep Learning, using the CNN YOLOv5 architecture and our custom thermal imaging dataset.

For this purpose, we have discovered how to train the state-of-the-art YOLOv5 model, previously trained using the Microsoft COCO dataset, on the FLIR Thermal Starter Dataset.

Even though the thermal images are completely different from common RGB images of the COCO dataset, the great performance and results obtained show how powerful the YOLOv5 model is.

We can conclude that Artificial Intelligence is opening up impressive and useful possibilities nowadays.

This tutorial shows you how to apply Thermal Vision and Deep Learning in real applications (e.g., Self-Driving Cars). If you would like to learn about this awesome topic, check out the Autonomous Car courses at PyImageSearch University.

The PyImageSearch team hopes that you have enjoyed and internalized all the concepts taught during this Infrared Vision Basics course.

See you in the next courses!

Citation Information

Garcia-Martin, R. “Thermal Vision: Night Object Detection with PyTorch and YOLOv5 (real project),” PyImageSearch, P. Chugh, A. R. Gosthipaty, S. Huot, K. Kidriavsteva, and R. Raha, eds., 2022, https://pyimg.co/p2zsm

    @incollection{RGM_2022_PYTYv5,
      author = {Raul Garcia-Martin},
      title = {Thermal Vision: Night Object Detection with {PyTorch} and {YOLOv5} (real project)},
      booktitle = {PyImageSearch},
      editor = {Puneet Chugh and Aritra Roy Gosthipaty and Susan Huot and Kseniia Kidriavsteva and Ritwik Raha},
      year = {2022},
      note = {https://pyimg.co/p2zsm},
    }
