Neural Machine Translation

In this tutorial, you will learn the core concepts of Neural Machine Translation and get a primer on attention.

This lesson is the last in a 3-part series on NLP 102:

- Introduction to Recurrent Neural Networks with Keras and TensorFlow

- Long Short-Term Memory Networks

- Neural Machine Translation (today’s tutorial)

To learn how neural machine translation works and the math behind it, just keep reading.

Introduction

Imagine you find an article about a really interesting topic on the internet. As luck would have it, it is not in your mother tongue or a language you are comfortable conversing in. You chuckle to yourself but then find an option to translate the text (as shown in Figure 1).

You thank the technology gods, read the article, and move on with your day. But something clicks: the webpage may have been more than 2,500 words long, yet the translation happened in a matter of seconds. So, unless there is a super-fast person smashing keyboards somewhere inside the browser, this must have been done by an algorithm.

But how is the algorithm so accurate? What makes such an algorithm perform robustly in any language in the world?

This is a special area of Natural Language Processing known as Neural Machine Translation, defined as the act of translation with the help of an artificial neural network.

In this tutorial, we will learn about:

- How Neural Machine Translation works

- An overview of two very important papers for this task

- The dataset we will be using in later tutorials

Configuring Your Development Environment

To follow this guide, you need to have the TensorFlow and TensorFlow Text libraries installed on your system.

Luckily, both are pip-installable:

$ pip install tensorflow

$ pip install tensorflow-text

Probabilistic Overview of Neural Machine Translation

If that sounds like a mouthful, don’t worry. We promise all of this will make sense in a minute. But before we go any further, let us first take a moment to refresh our memory of conditional probability.

$P(Y \mid X)$ refers to the probability of the event $Y$ occurring given $X$ has occurred. Now imagine $F$ and $E$ as sequences of French and English words, respectively. If we apply the same definition of conditional probability here, it will mean $P(F \mid E)$ is the probability of a sequence of words in French ($F$) occurring, given there is a sequence of words in English ($E$).

This means the task of translation (from English to French) is to maximize this probability $P(F \mid E)$, as shown in Figure 3.

The neural network’s task is to learn the conditional distribution, and then when a source sentence is given, search for an appropriate target sentence by maximizing this conditional probability.
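To make "search for a target sentence by maximizing the conditional probability" concrete, here is a toy sketch. The probability table and the helper name `translate` are hypothetical and exist only for illustration; a real NMT model learns this distribution from data rather than storing it in a lookup table.

```python
# Hypothetical conditional distribution P(F | E) for a single source sentence.
# The numbers are invented for illustration; a trained model would produce them.
conditional_probs = {
    "I am a student": {
        "Je suis étudiant": 0.70,
        "Je suis un étudiant": 0.25,
        "Je étudiant suis": 0.05,
    }
}


def translate(source, table):
    """Return the candidate F that maximizes P(F | E = source)."""
    candidates = table[source]
    return max(candidates, key=candidates.get)


best = translate("I am a student", conditional_probs)
print(best)
```

In practice the set of candidate sentences is far too large to enumerate, which is why the decoder builds the target sentence one word at a time.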

Math Behind Neural Machine Translation

Neural Machine Translation (NMT) is the process of leveraging an artificial neural network to maximize this conditional probability.

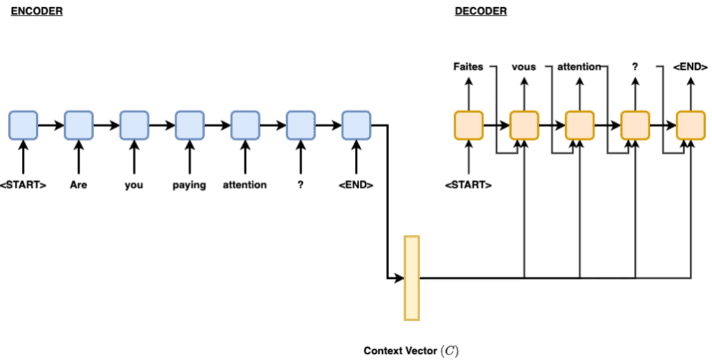

An NMT architecture usually comprises an encoder and a decoder, as shown in Figure 4.

Before Bahdanau and Luong, the encoder and decoder used only recurrence to solve the machine translation task. In this section, we will discuss the math behind modeling translation using only RNNs as encoders and decoders.

Let us consider the equation of the hidden state of the RNN in the encoder.

$$h_t = f(x_t, h_{t-1})$$

Here $f$ is a network (can be an RNN, LSTM, or GRU). The main motivation here is to understand that the current hidden state ($h_t$) depends on the current input ($x_t$) and the previous hidden state ($h_{t-1}$). This recurrence, where the output of one cell feeds into the next, has already been explained in our Introduction to RNN blog post. We advise you to quickly read our RNN series (if not done already) to get a primer on the same.
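The recurrence $h_t = f(x_t, h_{t-1})$ can be sketched in a few lines of plain Python. This is a minimal sketch that assumes $f$ is a vanilla RNN cell with a tanh activation and no bias; the function names and the toy weights are our own and are not taken from any library.

```python
import math


def rnn_step(x_t, h_prev, W_x, W_h):
    """One vanilla RNN step: h_t = tanh(W_x @ x_t + W_h @ h_prev)."""
    h_t = []
    for row_x, row_h in zip(W_x, W_h):
        pre = sum(w * x for w, x in zip(row_x, x_t))
        pre += sum(w * h for w, h in zip(row_h, h_prev))
        h_t.append(math.tanh(pre))
    return h_t


def encode(inputs, W_x, W_h):
    """Run the recurrence over a sequence and return every hidden state."""
    h = [0.0] * len(W_h)  # h_0 is initialized to zeros
    states = []
    for x_t in inputs:
        h = rnn_step(x_t, h, W_x, W_h)
        states.append(h)
    return states


# Toy weights (assumed values): 2-dimensional inputs and hidden state.
W_x = [[0.5, -0.3], [0.1, 0.8]]
W_h = [[0.2, 0.0], [0.0, 0.2]]
sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

hidden_states = encode(sequence, W_x, W_h)
print(len(hidden_states))  # one hidden state per input token
```

Each call to `rnn_step` sees only the current input and the previous hidden state, which is exactly the dependency the equation describes.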

The encoder in NMT creates a bottleneck fixed-size vector (context vector, $c$) from all the hidden states of the encoder. The context vector ($c$) will be used by the decoder to get to the target sequence.

$$c = q(\{h_1, \dots, h_{T_x}\})$$

$q$ can be any non-linearity. You will most likely find $c$ to be the last hidden state, $h_{T_x}$.
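As a small sketch of what $q$ might look like, the snippet below builds a context vector from a list of encoder hidden states in two ways. `last_state` is the common choice mentioned above; `mean_state` is a purely illustrative alternative that we add for contrast. Both function names and the toy hidden states are assumptions.

```python
def last_state(hidden_states):
    """q returns the final hidden state (the common choice)."""
    return hidden_states[-1]


def mean_state(hidden_states):
    """An illustrative alternative q: average the hidden states element-wise."""
    T = len(hidden_states)
    return [sum(values) / T for values in zip(*hidden_states)]


# Hypothetical encoder hidden states for a 3-token sentence.
hidden_states = [[0.1, 0.4], [0.3, -0.2], [0.5, 0.1]]

c_last = last_state(hidden_states)
c_mean = mean_state(hidden_states)
print(len(c_last), len(c_mean))  # both have the hidden size, not the sequence length
```

Whichever $q$ is used, the result has the same size no matter how long the input sentence is, which is what makes it a bottleneck.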

The decoder predicts the next word $y_t$ given the context vector ($c$) and all the previously predicted words $\{y_1, y_2, \dots, y_{t-1}\}$.

Now let us rewrite the probabilistic equation.

$$p(y) = \prod_{t=1}^{T_y} p(y_t \mid \{y_1, \dots, y_{t-1}\}, c)$$

$$p(y_t \mid \{y_1, \dots, y_{t-1}\}, c) = g(y_{t-1}, s_t, c)$$

$s_t = f(y_{t-1}, s_{t-1}, c)$ is the hidden state of the decoder. Just like the hidden state of the encoder, $f$ can be any recurrent architecture (RNN, LSTM, or GRU).

$g$ can be any non-linearity that outputs the probability of the next word given all the previously generated words and the context vector.

For a translation task, we have to generate target words that maximize the conditional probability $p(y \mid x)$.
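The factorization of the sentence probability into per-word probabilities can be sketched with a toy greedy decoder. This is a simplification under stated assumptions: the hypothetical `next_word` table stands in for the decoder output and conditions only on the previous word, whereas a real decoder also uses its hidden state and the context vector. Greedy decoding is also only an approximation to finding the most probable sentence.

```python
import math

# Hypothetical stand-in for the decoder output: a next-word distribution
# keyed by the previous word. The probabilities are invented.
next_word = {
    "<start>": {"je": 0.9, "tu": 0.1},
    "je": {"suis": 0.8, "<end>": 0.2},
    "suis": {"<end>": 0.95, "je": 0.05},
}


def greedy_decode(table, max_len=10):
    """Pick the most probable word at each step and accumulate log p(y)."""
    word, output, log_prob = "<start>", [], 0.0
    for _ in range(max_len):
        dist = table[word]
        word = max(dist, key=dist.get)
        log_prob += math.log(dist[word])  # chain rule in log space
        if word == "<end>":
            break
        output.append(word)
    return output, log_prob


words, log_prob = greedy_decode(next_word)
print(words, math.exp(log_prob))
```

Summing log-probabilities is equivalent to multiplying the per-step probabilities, and it avoids numerical underflow on long sentences.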

TL;DR: Essentially, a variable-length sequence is passed to an encoder, which squishes the representation of the entire sequence into a fixed-size context vector. This context vector is then passed to the decoder, which converts it into the target sequence.

What Lies Ahead?

In the introduction, we mentioned two seminal papers:

- Neural Machine Translation by Jointly Learning to Align and Translate: In this paper, the authors argue that encoding a variable-length sequence into a fixed-length context vector would deteriorate the performance of the translation.

To counter the problem, they propose a soft-attention scheme. With the attention in place, the context vector would now have a complete overview of the entire input sequence.

For every word translated, a dynamic context vector is built just for the current translation.

- Effective Approaches to Attention-Based Neural Machine Translation: In this paper, the authors improve on several key aspects of its predecessor (Neural Machine Translation by Jointly Learning to Align and Translate).

These include introducing a unidirectional RNN for the encoder and multiplicative attention in place of additive attention. This paper aimed to build a better and more effective (as the name suggests) approach toward attention-based Neural Machine Translation.
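To give a feel for the difference between the two papers, here is a minimal sketch of an additive (Bahdanau-style) score, a multiplicative dot-product (Luong-style) score, and the dynamic context vector built from the resulting attention weights. The function names, weight matrices, and toy values are our own assumptions. Only the dot-product variant of multiplicative attention is shown, and the full models in both papers contain more than this.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(M, v):
    return [dot(row, v) for row in M]


def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def additive_score(s, h, W_s, W_h, v):
    """Additive (Bahdanau-style) score: v^T tanh(W_s s + W_h h)."""
    hidden = [math.tanh(a + b) for a, b in zip(matvec(W_s, s), matvec(W_h, h))]
    return dot(v, hidden)


def multiplicative_score(s, h):
    """Multiplicative (Luong-style) dot score: s^T h."""
    return dot(s, h)


def context_vector(weights, encoder_states):
    """Weighted sum of the encoder states using the attention weights."""
    dim = len(encoder_states[0])
    return [sum(w * h[i] for w, h in zip(weights, encoder_states))
            for i in range(dim)]


# Toy values (assumed): three encoder states and one decoder state.
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [0.5, 2.0]

scores = [multiplicative_score(decoder_state, h) for h in encoder_states]
weights = softmax(scores)
c_t = context_vector(weights, encoder_states)
print(weights, c_t)
```

Because the weights are recomputed for every decoder step, each translated word gets its own context vector instead of sharing one fixed bottleneck.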

Dataset

Are you wondering how to code this up and have your own translation algorithm?

We will use the dataset introduced below in two upcoming blog posts, where we discuss the Bahdanau and Luong attentions.

Since this is a text translation task, we need pairs of sentences in two languages. We are using the French to English dataset from http://www.manythings.org/anki/

You can download the dataset in a Colab Notebook or your local system using the following code snippet:

$ wget https://www.manythings.org/anki/fra-eng.zip

$ unzip fra-eng.zip

$ rm _about.txt fra-eng.zip

We will expand on loading and processing the dataset in upcoming tutorials.
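Until then, here is a rough sketch of how the sentence pairs could be read. It assumes that each line of the extracted text file holds an English sentence, its French translation, and an attribution field, separated by tabs. The helper name `parse_pairs` and the sample lines are hypothetical, so check the format of your downloaded file before relying on this.

```python
def parse_pairs(lines):
    """Split tab-separated lines into (english, french) pairs."""
    pairs = []
    for line in lines:
        fields = line.rstrip("\n").split("\t")
        if len(fields) < 2:
            continue  # skip blank or malformed lines
        pairs.append((fields[0], fields[1]))
    return pairs


# Sample lines in the assumed format: English <TAB> French <TAB> attribution.
sample = [
    "Go.\tVa !\tattribution text\n",
    "Hi.\tSalut !\tattribution text\n",
    "\n",
]

pairs = parse_pairs(sample)
print(pairs)
```

With the real dataset, you would pass an open file handle instead of `sample`, for example `parse_pairs(open("fra.txt", encoding="utf-8"))`, where the filename `fra.txt` is our assumption about what the archive extracts to.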

Summary

This tutorial introduced Neural Machine Translation. We learned how Neural Machine Translation can be expressed in probabilistic terms and saw how NMT architectures are usually designed in the field.

Next, we will learn about the Bahdanau and Luong attentions and their code implementations in TensorFlow and Keras.

Citation Information

A. R. Gosthipaty and R. Raha. “Neural Machine Translation,” PyImageSearch, P. Chugh, S. Huot, K. Kidriavsteva, and A. Thanki, eds., 2022, https://pyimg.co/4yi97

@incollection{ADR_2022_NMT,

author = {Aritra Roy Gosthipaty and Ritwik Raha},

title = {Neural Machine Translation},

booktitle = {PyImageSearch},

editor = {Puneet Chugh and Susan Huot and Kseniia Kidriavsteva and Abhishek Thanki},

year = {2022},

note = {https://pyimg.co/4yi97},

}
