In this tutorial, you will learn how to OCR video streams.

This lesson is part 3 of a 4-part series on Optical Character Recognition with Python:

- Multi-Column Table OCR

- OpenCV Fast Fourier Transform (FFT) for Blur Detection in Images and Video Streams

- OCR’ing Video Streams (this tutorial)

- Improving Text Detection Speed with OpenCV and GPUs

OCR’ing Video Streams

In our previous tutorial, you learned how to use the fast Fourier transform (FFT) to detect blur in images and documents. Using this method, we were able to detect blurry, low-quality images and then alert the user that they should attempt to capture a higher-quality version so that we can OCR it.

Remember, it’s always easier to write computer vision code that operates on high-quality images than low-quality images. Using the FFT blur detection method helps ensure that only higher-quality images enter our pipeline.

However, there is another use of the FFT blur detector — it can be used to discard low-quality frames from video streams that would be otherwise impossible to OCR.

Video streams naturally have low-quality frames due to rapid changes in lighting conditions (e.g., walking on a bright sunny day into a dark room), the camera lens autofocusing, or most commonly, motion blur.

It would be near impossible to OCR these frames. So instead of attempting to OCR every frame in a video stream (which would lead to nonsensical results for the low-quality frames), we could instead simply detect that the frame was blurry, ignore it, and then only OCR the high-quality frames?

Is such an implementation possible?

You bet it is — and we’ll be covering how to apply our blur detector to OCR video streams in the remainder of this tutorial.

Inside this tutorial, you will:

- Learn how to OCR video streams

- Apply our FFT blur detector to detect and discard blurry, low-quality frames

- Build an output visualization script that shows the stages of OCR’ing video streams

- Put all the pieces together and fully implement OCR in video streams

OCR’ing Real-Time Video Streams

In the first part of this tutorial, we will review our project directory structure.

We’ll then implement a simple video writer utility class. This class will allow us to create an output video of our blur detection and OCR results from an input video.

Given our video writer helper function, we’ll then implement our driver script to apply OCR to video streams.

We’ll wrap up this tutorial with a discussion of our results.

To learn how to OCR video streams, just keep reading.

Configuring your development environment

To follow this guide, you need to have the OpenCV library installed on your system.

Luckily, OpenCV is pip-installable:

$ pip install opencv-contrib-python

If you need help configuring your development environment for OpenCV, we highly recommend that you read our pip install OpenCV guide — it will have you up and running in a matter of minutes.

Having Problems Configuring Your Development Environment?

All that said, are you:

- Short on time?

- Learning on your employer’s administratively locked system?

- Wanting to skip the hassle of fighting with the command line, package managers, and virtual environments?

- Ready to run the code right now on your Windows, macOS, or Linux system?

Then join PyImageSearch University today!

Gain access to Jupyter Notebooks for this tutorial and other PyImageSearch guides that are pre-configured to run on Google Colab’s ecosystem right in your web browser! No installation required.

And best of all, these Jupyter Notebooks will run on Windows, macOS, and Linux!

Project Structure

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

Let’s get started by reviewing the directory structure for our video OCR project:

|-- pyimagesearch | |-- __init__.py | |-- helpers.py | |-- blur_detection | | |-- __init__.py | | |-- blur_detector.py | |-- video_ocr | | |-- __init__.py | | |-- visualization.py |-- output | |-- ocr_video_output.avi |-- video | |-- business_card.mp4 |-- ocr_video.py

Inside the pyimagesearch module, we have two submodules:

blur_detection: The submodule which we’ll be using to aid in detecting blur in video streams.video_ocr: Contains a helper function that writes the output of our video OCR to disk as a separate video file.

The video directory contains business_card.mp4, a video containing a business card that we want to OCR. The output directory contains the output of running the driver script, ocr_video.py, on our input video.

Implementing Our Video Writer Utility

Before we implement our driver script, we first need to implement a basic helper utility that will allow us to write the output of our video OCR script to disk as a separate output video.

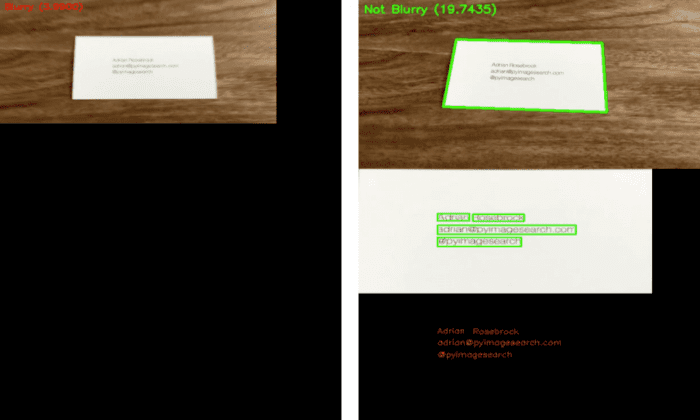

A sample of the output of the visualization script can be seen in Figure 2. Notice that the output has three components:

- The original input frame with the business card detected and blurry/not blurry annotation (top)

- The top-down transform of the business card with the text detected (middle)

- The OCR’d text itself from the top-down transform (bottom)

We’ll be implementing a helper utility function to build such an output visualization in this section.

Please note that this video writer utility has nothing to do with OCR. Instead, it’s just a simple Python class I implemented to write in video I/O. I’m merely reviewing it in this tutorial as a matter of completeness.

If you find yourself struggling to follow along with the class implementation, don’t worry — it will not impact your OCR knowledge. That said, if you would like to learn more about working with video and OpenCV, I recommend you follow our “Working with Video” tutorials.

Let’s get started implementing our video writer utility now. Open the visualization.py file in the video_ocr directory of our project, and let’s get started:

# import the necessary packages import numpy as np class VideoOCROutputBuilder: def __init__(self, frame): # store the input frame dimensions self.maxW = frame.shape[1] self.maxH = frame.shape[0]

We start by defining our VideoOCROutputBuilder class. Our constructor requires only a single parameter, our input frame. We then store the width and height of the frame as maxW and maxH, respectively.

With our constructor taken care of, let’s create the build method responsible for constructing the visualization you saw in Figure 2.

def build(self, frame, card=None, ocr=None): # grab the input frame dimensions and initialize the card # image dimensions along with the OCR image dimensions (frameH, frameW) = frame.shape[:2] (cardW, cardH) = (0, 0) (ocrW, ocrH) = (0, 0) # if the card image is not empty, grab its dimensions if card is not None: (cardH, cardW) = card.shape[:2] # similarly, if the OCR image is not empty, grab its # dimensions if ocr is not None: (ocrH, ocrW) = ocr.shape[:2]

The build method accepts three arguments, one of which is required (the other two are optional):

frame: The input frame from the videocard: The business card after a top-down perspective transform has been applied, and the text on the card detectedocr: The OCR’d text itself

Line 13 grabs the spatial dimensions of the input frame while Lines 14 and 15 initialize the card and ocr images’ spatial dimensions.

Since both card and ocr could be None, we don’t know if they are valid images. If they are, Lines 18-24 makes this check, and if it passes, grabs the width and height of the card and ocr.

We can now start constructing our output visualization:

# compute the spatial dimensions of the output frame outputW = max([frameW, cardW, ocrW]) outputH = frameH + cardH + ocrH # update the max output spatial dimensions found thus far self.maxW = max(self.maxW, outputW) self.maxH = max(self.maxH, outputH) # allocate memory of the output image using our maximum # spatial dimensions output = np.zeros((self.maxH, self.maxW, 3), dtype="uint8") # set the frame in the output image output[0:frameH, 0:frameW] = frame

Line 27 computes the maximum width of the output visualization by finding the max height across the frame, card, and ocr. Line 28 determines the height of the visualization by adding all three heights together (we do this addition operation because these images need to be stacked on top of the other).

Lines 31 and 32 update our maxW and maxH bookkeeping variables with the largest width and height values we’ve found thus far.

Given our newly updated maxW and maxH, Line 36 allocates memory for our output image using the maximum spatial dimensions we found thus far.

With the output image initialized, we store the frame at the top of the output (Line 39).

Our next code block handles adding the card and the ocr images to the output frame:

# if the card is not empty, add it to the output image if card is not None: output[frameH:frameH + cardH, 0:cardW] = card # if the OCR result is not empty, add it to the output image if ocr is not None: output[ frameH + cardH:frameH + cardH + ocrH, 0:ocrW] = ocr # return the output visualization image return output

Lines 42 and 43 verify that a valid card image has been passed into the function, and if so, we add it to the output image. Lines 46-49 do the same, only for the ocr image.

Finally, we return the output visualization to the calling function.

Congrats on implementing our VideoOCROutputBuilder class! Let’s put it to work in the next section!

Implementing Our Real-Time Video OCR Script

We are now ready to implement our ocr_video.py script. Let’s get to work:

# import the necessary packages from pyimagesearch.video_ocr import VideoOCROutputBuilder from pyimagesearch.blur_detection import detect_blur_fft from pyimagesearch.helpers import cleanup_text from imutils.video import VideoStream from imutils.perspective import four_point_transform from pytesseract import Output import pytesseract import numpy as np import argparse import imutils import time import cv2

We start on Lines 2-13, importing our required Python packages. The notable imports include:

VideoOCROutputBuilder: Our visualization builderdetect_blur_fft: Our FFT blur detectorcleanup_text: Used to clean up OCR’d text, stripping out non-ASCII characters, such that we can draw the OCR’d text on the output image using OpenCV’scv2.putTextfunctionfour_point_transform: Applies a perspective transform such that we can obtain a top-down/bird’s-eye view of the business card we’re OCR’ingpytesseract: Provides an interface to the Tesseract OCR engine

With our imports taken care of, let’s move on to our command line arguments:

# construct the argument parser and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--input", type=str,

help="path to optional input video (webcam will be used otherwise)")

ap.add_argument("-o", "--output", type=str,

help="path to optional output video")

ap.add_argument("-c", "--min-conf", type=int, default=50,

help="minimum confidence value to filter weak text detection")

args = vars(ap.parse_args())

Our script provides three command line arguments:

--input: Path to an optional input video file on disk. If no video file is provided, we’ll use our webcam.--output: Path to an optional output video file that we’ll generate.--min-conf: Minimum confidence value used to filter out weak text detections.

Now we can move on to our initializations:

# initialize our video OCR output builder used to easily visualize # output to our screen outputBuilder = None # initialize our output video writer along with the dimensions of the # output frame writer = None outputW = None outputH = None

Line 27 initializes our outputBuilder. This object will be instantiated in the main body of our while loop that accesses frames from our video stream (which we’ll cover later in this tutorial).

We then initialize the output video writer and the spatial dimensions of the output video on Lines 31-33.

Let’s move on to accessing our video stream:

# create a named window for our output OCR visualization (a named

# window is required here so that we can automatically position it

# on our screen)

cv2.namedWindow("Output")

# initialize a Boolean used to indicate if either a webcam or input

# video is being used

webcam = not args.get("input", False)

# if a video path was not supplied, grab a reference to the webcam

if webcam:

print("[INFO] starting video stream...")

vs = VideoStream(src=0).start()

time.sleep(2.0)

# otherwise, grab a reference to the video file

else:

print("[INFO] opening video file...")

vs = cv2.VideoCapture(args["input"])

Line 38 creates a named window called Output for our output visualization. We explicitly created a named window here so we can use OpenCV’s cv2.moveWindow function to move the window on our screen. We need to perform this moving operation because the size of the output window is dynamic — it will grow in size as our output grows and shrinks.

Line 42 determines if we are using a webcam as video input or not. If so, Lines 45-48 access our webcam video stream; otherwise, Lines 51-53 grab a pointer to the video residing on disk.

With access to our video stream, it’s now time to start looping over frames:

# loop over frames from the video stream while True: # grab the next frame and handle if we are reading from either # a webcam or a video file orig = vs.read() orig = orig if webcam else orig[1] # if we are viewing a video and we did not grab a frame then we # have reached the end of the video if not webcam and orig is None: break # resize the frame and compute the ratio of the *new* width to # the *old* width frame = imutils.resize(orig, width=600) ratio = orig.shape[1] / float(frame.shape[1]) # if our video OCR output builder is None, initialize it if outputBuilder is None: outputBuilder = VideoOCROutputBuilder(frame)

Lines 59 and 60 read the original (orig) frame from the video stream. If the webcam variable is set and the orig frame is None, we have reached the end of the video file, so we break from the loop.

Otherwise, Lines 69 and 70 resize the frame to have a width of 700 pixels (such that it’s easier and faster to process) and then compute the ratio of the new width to the old width. We’ll need this ratio when applying the perspective transform to the original high-resolution frame later in this loop.

Lines 73 and 74 initialize our VideoOCROutputBuilder using the resized frame.

Next comes a few more initializations, followed by blur detection:

# initialize our card and OCR output ROIs

card = None

ocr = None

# convert the frame to grayscale and detect if the frame is

# considered blurry or not

gray = cv2.cvtColor(frame, cv2.COLOR_BGR2GRAY)

(mean, blurry) = detect_blur_fft(gray, thresh=15)

# draw whether or not the frame is blurry

color = (0, 0, 255) if blurry else (0, 255, 0)

text = "Blurry ({:.4f})" if blurry else "Not Blurry ({:.4f})"

text = text.format(mean)

cv2.putText(frame, text, (10, 25), cv2.FONT_HERSHEY_SIMPLEX,

0.7, color, 2)

Lines 77 and 78 initialize our card and ocr ROIs. The card ROI will contain the top-down transform of the business card (if the business card is found in the current frame), while ocr will contain the OCR’d text itself.

We then perform text/document blur detection on Lines 82 and 83. We first convert the frame to grayscale and then apply our detect_blur_fft function.

Lines 86-90 draw on the frame, indicating whether or not the current frame is blurry.

Let’s continue with our video OCR pipeline:

# only continue to process the frame for OCR if the image is # *not* blurry if not blurry: # blur the grayscale image slightly and then perform edge # detection blurred = cv2.GaussianBlur(gray, (5, 5,), 0) edged = cv2.Canny(blurred, 75, 200) # find contours in the edge map and sort them by size in # descending order, keeping only the largest ones cnts = cv2.findContours(edged.copy(), cv2.RETR_EXTERNAL, cv2.CHAIN_APPROX_SIMPLE) cnts = imutils.grab_contours(cnts) cnts = sorted(cnts, key=cv2.contourArea, reverse=True)[:5] # initialize a contour that corresponds to the business card # outline cardCnt = None # loop over the contours for c in cnts: # approximate the contour peri = cv2.arcLength(c, True) approx = cv2.approxPolyDP(c, 0.02 * peri, True) # if our approximated contour has four points, then we # can assume we have found the outline of the business # card if len(approx) == 4: cardCnt = approx break

Before continuing, we check to verify that the frame is not blurry. Provided the check passes, we start finding the business card in the input frame by smoothing the frame with a Gaussian kernel and then applying edge detection (Lines 97 and 98).

We then apply contour detection to the edge map and sort the contours by area, from the largest to smallest (Lines 102-105). Our assumption here is that the business card will be the largest ROI in the input frame that also has four vertices.

To determine if we’ve found the business card or not, we loop over the largest contours on Line 112. We then apply contour approximation (Lines 114 and 115) and check if the approximated contour has four points.

Provided the contour has four points, we assume we’ve found our card contour, so we store the contour variable (cardCnt) and then break from the loop (Lines 120-122).

If we found our business card contour, we now attempt to OCR it:

# ensure that the business card contour was found if cardCnt is not None: # draw the outline of the business card on the frame so # we visually verify that the card was detected correctly cv2.drawContours(frame, [cardCnt], -1, (0, 255, 0), 3) # apply a four-point perspective transform to the # *original* frame to obtain a top-down bird's-eye # view of the business card card = four_point_transform(orig, cardCnt.reshape(4, 2) * ratio) # allocate memory for our output OCR visualization ocr = np.zeros(card.shape, dtype="uint8") # swap channel ordering for the business card and OCR it rgb = cv2.cvtColor(card, cv2.COLOR_BGR2RGB) results = pytesseract.image_to_data(rgb, output_type=Output.DICT)

Line 125 verifies that we have indeed found our business card contour. We then draw the card contour on our frame via OpenCV’s cv2.drawContours function.

Next, we apply a perspective transform to the original, high-resolution image (such that we can better OCR it) by using our four_point_transform function (Lines 133 and 134). We also allocate memory for our output ocr visualization, using the same spatial dimensions of the card after applying the top-down transform (Line 137).

Lines 140-142 then apply text detection and OCR to the business card.

The next step is to annotate the output ocr visualization with the OCR’d text itself:

# loop over each of the individual text localizations for i in range(0, len(results["text"])): # extract the bounding box coordinates of the text # region from the current result x = results["left"][i] y = results["top"][i] w = results["width"][i] h = results["height"][i] # extract the OCR text itself along with the # confidence of the text localization text = results["text"][i] conf = int(results["conf"][i]) # filter out weak confidence text localizations if conf > args["min_conf"]: # process the text by stripping out non-ASCII # characters text = cleanup_text(text) # if the cleaned up text is not empty, draw a # bounding box around the text along with the # text itself if len(text) > 0: cv2.rectangle(card, (x, y), (x + w, y + h), (0, 255, 0), 2) cv2.putText(ocr, text, (x, y - 10), cv2.FONT_HERSHEY_SIMPLEX, 0.5, (0, 0, 255), 1)

Line 145 loops over all text detections. We then proceed to:

- Grab the bounding box coordinates of the text ROI (Lines 148-151)

- Extract the OCR’d text and its corresponding confidence/probability (Lines 155 and 156)

- Verify that the text detection has sufficient confidence, followed by stripping out non-ASCII characters from the text (Lines 159-162)

- Draw the OCR’d text on the

ocrvisualization (Lines 167-172)

The rest of the code blocks in this example focus more on bookkeeping variables and output:

# build our final video OCR output visualization

output = outputBuilder.build(frame, card, ocr)

# check if the video writer is None *and* an output video file

# path was supplied

if args["output"] is not None and writer is None:

# grab the output frame dimensions and initialize our video

# writer

(outputH, outputW) = output.shape[:2]

fourcc = cv2.VideoWriter_fourcc(*"MJPG")

writer = cv2.VideoWriter(args["output"], fourcc, 27,

(outputW, outputH), True)

# if the writer is not None, we need to write the output video

# OCR visualization to disk

if writer is not None:

# force resize the video OCR visualization to match the

# dimensions of the output video

outputFrame = cv2.resize(output, (outputW, outputH))

writer.write(outputFrame)

# show the output video OCR visualization

cv2.imshow("Output", output)

cv2.moveWindow("Output", 0, 0)

key = cv2.waitKey(1) & 0xFF

# if the 'q' key was pressed, break from the loop

if key == ord("q"):

break

Line 175 creates our output frame using the .build method our VideoOCROutputBuilder class.

We then check to see if an --output video file path is supplied, and if so, instantiate our cv2.VideoWriter so we can write the output frame visualizations to disk (Lines 179-185).

Similarly, if the writer object has been instantiated, we then write the output frame to disk (Lines 189-193).

Lines 196-202 display the output frame to our screen:

Our final code block releases video pointers:

# if we are using a webcam, stop the camera video stream if webcam: vs.stop() # otherwise, release the video file pointer else: vs.release() # close any open windows cv2.destroyAllWindows()

Taken as a whole, this may seem like a complicated script. But keep in mind that we just implemented an entire video OCR pipeline in under 225 lines of code (including comments). That’s not much code when you think about it — and it’s all made possible by using OpenCV and Tesseract!

Real-Time Video OCR Results

We are now ready to put our video OCR script to the test! Open a terminal and execute the following command:

$ python ocr_video.py --input video/business_card.mp4 --output output/ocr_video_output.avi [INFO] opening video file...

Figure 3 displays the screen captures from our ocr_video_output.avi file in the output directory.

Notice on the left that our script has correctly detected a blurry frame and did not OCR it. If we had attempted to OCR this frame, the results would have been nonsensical, confusing the end-user.

Instead, we wait for a higher-quality frame (right) and then OCR it. As you can see, by waiting for the higher-quality frame, we were able to correctly OCR the business card.

If you ever need to apply OCR to video streams, I strongly recommend that you implement some type of low-quality versus high-quality frame detector. Do not attempt to OCR every single frame of the video stream unless you are 100% confident that the video was captured under ideal, controlled conditions and that every frame is high-quality.

What's next? We recommend PyImageSearch University.

86+ total classes • 115+ hours hours of on-demand code walkthrough videos • Last updated: February 2026

★★★★★ 4.84 (128 Ratings) • 16,000+ Students Enrolled

I strongly believe that if you had the right teacher you could master computer vision and deep learning.

Do you think learning computer vision and deep learning has to be time-consuming, overwhelming, and complicated? Or has to involve complex mathematics and equations? Or requires a degree in computer science?

That’s not the case.

All you need to master computer vision and deep learning is for someone to explain things to you in simple, intuitive terms. And that’s exactly what I do. My mission is to change education and how complex Artificial Intelligence topics are taught.

If you're serious about learning computer vision, your next stop should be PyImageSearch University, the most comprehensive computer vision, deep learning, and OpenCV course online today. Here you’ll learn how to successfully and confidently apply computer vision to your work, research, and projects. Join me in computer vision mastery.

Inside PyImageSearch University you'll find:

- ✓ 86+ courses on essential computer vision, deep learning, and OpenCV topics

- ✓ 86 Certificates of Completion

- ✓ 115+ hours hours of on-demand video

- ✓ Brand new courses released regularly, ensuring you can keep up with state-of-the-art techniques

- ✓ Pre-configured Jupyter Notebooks in Google Colab

- ✓ Run all code examples in your web browser — works on Windows, macOS, and Linux (no dev environment configuration required!)

- ✓ Access to centralized code repos for all 540+ tutorials on PyImageSearch

- ✓ Easy one-click downloads for code, datasets, pre-trained models, etc.

- ✓ Access on mobile, laptop, desktop, etc.

Summary

In this tutorial, you learned how to OCR video streams. First, however, we need to detect blurry, low-quality frames to OCR the video streams.

Videos naturally have low-quality frames due to rapid changes in lighting conditions, camera lens autofocusing, and motion blur. Instead of trying to OCR these low-quality frames, which would ultimately lead to low OCR accuracy (or worse, totally nonsensical results), we instead need to detect these low-quality frames and discard them.

One easy way to detect low-quality frames is to use blur detection. Therefore, we utilized our FFT blur detector to work with our video stream. The result is an OCR pipeline capable of operating on video streams while still maintaining high accuracy.

I hope you enjoyed this tutorial! And I hope that you can apply this method to your projects.

Citation Information

Rosebrock, A. “OCR’ing Video Streams,” PyImageSearch, D. Chakraborty, P. Chugh, A. R. Gosthipaty, S. Huot, K. Kidriavsteva, R. Raha, and A. Thanki, eds., 2022, https://pyimg.co/k43vd

@incollection{Rosebrock_2022_OCR_Video_Streams,

author = {Adrian Rosebrock},

title = {{OCR}’ing Video Streams},

booktitle = {PyImageSearch},

editor = {Devjyoti Chakraborty and Puneet Chugh and Aritra Roy Gosthipaty and Susan Huot and Kseniia Kidriavsteva and Ritwik Raha and Abhishek Thanki},

year = {2022},

note = {https://pyimg.co/k43vd},

}

Unleash the potential of computer vision with Roboflow - Free!

- Step into the realm of the future by signing up or logging into your Roboflow account. Unlock a wealth of innovative dataset libraries and revolutionize your computer vision operations.

- Jumpstart your journey by choosing from our broad array of datasets, or benefit from PyimageSearch’s comprehensive library, crafted to cater to a wide range of requirements.

- Transfer your data to Roboflow in any of the 40+ compatible formats. Leverage cutting-edge model architectures for training, and deploy seamlessly across diverse platforms, including API, NVIDIA, browser, iOS, and beyond. Integrate our platform effortlessly with your applications or your favorite third-party tools.

- Equip yourself with the ability to train a potent computer vision model in a mere afternoon. With a few images, you can import data from any source via API, annotate images using our superior cloud-hosted tool, kickstart model training with a single click, and deploy the model via a hosted API endpoint. Tailor your process by opting for a code-centric approach, leveraging our intuitive, cloud-based UI, or combining both to fit your unique needs.

- Embark on your journey today with absolutely no credit card required. Step into the future with Roboflow.

To download the source code to this post (and be notified when future tutorials are published here on PyImageSearch), simply enter your email address in the form below!

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!

Comment section

Hey, Adrian Rosebrock here, author and creator of PyImageSearch. While I love hearing from readers, a couple years ago I made the tough decision to no longer offer 1:1 help over blog post comments.

At the time I was receiving 200+ emails per day and another 100+ blog post comments. I simply did not have the time to moderate and respond to them all, and the sheer volume of requests was taking a toll on me.

Instead, my goal is to do the most good for the computer vision, deep learning, and OpenCV community at large by focusing my time on authoring high-quality blog posts, tutorials, and books/courses.

If you need help learning computer vision and deep learning, I suggest you refer to my full catalog of books and courses — they have helped tens of thousands of developers, students, and researchers just like yourself learn Computer Vision, Deep Learning, and OpenCV.

Click here to browse my full catalog.