In this tutorial, you will learn how to perform template matching using OpenCV and the cv2.matchTemplate function.

Other than contour filtering and processing, template matching is arguably one of the simplest forms of object detection:

- It’s simple to implement, requiring only 2-3 lines of code

- Template matching is computationally efficient

- It doesn’t require you to perform thresholding, edge detection, etc., to generate a binary image (such as contour detection and processing does)

- And with a basic extension, template matching can detect multiple instances of the same/similar object in an input image (which we’ll cover next week)

Of course, template matching isn’t perfect. Despite all the positives, template matching quickly fails if there are factors of variation in your input images, including changes to rotation, scale, viewing angle, etc.

If your input images contain these types of variations, you should not use template matching — utilize dedicated object detectors including HOG + Linear SVM, Faster R-CNN, SSDs, YOLO, etc.

But in situations where you know the rotation, scale, and viewing angle are constant, template matching can work wonders.

To learn how to perform template matching with OpenCV, just keep reading.

OpenCV Template Matching ( cv2.matchTemplate )

In the first part of this tutorial, we’ll discuss what template matching is and how OpenCV implements template matching via the cv2.matchTemplate function.

From there, we’ll configure our development environment and review our project directory structure.

We’ll then implement template matching with OpenCV, apply it to a few example images, and discuss where it worked well, when it didn’t, and how to improve template matching results.

What is template matching?

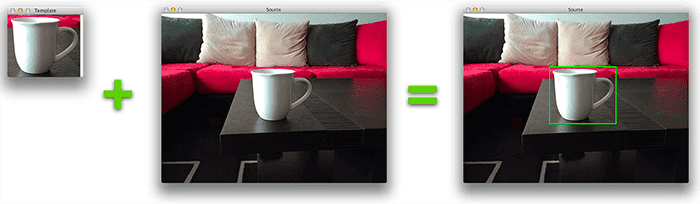

Template matching can be seen as a very basic form of object detection. Using template matching, we can detect objects in an input image using a “template” containing the object we want to detect.

Essentially, what this means is that we require two images to apply template matching:

- Source image: The image in which we expect to find a match for our template.

- Template image: The “object patch” we are searching for in the source image.

To find the template in the source image, we slide the template from left-to-right and top-to-bottom across the source:

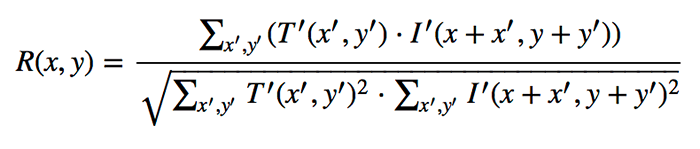

At each (x, y)-location, a metric is calculated to represent how “good” or “bad” the match is. Typically, we use the normalized correlation coefficient to determine how “similar” the pixel intensities of the two patches are.

For the full derivation of the correlation coefficient, including all other template matching methods OpenCV supports, refer to the OpenCV documentation.
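For reference, here is the normalized correlation coefficient (cv2.TM_CCOEFF_NORMED) as I understand it from the OpenCV documentation. I is the source image, T is the template with width w and height h, and every sum runs over the template coordinates x' = 0, ..., w - 1 and y' = 0, ..., h - 1:

```latex
R(x, y) = \frac{\sum_{x', y'} T'(x', y') \, I'(x + x', y + y')}
               {\sqrt{\sum_{x', y'} T'(x', y')^2 \; \sum_{x', y'} I'(x + x', y + y')^2}}

T'(x', y') = T(x', y') - \frac{1}{w h} \sum_{x'', y''} T(x'', y'')

I'(x + x', y + y') = I(x + x', y + y') - \frac{1}{w h} \sum_{x'', y''} I(x + x'', y + y'')
```

Both the template and the image patch are mean-centered before being correlated, and the result is divided by the product of their norms. As a consequence, the score lies between -1 and 1 and is unaffected by a uniform brightness offset in the patch.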

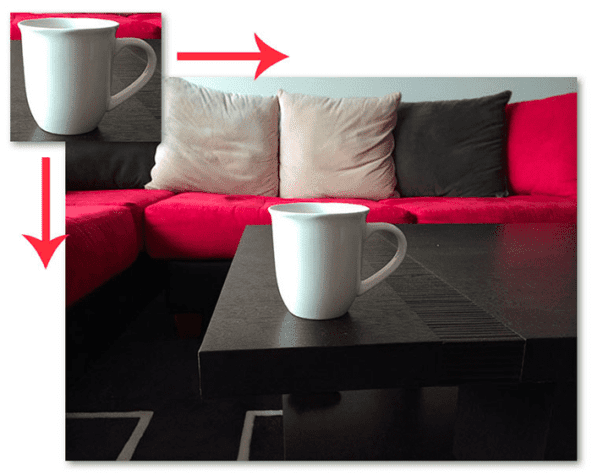

For each location of the template T over the source image I, the computed result metric is stored in our result matrix R. Each (x, y)-coordinate in the source image (at which the full template width and height still fit) has an entry in the result matrix R.

Here, we can visualize our result matrix R overlaid on the original image. Notice how R is not the same size as the source image; it is smaller. This is because the entire template must fit inside the source image for the correlation to be computed. If the template exceeds the source’s boundaries, we do not compute the similarity metric.

Bright locations of the result matrix R indicate the best matches, whereas dark regions indicate there is very little correlation between the source and template images. Notice how the result matrix’s brightest region appears at the coffee mug’s upper-left corner.
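To make the sliding-window idea concrete, here is a minimal NumPy-only sketch of the process described above. It is a deliberately slow teaching version, not how OpenCV implements it. The function name match_template_ccoeff_normed and the synthetic random image are my own illustration, and scoring flat (zero-variance) patches as 0 is an assumption of this sketch:

```python
import numpy as np

def match_template_ccoeff_normed(image, template):
    """Naive sliding-window normalized correlation coefficient."""
    image = image.astype(np.float64)
    template = template.astype(np.float64)
    (iH, iW) = image.shape
    (tH, tW) = template.shape

    # the template must fit entirely inside the image at every location,
    # so the result is smaller than the image
    result = np.zeros((iH - tH + 1, iW - tW + 1), dtype=np.float64)

    # mean-center the template once
    t = template - template.mean()
    tNorm = np.sqrt((t ** 2).sum())

    # slide left-to-right and top-to-bottom
    for y in range(result.shape[0]):
        for x in range(result.shape[1]):
            patch = image[y:y + tH, x:x + tW]
            p = patch - patch.mean()
            denom = tNorm * np.sqrt((p ** 2).sum())
            # flat patches have zero variance; score them as 0 here
            result[y, x] = (t * p).sum() / denom if denom > 0 else 0.0

    return result

# synthetic example: cut the template straight out of a random image
rng = np.random.default_rng(42)
image = rng.integers(0, 256, size=(40, 60)).astype(np.uint8)
template = image[10:18, 25:37].copy()  # 8 rows x 12 columns at (x=25, y=10)

R = match_template_ccoeff_normed(image, template)
(bestY, bestX) = np.unravel_index(np.argmax(R), R.shape)
print(R.shape)                     # (33, 49)
print((int(bestX), int(bestY)))    # (25, 10)
```

The brightest entry of R lands on the upper-left corner of the region the template was cut from, which is the same behavior you see in the coffee mug visualization.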

While template matching is extremely simple and computationally efficient to apply, it has many limitations. If the object’s scale, rotation, or viewing angle varies, template matching will likely fail.

In nearly all cases, you’ll want to ensure that the template is nearly identical to the object you want to detect in the source. Even minor deviations in appearance can dramatically affect template matching results and render them effectively useless.

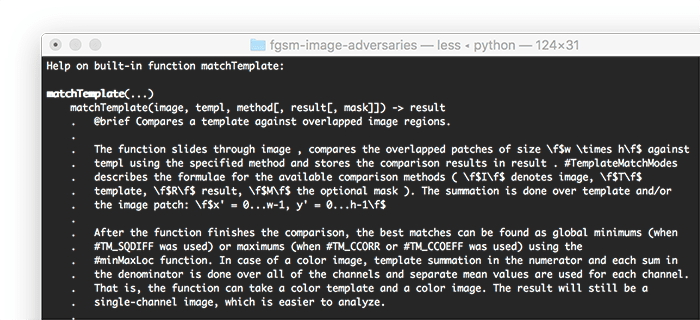

OpenCV’s “cv2.matchTemplate” function

We can apply template matching using OpenCV and the cv2.matchTemplate function:

result = cv2.matchTemplate(image, template, cv2.TM_CCOEFF_NORMED)

Here, you can see that we are providing the cv2.matchTemplate function with three parameters:

- The input image that contains the object we want to detect

- The template of the object (i.e., what we want to detect in the image)

- The template matching method

Here, we are using the normalized correlation coefficient, which is typically the template matching method you’ll want to use, but OpenCV supports other template matching methods as well.

The output result from cv2.matchTemplate is a matrix with spatial dimensions:

- Width: image.shape[1] - template.shape[1] + 1

- Height: image.shape[0] - template.shape[0] + 1
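As a quick worked example of that arithmetic, the small helper below computes the size of the result matrix from the two shapes. The name result_shape is hypothetical (it is not part of OpenCV), and the shapes follow the NumPy convention of (height, width[, channels]):

```python
def result_shape(image_shape, template_shape):
    """Return the (height, width) of the template matching result."""
    (iH, iW) = image_shape[:2]
    (tH, tW) = template_shape[:2]

    # the template has to fit inside the image
    if tH > iH or tW > iW:
        raise ValueError("template must not be larger than the image")

    return (iH - tH + 1, iW - tW + 1)

# a 600x400 (width x height) image and a 100x50 template
print(result_shape((400, 600), (50, 100)))        # (351, 501)

# color images carry a third (channel) dimension, which is ignored
print(result_shape((400, 600, 3), (50, 100, 3)))  # (351, 501)

# a template the same size as the image yields a single score
print(result_shape((400, 600), (400, 600)))       # (1, 1)
```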

We can then find the location in the result that has the maximum correlation coefficient, which corresponds to the most likely region that the template can be found in (which you’ll learn how to do later in this tutorial).

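It’s also worth noting that if you want only part of the template to contribute to the match score (for example, to ignore background pixels around a non-rectangular object), you can supply a mask, like the following:

result = cv2.matchTemplate(image, template, cv2.TM_CCOEFF_NORMED, mask=mask)

The mask must have the same spatial dimensions as the template (keeping the same data type as well is the safest choice across OpenCV versions). It is passed by keyword because the fourth positional argument of cv2.matchTemplate is the optional output array, not the mask. Template pixels where the mask is zero are ignored when computing the score, while pixels where the mask is nonzero (e.g., 255) are included. Older OpenCV releases accept a mask only with the cv2.TM_SQDIFF and cv2.TM_CCORR_NORMED methods. If you instead want to search only a specific region of the input image, crop the image to that region before calling cv2.matchTemplate.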

Configuring your development environment

To follow this guide, you need to have the OpenCV library installed on your system.

Luckily, OpenCV is pip-installable:

$ pip install opencv-contrib-python

If you need help configuring your development environment for OpenCV, I highly recommend that you read my pip install OpenCV guide — it will have you up and running in a matter of minutes.

Project structure

Before we get too far, let’s review our project directory structure.

Start by accessing the “Downloads” section of this tutorial to retrieve the source code and example images.

Your directory should look like the following:

$ tree . --dirsfirst
.
├── images
│   ├── 8_diamonds.png
│   ├── coke_bottle.png
│   ├── coke_bottle_rotated.png
│   ├── coke_logo.png
│   └── diamonds_template.png
└── single_template_matching.py
1 directory, 6 files

We have a single Python script to review today, single_template_matching.py, which will perform template matching with OpenCV.

Inside the images directory, we have five images to which we’ll be applying template matching. We’ll see each of these images later in the tutorial.

Implementing template matching with OpenCV

With our project directory structure reviewed, let’s move on to implementing template matching with OpenCV.

Open the single_template_matching.py file in your directory structure and insert the following code:

# import the necessary packages

import argparse

import cv2

# construct the argument parser and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-i", "--image", type=str, required=True,

help="path to input image where we'll apply template matching")

ap.add_argument("-t", "--template", type=str, required=True,

help="path to template image")

args = vars(ap.parse_args())

On Lines 2 and 3, we import our required Python packages. We only need argparse for command line argument parsing and cv2 for our OpenCV bindings.

From there, we move on to parsing our command line arguments:

- --image: The path to the input image on disk that we’ll be applying template matching to (i.e., the image we want to detect objects in).

- --template: The example template image we want to find instances of in the input image.

Next, let’s prepare our image and template for template matching:

# load the input image and template image from disk, then display

# them on our screen

print("[INFO] loading images...")

image = cv2.imread(args["image"])

template = cv2.imread(args["template"])

cv2.imshow("Image", image)

cv2.imshow("Template", template)

# convert both the image and template to grayscale

imageGray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

templateGray = cv2.cvtColor(template, cv2.COLOR_BGR2GRAY)

We start by loading our image and template, then displaying them on our screen.

Template matching is typically applied to grayscale images, so Lines 22 and 23 convert both the image and the template to grayscale.

Next, all that needs to be done is call cv2.matchTemplate:

# perform template matching

print("[INFO] performing template matching...")

result = cv2.matchTemplate(imageGray, templateGray,

cv2.TM_CCOEFF_NORMED)

(minVal, maxVal, minLoc, maxLoc) = cv2.minMaxLoc(result)

Lines 27 and 28 perform template matching itself via the cv2.matchTemplate function. We pass in three required arguments to this function:

- The input image that we want to find objects in

- The template image of the object we want to detect in the input image

- The template matching method

Typically, the normalized correlation coefficient (cv2.TM_CCOEFF_NORMED) works well in most situations, but you can refer to the OpenCV documentation for more details on other template matching methods.

Once we’ve applied the cv2.matchTemplate, we receive a result matrix with the following spatial dimensions:

- Width: image.shape[1] - template.shape[1] + 1

- Height: image.shape[0] - template.shape[0] + 1

With cv2.TM_CCOEFF_NORMED, the values in the result matrix range from -1 to 1. A large value (closer to 1) indicates a likely template match, while values near 0 or below indicate that a match is unlikely.

To find the location with the largest value, and therefore the most likely match, we make a call to cv2.minMaxLoc (Line 29), passing in the result matrix.

Once we have the (x, y)-coordinates of the location with the largest normalized correlation coefficient (maxLoc), we can extract the coordinates and derive the bounding box coordinates:

# determine the starting and ending (x, y)-coordinates of the

# bounding box

(startX, startY) = maxLoc

endX = startX + template.shape[1]

endY = startY + template.shape[0]

Line 33 extracts the starting (x, y)-coordinates from our maxLoc, derived from calling cv2.minMaxLoc in the previous codeblock.

Using the startX and startY coordinates, we can derive the endX and endY coordinates on Lines 34 and 35 by adding the template width and height to startX and startY, respectively.
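The same arithmetic can be checked with concrete numbers. In the sketch below, bounding_box is a hypothetical helper of my own, and the match location and template shape are made-up values. Keep in mind that maxLoc is ordered (x, y), while a NumPy shape is ordered (height, width[, channels]):

```python
def bounding_box(maxLoc, templateShape):
    """Turn the best-match location into (startX, startY, endX, endY)."""
    (startX, startY) = maxLoc
    (tH, tW) = templateShape[:2]

    # width extends along x, height extends along y
    endX = startX + tW
    endY = startY + tH
    return (startX, startY, endX, endY)

# best match at (x=120, y=45) for a template 200 px wide and 60 px tall
print(bounding_box((120, 45), (60, 200, 3)))  # (120, 45, 320, 105)
```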

The final step is to draw the detected bounding box on the image:

# draw the bounding box on the image

cv2.rectangle(image, (startX, startY), (endX, endY), (255, 0, 0), 3)

# show the output image

cv2.imshow("Output", image)

cv2.waitKey(0)

A call to cv2.rectangle on Line 38 draws the bounding box on the image.

Lines 41 and 42 then show our output image on our screen.

OpenCV template matching results

We are now ready to apply template matching with OpenCV!

Access the “Downloads” section of this tutorial to retrieve the source code and example images.

From there, open a terminal and execute the following command:

$ python single_template_matching.py --image images/coke_bottle.png --template images/coke_logo.png

[INFO] loading images...

[INFO] performing template matching...

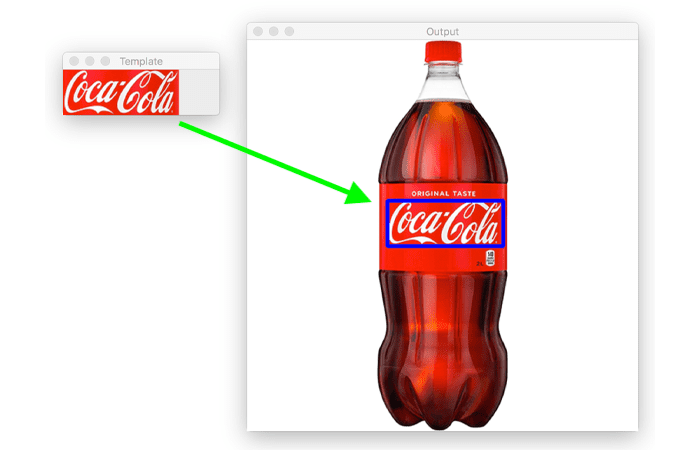

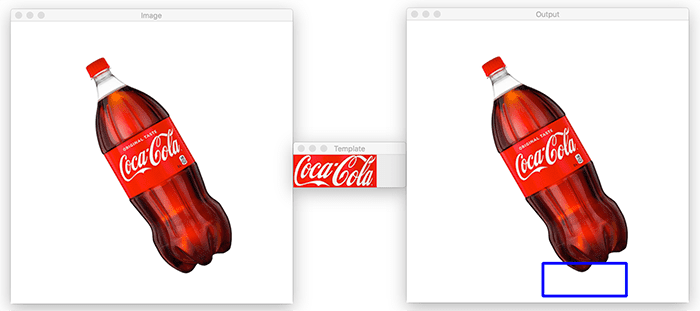

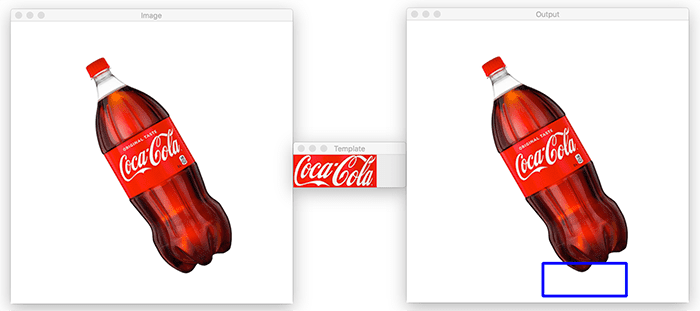

In this example, we have an input image containing a Coca-Cola bottle:

Our goal is to detect the Coke logo in the image:

By applying OpenCV and the cv2.matchTemplate function, we can correctly localize where in the coke_bottle.png image the coke_logo.png image is:

This method works because the Coca-Cola logo in coke_logo.png is the same size (in terms of scale) as the logo in coke_bottle.png. Similarly, the logos are viewed at the same viewing angle and are not rotated.

If the logos differed in scale or the viewing angle was different, the method would fail.

For example, let’s try this example image, but this time I have rotated the Coca-Cola bottle slightly and scaled the bottle down:

$ python single_template_matching.py --image images/coke_bottle_rotated.png --template images/coke_logo.png

[INFO] loading images...

[INFO] performing template matching...

Notice how we have a false-positive detection! We have failed to detect the Coca-Cola logo now that the scale and rotation are different.

The key point here is that template matching is tremendously sensitive to changes in rotation, viewing angle, and scale. When that happens, you may need to apply more advanced object detection techniques.

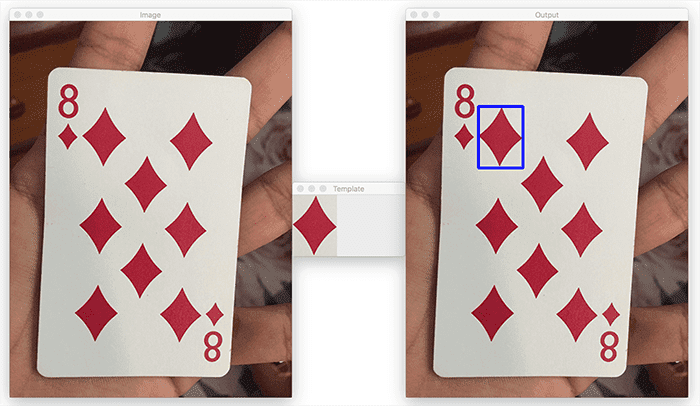

In the following example, we’re working with a deck of cards and are trying to detect the “diamond” symbols on the eight of diamonds playing card:

$ python single_template_matching.py --image images/8_diamonds.png --template images/diamonds_template.png

[INFO] loading images...

[INFO] performing template matching...

On the left, we have our diamonds_template.png image. We use OpenCV and the cv2.matchTemplate function to find all the diamond symbols (right)…

…but what happened here?

Why haven’t all the diamond symbols been detected?

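The answer is that the cv2.matchTemplate function, by itself, cannot detect multiple objects! It only produces the result matrix of scores, and our script keeps just the single location with the largest score (via cv2.minMaxLoc), so only one diamond is reported.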

There is a solution, though — and I’ll be covering multi-template matching with OpenCV in next week’s tutorial.
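As a rough preview of the idea, and not necessarily the approach a full multi-template tutorial would take, one common extension is to keep every location in the result matrix whose score clears a threshold. The sketch below uses NumPy only, with a tiny hand-written result matrix. The helper name locations_above and the 0.8 threshold are assumptions for illustration:

```python
import numpy as np

def locations_above(result, threshold=0.8):
    """Return all (x, y) locations whose score is >= threshold."""
    # np.where yields row (y) indexes first, then column (x) indexes
    (ys, xs) = np.where(result >= threshold)
    return [(int(x), int(y)) for (x, y) in zip(xs, ys)]

# a made-up result matrix with three strong responses
result = np.array([
    [0.10, 0.20, 0.95, 0.15],
    [0.05, 0.30, 0.25, 0.10],
    [0.91, 0.12, 0.08, 0.88],
], dtype=np.float32)

print(locations_above(result))  # [(2, 0), (0, 2), (3, 2)]
```

On real images, the pixels surrounding a true match usually clear the threshold as well, so you would typically need to merge overlapping detections (for example, with non-maxima suppression) before drawing boxes.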

A note on false-positive detections with template matching

You’ll note that in our rotated Coca-Cola logo example, we failed to detect the Coke logo; however, our code still “reported” that the logo was found.

Keep in mind that the cv2.matchTemplate function truly has no idea if the object was correctly found or not — it’s simply sliding the template image across the input image, computing a normalized correlation score, and then returning the location where the score is the largest.

Template matching is an example of a “dumb algorithm.” There’s no machine learning going on, and it has no idea what is in the input image.

To filter out false-positive detections, you should grab the maxVal and use an if statement to filter out scores that are below a certain threshold.

Credits and References

I would like to thank TheAILearner for their excellent article on template matching — I cannot take credit for the idea of using playing cards to demonstrate template matching. That was their idea, and it was an excellent one at that. Credits to them for coming up with that example, which I shamelessly used here, thank you.

Additionally, the eight of diamonds image was obtained from the Reddit post by u/fireball_73.

Summary

In this tutorial, you learned how to perform template matching using OpenCV and the cv2.matchTemplate function.

Template matching is a basic form of object detection. It’s very fast and efficient, but the downside is that it fails when the rotation, scale, or viewing angle of an object changes — when that happens, you need a more advanced object detection technique.

Nevertheless, suppose you can control the scale or normalize the scale of objects in the environment where you are capturing photos. In that case, you could potentially get away with template matching and avoid the tedious task of labeling your data, training an object detector, and tuning its hyperparameters.
