In this tutorial, you will learn how to compare two images for similarity (and whether or not they belong to the same or different classes) using siamese networks and the Keras/TensorFlow deep learning libraries.

This blog post is part three in our three-part series on the basics of siamese networks:

- Part #1: Building image pairs for siamese networks with Python (post from two weeks ago)

- Part #2: Training siamese networks with Keras, TensorFlow, and Deep Learning (last week’s tutorial)

- Part #3: Comparing images using siamese networks (this tutorial)

Last week we learned how to train our siamese network. Our model performed well on our test set, correctly verifying whether two images belonged to the same or different classes. After training, we serialized the model to disk.

Soon after last week’s tutorial was published, I received an email from PyImageSearch reader Scott asking:

“Hi Adrian — thanks for these guides on siamese networks. I’ve heard them mentioned in deep learning spaces but honestly was never really sure how they worked or what they did. This series really helped clear my doubts and have even helped me in one of my work projects.

My question is:

How do we take our trained siamese network and make predictions on it from images outside of the training and testing set?

Is that possible?”

You bet it is, Scott. And that’s exactly what we are covering here today.

To learn how to compare images for similarity using siamese networks, just keep reading.

Comparing images for similarity using siamese networks, Keras, and TensorFlow

In the first part of this tutorial, we’ll discuss the basic process of how a trained siamese network can be used to predict the similarity between the two images in a pair and, more specifically, whether the two input images belong to the same or different classes.

You’ll then learn how to configure your development environment for siamese networks using Keras and TensorFlow.

Once your development environment is configured, we’ll review our project directory structure and then implement a Python script to compare images for similarity using our siamese network.

We’ll wrap up this tutorial with a discussion of our results.

How can siamese networks predict similarity between image pairs?

In last week’s tutorial you learned how to train a siamese network to verify whether the two digits in a pair belonged to the same or different classes. We then serialized our siamese model to disk after training.

The question then becomes:

“How can we use our trained siamese network to predict the similarity between two images?”

The answer is that we utilize the final layer in our siamese network implementation, which is a sigmoid activation function.

The sigmoid activation function has an output in the range [0, 1], meaning that when we present an image pair to our siamese network, the model will output a value >= 0 and <= 1.

A value of 0 means that the two images are completely and totally dissimilar, while a value of 1 implies that the images are very similar.
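To make the output range concrete, here is a minimal NumPy sketch of the sigmoid function itself. It is purely illustrative and is not taken from the project code; the function name sigmoid and the sample inputs are my own assumptions.

```python
import numpy as np

def sigmoid(x):
    # squash any real-valued input into the range between 0 and 1
    return 1.0 / (1.0 + np.exp(-x))

# large negative inputs approach 0, large positive inputs approach 1,
# and an input of exactly 0 lands on 0.5
logits = np.array([-6.0, 0.0, 6.0])
scores = sigmoid(logits)
print(scores)
```

In the siamese network, the value fed into the sigmoid comes from the layers before it, so the final score can be read directly as a similarity between 0 and 1.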

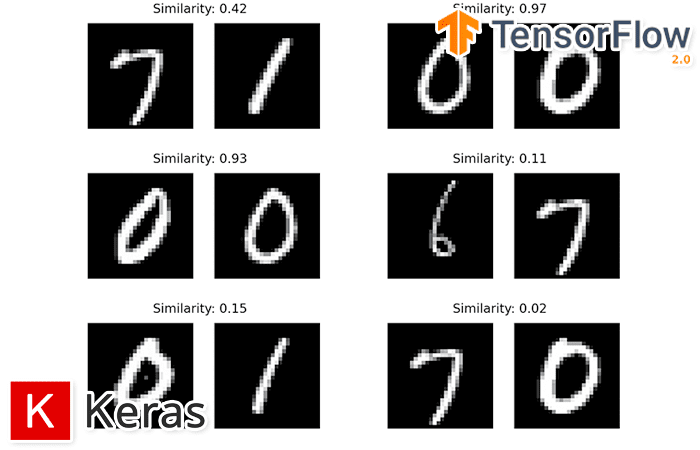

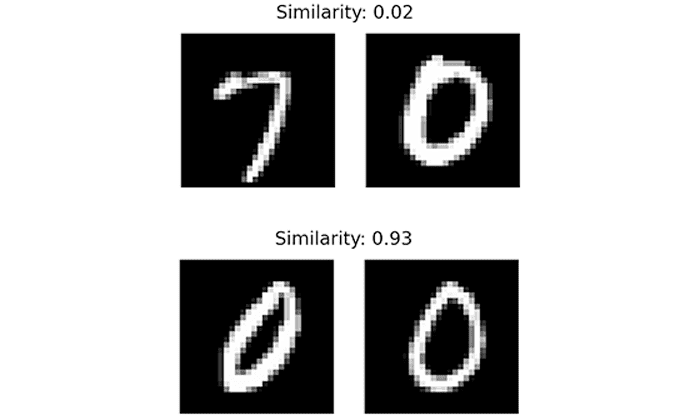

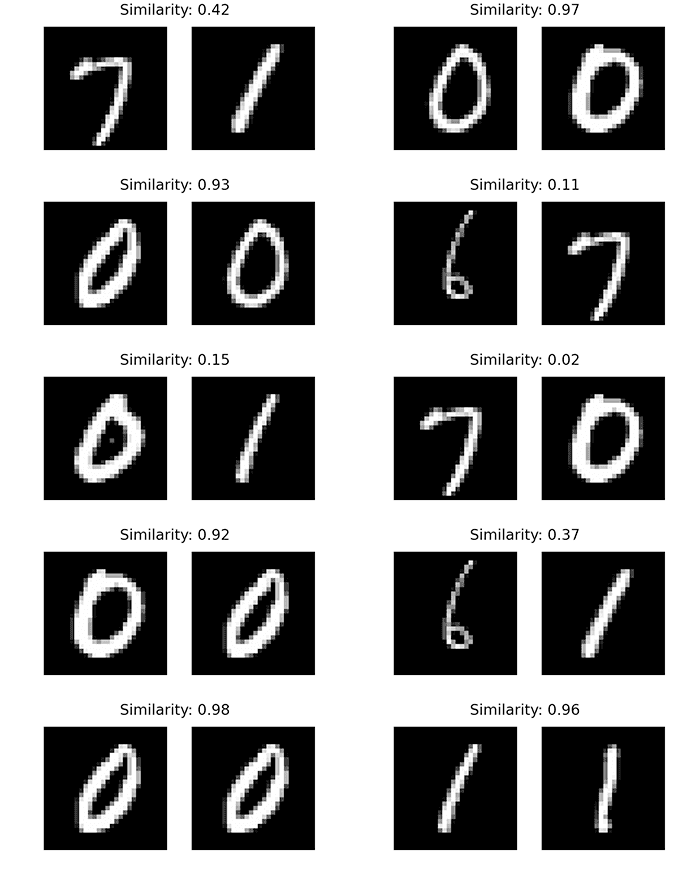

An example of such a similarity can be seen in Figure 1 at the top of this section:

- Comparing a “7” to a “0” has a low similarity score of only 0.02.

- However, comparing a “0” to another “0” has a very high similarity score of 0.93.

A good rule of thumb is to use a similarity cutoff value of 0.5 (50%) as your threshold:

- If the two images in a pair have a similarity of <= 0.5, then they belong to different classes.

- Conversely, if pairs have a predicted similarity of > 0.5, then they belong to the same class.

In this manner you can use siamese networks to (1) compare images for similarity and (2) determine whether they belong to the same class or not.
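The rule of thumb above can be sketched as a small helper. The function name same_class and its threshold parameter are hypothetical and only illustrate the comparison; the two example scores are the ones quoted for Figure 1.

```python
def same_class(similarity, threshold=0.5):
    # scores strictly above the threshold are treated as "same class";
    # scores at or below it are treated as "different classes"
    return similarity > threshold

# "0" vs. "0" scored 0.93, while "7" vs. "0" scored 0.02
zero_vs_zero = same_class(0.93)
seven_vs_zero = same_class(0.02)
print(zero_vs_zero, seven_vs_zero)
```

Keeping the threshold as a parameter makes it easy to experiment with a stricter or looser cutoff on your own data.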

Practical use cases for siamese networks include:

- Face recognition: Given two separate images containing a face, determine if it’s the same person in both photos.

- Signature verification: When presented with two signatures, determine whether one is a forgery or not.

- Prescription pill identification: Given two prescription pills, determine whether they are the same medication or different medications.

Configuring your development environment

This series of tutorials on siamese networks utilizes Keras and TensorFlow. If you intend to follow this tutorial or the previous two parts in this series, I suggest you take the time now to configure your deep learning development environment.

You can utilize either of these two guides to install TensorFlow and Keras on your system:

Either tutorial will help you configure your system with all the necessary software for this blog post in a convenient Python virtual environment.

Project structure

Before we get too far into this tutorial, let’s first take a second and review our project directory structure.

Start by making sure you use the “Downloads” section of this tutorial to download the source code and example images.

From there, let’s take a look at the project:

    $ tree . --dirsfirst
    .
    ├── examples
    │   ├── image_01.png
    ...
    │   └── image_13.png
    ├── output
    │   ├── siamese_model
    │   │   ├── variables
    │   │   │   ├── variables.data-00000-of-00001
    │   │   │   └── variables.index
    │   │   └── saved_model.pb
    │   └── plot.png
    ├── pyimagesearch
    │   ├── config.py
    │   ├── siamese_network.py
    │   └── utils.py
    ├── test_siamese_network.py
    └── train_siamese_network.py
    4 directories, 21 files

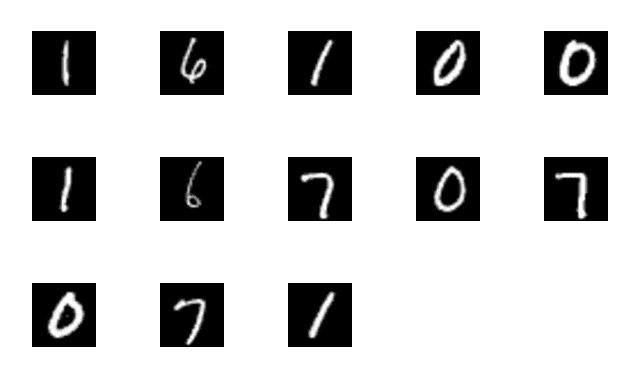

Inside the examples directory we have a number of example digits:

We’ll be sampling pairs of these digits and then comparing them for similarity using our siamese network.

The output directory contains the training history plot (plot.png) and our trained/serialized siamese network model (siamese_model/). Both of these files were generated in last week’s tutorial on training your own custom siamese network models — make sure you read that tutorial before you continue, as it’s required reading for today!

The pyimagesearch module contains three Python files:

- config.py

- siamese_network.py

- utils.py

The train_siamese_network.py script:

- Imports the configuration, siamese network implementation, and utility functions

- Loads the MNIST dataset from disk

- Generates image pairs

- Creates our training/testing dataset split

- Trains our siamese network

- Serializes the trained siamese network to disk

I will not be covering these four scripts today, as I have already covered them in last week’s tutorial on how to train siamese networks. I’ve included these files in the project directory structure for today’s tutorial as a matter of completeness, but again, for a full review of these files, what they do, and how they work, refer back to last week’s tutorial.

Finally, we have the focus of today’s tutorial, test_siamese_network.py.

This script will:

- Load our trained siamese network model from disk

- Grab the paths to the sample digit images in the examples directory

- Randomly construct pairs of images from these samples

- Compare the pairs for similarity using the siamese network

Let’s get to work!

Implementing our siamese network image similarity script

We are now ready to implement siamese networks for image similarity using Keras and TensorFlow.

Start by making sure you use the “Downloads” section of this tutorial to download the source code, example images, and pre-trained siamese network model.

From there, open up test_siamese_network.py, and follow along:

    # import the necessary packages
    from pyimagesearch import config
    from pyimagesearch import utils
    from tensorflow.keras.models import load_model
    from imutils.paths import list_images
    import matplotlib.pyplot as plt
    import numpy as np
    import argparse
    import cv2

We start off by importing our required Python packages (Lines 2-9). Notable imports include:

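- config: Contains our configuration, including the path to the trained siamese network model on disk (config.MODEL_PATH)

- utils: Contains the euclidean_distance function utilized in our Lambda layer of the siamese network; we need to import this package to suppress any UserWarnings about loading Lambda layers from disk

- load_model: The Keras/TensorFlow function used to load our trained siamese network from disk

- list_images: Grabs the paths to all images in our examples directory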
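For readers who have not seen the previous tutorial, the following NumPy sketch shows what a Euclidean distance between two batches of feature vectors computes. It is an illustration only: the name euclidean_distance_np, the eps parameter, and the sample vectors are assumptions of mine, and the project's own euclidean_distance in utils operates on tensors inside the network rather than on NumPy arrays.

```python
import numpy as np

def euclidean_distance_np(featsA, featsB, eps=1e-7):
    # sum of squared differences per row (one row per image embedding)
    sum_squared = np.sum(np.square(featsA - featsB), axis=1, keepdims=True)
    # clamp to a small epsilon before the square root for numerical stability
    return np.sqrt(np.maximum(sum_squared, eps))

# first row: vectors that are 5 units apart; second row: identical vectors
a = np.array([[0.0, 0.0], [1.0, 1.0]])
b = np.array([[3.0, 4.0], [1.0, 1.0]])
dists = euclidean_distance_np(a, b)
print(dists)
```

A small distance means the two embeddings are close together, which the layers after the distance computation turn into a high similarity score.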

Let’s move on to parsing our command line arguments:

    # construct the argument parser and parse the arguments
    ap = argparse.ArgumentParser()
    ap.add_argument("-i", "--input", required=True,
        help="path to input directory of testing images")
    args = vars(ap.parse_args())

We only need a single argument here, --input, which is the path to our directory on disk containing the images we want to compare for similarity. When running this script, we’ll supply the path to the examples directory in our project.
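If you want to see what the parsed arguments look like without opening a terminal, the sketch below passes an explicit argument list to parse_args. The list stands in for what the shell would supply, and the value examples is simply the directory name from the project structure.

```python
import argparse

ap = argparse.ArgumentParser()
ap.add_argument("-i", "--input", required=True,
    help="path to input directory of testing images")

# an explicit list stands in for the arguments typed on the command line
args = vars(ap.parse_args(["--input", "examples"]))
print(args["input"])
```

Because vars converts the parsed namespace into a dictionary, the rest of the script can read the path with args["input"].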

With our command line arguments parsed, we can now grab all testImagePaths in our --input directory:

    # grab the test dataset image paths and then randomly generate a
    # total of 10 image pairs
    print("[INFO] loading test dataset...")
    testImagePaths = list(list_images(args["input"]))
    np.random.seed(42)
    pairs = np.random.choice(testImagePaths, size=(10, 2))

    # load the model from disk
    print("[INFO] loading siamese model...")
    model = load_model(config.MODEL_PATH)

Line 20 grabs the paths to all of our example images containing digits we want to compare for similarity. Line 22 randomly generates a total of 10 pairs of images from these testImagePaths.
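One detail worth knowing is that np.random.choice samples with replacement by default, so the same image can land in both slots of a pair. The sketch below demonstrates the sampling on a list of file names that stand in for the contents of the examples directory; the names and the num_identical counter are illustrative assumptions.

```python
import numpy as np

# stand-in file names mirroring image_01.png through image_13.png
paths = ["image_{:02d}.png".format(i) for i in range(1, 14)]

# fix the seed so the same pairs are drawn on every run
np.random.seed(42)
pairs = np.random.choice(paths, size=(10, 2))

# count the pairs in which the same file was drawn for both slots
num_identical = sum(1 for (a, b) in pairs if a == b)
print(pairs.shape, num_identical)
```

The result is a 10 x 2 array, one row per pair, which is why the script can later unpack each row into pathA and pathB.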

Line 26 loads our siamese network from disk using the load_model function.

With the siamese network loaded from disk, we can now compare images for similarity:

    # loop over all image pairs
    for (i, (pathA, pathB)) in enumerate(pairs):
        # load both the images and convert them to grayscale
        imageA = cv2.imread(pathA, 0)
        imageB = cv2.imread(pathB, 0)

        # create a copy of both the images for visualization purpose
        origA = imageA.copy()
        origB = imageB.copy()

        # add a channel dimension to both the images
        imageA = np.expand_dims(imageA, axis=-1)
        imageB = np.expand_dims(imageB, axis=-1)

        # add a batch dimension to both images
        imageA = np.expand_dims(imageA, axis=0)
        imageB = np.expand_dims(imageB, axis=0)

        # scale the pixel values to the range of [0, 1]
        imageA = imageA / 255.0
        imageB = imageB / 255.0

        # use our siamese model to make predictions on the image pair,
        # indicating whether or not the images belong to the same class
        preds = model.predict([imageA, imageB])
        proba = preds[0][0]

Line 29 starts a loop over all image pairs. For each image pair we:

- Load the two images from disk (Lines 31 and 32)

- Clone the two images such that we can draw/visualize them later (Lines 35 and 36)

- Add a channel dimension (Lines 39 and 40) along with a batch dimension (Lines 43 and 44)

- Scale the pixel intensities from the range [0, 255] to [0, 1], just like we did when training our siamese network last week (Lines 47 and 48)
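The preprocessing steps above can be sketched as a single helper so the shape changes are easy to follow. The function name preprocess is hypothetical, and a random 28x28 array (the MNIST digit size) stands in for an image loaded with cv2.imread.

```python
import numpy as np

def preprocess(image):
    # (H, W) -> (H, W, 1): add the channel dimension
    image = np.expand_dims(image, axis=-1)
    # (H, W, 1) -> (1, H, W, 1): add the batch dimension
    image = np.expand_dims(image, axis=0)
    # scale pixel intensities from [0, 255] to [0, 1]
    return image / 255.0

# a random array stands in for a grayscale digit read from disk
dummy = np.random.randint(0, 256, size=(28, 28), dtype=np.uint8)
batch = preprocess(dummy)
print(batch.shape, batch.dtype)
```

Wrapping the steps in one function is a design choice on my part; it keeps the treatment of imageA and imageB identical, which matters because both branches of the network expect the same input format.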

Once imageA and imageB are preprocessed, we compare them for similarity by making a call to the .predict method on our siamese network model (Line 52), resulting in the probability/similarity scores of the two images (Line 53).
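To see why the score is read with preds[0][0], the sketch below wraps the call in a helper and runs it against a stub. StubModel is a hypothetical stand-in for the loaded siamese network, and it assumes the output has shape (batch, 1), which is what the indexing in the script implies; score_pair is likewise a name I made up.

```python
import numpy as np

def score_pair(model, imageA, imageB):
    # the model receives a list of two batches and returns one row per pair
    preds = model.predict([imageA, imageB])
    # the batch holds one pair and each row holds one score, so take [0][0]
    return float(preds[0][0])

class StubModel:
    # hypothetical stand-in for the trained network; it always returns
    # a fixed score using the assumed (batch, 1) output shape
    def predict(self, inputs):
        batch_size = inputs[0].shape[0]
        return np.full((batch_size, 1), 0.93, dtype="float32")

imageA = np.zeros((1, 28, 28, 1))
imageB = np.zeros((1, 28, 28, 1))
score = score_pair(StubModel(), imageA, imageB)
print(round(score, 2))
```

With the real model loaded via load_model, the same helper could be called in place of the two lines that compute preds and proba.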

The final step is to display the image pair and corresponding similarity score to our screen:

        # initialize the figure
        fig = plt.figure("Pair #{}".format(i + 1), figsize=(4, 2))
        plt.suptitle("Similarity: {:.2f}".format(proba))

        # show first image
        ax = fig.add_subplot(1, 2, 1)
        plt.imshow(origA, cmap=plt.cm.gray)
        plt.axis("off")

        # show the second image
        ax = fig.add_subplot(1, 2, 2)
        plt.imshow(origB, cmap=plt.cm.gray)
        plt.axis("off")

        # show the plot
        plt.show()

Lines 56 and 57 create a matplotlib figure for the pair and display the similarity score as the title of the plot.

Lines 60-67 plot each of the images in the pair on the figure, while Line 70 displays the output to our screen.

Congrats on implementing siamese networks for image comparison and similarity! Let’s see the results of our hard work in the next section.

Image similarity results using siamese networks with Keras and TensorFlow

We are now ready to compare images for similarity using our siamese network!

Before we examine the results, make sure you:

- Have read our previous tutorial on training siamese networks so you understand how our siamese network model was trained and generated

- Use the “Downloads” section of this tutorial to download the source code, pre-trained siamese network, and example images

From there, open up a terminal, and execute the following command:

    $ python test_siamese_network.py --input examples
    [INFO] loading test dataset...
    [INFO] loading siamese model...

Note: Are you getting an error related to TypeError: ('Keyword argument not understood:', 'groups')? If so, keep in mind that the pre-trained model included in the “Downloads” section of this tutorial was trained using TensorFlow 2.3. You should therefore be using TensorFlow 2.3 when running test_siamese_network.py. If you instead prefer to use a different version of TensorFlow, simply run train_siamese_network.py to train the model and generate a new siamese_model serialized to disk. From there you’ll be able to run test_siamese_network.py without error.
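If you would like to check your installed version before loading the model, a comparison along these lines may help. This is a rough sketch: the helper name matches_training_version is hypothetical, and it only compares the major and minor components of a version string against the 2.3 release mentioned in the note.

```python
def matches_training_version(installed, trained=(2, 3)):
    # compare only the major and minor components, e.g. "2.3.1" -> (2, 3)
    major, minor = installed.split(".")[:2]
    return (int(major), int(minor)) == tuple(trained)

same_release = matches_training_version("2.3.1")
newer_release = matches_training_version("2.4.0")
print(same_release, newer_release)
```

In practice you would pass tf.__version__ (after import tensorflow as tf) as the installed argument, and retrain with train_siamese_network.py if the check fails.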

Figure 4 above displays a montage of our image similarity results.

For the first image pair, one contains a “7”, while the other contains a “1” — clearly these are not the same image, and the similarity score is low at 42%. Our siamese network has correctly marked these images as belonging to different classes.

The next image pair consists of two “0” digits. Our siamese network has predicted a very high similarity score of 97%, indicating that these two images belong to the same class.

You can see the same pattern for all other image pairs in Figure 4. Images that have a high similarity score belong to the same class, while image pairs with low similarity scores belong to different classes.

Since we used the sigmoid activation layer as the final layer in our siamese network (which has an output value in the range [0, 1]), a good rule of thumb is to use a similarity cutoff value of 0.5 (50%) as your threshold:

- If the two images in a pair have a similarity of <= 0.5, then they belong to different classes.

- Conversely, if pairs have a predicted similarity of > 0.5, then they belong to the same class.

You can use this rule of thumb in your own projects when using siamese networks to compute image similarity.

Summary

In this tutorial you learned how to compare two images for similarity and, more specifically, whether they belonged to the same or different classes. We accomplished this task using siamese networks along with the Keras and TensorFlow deep learning libraries.

This post is the final part in our three-part series introducing siamese networks. For easy reference, here are links to each guide in the series:

- Part #1: Building image pairs for siamese networks with Python

- Part #2: Training siamese networks with Keras, TensorFlow, and Deep Learning

- Part #3: Comparing images for similarity using siamese networks, Keras, and TensorFlow (this tutorial)

In the near future I’ll be covering more advanced series on siamese networks, including:

- Image triplets

- Contrastive loss

- Triplet loss

- Face recognition with siamese networks

- One-shot learning with siamese networks

Stay tuned for these tutorials; you don’t want to miss them!
