Inside this tutorial, you will learn how to utilize the OpenVINO toolkit with OpenCV for faster deep learning inference on the Raspberry Pi.

Raspberry Pis are great — I love the quality hardware and the supportive community built around the device.

That said, for deep learning, the current Raspberry Pi hardware is inherently resource-constrained and you’ll be lucky to get more than a few FPS (using the RPi CPU alone) out of most state-of-the-art models (especially object detection and instance/semantic segmentation).

We know from my previous posts that Intel’s Movidius Neural Compute Stick allows for faster inference with the deep learning coprocessor that you plug into the USB socket:

- Getting started with the Intel Movidius Neural Compute Stick

- Real-time object detection on the Raspberry Pi with the Movidius NCS

Since 2017, the Movidius team has been hard at work on their Myriad processors and their consumer-grade USB deep learning sticks.

The first version of the API that came with the sticks worked well and demonstrated the power of the Myriad, but left a lot to be desired.

Then, the Movidius APIv2 was released and welcomed by the Movidius + Raspberry Pi community. It was easier/more reliable than the APIv1 but had its fair share of issues as well.

But now, it’s become easier than ever to work with the Movidius NCS, especially with OpenCV.

Meet OpenVINO, an Intel library for hardware optimized computer vision designed to replace the V1 and V2 APIs.

Intel’s shift to support the Movidius hardware with OpenVINO software makes the Movidius shine in all of its metallic blue glory.

OpenVINO is extremely simple to use — just set the target processor (a single function call) and let OpenVINO-optimized OpenCV handle the rest.

But the question remains:

How can I install OpenVINO on the Raspberry Pi?

Today we’ll learn just that, along with a practical object detection demo (spoiler alert: it is dead simple to use the Movidius coprocessor now).

Update 2020-04-06: There are a number of updates in this tutorial to ensure compatibility with OpenVINO 4.2.0.

To learn how to install OpenVINO on the Raspberry Pi (and perform object detection with the Movidius Neural Compute Stick), just follow this tutorial!

OpenVINO, OpenCV, and Movidius NCS on the Raspberry Pi

In this blog post, we’re going to cover three main topics.

- First, we’ll learn what OpenVINO is and how it is a very welcome paradigm shift for the Raspberry Pi.

- We’ll then cover how to install OpenCV and OpenVINO on your Raspberry Pi.

- Finally, we’ll develop a real-time object detection script using OpenVINO, OpenCV, and the Movidius NCS.

Note: There are many Raspberry Pi install guides on my blog, most unrelated to Movidius. Before you begin, be sure to check out the available install tutorials on my OpenCV installation guides page and choose the one that best fits your needs.

Let’s get started.

What is OpenVINO?

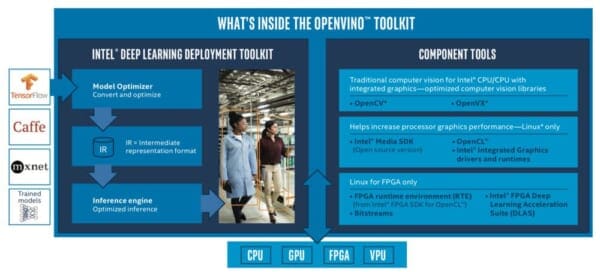

Intel’s OpenVINO is an acceleration library for optimized computing with Intel’s hardware portfolio.

OpenVINO supports Intel CPUs, GPUs, FPGAs, and VPUs.

Deep learning libraries you’ve come to rely upon such as TensorFlow, Caffe, and mxnet are supported by OpenVINO.

Intel has even optimized OpenCV’s DNN module to support its hardware for deep learning.

In fact, many newer smart cameras use Intel’s hardware along with the OpenVINO toolkit. OpenVINO is edge computing and IoT at its finest — it enables resource-constrained devices like the Raspberry Pi to work with the Movidius coprocessor to perform deep learning at speeds that are useful for real-world applications.

We’ll be installing OpenVINO on the Raspberry Pi so it can be used with the Movidius VPU (Vision Processing Unit) in the next section.

Be sure to read the OpenVINO product brief PDF for more information.

Installing OpenVINO’s optimized OpenCV on the Raspberry Pi

In this section, we’ll cover prerequisites and all steps required to install OpenCV and OpenVINO on your Raspberry Pi.

Be sure to read this entire section before you begin so that you are familiar with the steps required.

Let’s begin.

Hardware, assumptions, and prerequisites

In this tutorial, I am going to assume that you have the following hardware:

- Raspberry 4B or 3B+ (running Raspbian Buster)

- Movidius NCS 2 (or Movidius NCS 1)

- PiCamera V2 (or USB webcam)

- 32GB microSD card with Raspbian Stretch freshly flashed

- HDMI screen + keyboard/mouse (at least for the initial WiFi configuration)

- 5V power supply (I recommend a 2.5A supply because the Movidius NCS is a power hog)

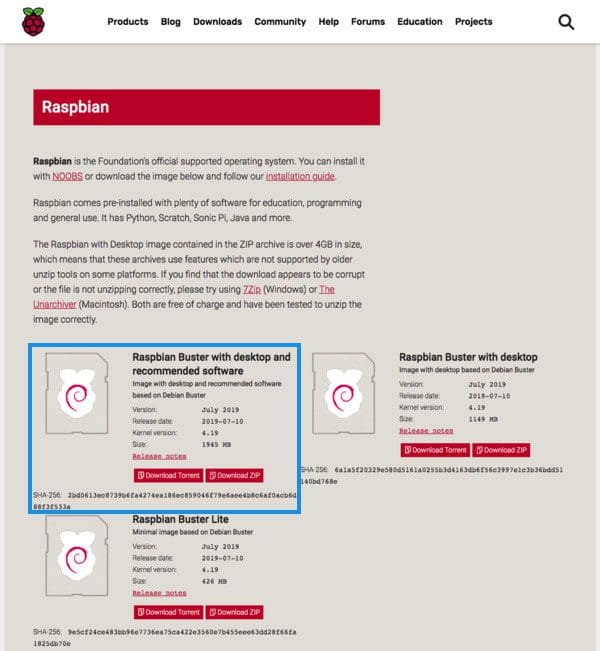

If you don’t have a microSD with a fresh burn of Raspbian Stretch, you may download it here. I recommend the full install:

From there, use balenaEtcher (or a suitable alternative) to flash the card.

Once you’re ready, insert the microSD card into your Raspberry Pi and boot it up.

Enter your WiFi credentials and enable SSH, VNC, and the camera interface.

From here you will need one of the following:

- Physical access to your Raspberry Pi so that you can open up a terminal and execute commands

- Remote access via SSH or VNC

I’ll be doing the majority of this tutorial via SSH, but as long as you have access to a terminal, you can easily follow along.

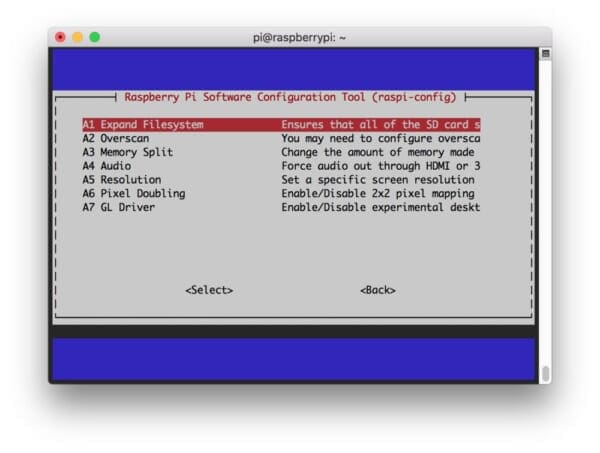

Can’t SSH? If you see your Pi on your network, but can’t ssh to it, you may need to enable SSH. This can easily be done via the Raspberry Pi desktop preferences menu or using the raspi-config command.

After you’ve changed the setting and rebooted, you can test SSH directly on the Pi with the localhost address.

Open a terminal and type ssh pi@127.0.0.1 to see if it is working. To SSH from another computer you’ll need the Pi’s IP address — you can determine the IP address by looking at your router’s clients page or by running ifconfig to determine the IP on/of the Pi itself.

Is your Raspberry Pi keyboard layout giving you problems? Change your keyboard layout by going to the Raspberry Pi desktop preferences menu. I use the standard US Keyboard layout, but you’ll want to select the one appropriate for you.

Step #1: Expand filesystem on your Raspberry Pi

To get the OpenVINO party started, fire up your Raspberry Pi and open an SSH connection (alternatively use the Raspbian desktop with a keyboard + mouse and launch a terminal).

If you’ve just flashed Raspbian Stretch, I always recommend that you first check to ensure your filesystem is using all available space on the microSD card.

To check your disk space usage execute the df -h command in your terminal and examine the output:

$ df -h Filesystem Size Used Avail Use% Mounted on /dev/root 30G 4.2G 24G 15% / devtmpfs 434M 0 434M 0% /dev tmpfs 438M 0 438M 0% /dev/shm tmpfs 438M 12M 427M 3% /run tmpfs 5.0M 4.0K 5.0M 1% /run/lock tmpfs 438M 0 438M 0% /sys/fs/cgroup /dev/mmcblk0p1 42M 21M 21M 51% /boot tmpfs 88M 0 88M 0% /run/user/1000

As you can see, my Raspbian filesystem has been automatically expanded to include all 32GB of the micro-SD card. This is denoted by the fact that the size is 30GB (nearly 32GB) and I have 24GB available (15% usage).

If you’re seeing that you aren’t using your entire memory card capacity, below you can find instructions on how to expand the filesystem.

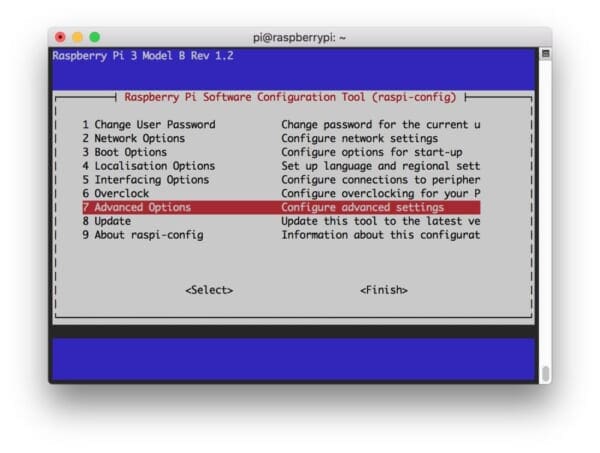

Open up the Raspberry Pi configuration in your terminal:

$ sudo raspi-config

And then select the “Advanced Options” menu item:

raspi-config menu to expand the Raspbian file system on your Raspberry Pi is important before installing OpenVINO and OpenCV. Next, we’ll actually expand the filesystem.Followed by selecting “Expand filesystem”:

Once prompted, you should select the first option, “A1. Expand File System”, hit Enter on your keyboard, arrow down to the “<Finish>” button, and then reboot your Pi — you will be prompted to reboot. Alternatively, you can reboot from the terminal:

$ sudo reboot

Be sure to run the df -h command again to check that your file system is expanded.

Step #2: Reclaim space on your Raspberry Pi

One simple way to gain more space on your Raspberry Pi is to delete both LibreOffice and Wolfram engine to free up some space on your Pi:

$ sudo apt-get purge wolfram-engine $ sudo apt-get purge libreoffice* $ sudo apt-get clean $ sudo apt-get autoremove

After removing the Wolfram Engine and LibreOffice, you can reclaim almost 1GB!

Step #3: Install OpenVINO + OpenCV dependencies on your Raspberry Pi

This step shows some dependencies which I install on every OpenCV system. While you’ll soon see that OpenVINO is already compiled, I recommend that you go ahead and install these packages anyway in case you end up compiling OpenCV from scratch at any time going forward.

Let’s update our system:

$ sudo apt-get update && sudo apt-get upgrade

And then install developer tools including CMake:

$ sudo apt-get install build-essential cmake unzip pkg-config

Next, it is time to install a selection of image and video libraries — these are key to being able to work with image and video files:

$ sudo apt-get install libjpeg-dev libpng-dev libtiff-dev $ sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev libv4l-dev $ sudo apt-get install libxvidcore-dev libx264-dev

From there, let’s install GTK, our GUI backend:

$ sudo apt-get install libgtk-3-dev

And now let’s install a package which may help to reduce GTK warnings:

$ sudo apt-get install libcanberra-gtk*

The asterisk ensures we will grab the ARM-specific GTK. It is required.

Now we need two packages which contain numerical optimizations for OpenCV:

$ sudo apt-get install libatlas-base-dev gfortran

And finally, let’s install the Python 3 development headers:

$ sudo apt-get install python3-dev

Once you have all of these prerequisites installed you can move on to the next step.

Step #4: Download and unpack OpenVINO for your Raspberry Pi

From here forward, our install instructions are largely based upon Intel’s Raspberry Pi OpenVINO guide. There are a few “gotchas” which is why I decided to write a guide. We’ll also use virtual environments as PyImageSearch readers have come to expect.

Our next step is to download OpenVINO.

Let’s navigate to our home folder and create a new directory

$ cd ~

From there, go ahead and grab the OpenVINO Toolkit via wget :

$ wget https://download.01.org/opencv/2020/openvinotoolkit/2020.1/l_openvino_toolkit_runtime_raspbian_p_2020.1.023.tgz

Update 2020-04-06: The Download URL has changed; the new URL is reflected.

Once you have successfully downloaded the OpenVINO toolkit, you can unarchive it using the following command:

$ tar -xf l_openvino_toolkit_runtime_raspbian_p_2020.1.023.tgz ... $ mv l_openvino_toolkit_runtime_raspbian_p_2020.1.023 openvino

Step #5: Configure OpenVINO on your Raspberry Pi

Let’s use nano to edit our ~/.bashrc . We will add a line to load OpenVINO’s setupvars.sh each time you invoke a Pi terminal. Go ahead and open the file:

$ nano ~/.bashrc

Scroll to the bottom and add the following lines:

# OpenVINO source ~/openvino/bin/setupvars.sh

Save and exit from the nano text editor.

Then, go ahead and source your ~/.bashrc file:

$ source ~/.bashrc

Step #6: Configure USB rules for your Movidius NCS and OpenVINO on Raspberry Pi

OpenVINO requires that we set custom USB rules. It is quite straightforward, so let’s get started.

First, enter the following command to add the current user to the Raspbian “users” group:

$ sudo usermod -a -G users "$(whoami)"

Then logout and log back in. If you’re on SSH, you can type exit and then re-establish your SSH connection. Rebooting is also an option via sudo reboot now .

Once you’re back at your terminal, run the following script to set the USB rules:

$ cd ~ $ sh openvino/install_dependencies/install_NCS_udev_rules.sh

Step #7: Create an OpenVINO virtual environment on Raspberry Pi

Let’s grab and install pip, a Python Package Manager.

To install pip, simply enter the following in your terminal:

$ wget https://bootstrap.pypa.io/get-pip.py $ sudo python3 get-pip.py

We’ll be making use of virtual environments for Python development with OpenCV and OpenVINO.

If you aren’t familiar with virtual environments, please take a moment look at this article on RealPython or read the first half of this blog post on PyImageSearch.

Virtual environments will allow you to run independent, sequestered Python environments in isolation on your system. Today we’ll be setting up just one environment, but you could easily have an environment for each project.

Let’s go ahead and install virtualenv and virtualenvwrapper now — they allow for Python virtual environments:

$ sudo pip install virtualenv virtualenvwrapper $ sudo rm -rf ~/get-pip.py ~/.cache/pip

To finish the install of these tools, we need to update our ~/.bashrc again:

$ nano ~/.bashrc

Then add the following lines:

# virtualenv and virtualenvwrapper export WORKON_HOME=$HOME/.virtualenvs export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3 source /usr/local/bin/virtualenvwrapper.sh VIRTUALENVWRAPPER_ENV_BIN_DIR=bin

~/.bashrc profile has been updated to accommodate OpenVINO and virtualenvwrapper. Now we’ll be able to create a virtual environment for Python packages.Alternatively, you can append the lines directly via bash commands:

$ echo -e "\n# virtualenv and virtualenvwrapper" >> ~/.bashrc $ echo "export WORKON_HOME=$HOME/.virtualenvs" >> ~/.bashrc $ echo "export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3" >> ~/.bashrc $ echo "source /usr/local/bin/virtualenvwrapper.sh" >> ~/.bashrc $ echo "VIRTUALENVWRAPPER_ENV_BIN_DIR=bin" >> ~/.bashrc

Next, source the ~/.bashrc profile:

$ source ~/.bashrc

Let’s now create a virtual environment to hold OpenVINO, OpenCV and related packages:

$ mkvirtualenv openvino -p python3

This command simply creates a Python 3 virtual environment named openvino .

You can (and should) name your environment(s) whatever you’d like — I like to keep them short and sweet while also providing enough information so I’ll remember what they are for.

Step #8: Install packages into your OpenVINO environment

Let’s install a handful of packages required for today’s demo script

$ workon openvino $ pip install numpy $ pip install "picamera[array]" $ pip install imutils

Now that we’ve installed these packages in the openvino virtual environment, they are only available in the openvino environment. This is your sequestered area to work on OpenVINO projects (we use Python virtual environments here so we don’t risk ruining your system install of Python).

Additional packages for Caffe, TensorFlow, and mxnet may be installed via requirements.txt files using pip. You can read more about it at this Intel documentation link. This is not required for today’s tutorial.

Step #9: Test your OpenVINO install on your Raspberry Pi

Let’s do a quick sanity test to see if OpenCV is ready to go before we try an OpenVINO example.

Open a terminal and perform the following:

$ workon openvino $ source ~/openvino/bin/setupvars.sh $ python >>> import cv2 >>> cv2.__version__ '4.2.0-openvino' >>> exit()

The first command activates our OpenVINO virtual environment. The second command sets up the Movidius NCS with OpenVINO and is very important. From there we fire up the Python 3 binary in the environment and import OpenCV.

The version of OpenCV indicates that it is an OpenVINO optimized install!

Recommended: Create a shell script for starting your OpenVINO environment

In this section, we’ll create a shell script just like the ones that come on my Pre-configured and Pre-installed Raspbian .img.

Open a new file named start_openvino.sh and place it in your ~/ directory. Insert the following lines:

#!/bin/bash echo "Starting Python 3.7 with OpenCV-OpenVINO 4.2.0 bindings..." source ~/openvino/bin/setupvars.sh workon openvino

Save and close the file.

From here on, you can activate your OpenVINO environment with one simple command (as opposed to two commands like in the previous step:

$ source ~/start_openvino.sh Starting Python 3.7 with OpenCV-OpenVINO 4.2.0 bindings...

Real-time object detection with Raspberry Pi and OpenVINO

Installing OpenVINO was pretty easy and didn’t even require a compile of OpenCV. The Intel team did a great job!

Now let’s put the Movidius Neural Compute Stick to work using OpenVINO.

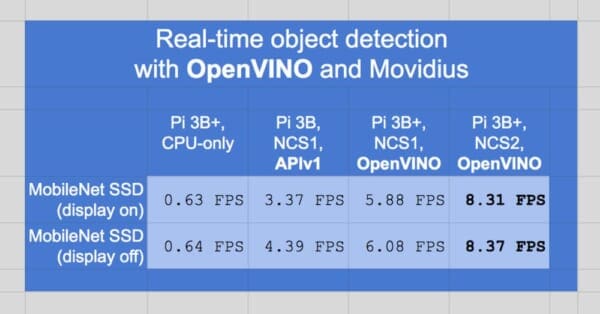

For comparison’s sake, we’ll run the MobileNet SSD object detector with and without the Movidius to benchmark our FPS. We’ll compare the values to previous results of using Movidius NCS APIv1 (the non-OpenVINO method that I wrote about in early 2018).

Let’s get started!

Project structure

Go ahead and grab the “Downloads” for today’s blog post.

Once you’ve extracted the zip, you can use the tree command to inspect the project directory:

$ tree . ├── MobileNetSSD_deploy.caffemodel ├── MobileNetSSD_deploy.prototxt ├── openvino_real_time_object_detection.py └── real_time_object_detection.py 0 directories, 3 files

Our MobileNet SSD object detector files include the .caffemodel and .prototxt.txt files. These are pretrained (we will not be training MobileNet SSD today).

We’re going to review the openvino_real_time_object_detection.py script and compare it to the original real-time object detection script (real_time_object_detection.py ).

Real-time object detection with OpenVINO, Movidius NCS, and Raspberry Pi

To demonstrate the power of OpenVINO on the Raspberry Pi with Movidius, we’re going to perform real-time deep learning object detection.

The Movidius/Myriad coprocessor will perform the actual deep learning inference, reducing the load on the Pi’s CPU.

We’ll still use the Raspberry Pi CPU to process the results and tell the Movidius what to do, but we’re reserving deep learning inference for the Myriad as its hardware is optimized and designed for deep learning inference.

As previously discussed in the “What is OpenVINO?” section, OpenVINO with OpenCV allows us to specify the processor for inference when using the OpenCV “DNN” module.

In fact, it only requires one line of code (typically) to use the Movidius NCS Myriad processor.

From there, the rest of the code is the same!

On the PyImageSearch blog I provide a detailed walkthrough of all Python scripts.

This is one of the few posts where I’ve decided to deviate from my typical format.

This post is first and foremost an install + configuration post. Therefore I’m going to skip over the details and instead demonstrate the power of OpenVINO by highlighting new lines of code inserted into a previous blog post (where all details are provided).

Please review that post if you want to get into the weeds with Real-time object detection with deep learning and OpenCV where I demonstrated the concept of using OpenCV’s DNN module in just 100 lines of code.

Today, we’re adding just one line of code that performs computation (and a comment + blank line). This brings the new total to 103 lines of code without using the previous complex Movidius APIv1 (215 lines of code).

If this is your first foray into OpenVINO, I think you’ll be just as astounded and pleased as I was when I learned how easy it is.

Let’s learn the changes necessary to accommodate OpenVINO’s API with OpenCV and Movidius.

Go ahead and open a file named openvino_real_time_object_detection.py and insert the following lines, paying close attention to Lines 33-35 (highlighted in yellow):

# import the necessary packages

from imutils.video import VideoStream

from imutils.video import FPS

import numpy as np

import argparse

import imutils

import time

import cv2

# construct the argument parse and parse the arguments

ap = argparse.ArgumentParser()

ap.add_argument("-p", "--prototxt", required=True,

help="path to Caffe 'deploy' prototxt file")

ap.add_argument("-m", "--model", required=True,

help="path to Caffe pre-trained model")

ap.add_argument("-c", "--confidence", type=float, default=0.2,

help="minimum probability to filter weak detections")

ap.add_argument("-u", "--movidius", type=bool, default=0,

help="boolean indicating if the Movidius should be used")

args = vars(ap.parse_args())

# initialize the list of class labels MobileNet SSD was trained to

# detect, then generate a set of bounding box colors for each class

CLASSES = ["background", "aeroplane", "bicycle", "bird", "boat",

"bottle", "bus", "car", "cat", "chair", "cow", "diningtable",

"dog", "horse", "motorbike", "person", "pottedplant", "sheep",

"sofa", "train", "tvmonitor"]

COLORS = np.random.uniform(0, 255, size=(len(CLASSES), 3))

# load our serialized model from disk

print("[INFO] loading model...")

net = cv2.dnn.readNetFromCaffe(args["prototxt"], args["model"])

# specify the target device as the Myriad processor on the NCS

net.setPreferableTarget(cv2.dnn.DNN_TARGET_MYRIAD)

# initialize the video stream, allow the cammera sensor to warmup,

# and initialize the FPS counter

print("[INFO] starting video stream...")

vs = VideoStream(usePiCamera=True).start()

time.sleep(2.0)

fps = FPS().start()

# loop over the frames from the video stream

while True:

# grab the frame from the threaded video stream and resize it

# to have a maximum width of 400 pixels

frame = vs.read()

frame = imutils.resize(frame, width=400)

# grab the frame dimensions and convert it to a blob

(h, w) = frame.shape[:2]

blob = cv2.dnn.blobFromImage(frame, 0.007843, (300, 300), 127.5)

# pass the blob through the network and obtain the detections and

# predictions

net.setInput(blob)

detections = net.forward()

# loop over the detections

for i in np.arange(0, detections.shape[2]):

# extract the confidence (i.e., probability) associated with

# the prediction

confidence = detections[0, 0, i, 2]

# filter out weak detections by ensuring the `confidence` is

# greater than the minimum confidence

if confidence > args["confidence"]:

# extract the index of the class label from the

# `detections`, then compute the (x, y)-coordinates of

# the bounding box for the object

idx = int(detections[0, 0, i, 1])

box = detections[0, 0, i, 3:7] * np.array([w, h, w, h])

(startX, startY, endX, endY) = box.astype("int")

# draw the prediction on the frame

label = "{}: {:.2f}%".format(CLASSES[idx],

confidence * 100)

cv2.rectangle(frame, (startX, startY), (endX, endY),

COLORS[idx], 2)

y = startY - 15 if startY - 15 > 15 else startY + 15

cv2.putText(frame, label, (startX, y),

cv2.FONT_HERSHEY_SIMPLEX, 0.5, COLORS[idx], 2)

# show the output frame

cv2.imshow("Frame", frame)

key = cv2.waitKey(1) & 0xFF

# if the `q` key was pressed, break from the loop

if key == ord("q"):

break

# update the FPS counter

fps.update()

# stop the timer and display FPS information

fps.stop()

print("[INFO] elasped time: {:.2f}".format(fps.elapsed()))

print("[INFO] approx. FPS: {:.2f}".format(fps.fps()))

# do a bit of cleanup

cv2.destroyAllWindows()

vs.stop()

Lines 33-35 (highlighted in yellow) are new. But only one of those lines is interesting.

On Line 35, we tell OpenCV’s DNN module to use the Myriad coprocessor using net.setPreferableTarget(cv2.dnn.DNN_TARGET_MYRIAD) .

The Myriad processor is built into the Movidius Neural Compute Stick. You can use this same method if you’re running OpenVINO + OpenCV on a device with an embedded Myriad chip (i.e. without the bulky USB stick).

For a detailed explanation on the code, be sure to refer to this post.

Also, be sure to refer to this Movidius APIv1 blog post from early 2018 where I demonstrated object detection using Movidius and the Raspberry Pi. It’s incredible that 215 lines of significantly more complicated code are required for the previous Movidius API, in comparison to 103 lines of much easier to follow code using OpenVINO.

I think those line number differences speak for themselves in terms of reduced complexity, time, and development cost savings, but what are the actual results? How fast is OpenVINO with Movidius?

Let’s find out in the next section.

OpenVINO object detection results

To run today’s script, first, you’ll need to grab the “Downloads” associated with this post.

From there, unpack the zip and navigate into the directory.

Activate your virtual environment using the recommended method above:

$ source ~/start_openvino.sh Starting Python 3.7 with OpenCV-OpenVINO 4.2.0 bindings...

To perform object detection with OpenVINO, just execute the following command:

$ python openvino_real_time_object_detection.py --prototxt MobileNetSSD_deploy.prototxt \ --model MobileNetSSD_deploy.caffemodel [INFO] loading model... [INFO] starting video stream... [INFO] elasped time: 55.35 [INFO] approx. FPS: 8.31

As you can see, we’re reaching 8.31FPS over approximately one minute.

I’ve gathered additional results using MobileNet SSD as shown in the table below:

OpenVINO and the Movidius NCS 2 are very fast, a huge speedup from previous versions.

It’s amazing that the results are > 8x in comparison to using only the RPi 3B+ CPU (no Movidius coprocessor).

The two rightmost columns (light blue columns 3 and 4) show the OpenVINO comparison between the NCS1 and the NCS2.

Note that the 2nd column statistic is with the RPi 3B (not the 3B+). It was taken in February 2018 using the previous API and previous RPi hardware.

So, what’s next?

I’ve written a new book to maximize computer vision + deep learning capability on resource-constrained devices such as the Raspberry Pi single board computer (SBC).

Inside, you’ll learn and develop your skills using techniques that I’ve amassed through my years of working with computer vision on the Raspberry Pi, Intel Movidius NCS, Google Coral EdgeTPU, NVIDIA Jetson Nano, and more.

The book covers over 40 projects (including 60+ chapters) on embedded Computer Vision and Deep Learning.

A handful of the highlighted projects include:

- Traffic counting and vehicle speed detection

- Real-time face recognition

- Building a classroom attendance system

- Automatic hand gesture recognition

- Daytime and nighttime wildlife monitoring

- Security applications

- Deep Learning classification, object detection, and human pose estimation on resource-constrained devices

- … and much more!

As a bonus, included are pre-configured Raspbian .img files (for the Raspberry Pi 4B/3B+/3B and Raspberry Pi Zero W) and pre-configured Jetson Nano .img files (for the NVIDIA Jetson Nano A02/B01) so you can skip the tedious installation headaches and get to the fun part (code and deployment).

If you’re just as excited as I am, grab the free table of contents by clicking here:

Troubleshooting and Frequently Asked Questions (FAQ)

Did you encounter an error installing OpenCV and OpenVINO on your Raspberry Pi?

Don’t become frustrated.

The first time you install the software on your Raspberry Pi it can be very frustrating. The last thing I want for you to do is give up!

Here are some common question and answers — be sure to read them and see if they apply to you.

Q. How do I flash an operating system on to my Raspberry Pi memory card?

A. I recommend that you:

- Grab a 32GB memory card. The SanDisk 32GB 98MB/s microSD cards work really well and are what I recommend.

- Flash Raspbian Stretch with Etcher to the card. Etcher is supported by most major operating systems.

- Insert the card into your Raspberry Pi and begin with the “Assumptions” and “Step 1” sections in this blog post.

Q. Can I use Python 2.7?

A. Python 2.7 reached sunset on January 1st, 2020. I would not advise using it.

Q. Why can’t I just apt-get install OpenCV and have OpenVINO support?

A. Avoid this “solution” at all costs even though it might work. First, this method likely won’t install OpenVINO until it is more popular. Secondly, apt-get doesn’t play nice with virtual environments and you won’t have control over your compile and build.

Q. The mkvirtualenv and workon commands yield a “command not found error”. I’m not sure what to do next.

A. There a number of reasons why you would be seeing this error message, all of come from to Step #4:

- First, ensure you have installed

virtualenvandvirtualenvwrapperproperly using thepippackage manager. Verify by runningpip freezeand ensure that you see bothvirtualenvandvirtualenvwrapperare in the list of installed packages. - Your

~/.bashrcfile may have mistakes. Examine the contents of your~/.bashrcfile to see the properexportandsourcecommands are present (check Step #4 for the commands that should be appended to~/.bashrc). - You might have forgotten to

sourceyour~/.bashrc. Make sure you runsource ~/.bashrcafter editing it to ensure you have access to themkvirtualenvandworkoncommands.

Q. When I open a new terminal, logout, or reboot my Raspberry Pi, I cannot execute the mkvirtualenv or workon commands.

A. If you’re on the Raspbian desktop, this will likely occur. The default profile that is loaded when you launch a terminal, for some reason, doesn’t source the ~/.bashrc file. Please refer to #2 from the previous question. Over SSH, you probably won’t run into this.

Q. When I try to import OpenCV, I encounter this message: Import Error: No module named cv2 .

A. There are several reasons this could be happening and unfortunately, it is hard to diagnose. I recommend the following suggestions to help diagnose and resolve the error:

- Ensure your

openvinovirtual environment is active by using theworkon openvinoandsource setupvars.shcommands. If this command gives you an error, then verify thatvirtualenvandvirtualenvwrapperare properly installed. - Try investigating the contents of the

site-packagesdirectory in youropenvinovirtual environment. You can find thesite-packagesdirectory in~/.virtualenvs/openvino/lib/python3.5/site-packages/. Ensure (1) there is acv2sym-link directory in thesite-packagesdirectory and (2) it’s properly sym-linked. - Be sure to

findthecv2*.sofile as demonstrated in Step #6.

Q. What if my question isn’t listed here?

A. Please leave a comment below or send me an email. If you post a comment below, just be aware that code doesn’t format well in the comment form and I may have to respond to you via email instead.

Looking for more free OpenVINO content?

I have a number of Intel Movidius / OpenVINO blog posts for your enjoyment here on PyImageSearch.

What's next? We recommend PyImageSearch University.

86+ total classes • 115+ hours hours of on-demand code walkthrough videos • Last updated: February 2026

★★★★★ 4.84 (128 Ratings) • 16,000+ Students Enrolled

I strongly believe that if you had the right teacher you could master computer vision and deep learning.

Do you think learning computer vision and deep learning has to be time-consuming, overwhelming, and complicated? Or has to involve complex mathematics and equations? Or requires a degree in computer science?

That’s not the case.

All you need to master computer vision and deep learning is for someone to explain things to you in simple, intuitive terms. And that’s exactly what I do. My mission is to change education and how complex Artificial Intelligence topics are taught.

If you're serious about learning computer vision, your next stop should be PyImageSearch University, the most comprehensive computer vision, deep learning, and OpenCV course online today. Here you’ll learn how to successfully and confidently apply computer vision to your work, research, and projects. Join me in computer vision mastery.

Inside PyImageSearch University you'll find:

- ✓ 86+ courses on essential computer vision, deep learning, and OpenCV topics

- ✓ 86 Certificates of Completion

- ✓ 115+ hours hours of on-demand video

- ✓ Brand new courses released regularly, ensuring you can keep up with state-of-the-art techniques

- ✓ Pre-configured Jupyter Notebooks in Google Colab

- ✓ Run all code examples in your web browser — works on Windows, macOS, and Linux (no dev environment configuration required!)

- ✓ Access to centralized code repos for all 540+ tutorials on PyImageSearch

- ✓ Easy one-click downloads for code, datasets, pre-trained models, etc.

- ✓ Access on mobile, laptop, desktop, etc.

Summary

Today we learned about Intel’s OpenVINO toolkit and how it can be used to improve deep learning inference speed on the Raspberry Pi.

You also learned how to install the OpenVINO toolkit, including the OpenVINO-optimized version of OpenCV on the Raspberry Pi.

We then ran a simple MobileNet SSD deep learning object detection model. It only required one line of code to set the target device to the Myriad processor on the Movidius stick.

We also demonstrated that the Movidius NCS + OpenVINO is quite fast, dramatically outperforming object detection speed on the Raspberry Pi’s CPU.

And if you’re interested in learning more about how to build real-world computer vision + deep learning projects on the Raspberry Pi, be sure to check out my new book, Raspberry Pi for Computer Vision.

To download the source code to this post (and be notified when future tutorials are published here on PyImageSearch), just drop your email in the form below!

Download the Source Code and FREE 17-page Resource Guide

Enter your email address below to get a .zip of the code and a FREE 17-page Resource Guide on Computer Vision, OpenCV, and Deep Learning. Inside you'll find my hand-picked tutorials, books, courses, and libraries to help you master CV and DL!