In last week’s blog post we got our feet wet by implementing a simple object tracking algorithm called “centroid tracking”.

Today, we are going to take the next step and look at eight separate object tracking algorithms built right into OpenCV!

You see, while our centroid tracker worked well, it required us to run an actual object detector on each frame of the input video. For the vast majority of circumstances, having to run the detection phase on each and every frame is undesirable and potentially computationally limiting.

Instead, we would like to apply object detection only once and then have the object tracker be able to handle every subsequent frame, leading to a faster, more efficient object tracking pipeline.

The question is — can OpenCV help us achieve such object tracking?

The answer is undoubtedly a resounding “Yes”.

To learn how to apply object tracking using OpenCV’s built-in object trackers, just keep reading.

OpenCV Object Tracking

In the first part of today’s blog post, we are going to briefly review the eight object tracking algorithms built into OpenCV.

From there I’ll demonstrate how we can use each of these object trackers in real-time.

Finally, we’ll review the results of each of OpenCV’s object trackers, noting which ones worked under what situations and which ones didn’t.

Let’s go ahead and get started tracking objects with OpenCV!

8 OpenCV Object Tracking Implementations

You might be surprised to know that OpenCV includes eight (yes, eight!) separate object tracking implementations that you can use in your own computer vision applications.

I’ve included a brief highlight of each object tracker below:

- BOOSTING Tracker: Based on the same algorithm used to power the machine learning behind Haar cascades (AdaBoost), but like Haar cascades, is over a decade old. This tracker is slow and doesn’t work very well. Interesting only for legacy reasons and as a baseline for comparing other algorithms. (minimum OpenCV 3.0.0)

- MIL Tracker: Better accuracy than BOOSTING tracker but does a poor job of reporting failure. (minimum OpenCV 3.0.0)

- KCF Tracker: Kernelized Correlation Filters. Faster than BOOSTING and MIL. Similar to BOOSTING and MIL, does not handle full occlusion well. (minimum OpenCV 3.1.0)

- CSRT Tracker: Discriminative Correlation Filter (with Channel and Spatial Reliability). Tends to be more accurate than KCF but slightly slower. (minimum OpenCV 3.4.2)

- MedianFlow Tracker: Does a nice job reporting failures; however, if there is too large of a jump in motion, such as fast moving objects, or objects that change quickly in their appearance, the model will fail. (minimum OpenCV 3.0.0)

- TLD Tracker: I’m not sure if there is a problem with the OpenCV implementation of the TLD tracker or the actual algorithm itself, but the TLD tracker was incredibly prone to false-positives. I do not recommend using this OpenCV object tracker. (minimum OpenCV 3.0.0)

- MOSSE Tracker: Very, very fast. Not as accurate as CSRT or KCF but a good choice if you need pure speed. (minimum OpenCV 3.4.1)

- GOTURN Tracker: The only deep learning-based object tracker included in OpenCV. It requires additional model files to run (will not be covered in this post). In my initial experiments it was a bit of a pain to use, and although it reportedly handles viewing changes well, those experiments didn’t confirm this. I’ll try to cover it in a future post, but in the meantime, take a look at Satya’s writeup. (minimum OpenCV 3.2.0)

My personal suggestion is to:

- Use CSRT when you need higher object tracking accuracy and can tolerate slower FPS throughput

- Use KCF when you need faster FPS throughput but can handle slightly lower object tracking accuracy

- Use MOSSE when you need pure speed

Satya Mallick also provides some additional information on these object trackers in his article.

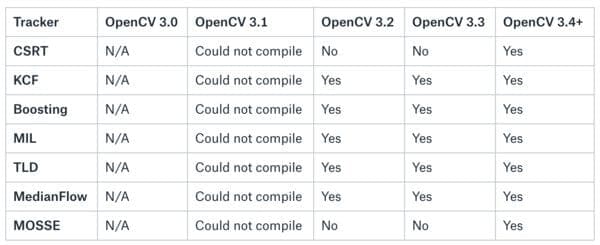

Object Trackers have been in active development in OpenCV 3. Here is a brief summary of which versions of OpenCV the trackers appear in:

Note: Despite following the instructions in this issue on GitHub and turning off precompiled headers, I was not able to get OpenCV 3.1 to compile.
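The minimum versions quoted in the tracker list above can be written down as a small lookup table. The following is only a sketch: `MIN_OPENCV_VERSION`, `parse_version`, and `trackers_available_in` are hypothetical names introduced for illustration, and the table models nothing beyond the minimum 3.x versions stated in this post (it does not know about later reorganizations of the library).

```python
# Minimum OpenCV version for each tracker, copied from the list above.
MIN_OPENCV_VERSION = {
    "boosting": (3, 0, 0),
    "mil": (3, 0, 0),
    "kcf": (3, 1, 0),
    "csrt": (3, 4, 2),
    "medianflow": (3, 0, 0),
    "tld": (3, 0, 0),
    "mosse": (3, 4, 1),
    "goturn": (3, 2, 0),
}


def parse_version(version):
    # "3.4.2" -> (3, 4, 2); a suffix such as "-dev" is dropped first
    parts = version.split("-")[0].split(".")[:3]
    return tuple(int(p) for p in parts)


def trackers_available_in(version):
    # keep every tracker whose minimum version is not newer than `version`
    installed = parse_version(version)
    return sorted(
        name
        for name, minimum in MIN_OPENCV_VERSION.items()
        if installed >= minimum
    )


print(trackers_available_in("3.2.0"))
```

In a real script you would pass `cv2.__version__` instead of a hard-coded string.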

Now that you’ve had a brief overview of each of the object trackers, let’s get down to business!

Object Tracking with OpenCV

To perform object tracking using OpenCV, open up a new file, name it opencv_object_tracking.py , and insert the following code:

# import the necessary packages
from imutils.video import VideoStream
from imutils.video import FPS
import argparse
import imutils
import time
import cv2

We begin by importing our required packages. Ensure that you have OpenCV installed (I recommend OpenCV 3.4+) and that you have imutils installed:

$ pip install --upgrade imutils

Now that our packages are imported, let’s parse two command line arguments:

# construct the argument parser and parse the arguments
ap = argparse.ArgumentParser()
ap.add_argument("-v", "--video", type=str,
    help="path to input video file")
ap.add_argument("-t", "--tracker", type=str, default="kcf",
    help="OpenCV object tracker type")
args = vars(ap.parse_args())

Our command line arguments include:

- --video: The optional path to the input video file. If this argument is left off, then the script will use your webcam.

- --tracker: The OpenCV object tracker to use. By default, it is set to kcf (Kernelized Correlation Filters). For a full list of possible tracker code strings refer to the next code block or to the section below, “Object Tracking Results”.
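To see how the two arguments behave, here is a small sketch that parses explicit argument lists instead of reading the real command line. The `choices` restriction and the `build_parser` helper are additions for illustration and are not part of the script in this post.

```python
import argparse

# tracker code strings accepted by the script in this post
TRACKER_NAMES = ["csrt", "kcf", "boosting", "mil", "tld", "medianflow", "mosse"]


def build_parser():
    ap = argparse.ArgumentParser()
    ap.add_argument("-v", "--video", type=str,
                    help="path to input video file")
    # `choices` (an assumption, not in the original) rejects unknown names early
    ap.add_argument("-t", "--tracker", type=str, default="kcf",
                    choices=TRACKER_NAMES,
                    help="OpenCV object tracker type")
    return ap


# no arguments: the video path is None (webcam) and the tracker defaults to kcf
defaults = vars(build_parser().parse_args([]))
print(defaults)

# both arguments supplied explicitly
explicit = vars(build_parser().parse_args(
    ["--video", "dashcam_boston.mp4", "--tracker", "csrt"]))
print(explicit)
```

Because `video` is `None` when the flag is omitted, a check such as `args.get("video", False)` is falsy in that case, which is what lets the script fall back to the webcam.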

Let’s handle the different types of trackers:

# extract the OpenCV version info
(major, minor) = cv2.__version__.split(".")[:2]

# if we are using OpenCV 3.2 OR BEFORE, we can use a special factory
# function to create our object tracker
if int(major) == 3 and int(minor) < 3:
    tracker = cv2.Tracker_create(args["tracker"].upper())

# otherwise, for OpenCV 3.3 OR NEWER, we need to explicitly call the
# appropriate object tracker constructor:
else:
    # initialize a dictionary that maps strings to their corresponding
    # OpenCV object tracker implementations
    OPENCV_OBJECT_TRACKERS = {
        "csrt": cv2.TrackerCSRT_create,
        "kcf": cv2.TrackerKCF_create,
        "boosting": cv2.TrackerBoosting_create,
        "mil": cv2.TrackerMIL_create,
        "tld": cv2.TrackerTLD_create,
        "medianflow": cv2.TrackerMedianFlow_create,
        "mosse": cv2.TrackerMOSSE_create
    }

    # grab the appropriate object tracker using our dictionary of
    # OpenCV object tracker objects
    tracker = OPENCV_OBJECT_TRACKERS[args["tracker"]]()

# initialize the bounding box coordinates of the object we are going
# to track
initBB = None

As denoted in Figure 2, not all trackers are present in each minor version of OpenCV 3+.

There’s also an implementation change that occurs at OpenCV 3.3. Prior to OpenCV 3.3, tracker objects must be created by calling cv2.Tracker_create and passing an uppercase string of the tracker name (Lines 22 and 23).
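The version test itself is just string splitting and integer comparison. As a sketch, it can be pulled into a tiny function and checked against a few version strings; `uses_legacy_factory` is a hypothetical name, not something OpenCV provides.

```python
def uses_legacy_factory(version):
    # same logic as the script: only 3.0, 3.1 and 3.2 use cv2.Tracker_create
    (major, minor) = version.split(".")[:2]
    return int(major) == 3 and int(minor) < 3


for version in ["3.0.0", "3.2.0", "3.3.0", "3.4.2"]:
    print(version, uses_legacy_factory(version))
```

In the script, the argument would be `cv2.__version__`.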

For OpenCV 3.3+, each tracker can be created with its own respective function call such as cv2.TrackerKCF_create . The dictionary, OPENCV_OBJECT_TRACKERS , contains seven of the eight built-in OpenCV object trackers (Lines 30-38). It maps the object tracker command line argument string (key) to the actual OpenCV object tracker function (value).

On Line 42 the tracker object is instantiated based on the command line argument for the tracker and the associated key from OPENCV_OBJECT_TRACKERS .

Note: I am purposely leaving GOTURN out of the set of usable object trackers as it requires additional model files.
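One caveat for readers on newer installs: as far as I am aware, OpenCV 4.5.1 and later contrib builds expose several of these constructors under a `cv2.legacy` submodule rather than at the top level, so a direct attribute lookup can fail. The sketch below is a defensive variant under that assumption. `create_tracker` and `CONSTRUCTOR_NAMES` are hypothetical names, and the module is passed in as a parameter so the lookup logic can be exercised with a stand-in object.

```python
from types import SimpleNamespace

# constructor names used by the dictionary in this post
CONSTRUCTOR_NAMES = {
    "csrt": "TrackerCSRT_create",
    "kcf": "TrackerKCF_create",
    "boosting": "TrackerBoosting_create",
    "mil": "TrackerMIL_create",
    "tld": "TrackerTLD_create",
    "medianflow": "TrackerMedianFlow_create",
    "mosse": "TrackerMOSSE_create",
}


def create_tracker(cv2_module, name):
    # translate the command line string into a constructor name
    try:
        ctor_name = CONSTRUCTOR_NAMES[name]
    except KeyError:
        raise ValueError("unknown tracker: {}".format(name)) from None

    # look at the top level first, then at `legacy` if it exists
    legacy = getattr(cv2_module, "legacy", None)
    for namespace in (cv2_module, legacy):
        ctor = getattr(namespace, ctor_name, None)
        if callable(ctor):
            return ctor()

    raise RuntimeError("{} is not available in this build".format(ctor_name))


# stand-in for the real module, used only to demonstrate the lookup order
fake_cv2 = SimpleNamespace(
    TrackerKCF_create=lambda: "kcf tracker",
    legacy=SimpleNamespace(TrackerMOSSE_create=lambda: "mosse tracker"),
)
print(create_tracker(fake_cv2, "kcf"))
print(create_tracker(fake_cv2, "mosse"))
```

With the real library you would call `create_tracker(cv2, args["tracker"])`. Whether a given constructor exists still depends on how your OpenCV was built.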

We also initialize initBB to None (Line 46). This variable will hold the bounding box coordinates of the object that we select with the mouse.

Next, let’s initialize our video stream and FPS counter:

# if a video path was not supplied, grab the reference to the web cam
if not args.get("video", False):
    print("[INFO] starting video stream...")
    vs = VideoStream(src=0).start()
    time.sleep(1.0)

# otherwise, grab a reference to the video file
else:
    vs = cv2.VideoCapture(args["video"])

# initialize the FPS throughput estimator
fps = None

Lines 49-52 handle the case in which we are accessing our webcam. Here we initialize the webcam video stream with a one-second pause for the camera sensor to “warm up”.

Otherwise, the --video command line argument was provided so we’ll initialize a video stream from a video file (Lines 55 and 56).

Let’s begin looping over frames from the video stream:

# loop over frames from the video stream
while True:
    # grab the current frame, then handle if we are using a
    # VideoStream or VideoCapture object
    frame = vs.read()
    frame = frame[1] if args.get("video", False) else frame

    # check to see if we have reached the end of the stream
    if frame is None:
        break

    # resize the frame (so we can process it faster) and grab the
    # frame dimensions
    frame = imutils.resize(frame, width=500)
    (H, W) = frame.shape[:2]

We grab a frame on Lines 65 and 66 as well as handle the case where there are no frames left in a video file on Lines 69 and 70.
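The reason for the `frame[1]` indexing is that the two sources return different things from `read()`: `cv2.VideoCapture.read()` returns a `(grabbed, frame)` tuple, while the imutils `VideoStream.read()` returns the frame directly. A minimal sketch of that normalization, using stand-in classes (`FakeCapture` and `FakeStream` are hypothetical and exist only so the example runs without a camera or video file):

```python
def read_frame(stream, from_file):
    # VideoCapture-style sources return (grabbed, frame); take the frame
    result = stream.read()
    return result[1] if from_file else result


class FakeCapture:
    """Mimics a video file reader: yields frames, then (False, None)."""

    def __init__(self, frames):
        self._frames = list(frames)

    def read(self):
        if self._frames:
            return (True, self._frames.pop(0))
        return (False, None)


class FakeStream:
    """Mimics a webcam stream: read() returns the frame itself."""

    def read(self):
        return "webcam-frame"


capture = FakeCapture(["frame-0", "frame-1"])
file_frames = [read_frame(capture, True) for _ in range(3)]
print(file_frames)
print(read_frame(FakeStream(), False))
```

The third read from the file source comes back as `None`, which is the condition the loop uses to stop.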

In order for our object tracking algorithms to process the frame faster, we resize the input frame to a width of 500 pixels (Line 74). The less data there is to process, the faster our object tracking pipeline will run.
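To get a feel for how much data the resize removes, the arithmetic can be sketched without OpenCV. This assumes the resize preserves the aspect ratio and truncates the new height to an integer, which is my understanding of how `imutils.resize` behaves when only a width is given; `resized_shape` is a hypothetical helper.

```python
def resized_shape(height, width, target_width=500):
    # scale factor that maps the original width onto the target width
    ratio = target_width / float(width)
    return (int(height * ratio), target_width)


for (h, w) in [(720, 1280), (1080, 1920), (480, 640)]:
    (new_h, new_w) = resized_shape(h, w)
    # fraction of the original pixel count that remains after resizing
    kept = (new_h * new_w) / float(h * w)
    print((h, w), "->", (new_h, new_w), "keeps {:.1%} of the pixels".format(kept))
```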

We then grab the width and height of the frame as we’ll need the height later (Line 75).

Now let’s handle the case where an object has already been selected:

    # check to see if we are currently tracking an object
    if initBB is not None:
        # grab the new bounding box coordinates of the object
        (success, box) = tracker.update(frame)

        # check to see if the tracking was a success
        if success:
            (x, y, w, h) = [int(v) for v in box]
            cv2.rectangle(frame, (x, y), (x + w, y + h),
                (0, 255, 0), 2)

        # update the FPS counter
        fps.update()
        fps.stop()

        # initialize the set of information we'll be displaying on
        # the frame
        info = [
            ("Tracker", args["tracker"]),
            ("Success", "Yes" if success else "No"),
            ("FPS", "{:.2f}".format(fps.fps())),
        ]

        # loop over the info tuples and draw them on our frame
        for (i, (k, v)) in enumerate(info):
            text = "{}: {}".format(k, v)
            cv2.putText(frame, text, (10, H - ((i * 20) + 20)),
                cv2.FONT_HERSHEY_SIMPLEX, 0.6, (0, 0, 255), 2)

If an object has been selected, we need to update the location of the object. To do so, we call the update method on Line 80 passing only the frame to the function. The update method will locate the object’s new position and return a success boolean and the bounding box of the object.

If successful, we draw the new, updated bounding box location on the frame (Lines 83-86). Keep in mind that trackers can lose objects and report failure so the success boolean may not always be True .
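The bounding box comes back as `(x, y, width, height)`, possibly with floating point values, while `cv2.rectangle` wants two integer corner points. A small sketch of that conversion (`box_to_corners` is a hypothetical helper, not an OpenCV function):

```python
def box_to_corners(box):
    # truncate to integers, as the script does with int(v)
    (x, y, w, h) = [int(v) for v in box]
    # top-left corner and bottom-right corner
    return ((x, y), (x + w, y + h))


print(box_to_corners((10.7, 20.2, 30.9, 40.5)))
```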

Our FPS throughput estimator is updated on Lines 89 and 90.
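Calling `stop()` on every frame may look odd, but it simply refreshes the end timestamp so that `fps()` reports frames processed divided by time elapsed so far. The sketch below shows that idea with a stand-in class. It is not the imutils implementation, just a hypothetical `SimpleFPS` that mirrors the `start`/`update`/`stop`/`fps` calls used in the script.

```python
import time


class SimpleFPS:
    def __init__(self):
        self._start = None
        self._end = None
        self._frames = 0

    def start(self):
        # record the start time and return self so calls can be chained
        self._start = time.perf_counter()
        return self

    def update(self):
        # one more frame has been processed
        self._frames += 1

    def stop(self):
        # refresh the end timestamp; safe to call on every frame
        self._end = time.perf_counter()

    def fps(self):
        elapsed = self._end - self._start
        return self._frames / elapsed if elapsed > 0 else 0.0


counter = SimpleFPS().start()
for _ in range(5):
    time.sleep(0.01)  # pretend to process a frame
    counter.update()
    counter.stop()
print("{:.2f}".format(counter.fps()))
```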

On Lines 94-98 we construct a list of textual information to display on the frame . Subsequently, we draw the information on the frame on Lines 101-104.
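The expression `H - ((i * 20) + 20)` stacks the text lines upward from the bottom edge of the frame, 20 pixels apart. A quick sketch of the positions it produces (`overlay_positions` is a hypothetical helper; the frame height of 281 is just an example value):

```python
def overlay_positions(frame_height, count, x=10, line_height=20):
    # item 0 sits closest to the bottom edge; later items are drawn above it
    return [
        (x, frame_height - ((i * line_height) + line_height))
        for i in range(count)
    ]


print(overlay_positions(281, 3))
```

So the first tuple in `info` ("Tracker") ends up on the lowest line and the last one ("FPS") on the highest.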

From there, let’s show the frame and handle selecting an object with the mouse:

    # show the output frame
    cv2.imshow("Frame", frame)
    key = cv2.waitKey(1) & 0xFF

    # if the 's' key is selected, we are going to "select" a bounding
    # box to track
    if key == ord("s"):
        # select the bounding box of the object we want to track (make
        # sure you press ENTER or SPACE after selecting the ROI)
        initBB = cv2.selectROI("Frame", frame, fromCenter=False,
            showCrosshair=True)

        # start OpenCV object tracker using the supplied bounding box
        # coordinates, then start the FPS throughput estimator as well
        tracker.init(frame, initBB)
        fps = FPS().start()

We’ll display the frame on every iteration and keep looping, checking on each pass whether a key was pressed.

When the “s” key is pressed, we’ll “select” an object ROI using cv2.selectROI . This function allows you to manually select an ROI with your mouse while the video stream is frozen on the frame.

The user must draw the bounding box and then press “ENTER” or “SPACE” to confirm the selection. If you need to reselect the region, simply press “ESCAPE”.

Using the bounding box info reported from the selection function, our tracker is initialized on Line 120. We also initialize our FPS counter on the subsequent Line 121.
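The script passes whatever the selection returns straight to the tracker. If the selection is abandoned, my understanding is that the function can hand back a rectangle with zero width and height, which is not a meaningful region to track. A hedged sketch of a guard you might add before initializing the tracker (`is_valid_roi` is a hypothetical helper):

```python
def is_valid_roi(box):
    # a usable region needs a positive width and height
    (x, y, w, h) = box
    return w > 0 and h > 0


print(is_valid_roi((120, 80, 60, 40)))
print(is_valid_roi((0, 0, 0, 0)))
```

In the script this would wrap the initialization, for example only calling `tracker.init(frame, initBB)` when `is_valid_roi(initBB)` is true and resetting `initBB` to `None` otherwise.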

Of course, we could also use an actual object detector in place of manual selection here.

Last week’s blog post already showed you how to combine a face detector with object tracking. For other objects, I would suggest referring to this blog post on real-time deep learning object detection to get you started. In a future blog post in this object tracking series, I’ll be showing you how to combine both the object detection and object tracking phase into a single script.

Lastly, let’s handle if the “q” key (for “quit”) is pressed or if there are no more frames in the video, thus exiting our loop:

    # if the `q` key was pressed, break from the loop
    elif key == ord("q"):
        break

# if we are using a webcam, release the pointer
if not args.get("video", False):
    vs.stop()

# otherwise, release the file pointer
else:
    vs.release()

# close all windows
cv2.destroyAllWindows()

This last block simply handles the case where we have broken out of the loop. All pointers are released and windows are closed.

Object Tracking Results

In order to follow along and apply object tracking using OpenCV to the videos in this blog post, make sure you use the “Downloads” section to grab the code + videos.

From there, open up a terminal and execute the following command:

$ python opencv_object_tracking.py --video dashcam_boston.mp4 --tracker csrt

Be sure to edit the two command line arguments as desired: --video and --tracker .

If you have downloaded the source code + videos associated with this tutorial, the available arguments for --video include the following video files:

- american_pharoah.mp4

- dashcam_boston.mp4

- drone.mp4

- nascar_01.mp4

- nascar_02.mp4

- race.mp4

- …and feel free to experiment with your own videos or other videos you find online!

The available arguments for --tracker include:

- csrt

- kcf

- boosting

- mil

- tld

- medianflow

- mosse

Refer to the section, “8 OpenCV Object Tracking Implementations” above for more information about each tracker.

You can also use your computer’s webcam — simply leave off the video file argument:

$ python opencv_object_tracking.py --tracker csrt

In the following example video I have demonstrated how OpenCV’s object trackers can be used to track an object for an extended amount of time (i.e., an entire horse race) versus just short clips:

Video and Audio Credits

To create the examples for this blog post I needed to use clips from a number of different videos.

A massive “thank you” to Billy Higdon (Dashcam Boston), The New York Racing Association, Inc. (American Pharaoh), Tom Wadsworth (Multirotor UAV Tracking Cars for Aerial Filming), NASCAR (Danica Patrick leads NASCAR lap), GERrevolt (Usain Bolt 9.58 100m New World Record Berlin), Erich Schall, and Soularflair.

Summary

In today’s blog post you learned how to utilize OpenCV for object tracking. Specifically, we reviewed the eight object tracking algorithms (as of OpenCV 3.4) included in the OpenCV library:

- CSRT

- KCF

- Boosting

- MIL

- TLD

- MedianFlow

- MOSSE

- GOTURN

I recommend using either CSRT, KCF, or MOSSE for most object tracking applications:

- Use CSRT when you need higher object tracking accuracy and can tolerate slower FPS throughput

- Use KCF when you need faster FPS throughput but can handle slightly lower object tracking accuracy

- Use MOSSE when you need pure speed

From there, we applied OpenCV’s object trackers (all but GOTURN) to various tasks, including sprinting, horse racing, auto racing, drone/UAV tracking, and dash cams for vehicles.

In next week’s blog post you’ll learn how to apply multi-object tracking using a special, built-in (but mostly unknown) OpenCV function.
