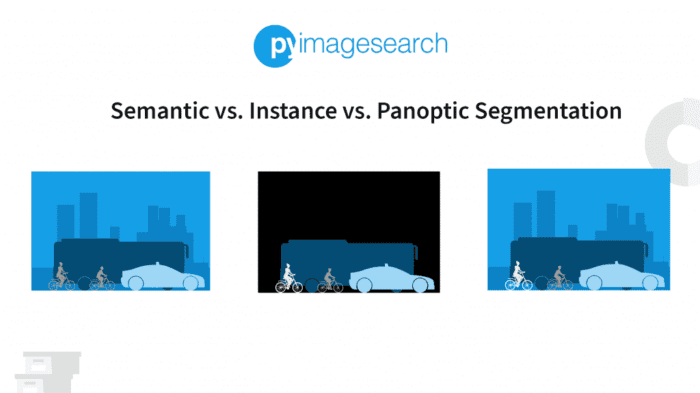

Semantic vs. Instance vs. Panoptic Segmentation

In this tutorial, you will learn about Semantic vs. Instance vs. Panoptic Segmentation.

How Do These Image Segmentation Techniques Differ?

Kirillov et al. (2018) presented a new type of image segmentation technique known as panoptic segmentation, which sparked a debate over which of the three image segmentation techniques (i.e., semantic vs. instance vs. panoptic) is best.

Before we delve into this debate, it is important to understand the fundamentals of image segmentation, including the comparison between things and stuff.

Image segmentation is a computer vision and image processing technique that involves grouping or labeling similar regions or segments in an image on a pixel level. A class label or a mask represents each segment of pixels.

In image segmentation, an image has two main components: things and stuff. Things correspond to countable objects in an image (e.g., people, flowers, birds, and animals). In comparison, stuff represents amorphous regions (or repeating patterns) of similar material, which are uncountable (e.g., road, sky, and grass).

In this lesson, we’ll differentiate semantic vs. instance vs. panoptic image segmentation techniques based on how they treat things and stuff.

Let’s begin!

The Difference

The difference between semantic vs. instance vs. panoptic segmentation lies in how they process the things and stuff in the image.

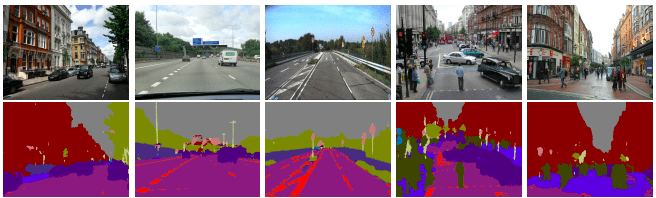

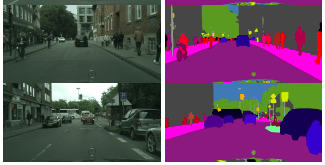

Semantic segmentation is the task most commonly used to study the uncountable stuff in an image. It analyzes each image pixel and assigns it a class label based on the category it represents; pixels that belong to things are labeled too, but only by class. For example, in Figure 1, an image contains two cars, three pedestrians, a road, and the sky. The two cars share one category, as do the three pedestrians.

Semantic segmentation would assign one class label to each of these categories. However, its output cannot differentiate or count the two cars or the three pedestrians separately. Commonly used semantic segmentation techniques include SegNet, U-Net, DeconvNet, and FCNs.
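To make the limitation concrete, here is a minimal sketch of what a semantic segmentation output looks like: a 2D array with one class ID per pixel. The class IDs, the tiny 4x6 scene (a scaled-down version of the example above), and the helper `pixels_per_class` are all hypothetical and chosen only for illustration.

```python
import numpy as np

# Hypothetical class IDs for a scaled-down version of the example scene.
CLASSES = {0: "sky", 1: "road", 2: "car", 3: "pedestrian"}

# A tiny 4x6 semantic label map: exactly one class ID per pixel.
# Row 2 contains two separate cars (columns 0-1 and 3-4) and one pedestrian.
semantic_map = np.array([
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [2, 2, 1, 2, 2, 3],
    [1, 1, 1, 1, 1, 1],
])

def pixels_per_class(label_map):
    """Return {class_name: pixel_count} for every class present in the map."""
    ids, counts = np.unique(label_map, return_counts=True)
    return {CLASSES[int(i)]: int(c) for i, c in zip(ids, counts)}

summary = pixels_per_class(semantic_map)
print(summary)
```

The map tells us how many pixels are labeled `car`, but both cars share the ID `2`, so nothing in this representation says how many cars there are.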

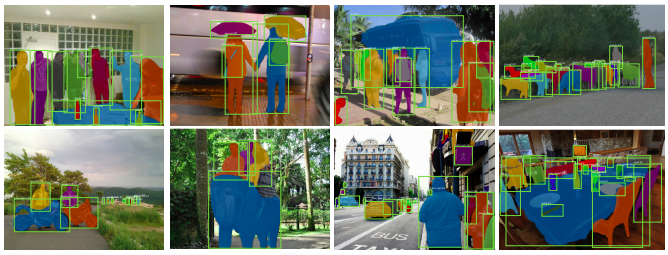

Instance segmentation typically deals with tasks related to countable things. It detects each object or instance of a class present in an image and assigns it a different mask or bounding box with a unique identifier.

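For example, instance segmentation would identify the two cars in the previous example separately as, let’s say, car_1 and car_2. Commonly used instance segmentation techniques are Mask R-CNN, PANet, and YOLACT. (Faster R-CNN, which is often listed alongside them, is the object detector that Mask R-CNN extends with a mask branch; on its own it predicts only bounding boxes.) Figure 2 demonstrates different instance segmentation detections.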
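As a rough sketch of the idea, an instance segmentation result can be represented as a list of per-object records, each with its own binary mask and identifier. The record layout and the helpers `make_mask` and `count_instances` are assumptions made for this illustration, not the output format of any particular model.

```python
import numpy as np

def make_mask(shape, rows, cols):
    """Build a boolean mask that is True inside the given row/column slices."""
    mask = np.zeros(shape, dtype=bool)
    mask[rows, cols] = True
    return mask

shape = (4, 6)

# Each detected object gets its own mask, class name, and instance ID.
instances = [
    {"class": "car", "id": 1, "mask": make_mask(shape, slice(2, 3), slice(0, 2))},
    {"class": "car", "id": 2, "mask": make_mask(shape, slice(2, 3), slice(3, 5))},
    {"class": "pedestrian", "id": 1, "mask": make_mask(shape, slice(2, 3), slice(5, 6))},
]

def count_instances(instances):
    """Count how many separate objects were found for each class."""
    counts = {}
    for inst in instances:
        counts[inst["class"]] = counts.get(inst["class"], 0) + 1
    return counts

counts = count_instances(instances)

# Pixels not covered by any instance mask (sky, road) receive no label at all.
labeled = np.zeros(shape, dtype=bool)
for inst in instances:
    labeled |= inst["mask"]
unlabeled = int((~labeled).sum())

print(counts, unlabeled)
```

Here the two cars can be counted separately, but the background pixels (the stuff) are simply left unlabeled.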

The goal of both semantic and instance segmentation techniques is to process a scene coherently. Naturally, we want to identify both stuff and things in a scene to build more practical real-world applications. Researchers devised a solution to reconcile both stuff and things within a scene (i.e., panoptic segmentation).

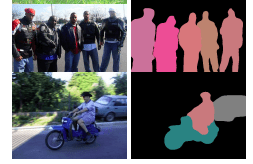

Panoptic segmentation is the best of both worlds. It presents a unified image segmentation approach where each pixel in a scene is assigned a semantic label (due to semantic segmentation) and a unique instance identifier (due to instance segmentation).

Panoptic segmentation assigns each pixel exactly one pair of a semantic label and an instance identifier. However, the predicted segments can claim overlapping pixels. In this case, panoptic segmentation resolves the discrepancy by favoring the object instance, as the priority is to identify each thing rather than stuff. Figure 3 demonstrates different panoptic segmentation detections.
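The merging rule can be sketched in a few lines. This is a simplified illustration, not the procedure of any specific model: the encoding `class_id * OFFSET + instance_id`, the class IDs, and the helpers `merge_panoptic` and `row_mask` are assumptions made here. Stuff pixels keep instance ID 0, and thing instances are pasted on top so that an instance wins wherever it overlaps stuff.

```python
import numpy as np

OFFSET = 1000  # illustrative encoding: panoptic_id = class_id * OFFSET + instance_id

def merge_panoptic(semantic_map, instances):
    """Combine a semantic label map with instance masks into one panoptic map.

    instances is a list of (class_id, instance_id, mask) tuples. Instances
    overwrite the semantic label underneath them; when two instances overlap,
    the one listed first keeps the contested pixels.
    """
    panoptic = semantic_map.astype(np.int64) * OFFSET
    taken = np.zeros(semantic_map.shape, dtype=bool)
    for class_id, instance_id, mask in instances:
        free = mask & ~taken
        panoptic[free] = class_id * OFFSET + instance_id
        taken |= free
    return panoptic

def row_mask(shape, row, col_start, col_stop):
    """Boolean mask covering columns [col_start, col_stop) of a single row."""
    mask = np.zeros(shape, dtype=bool)
    mask[row, col_start:col_stop] = True
    return mask

# Hypothetical class IDs: 0 sky, 1 road, 2 car, 3 pedestrian.
semantic_map = np.array([
    [0, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0],
    [2, 2, 1, 2, 2, 3],
    [1, 1, 1, 1, 1, 1],
])
shape = semantic_map.shape

instances = [
    (2, 1, row_mask(shape, 2, 0, 3)),  # car 1; its mask spills one pixel onto the road
    (2, 2, row_mask(shape, 2, 3, 5)),  # car 2
    (3, 1, row_mask(shape, 2, 5, 6)),  # pedestrian 1
]

panoptic = merge_panoptic(semantic_map, instances)

# Decode the single map back into its two components.
class_ids = panoptic // OFFSET
instance_ids = panoptic % OFFSET
print(panoptic)
```

The pixel at row 2, column 2 was labeled road by the semantic map but is claimed by car 1, so it ends up as part of the car. A production pipeline would also need to decide what to do with thing-class pixels that no instance covers, which this sketch leaves with instance ID 0.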

Many panoptic segmentation models build on the Mask R-CNN method. Panoptic architectures include UPSNet, FPSNet, EPSNet, and VPSNet.

Evaluation Metrics

Each segmentation technique uses different evaluation metrics to assess the predicted masks or identifiers in a scene. That is because stuff and things are processed differently.

Semantic segmentation normally employs the Intersection over Union (IoU) metric (also referred to as the Jaccard Index), which checks the similarity between the predicted and ground truth masks. It measures the area where the two masks overlap relative to the area they cover together. Besides IoU, we can also use the Dice coefficient, pixel accuracy, and mean accuracy metrics to perform a more robust evaluation. These metrics do not consider object-level labels.
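A minimal sketch of three of these metrics for a single binary mask follows. The function names are chosen for this example, and returning 1.0 when both masks are empty is a convention assumed here; other implementations handle that case differently.

```python
import numpy as np

def iou(pred, target):
    """Intersection over Union (Jaccard Index) of two boolean masks."""
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0  # assumed convention: two empty masks count as a perfect match
    return float(np.logical_and(pred, target).sum() / union)

def dice(pred, target):
    """Dice coefficient: twice the overlap divided by the sum of both mask areas."""
    total = pred.sum() + target.sum()
    if total == 0:
        return 1.0  # same assumed convention as above
    return float(2 * np.logical_and(pred, target).sum() / total)

def pixel_accuracy(pred, target):
    """Fraction of pixels where prediction and ground truth agree."""
    return float((pred == target).mean())

# Ground truth: a 2x2 square. Prediction: the same square plus one extra column.
target = np.zeros((4, 4), dtype=bool)
target[1:3, 1:3] = True
pred = np.zeros((4, 4), dtype=bool)
pred[1:3, 1:4] = True

iou_score = iou(pred, target)             # 4 shared pixels / 6 pixels in the union
dice_score = dice(pred, target)           # 2 * 4 / (6 + 4)
accuracy = pixel_accuracy(pred, target)   # 14 of 16 pixels agree
print(iou_score, dice_score, accuracy)
```

Note how pixel accuracy looks more forgiving than IoU on the same prediction, because the large background region that both masks agree on inflates it.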

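Instance segmentation, on the other hand, uses Average Precision (AP) as the standard evaluation metric. AP computes the IoU between each predicted mask and the ground truth masks, counts a prediction as correct when that IoU clears a threshold, and then summarizes precision across recall levels for each class.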
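The sketch below shows a deliberately simplified version of AP for one class in one image at a single IoU threshold. It is an assumption-laden illustration: benchmark implementations such as COCO's average over several IoU thresholds (0.50 to 0.95) and use interpolated precision, neither of which is done here. The helper names and the 1-D strips standing in for 2-D masks are made up for the example.

```python
import numpy as np

def mask_iou(a, b):
    """IoU of two boolean masks; 0.0 if both are empty."""
    union = np.logical_or(a, b).sum()
    return float(np.logical_and(a, b).sum() / union) if union else 0.0

def average_precision(pred_masks, scores, gt_masks, iou_thresh=0.5):
    """Simplified, non-interpolated AP for one class at one IoU threshold."""
    if not gt_masks:
        return 0.0
    order = np.argsort(scores)[::-1]  # process the most confident predictions first
    matched = set()
    true_positives = 0
    precision_sum = 0.0
    for rank, idx in enumerate(order, start=1):
        # Find the best ground-truth instance that has not been matched yet.
        best_iou, best_gt = 0.0, None
        for g, gt in enumerate(gt_masks):
            if g in matched:
                continue
            value = mask_iou(pred_masks[idx], gt)
            if value > best_iou:
                best_iou, best_gt = value, g
        if best_gt is not None and best_iou >= iou_thresh:
            matched.add(best_gt)
            true_positives += 1
            precision_sum += true_positives / rank  # precision at this recall step
    return precision_sum / len(gt_masks)

def span(start, stop, length=10):
    """1-D boolean strip used as a stand-in for a 2-D mask."""
    mask = np.zeros(length, dtype=bool)
    mask[start:stop] = True
    return mask

gt_masks = [span(0, 4), span(6, 10)]
pred_masks = [span(0, 4), span(4, 6), span(7, 10)]
scores = [0.9, 0.8, 0.6]

ap_50 = average_precision(pred_masks, scores, gt_masks, iou_thresh=0.5)
ap_80 = average_precision(pred_masks, scores, gt_masks, iou_thresh=0.8)
print(ap_50, ap_80)
```

The third prediction overlaps its ground-truth object with an IoU of 0.75, so it counts as a hit at the 0.5 threshold but as a miss at 0.8, which is why the score drops when the threshold is raised.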

Finally, panoptic segmentation uses the Panoptic Quality (PQ) metric, which evaluates the predicted masks and instance identifiers for both things and stuff. PQ unifies evaluation over all classes by multiplying segmentation quality (SQ) and recognition quality (RQ) terms. SQ represents the average IoU score of the matched segments, while RQ is the F1 score calculated using the precision and recall values of the predicted masks.
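The PQ computation for a single class can be sketched as follows, assuming the matching step has already been done. In the formulation by Kirillov et al., a predicted segment matches a ground truth segment when their IoU exceeds 0.5. The function name and its inputs (a list of IoUs for matched pairs plus counts of unmatched predictions and unmatched ground truth segments) are choices made for this illustration.

```python
def panoptic_quality(matched_ious, num_false_positives, num_false_negatives):
    """Return (PQ, SQ, RQ) for one class.

    matched_ious: IoU of every matched (true positive) segment pair.
    num_false_positives: predicted segments with no ground-truth match.
    num_false_negatives: ground-truth segments with no predicted match.
    """
    true_positives = len(matched_ious)
    denominator = true_positives + 0.5 * num_false_positives + 0.5 * num_false_negatives
    if denominator == 0:
        return 0.0, 0.0, 0.0  # nothing predicted and nothing to find
    # SQ: average IoU over matched segments.
    sq = sum(matched_ious) / true_positives if true_positives else 0.0
    # RQ: F1 score written in terms of TP, FP, and FN counts.
    rq = true_positives / denominator
    return sq * rq, sq, rq

# Three matched segments, one spurious prediction, one missed object.
pq, sq, rq = panoptic_quality([0.9, 0.8, 0.7], num_false_positives=1, num_false_negatives=1)
print(pq, sq, rq)
```

In this example SQ is 0.8 and RQ is 0.75, giving a PQ of 0.6. The overall score reported on a benchmark is typically the average of the per-class PQ values.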

Real-World Applications

All three image segmentation techniques have overlapping applications in computer vision and image processing. Some real-world applications for semantic and instance segmentation include:

- Autonomous vehicles or self-driving cars: 3D semantic segmentation allows vehicles to better understand their environment by identifying different objects on the street. At the same time, instance segmentation identifies each object instance to provide greater depth for calculating speed and distance.

- Analyzing medical scans: Both techniques identify tumors and other abnormalities in MRI, CT, and X-ray scans.

- Satellite or aerial imagery: Both techniques offer a method to map the world from space or an altitude. They can outline world objects like rivers, oceans, roads, agricultural fields, buildings, etc. This is similar to their application in scene understanding.

Panoptic segmentation takes visual perception in autonomous vehicles to the next level. It produces fine-grained masks with pixel-level accuracy, allowing self-driving cars to make more accurate driving decisions. Additionally, panoptic segmentation is finding more applications in medical image analysis, data annotation, data augmentation, UAV (unmanned aerial vehicle) remote sensing, video surveillance, and crowd counting. In each of these domains, it labels every pixel while still separating individual objects, which neither semantic nor instance segmentation does on its own.

Summary: Which One Should I Use?

Image segmentation is an important part of the AI revolution. It is the core component of autonomous applications across various industries (e.g., manufacturing, retail, healthcare, and transportation).

Historically, image segmentation was ineffective on a large scale due to hardware limitations. Today, with GPUs, cloud TPUs, and edge computing, image segmentation applications are accessible to general consumers.

In this lesson, we discussed semantic vs. instance vs. panoptic image segmentation techniques. All three techniques have valid applications in academia and the real world. In the last few years, panoptic segmentation has drawn growing interest from researchers working to advance the field of computer vision. In contrast, semantic segmentation and instance segmentation have numerous real-world applications because their algorithms are more mature. In any form, image segmentation is essential to advancing hyperautomation across industries.

Reference

Shiledarbaxi, N. “Semantic vs Instance vs Panoptic: Which Image Segmentation Technique to Choose,” Developers Corner, 2021.
